Artificial Intelligence

Building Blocks for GenAI

We tailor architecture to application for increased performance, power and cost efficiency.

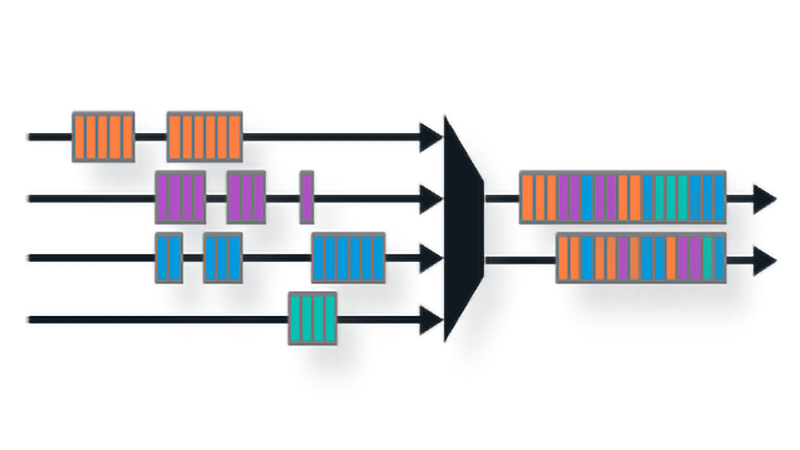

CPUs

- Compute solution that is optimized for Data Movement

- Using MIPS Multi-threading and a Low Latency Coherence Architecture, data movement energy efficiency improves by 20-30%

- Scalar compute backbone for GP-GPUs, near-memory compute, and Programmable Multiheaded CXL Memory Architectures

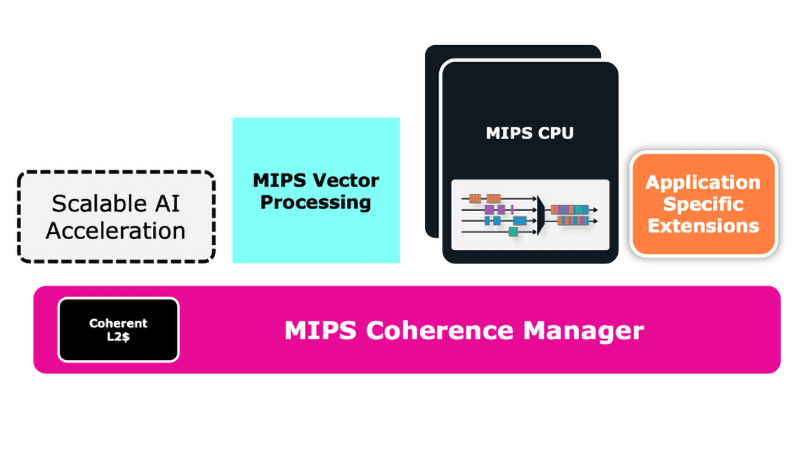

APUs

- Application-specific compute, as opposed to general-purpose compute, enables solutions that scale to meet modern compute needs

- MIPS Application Processing Units can scale from small SoCs for real-time data processing, through Chiplets, to larger Scale-Out designs

- Application-specific extensions reduce complexity and cost in Automotive and Embedded solutions

APU: Processing for AI Application Workload

Application Workload End-to-End

Data Collection

Transport

Compute for Training

Storage

Compute for Inferencing

Application Optimization, AI

Design and Innovate with MIPS Today

We look forward to meeting you at our upcoming events!