There’s an interesting thread on Github about the tongue-twistingly named beforeinstallpromt JavaScript event.

Let me back up…

Progressive web apps. You know what they are, right? They’re websites that have taken their vitamins. Specifically, they’re responsive websites that:

- are served over HTTPS,

- have a web app manifest, and

- have a service worker handling the offline scenario.

The web app manifest—a JSON file of metadata—is particularly useful for describing how your site should behave if someone adds it to their home screen. You can specify what icon should be used. You can specify whether the site should launch in a browser or as a standalone app (practically indistinguishable from a native app). You can specify which URL on the site should be used as the starting point when the site is launched from the home screen.

So progressive web apps work just fine when you visit them in a browser, but they really shine when you add them to your home screen. It seems like pretty much everyone is in agreement that adding a progressive web app to your home screen shouldn’t be an onerous task. But how does the browser let the user know that it might be a good idea to “install” the web site they’re looking at?

The Samsung Internet browser does ambient badging—a + symbol shows up to indicate that a website can be installed. This is a great approach!

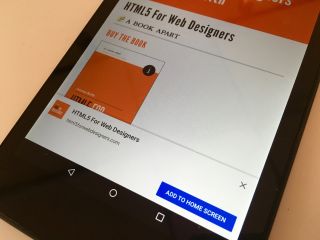

I hope that Chrome on Android will also use ambient badging at some point. To start with though, Chrome notified users that a site was installable by popping up a notification at the bottom of the screen. I think these might be called “toasts”.

Needless to say, the toast notification wasn’t very effective. That’s because we web designers and developers have spent years teaching people to immediately dismiss those notifications without even reading them. Accept our cookies! Sign up to our newsletter! Install our native app! Just about anything that’s user-hostile gets put in a notification (either a toast or an overlay) and shoved straight in the user’s face before they’ve even had time to start reading the content they came for in the first place. Users will then either:

- turn around and leave, or

- use muscle memory reach for that

X in the corner of the notification.

A tiny fraction of users might actually click on the call to action, possibly by mistake.

Chrome didn’t abandon the toast notification for progressive web apps, but it did change when they would appear. Rather than the browser deciding when to show the prompt—usually when the user has just arrived on the site—a new JavaScript event called beforeinstallprompt can be used.

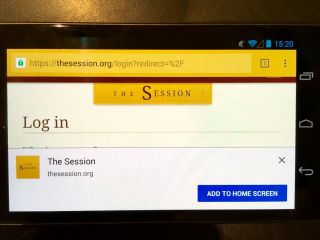

It’s a bit weird though. You have to “capture” the event that fires when the prompt would have normally been shown, subdue it, hold on to that event, and then re-release it when you think it should be shown (like when the user has completed a transaction, for example, and having your site on the home screen would genuinely be useful). That’s a lot of hoops. Here’s the code I use on The Session to only show the installation prompt to users who are logged in.

The end result is that the user is still shown a toast notification, but at least this time it’s the site owner who has decided when it will be shown. The Chrome team call this notification “the mini-info bar”, and Pete acknowledges that it’s not ideal:

The mini-infobar is an interim experience for Chrome on Android as we work towards creating a consistent experience across all platforms that includes an install button into the omnibox.

I think “an install button in the omnibox” means ambient badging in the browser interface, which would be great!

Anyway, back to that thread on Github. Basically, neither Apple nor Mozilla are going to implement the beforeinstallprompt event (well, technically Mozilla have implemented it but they’re not going to ship it). That’s fair enough. It’s an interim solution that’s not ideal for all reasons I’ve already covered.

But there’s a lot of pushback. Even if the details of beforeinstallprompt are troublesome, surely there should be some way for site owners to let users know that can—or should—install a progressive web app? As a site owner, I have a lot of sympathy for that viewpoint. But I also understand the security and usability issues that can arise from bad actors abusing this mechanism.

Still, I have to hand it to Chrome: even if we put the beforeinstallprompt event to one side, the browser still has a mechanism for letting users know that a progressive web app can be installed—the mini info bar. It’s not a great mechanism, but it’s better than nothing. Nothing is precisely what Firefox and Safari currently offer (though Firefox is experimenting with something).

In the case of Safari, not only do they not provide a mechanism for letting the user know that a site can be installed, but since the last iOS update, they’ve buried the “add to home screen” option even deeper in the “sharing sheet” (the list of options that comes up when you press the incomprehensible rectangle-with-arrow-emerging-from-it icon). You now have to scroll below the fold just to find the “add to home screen” option.

So while I totally get the misgivings about beforeinstallprompt, I feel that a constructive alternative wouldn’t go amiss.

And that’s all I have to say about that.

Except… there’s another interesting angle to that Github thread. There’s talk of allowing sites that are launched from the home screen to have access to more features than a site inside a web browser. Usually permissions on the web are explicitly granted or denied on a case-by-case basis: geolocation; notifications; camera access, etc. I think this is the first time I’ve heard of one action—adding to the home screen—being used as a proxy for implicitly granting more access. Very interesting. Although that idea seems to be roundly rejected here:

A key argument for using installation in this manner is that some APIs are simply so powerful that the drive-by web should not be able to ask for them. However, this document takes the position that installation alone as a restriction is undesirable.

Then again:

I understand that Chromium or Google may hold such a position but Apple’s WebKit team may not necessarily agree with such a position.