Want to dive deeper? Check out our infrastructure related blog posts.

The post How GitHub engineers tackle platform problems appeared first on The GitHub Blog.

In my spare time I enjoy building Gundam models, which are model kits to build iconic mechas from the Gundam universe. You might be wondering what this has to do with software engineering. Product engineers can be seen as the engineers who take these kits and build the Gundam itself. They are able to utilize all pieces and build a working product that is fun to collect or even play with!

Platform engineers, on the other hand, supply the tools needed to build these kits (like clippers and files) and maybe even build a cool display so everyone can see the final product. They ensure that whoever is constructing it has all the necessary tools, even if they don’t physically build the Gundam themselves.

About a year ago, my team at GitHub moved to the infrastructure organization, inheriting new roles and Areas of Responsibility (AoRs). Previously, the team had tackled external customer problems, such as building the new deployment views across environments. This involved interacting with users who depend on GitHub to address challenges within their respective industries. Our new customers as a platform engineering team are internal, which makes our responsibilities different from the product-focused engineering work we were doing before.

Going back to my Gundam example, rather than constructing kits, we’re now responsible for building the components of the kits. Adapting to this change meant I had to rethink my approach to code testing and problem solving.

Whether you’re working on product engineering or on the platform side, here are a few best practices to tackle platform problems.

Understanding your domain

One of the most critical steps before tackling problems is understanding the domain. A “domain” is the business and technical subject area in which a team and platform organization operate. This requires gaining an understanding of technical terms and how these systems interact to provide fast and reliable solutions. Here’s how to get up to speed:

- Talk to your neighbors: Arrange a handover meeting with a team that has more knowledge and experience with the subject matter. This meeting provides an opportunity to ask questions about terminology and gain a deeper understanding of the problems the team will be addressing.

- Investigate old issues: If there is a backlog of issues that are either stale or still persistent, they may give you a better understanding of the system’s current limitations and potential areas for improvement.

- Read the docs: Documentation is a goldmine of knowledge that can help you understand how the system works.

Bridging concepts to platform-specific skills

While the preceding advice offers general guidance applicable to both product and platform teams, platform teams — serving as the foundational layer — necessitate a more in-depth understanding.

- Networks: Understanding network fundamentals is crucial for all engineers, even those not directly involved in network operations. This includes concepts like TCP, UDP, and L4 load balancing, as well as debugging tools such as dig. A solid grasp of these areas is essential to comprehend how network traffic impacts your platform.

- Operating systems and hardware: Selecting appropriate virtual machines (VMs) or physical hardware is vital for both scalability and cost management. Making well-informed choices for particular applications requires a strong grasp of both. This is closely linked to choosing the right operating system for your machines, which is important to avoid systems with vulnerabilities or those nearing end of life.

- Infrastructure as Code (IaC): Automation tools like Terraform, Ansible, and Consul are becoming increasingly essential. Proficiency in these tools is becoming a necessity as they significantly decrease human error during infrastructure provisioning and modifications.

- Distributed systems: Dealing with platform issues, particularly in distributed systems, necessitates a deep understanding that failures are inevitable. Consequently, employing proactive solutions like failover and recovery mechanisms is crucial for preserving system reliability and preventing adverse user experiences. The optimal approach for this depends entirely on the specific problem and the desired system behavior.

Knowledge sharing

By sharing lessons and ideas, engineers can introduce new perspectives that lead to breakthroughs and innovations. Taking the time to understand why a project or solution did or didn’t work and sharing those findings provides new perspectives that we can use going forward.

Here are three reasons why knowledge sharing is so important:

- Teamwork makes the dream work: Collaboration often results in quicker problem resolution and fosters new solution innovation, as engineers have the opportunity to learn from each other and expand upon existing ideas.

- Prevent lost knowledge: If we don’t share our lessons learned, we prevent the information from being disseminated across the team or organization. This becomes a problem if an engineer leaves the company or is simply unavailable.

- Improve our customer success: As engineers, our solutions should effectively serve our customers. By sharing our knowledge and lessons learned, we can help the team build reliable, scalable, and secure platforms, which will enable us to create better products that meet customer needs and expectations!

But big differences start to appear between product engineering and infrastructure engineering when it comes to the impact radius and the testing process.

Impact radius

With platforms being the fundamental building blocks of a system, any change (small or large) can affect a wide range of products. Our team is responsible for DNS, a foundational service that impacts numerous products. Even a minor alteration to this service can have extensive repercussions, potentially disrupting access to content across our site and affecting products ranging from GitHub Pages to GitHub Copilot.

- Understand the radius: Or understand the downstream dependencies. Direct communication with teams that depend on our service provides valuable insights into how proposed changes may affect other services.

- Postmortems: By looking at past incidents related to our platform and asking “What is the impact of this incident?”, we can form more context around what change or failure was introduced, how our platform played a role in it, and how it was fixed.

- Monitoring and telemetry: Condense important monitoring and logging into a small and quickly digestible medium to give you the general health of the system. This could be a Single Availability Metric (SAM), for example. The ability to quickly glance at a single dashboard allows engineers to rapidly pinpoint the source of an issue and streamlines the debugging and incident mitigation process, as compared to searching through and interpreting detailed monitors or log messages.
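As a rough illustration of condensing health data into one number, the sketch below computes a single availability percentage from per-service request counts. The function name, the service names, the counts, and the choice to weight every request equally are all assumptions made for this example; it is not GitHub's actual SAM definition.

```python
from typing import Mapping, Tuple


def single_availability_metric(counts: Mapping[str, Tuple[int, int]]) -> float:
    """Return overall availability as a percentage.

    `counts` maps a service name to (successful_requests, total_requests).
    Weighting every request equally is an assumption made for this sketch.
    """
    total = sum(t for _, t in counts.values())
    if total == 0:
        return 100.0  # No traffic observed, so nothing has failed.
    ok = sum(s for s, _ in counts.values())
    return 100.0 * ok / total


# Hypothetical counts for three services that depend on the platform.
sample = {"dns": (9_990, 10_000), "pages": (4_995, 5_000), "api": (5_000, 5_000)}
print(f"SAM: {single_availability_metric(sample):.3f}%")
```

A dashboard built on a number like this trades detail for speed: it says that something is wrong, and the detailed monitors and logs then say what.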

Testing changes

Testing changes in a distributed environment can be challenging, especially for services like DNS. A crucial step in solving this issue is utilizing a test site as a “real” machine where you can implement and assess all your changes.

- Infrastructure as Code (IaC): When using tools like Terraform or Ansible, it’s crucial to test fundamental operations like provisioning and deprovisioning machines. There are circumstances where a machine will need to be re-provisioned. In these cases, we want to ensure the machine is not accidentally deleted and that we retain the ability to create a new one if needed.

- End-to-End (E2E): Begin directing some network traffic to these servers. Then the team can observe host behavior by directly interacting with it, or we can evaluate functionality by diverting a small portion of traffic.

- Self-healing: We want to test the platform’s ability to recover from unexpected loads and identify bottlenecks before they impact our users. Early identification of bottlenecks or bugs is crucial for maintaining the health of our platform.

Ideally changes will be implemented on a host-by-host basis once testing is complete. This approach allows for individual machine rollback and prevents changes from being applied to unaffected hosts.
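The host-by-host approach can be sketched as a small loop. This is a minimal illustration, assuming the caller supplies hypothetical `apply_change`, `is_healthy`, and `rollback` callables; in practice those would wrap whatever provisioning and monitoring tooling the team uses.

```python
from typing import Callable, Iterable, List


def rolling_apply(
    hosts: Iterable[str],
    apply_change: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> List[str]:
    """Apply a change one host at a time and stop at the first unhealthy host.

    Returns the hosts that were changed and passed the health check. A host
    that fails the check is rolled back, and the remaining hosts are left
    untouched.
    """
    done: List[str] = []
    for host in hosts:
        apply_change(host)
        if not is_healthy(host):
            rollback(host)
            break
        done.append(host)
    return done


# Hypothetical run: "dns-2" fails its health check, so "dns-3" is never touched.
changed, rolled_back = [], []
ok = rolling_apply(
    ["dns-1", "dns-2", "dns-3"],
    changed.append,
    lambda host: host != "dns-2",
    rolled_back.append,
)
print(ok, changed, rolled_back)
```

Stopping at the first failure keeps the rollback confined to a single machine, which is the property the paragraph above describes.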

What to remember

Platform engineering can be difficult. The systems GitHub operates with are complex and there are a lot of services and moving parts. However, there’s nothing like seeing everything come together. All the hard work our engineering teams do behind the scenes really pays off when the platform is running smoothly and teams are able to ship faster and more reliably — which allows GitHub to be the home to all developers.

The post How GitHub reduced testing time for iOS apps with new runner features appeared first on The GitHub Blog.

GitHub Actions 🤝 GitHub for iOS

The GitHub iOS and GitHub Actions macOS runner teams are integral parts of each other’s development inner loop. Each team partners on testing new runner images and hardware long before the features land in the hands of developers. GitHub Actions has been working hard at bringing the latest Mac hardware to the community. Apple silicon (M1) macOS runners are available for free in public repositories, along with larger options available for those jobs that need more performance.

The GitHub iOS team has been busy improving the user experience in the app, recently shipping features such as GitHub Copilot Chat, code search, and localization for German and Korean, and making it easier to work with issues and projects. In this blog, we will discuss how the GitHub iOS team brings the app to developers around the world, the benefits of Apple silicon, and building on GitHub Actions using macOS runners.

How GitHub reduced testing time for iOS apps with new runner features

The GitHub iOS team previously used a single workflow with one job to build and test the entire codebase on GitHub Actions, which took 38 minutes to complete with the prior generation runners. The GitHub iOS app consists of about 60 first-party modules made up of various targets, such as dynamic frameworks, static libraries, app extensions, or the GitHub app itself. These modules range from networking layers to design system components to entire features or products, helping us maintain the app.

Breaking down the monolith

We decided to leverage the power of Apple silicon to speed up our testing process. We switched to M1 macOS runners (macos-14-xlarge YAML label) on GitHub Actions and split our test suite into separate jobs for each module. This way, we could build and test each module independently and get faster feedback. Some of the smallest modules completed their tests in as little as 2-3 minutes on M1 macOS runners, getting feedback to developers on their pull requests faster than ever before. This also made it easier to identify and fix failures on specific modules without waiting for a monolithic build to finish.

By using Apple silicon, we reduced our testing time by 60%, from 38 minutes to 15 minutes, and improved our productivity and efficiency. The figure below demonstrates how we broke down the monolith into small modules in order to improve our build times.

As each build is kicked off, GitHub Actions is behind the scenes preparing the required number of machines to execute the workflow. Each request is sent to the GitHub Actions service where it picks up a freshly reimaged virtual machine to execute the required number of jobs. The figure below shows how a request travels from our repository to the Actions Mac servers in Azure.

With shorter build times and a scaling CI fleet, Apple silicon hosts allowed the GitHub iOS team to scale their jobs out across many shorter, faster steps, with GitHub Actions abstracting over the complexity of distributing CI jobs.

Analyzing CI performance

We further investigated the CI performance and divided each module’s CI into two separate steps, build and test, using xcodebuild’s build-for-testing and test-without-building actions. This helped us identify unit tests that ran for a long time and highlight fast unit tests that finished in seconds.
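To make the build/test split concrete, here is a small sketch that assembles the two xcodebuild invocations for one module, using the `build-for-testing` and `test-without-building` actions. The scheme name and simulator destination are hypothetical, and a real project would likely also pass `-workspace` or `-project`; this is not the GitHub iOS team's actual script.

```python
from typing import List

# Hypothetical simulator destination; pick one available on your runner image.
DESTINATION = "platform=iOS Simulator,name=iPhone 15"


def xcodebuild_steps(scheme: str, destination: str = DESTINATION) -> List[List[str]]:
    """Return the build and test invocations for one module's scheme."""
    common = ["-scheme", scheme, "-destination", destination]
    return [
        ["xcodebuild", "build-for-testing", *common],      # compile app and tests only
        ["xcodebuild", "test-without-building", *common],  # run the already-built tests
    ]


# "Networking" is a made-up scheme name used only for illustration.
for step in xcodebuild_steps("Networking"):
    print(step)
```

Timing the two invocations separately is what makes it possible to tell a slow compile apart from a slow test run.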

Native development and test environments

With Apple silicon powering GitHub Actions runners and the developers’ laptops, our CI now had the same architecture as local development machines. Engineers could identify patterns that took a long time to compile or tests that failed due to the architecture from CI and fix them locally with confidence.

Benefits of Apple silicon

Apple silicon improves build performance, increases reliability, and lets iOS teams test natively for all Apple platforms throughout the software development lifecycle. They can avoid problems from cross-compilation or emulation and use the latest simulators on our GitHub Actions runner image. This ensures that their apps work well with the newest versions of iOS, iPadOS, watchOS, and tvOS. Our GitHub Actions M1 macOS runners help iOS teams leverage these benefits and deliver high-quality apps to their users faster and more efficiently. Additionally, GitHub Actions offers 50 concurrent runners for enterprise accounts and five for GitHub Free and Team plans. The GitHub for iOS team takes full advantage of these concurrent runners and initiates 50 jobs for every pull request to perform modular testing on the app in parallel.

Get started building on GitHub Actions using macOS runners

GitHub-hosted macOS runners are YAML-driven, meaning they are accessed by updating the runs-on: key in your workflow file.

- Standard GitHub-hosted runners for Public repositories

- Standard GitHub-hosted runners for Private repositories

- macOS larger runners

The post Upgrading GitHub.com to MySQL 8.0 appeared first on The GitHub Blog.

Over 15 years ago, GitHub started as a Ruby on Rails application with a single MySQL database. Since then, GitHub has evolved its MySQL architecture to meet the scaling and resiliency needs of the platform, including building for high availability, implementing testing automation, and partitioning the data. Today, MySQL remains a core part of GitHub’s infrastructure and our relational database of choice.

This is the story of how we upgraded our fleet of 1200+ MySQL hosts to 8.0. Upgrading the fleet with no impact to our Service Level Objectives (SLO) was no small feat: planning, testing, and the upgrade itself took over a year and required collaboration across multiple teams within GitHub.

Motivation for upgrading

Why upgrade to MySQL 8.0? With MySQL 5.7 nearing end of life, we upgraded our fleet to the next major version, MySQL 8.0. We also wanted to be on a version of MySQL that gets the latest security patches, bug fixes, and performance enhancements. There are also new features in 8.0 that we want to test and benefit from, including Instant DDLs, invisible indexes, and compressed bin logs, among others.

GitHub’s MySQL infrastructure

Before we dive into how we did the upgrade, let’s take a 10,000-foot view of our MySQL infrastructure:

- Our fleet consists of 1200+ hosts. It’s a combination of Azure Virtual Machines and bare metal hosts in our data center.

- We store 300+ TB of data and serve 5.5 million queries per second across 50+ database clusters.

- Each cluster is configured for high availability with a primary plus replicas cluster setup.

- Our data is partitioned. We leverage both horizontal and vertical sharding to scale our MySQL clusters. We have MySQL clusters that store data for specific product-domain areas. We also have horizontally sharded Vitess clusters for large-domain areas that outgrew the single-primary MySQL cluster.

- We have a large ecosystem of tools consisting of Percona Toolkit, gh-ost, orchestrator, freno, and in-house automation used to operate the fleet.

All this sums up to a diverse and complex deployment that needs to be upgraded while maintaining our SLOs.

Preparing the journey

As the primary data store for GitHub, we hold ourselves to a high standard for availability. Due to the size of our fleet and the criticality of MySQL infrastructure, we had a few requirements for the upgrade process:

- We must be able to upgrade each MySQL database while adhering to our Service Level Objectives (SLOs) and Service Level Agreements (SLAs).

- We are unable to account for all failure modes in our testing and validation stages. So, in order to remain within SLO, we needed to be able to roll back to the prior version of MySQL 5.7 without a disruption of service.

- We have a very diverse workload across our MySQL fleet. To reduce risk, we needed to upgrade each database cluster atomically and schedule around other major changes. This meant the upgrade process would be a long one. Therefore, we knew from the start we needed to be able to sustain operating a mixed-version environment.

Preparation for the upgrade started in July 2022 and we had several milestones to reach even before upgrading a single production database.

Prepare infrastructure for upgrade

We needed to determine appropriate default values for MySQL 8.0 and perform some baseline performance benchmarking. Since we needed to operate two versions of MySQL, our tooling and automation needed to be able to handle mixed versions and be aware of new, different, or deprecated syntax between 5.7 and 8.0.

Ensure application compatibility

We added MySQL 8.0 to Continuous Integration (CI) for all applications using MySQL. We ran MySQL 5.7 and 8.0 side-by-side in CI to ensure that there wouldn’t be regressions during the prolonged upgrade process. We detected a variety of bugs and incompatibilities in CI, helping us remove any unsupported configurations or features and escape any new reserved keywords.

To help application developers transition towards MySQL 8.0, we also enabled an option to select a MySQL 8.0 prebuilt container in GitHub Codespaces for debugging and provided MySQL 8.0 development clusters for additional pre-prod testing.

Communication and transparency

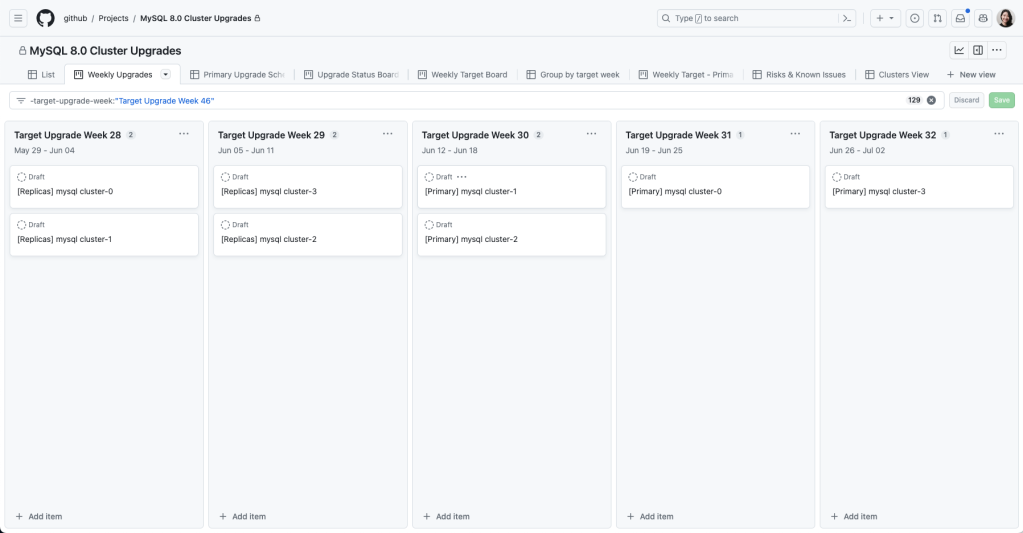

We used GitHub Projects to create a rolling calendar to communicate and track our upgrade schedule internally. We created issue templates that tracked the checklist for both application teams and the database team to coordinate an upgrade.

Upgrade plan

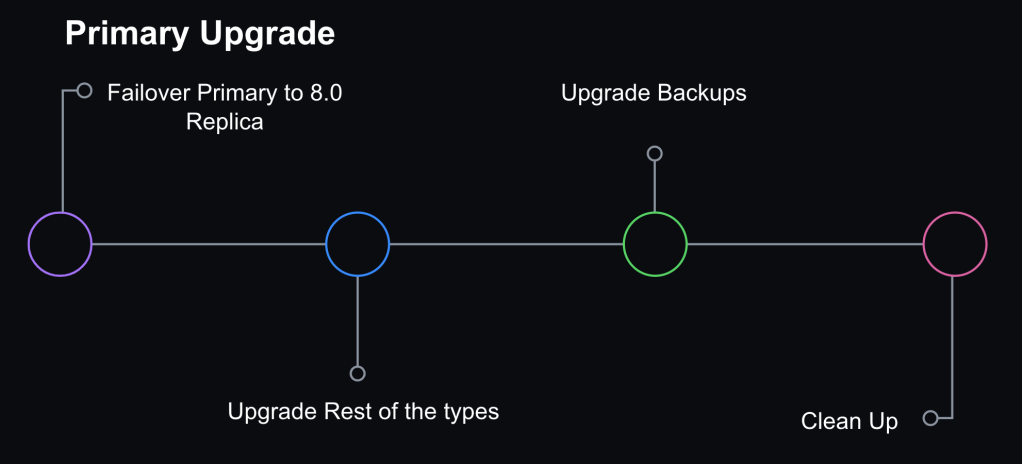

To meet our availability standards, we had a gradual upgrade strategy that allowed for checkpoints and rollbacks throughout the process.

Step 1: Rolling replica upgrades

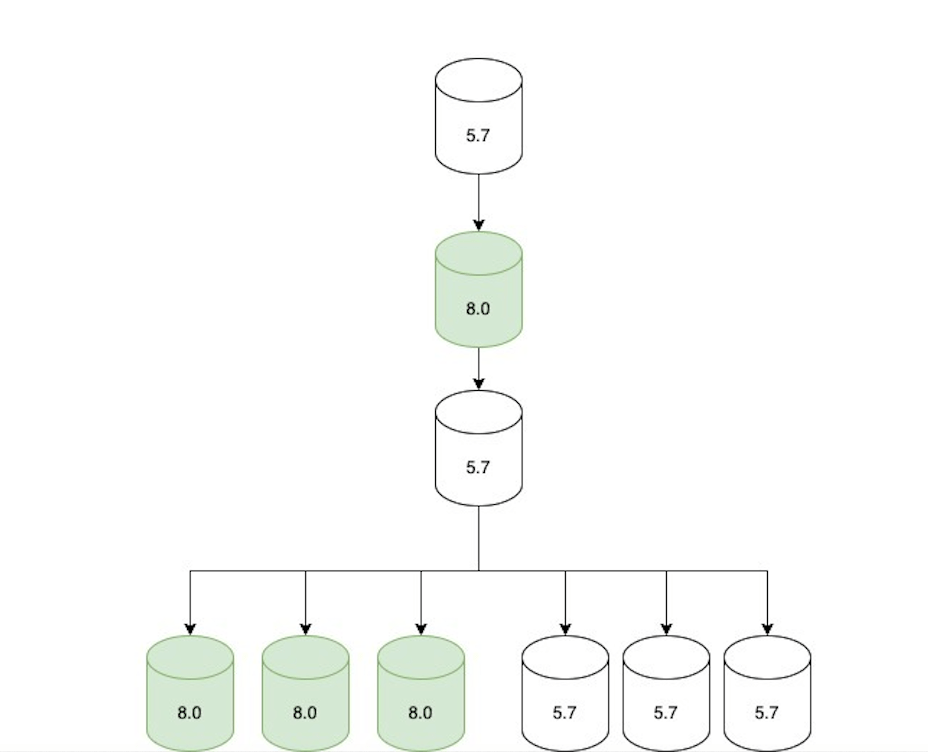

We started by upgrading a single replica and monitoring it while it was still offline to ensure basic functionality was stable. Then, we enabled production traffic and continued to monitor query latency, system metrics, and application metrics. We gradually brought 8.0 replicas online until we had upgraded an entire data center, and then iterated through the other data centers. We left enough 5.7 replicas online to be able to roll back, but we disabled production traffic to them so that all read traffic was served by 8.0 servers.

Step 2: Update replication topology

Once all the read-only traffic was being served via 8.0 replicas, we adjusted the replication topology as follows:

- An 8.0 primary candidate was configured to replicate directly under the current 5.7 primary.

- Two replication chains were created downstream of that 8.0 replica:

  - A set of only 5.7 replicas (not serving traffic, but ready in case of rollback).

  - A set of only 8.0 replicas (serving traffic).

- The topology was only in this state for a short period of time (hours at most) until we moved to the next step.
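The intermediate topology can be modeled as a small map from each host to its version and replication source. The sketch below, with made-up host names, lists every link where a newer server feeds an older one. That is the direction MySQL does not explicitly support, which is one way to see why this state was kept short-lived.

```python
from typing import Dict, List, Optional, Tuple

Version = Tuple[int, int]

# Hypothetical host names; each entry is host -> (version, replication source).
topology: Dict[str, Tuple[Version, Optional[str]]] = {
    "primary-57": ((5, 7), None),
    "candidate-80": ((8, 0), "primary-57"),
    "replica-80-a": ((8, 0), "candidate-80"),
    "replica-80-b": ((8, 0), "candidate-80"),
    "replica-57-a": ((5, 7), "candidate-80"),
}


def backward_links(
    topo: Dict[str, Tuple[Version, Optional[str]]]
) -> List[Tuple[str, str]]:
    """Return (source, replica) pairs where the source runs a newer version."""
    links = []
    for host, (version, source) in topo.items():
        if source is not None and topo[source][0] > version:
            links.append((source, host))
    return links


print(backward_links(topology))
```

Here the only backward link is from the 8.0 primary candidate to the standby 5.7 replica; every other link replicates from an equal or older version.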

Step 3: Promote MySQL 8.0 host to primary

We opted not to do direct upgrades on the primary database host. Instead, we would promote a MySQL 8.0 replica to primary through a graceful failover performed with Orchestrator. At that point, the replication topology consisted of an 8.0 primary with two replication chains attached to it: an offline set of 5.7 replicas in case of rollback and a serving set of 8.0 replicas.

Orchestrator was also configured to blacklist 5.7 hosts as potential failover candidates to prevent an accidental rollback in case of an unplanned failover.

Step 4: Internal facing instance types upgraded

We also have ancillary servers for backups or non-production workloads. Those were subsequently upgraded for consistency.

Step 5: Cleanup

Once we confirmed that the cluster didn’t need to roll back and was successfully upgraded to 8.0, we removed the 5.7 servers. Validation consisted of at least one complete 24-hour traffic cycle to ensure there were no issues during peak traffic.

Ability to Rollback

A core part of keeping our upgrade strategy safe was maintaining the ability to roll back to the prior version, MySQL 5.7. For read-replicas, we ensured enough 5.7 replicas remained online to serve production traffic load, and rollback was initiated by disabling the 8.0 replicas if they weren’t performing well. For the primary, in order to roll back without data loss or service disruption, we needed to be able to maintain backwards data replication between 8.0 and 5.7.

MySQL supports replication from one release to the next higher release but does not explicitly support the reverse (MySQL Replication compatibility). When we tested promoting an 8.0 host to primary on our staging cluster, we saw replication break on all 5.7 replicas. There were a couple of problems we needed to overcome:

- In MySQL 8.0, utf8mb4 is the default character set and uses a more modern utf8mb4_0900_ai_ci collation as the default. The prior version of MySQL 5.7 supported the utf8mb4_unicode_520_ci collation but not the latest version of Unicode utf8mb4_0900_ai_ci.

- MySQL 8.0 introduces roles for managing privileges but this feature did not exist in MySQL 5.7. When an 8.0 instance was promoted to be a primary in a cluster, we encountered problems. Our configuration management was expanding certain permission sets to include role statements and executing them, which broke downstream replication in 5.7 replicas. We solved this problem by temporarily adjusting defined permissions for affected users during the upgrade window.

To address the character collation incompatibility, we had to set the default character encoding to utf8 and collation to utf8_unicode_ci.
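One way to audit for this incompatibility is to scan column collations and flag any that a 5.7 replica would not recognize. The sketch below is a hypothetical helper, not part of GitHub's tooling; it assumes the (column, collation) pairs were read from somewhere such as information_schema.COLUMNS, and it only checks the utf8mb4_0900_ prefix.

```python
from typing import Iterable, List, Optional, Tuple

# Prefix of the collation family mentioned above that MySQL 5.7 lacks.
# This is the only prefix the sketch checks; it is not a complete list.
UNSUPPORTED_ON_57_PREFIXES = ("utf8mb4_0900_",)


def collations_unsafe_for_57(
    columns: Iterable[Tuple[str, Optional[str]]]
) -> List[str]:
    """Return the names of columns whose collation 5.7 does not recognize.

    `columns` holds (qualified_column_name, collation_name) pairs. The
    collation is None for non-character columns, which are skipped.
    """
    return [
        name
        for name, collation in columns
        if collation and collation.startswith(UNSUPPORTED_ON_57_PREFIXES)
    ]


# Made-up rows for illustration.
rows = [
    ("app.users.login", "utf8mb4_0900_ai_ci"),
    ("app.users.bio", "utf8mb4_unicode_520_ci"),
    ("app.users.id", None),
]
print(collations_unsafe_for_57(rows))
```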

For the GitHub.com monolith, our Rails configuration ensured that character collation was consistent and made it easier to standardize client configurations to the database. As a result, we had high confidence that we could maintain backward replication for our most critical applications.

Challenges

Throughout our testing, preparation and upgrades, we encountered some technical challenges.

What about Vitess?

We use Vitess for horizontally sharding relational data. For the most part, upgrading our Vitess clusters was not too different from upgrading the MySQL clusters. We were already running Vitess in CI, so we were able to validate query compatibility. In our upgrade strategy for sharded clusters, we upgraded one shard at a time. VTgate, the Vitess proxy layer, advertises the version of MySQL and some client behavior depends on this version information. For example, one application used a Java client that disabled the query cache for 5.7 servers—since the query cache was removed in 8.0, it generated blocking errors for them. So, once a single MySQL host was upgraded for a given keyspace, we had to make sure we also updated the VTgate setting to advertise 8.0.

Replication delay

We use read-replicas to scale our read availability. GitHub.com requires low replication delay in order to serve up-to-date data.

Earlier on in our testing, we encountered a replication bug in MySQL that was patched on 8.0.28:

Replication: If a replica server with the system variable replica_preserve_commit_order = 1 set was used under intensive load for a long period, the instance could run out of commit order sequence tickets. Incorrect behavior after the maximum value was exceeded caused the applier to hang and the applier worker threads to wait indefinitely on the commit order queue. The commit order sequence ticket generator now wraps around correctly. Thanks to Zhai Weixiang for the contribution. (Bug #32891221, Bug #103636)

We happen to meet all the criteria for hitting this bug.

- We use replica_preserve_commit_order because we use GTID based replication.

- We have intensive load for long periods of time on many of our clusters and certainly for all of our most critical ones. Most of our clusters are very write-heavy.

Since this bug was already patched upstream, we just needed to ensure we deployed MySQL 8.0.28 or later.
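A deployment guard for this can be as simple as comparing version tuples. The helper names below are hypothetical, and the parsing assumes a version string shaped like "8.0.28" with an optional suffix after a hyphen.

```python
from typing import Tuple

# First release that contains the commit-order ticket fix quoted above.
FIXED_IN = (8, 0, 28)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a string such as '8.0.32-log' into (8, 0, 32)."""
    core = text.split("-", 1)[0]  # Drop any build suffix after the first hyphen.
    return tuple(int(part) for part in core.split("."))


def has_commit_order_fix(version: str) -> bool:
    """Return True if the version is 8.0.28 or later."""
    return parse_version(version) >= FIXED_IN


for candidate in ("8.0.27", "8.0.28", "8.0.32-log"):
    print(candidate, has_commit_order_fix(candidate))
```

Comparing tuples rather than strings avoids the classic mistake of treating "8.0.9" as newer than "8.0.28".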

We also observed that the heavy writes that drove replication delay were exacerbated in MySQL 8.0. This made it even more important that we avoid heavy bursts in writes. At GitHub, we use freno to throttle write workloads based on replication lag.
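The idea of throttling writes on replication lag can be sketched as a loop that waits before each batch until lag is back under a threshold. This is a generic illustration and not freno's API: the function, its parameters, and the threshold values are assumptions, and how the lag value is obtained is left to the caller.

```python
import time
from typing import Callable, Iterable, List


def throttled_batches(
    batches: Iterable[List[int]],
    write_batch: Callable[[List[int]], None],
    replication_lag_seconds: Callable[[], float],
    max_lag_seconds: float = 1.0,
    pause_seconds: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Write batches one at a time, pausing while replicas are behind.

    Returns how many times the writer paused.
    """
    pauses = 0
    for batch in batches:
        while replication_lag_seconds() > max_lag_seconds:
            sleep(pause_seconds)  # Give replicas time to catch up.
            pauses += 1
        write_batch(batch)
    return pauses


# Simulated lag readings: replicas are behind once, then recover.
lags = iter([2.0, 0.5, 0.1])
written: List[List[int]] = []
pauses = throttled_batches(
    [[1, 2], [3, 4]], written.append, lambda: next(lags), sleep=lambda _: None
)
print(pauses, written)
```

Checking lag before every batch, rather than once per job, is what smooths out the heavy write bursts the paragraph above warns about.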

Queries would pass CI but fail on production

We knew we would inevitably see problems for the first time in production environments, hence our gradual rollout strategy with upgrading replicas. We encountered queries that passed CI but would fail on production when encountering real-world workloads. Most notably, we encountered a problem where queries with large WHERE IN clauses would crash MySQL. We had large WHERE IN queries containing tens of thousands of values. In those cases, we needed to rewrite the queries prior to continuing the upgrade process. Query sampling helped to track and detect these problems. At GitHub, we use SolarWinds DPM (VividCortex), a SaaS database performance monitor, for query observability.
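The post does not say how these queries were rewritten. One common approach, shown here purely as an assumption, is to split the value list into bounded batches so that no single statement carries tens of thousands of values. The table name, column name, and batch size are hypothetical, and the `%s` placeholders follow the parameter style used by common Python MySQL drivers.

```python
from typing import Iterator, List, Sequence, Tuple


def chunked_in_queries(
    table: str, column: str, values: Sequence[int], chunk_size: int = 1000
) -> Iterator[Tuple[str, List[int]]]:
    """Yield (sql, params) pairs, each with at most `chunk_size` placeholders.

    `table` and `column` are interpolated directly, so they must be trusted
    identifiers; only the values are passed as parameters.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    for start in range(0, len(values), chunk_size):
        chunk = list(values[start:start + chunk_size])
        placeholders = ", ".join(["%s"] * len(chunk))
        yield f"SELECT * FROM {table} WHERE {column} IN ({placeholders})", chunk


# 2,500 made-up IDs become three statements instead of one very large one.
queries = list(chunked_in_queries("issues", "id", range(2500)))
print([len(params) for _, params in queries])
```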

Learnings and takeaways

Between testing, performance tuning, and resolving identified issues, the overall upgrade process took over a year and involved engineers from multiple teams at GitHub. We upgraded our entire fleet to MySQL 8.0, including staging clusters, production clusters in support of GitHub.com, and instances in support of internal tools. This upgrade highlighted the importance of our observability platform, testing plan, and rollback capabilities. The testing and gradual rollout strategy allowed us to identify problems early and reduce the likelihood of encountering new failure modes for the primary upgrade.

While there was a gradual rollout strategy, we still needed the ability to roll back at every step and we needed the observability to identify signals to indicate when a rollback was needed. The most challenging aspect of enabling rollbacks was holding onto the backward replication from the new 8.0 primary to 5.7 replicas. We learned that consistency in the Trilogy client library gave us more predictability in connection behavior and allowed us to have confidence that connections from the main Rails monolith would not break backward replication.

However, for some of our MySQL clusters with connections from multiple different clients in different frameworks/languages, we saw backwards replication break in a matter of hours, which shortened the window of opportunity for rollback. Luckily, those cases were few and we didn’t have an instance where the replication broke before we needed to roll back. But for us this was a lesson that there are benefits to having known and well-understood client-side connection configurations. It emphasized the value of developing guidelines and frameworks to ensure consistency in such configurations.

Prior efforts to partition our data paid off—it allowed us to have more targeted upgrades for the different data domains. This was important as one failing query would block the upgrade for an entire cluster and having different workloads partitioned allowed us to upgrade piecemeal and reduce the blast radius of unknown risks encountered during the process. The tradeoff here is that this also means that our MySQL fleet has grown.

The last time GitHub upgraded MySQL versions, we had five database clusters and now we have 50+ clusters. In order to successfully upgrade, we had to invest in observability, tooling, and processes for managing the fleet.

Conclusion

A MySQL upgrade is just one type of routine maintenance that we have to perform – it’s critical for us to have an upgrade path for any software we run on our fleet. As part of the upgrade project, we developed new processes and operational capabilities to successfully complete the MySQL version upgrade. Yet, we still had too many steps in the upgrade process that required manual intervention and we want to reduce the effort and time it takes to complete future MySQL upgrades.

We anticipate that our fleet will continue to grow as GitHub.com grows, and we have goals to partition our data further, which will increase our number of MySQL clusters over time. Building in automation for operational tasks and self-healing capabilities can help us scale MySQL operations in the future. We believe that investing in reliable fleet management and automation will allow us to scale GitHub and keep up with required maintenance, providing a more predictable and resilient system.

The lessons from this project provided the foundations for our MySQL automation and will pave the way for future upgrades to be done more efficiently, but still with the same level of care and safety.

The post How GitHub uses GitHub Actions and Actions larger runners to build and test GitHub.com appeared first on The GitHub Blog.

Read on to see how we run 15,000 CI jobs within an hour across 150,000 cores of compute!

Brief history of CI at GitHub

GitHub has invested in a variety of different CI systems throughout its history. With each system, our aim has been to enhance the development experience for both GitHub engineers writing and deploying code and for engineers maintaining the systems.

However, with past CI systems we faced challenges scaling the system to meet the needs of our engineering team and providing both stable and ephemeral build environments. These challenges kept us from providing the optimal developer experience.

Then, GitHub released GitHub Actions larger runners. This gave us an opportunity not only to transition to a fully featured CI system, but also to develop, experience, and utilize the systems we are creating for our customers and to drive feedback to help build the product. For the GitHub DX team, this transition was a great opportunity to move away from maintaining our past CI systems while delivering a superior developer experience.

What are larger runners?

Larger runners are GitHub Actions runners that are hosted by GitHub. They are managed virtual machines (VMs) with more RAM, CPU, and disk space than standard GitHub-hosted runners. There are a variety of different machine sizes offered for the runners as well as some additional features compared to the standard GitHub-hosted runners.

Larger runners are available to GitHub Team and GitHub Enterprise Cloud customers. Check out these docs to learn more about larger runners.

Why did we pick larger runners?

Autoscaling and managed

Coming from previous iterations of GitHub’s CI systems, we needed the ability to create CI machines on demand to meet the fast feedback cycles needed by GitHub engineers and to scale with the rate of change of the site.

With larger runners, we maintain the ability to autoscale our CI system because GitHub will automatically create multiple instances of a runner that scale up and down to match the job demands of our engineers. An added benefit is that the GitHub DX team no longer has to worry about the scaling of the runners since all of those complexities are handled by GitHub itself!

We wanted to share some raw numbers on our current peak utilization of larger runners:

- Uses 4,500 concurrent 32-core runners

- Runs 125,000 build minutes per hour

- Queues and runs approximately 15,000 jobs within an hour

- Allocates around 150,000 cores of compute

(Beta) Custom VM image support

GitHub Actions provides runners with a lot of tools already baked in, which is sufficient and convenient for a variety of projects across the company. However, for some complex production GitHub services, the prebuilt runners did not satisfy all our requirements.

To maintain an efficient and fast CI system, the DX team needed the ability to provide machines with all the tools needed to build those production services. We didn’t want to spend extra time installing tools or compiling projects during CI jobs.

We are currently building features into larger runners so they have the ability to be launched from a custom VM image, called custom images. While this feature is still in beta, using custom images is a huge benefit to GitHub’s CI lifecycle for a couple of reasons.

First, custom images allow GitHub to bundle all the required software and tools needed to build and test complex production-bearing services. Anything that is unique to GitHub or one of our projects can be pre-installed on the image before a GitHub Actions workflow even starts.

Second, custom images enable GitHub to dramatically speed up our GitHub Actions workflows by acting as a bootstrapping cache for some projects. During custom image creation, we bundle a pre-built version of a project’s source code into the image. Subsequently, when the project starts a GitHub Actions workflow, it can utilize a cached version of its source code, and any other build artifacts, to speed up its build process.

The cached project source code on the custom VM image can quickly become out of date due to the rapid rate of development within GitHub. This, in turn, causes workflow durations to increase. The DX team worked with the GitHub Actions engineering team to create an API on GitHub to regularly update the custom image multiple times a day to keep the project source up to date.

In practice, this has reduced the bootstrapping time of our projects significantly. Without custom images, our workflows would take around 50 minutes from start to finish, versus the 12 minutes they take today. This is a game changer for our engineers.

We’re working on a way to offer this functionality at scale. If you are interested in custom images for your CI/CD workflows, please reach out to your account manager to learn more!

Important GitHub Actions features

There are thousands of projects at GitHub — from services that run production workloads to small tools that need to run CI to perform their daily operations. To make this a reality, GitHub leverages several important features in GitHub Actions that enable us to use the platform efficiently and securely across the company at scale.

Reusable workflows

One of the DX team’s driving goals is to pave paths for all repositories to run CI without introducing unnecessary repetition across repositories. Prior to GitHub Actions, we created single job configurations that could be used across multiple projects. In GitHub Actions, this was not as easy because any repository can define its own workflows. Reusable workflows to the rescue!

The reusable workflows feature in GitHub Actions provides a way to centrally manage a workflow in a repository that can be utilized by many other repositories in an organization. This was critical in our transition from our previous CI system to GitHub Actions. We were able to create several prebuilt workflows in a single repository, and many repositories could then use those workflows. This makes the process of adding CI to an existing or new project very much plug and play.

In our central repository hosting our reusable workflows, we can have workflows defined like:

    on:
      workflow_call:
        inputs:
          cibuild-script:
            description: 'Which cibuild script to run.'
            type: string
            required: false
            default: "script/cibuild"
        secrets:
          service-api-key:
            required: true

    jobs:
      reusable_workflow_job:
        runs-on: gh-larger-runner-medium
        name: Simple Workflow Job
        timeout-minutes: 20
        steps:
          - name: Checkout Project
            uses: actions/checkout@v3
          - name: Run cibuild script
            run: |
              bash ${{ inputs.cibuild-script }}
            shell: bash

And in consuming repositories, they can simply utilize the reusable workflow, with just a few lines of code!

    name: my-new-project

    on:
      workflow_dispatch:
      push:

    jobs:
      call-reusable-workflow:
        uses: github/internal-actions/.github/workflows/default.yml@main
        with:
          cibuild-script: "script/cibuild-my-tests"
        secrets:
          service-api-key: ${{ secrets.SERVICE_API_KEY }}

Another great benefit of the reusable workflows feature is that the runner can be defined in the reusable workflow itself, which guarantees that all users of the workflow run on our designated larger runner pool. Now, projects don’t need to worry about which runner they need to use!

(Beta) Reusing previous workflow outcomes

To optimize our developer experience, the DX team worked with our engineering team to create a feature for GitHub Actions that allows workflows to reuse the outcome of a previous workflow run where the outcomes would be the same.

In some cases, the file contents of a repository are exactly the same between workflow runs that run on different commits. That is, the Git tree ID for the current commit is the same as the tree ID for the previous commit (there are no file differences). In these cases, we can bypass CI checks by reusing the previous workflow outcomes, so engineers don’t have to wait for CI to run again.

This feature saves GitHub engineers from running anywhere from 300 to 500 workflow runs a day!
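To make the idea concrete, here is a minimal sketch of outcome reuse keyed by Git tree ID. It is not how the GitHub Actions feature is implemented: the `WorkflowOutcomeCache` class, the in-memory hash, and the shortened tree IDs are all hypothetical. In a real repository, a commit's tree ID can be read with `git rev-parse <commit>^{tree}`.

```ruby
# Hypothetical in-memory store; a real system would persist outcomes and
# read tree IDs from Git rather than take them as plain strings.
class WorkflowOutcomeCache
  def initialize
    @outcomes = {} # [workflow name, tree ID] => outcome
  end

  # Returns the stored outcome for this tree, or runs the block and stores it.
  def fetch(workflow, tree_id)
    key = [workflow, tree_id]
    return @outcomes[key] if @outcomes.key?(key)

    @outcomes[key] = yield
  end
end

cache = WorkflowOutcomeCache.new
runs = 0

# Two different commits that point at the same tree (no file differences):
# the second call reuses the first outcome instead of running CI again.
cache.fetch("ci", "4b825dc") { runs += 1; :success }
cache.fetch("ci", "4b825dc") { runs += 1; :success }

# A commit with a different tree still triggers a real run.
cache.fetch("ci", "9f2c1aa") { runs += 1; :failure }

puts "CI executed #{runs} times for 3 commits"
```

Keying on the tree ID rather than the commit ID is what lets two distinct commits share a result: the commit ID changes with the message or parent, while the tree ID changes only when file contents do.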

Other challenges faced

Private service access

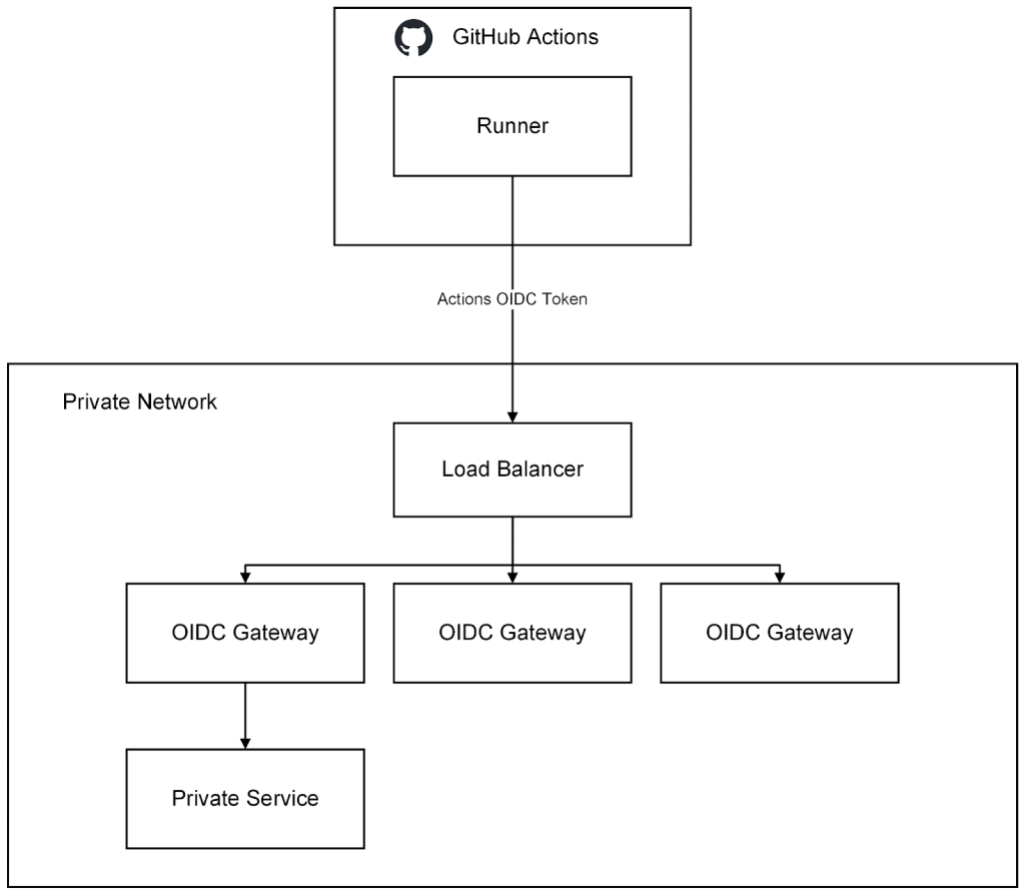

During some internal GitHub Actions workflow runs, the workflows need the ability to access some GitHub private services, within a GitHub virtual private cloud (VPC), over the network. These could be resources such as artifact storage, application metadata services, and other services that enable invocation of our test harness.

When we moved to larger runners, this requirement to access private services became a top-of-mind concern. In previous iterations of our CI infrastructure, these private services were accessible through other cloud and network configurations. However, larger runners are isolated from other production environments, meaning they cannot access our private services.

Like all companies, we need to focus on both the security of our platform as well as the developer experience. To satisfy these two requirements, GitHub developed a remote access solution that allows clients residing outside of our VPCs (larger runners) to securely access select private services.

This remote access solution works on the principle of minting an OIDC token in GitHub Actions, passing the OIDC token to a remote access gateway that authorizes the request by validating the OIDC token, and then proxying the request to the private service residing in a private network.
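The authorization step can be sketched in a few lines. This is an illustration only and not the gateway's actual code: the `ALLOWED` policy table, the service name, and the repository name are hypothetical, and the sketch assumes the token's signature, expiry, and audience have already been verified by a JWT library. The issuer URL and the `repository` claim are part of GitHub Actions OIDC tokens.

```ruby
# Issuer of GitHub Actions OIDC tokens.
GITHUB_ACTIONS_ISSUER = "https://token.actions.githubusercontent.com"

# Hypothetical gateway policy: which repositories may reach which service.
ALLOWED = {
  "artifact-storage" => ["octo-org/octo-repo"],
}.freeze

# `claims` is the payload of a token whose signature, expiry, and audience
# have ALREADY been verified; that step is deliberately omitted here.
def authorized?(claims, service)
  return false unless claims["iss"] == GITHUB_ACTIONS_ISSUER

  ALLOWED.fetch(service, []).include?(claims["repository"])
end

# Returns a [status, body] pair instead of performing real network I/O.
def handle_request(claims, service)
  return [403, "forbidden"] unless authorized?(claims, service)

  # A real gateway would proxy the request into the private network here.
  [200, "proxied to #{service}"]
end

claims = { "iss" => GITHUB_ACTIONS_ISSUER, "repository" => "octo-org/octo-repo" }
p handle_request(claims, "artifact-storage")
p handle_request(claims.merge("repository" => "someone/else"), "artifact-storage")
```

Denying by default (an unknown service or repository falls through to 403) keeps the gateway closed unless a policy entry explicitly opens it.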

With this solution we are able to securely provide remote access from larger runners running GitHub Actions to our private resources within our VPC.

GitHub has open sourced the basic scaffolding of this remote access gateway in the github/actions-oidc-gateway-example repository, so be sure to check it out!

Conclusion

GitHub Actions provides a robust and smooth developer experience for GitHub engineers working on GitHub.com. We have been able to accomplish this by using the power of GitHub Actions features, such as reusable workflows and reusable workflow outcomes, and by leveraging the scalability and manageability of the GitHub Actions larger runners. We have also used this effort to enhance the GitHub Actions product. To put it simply, GitHub runs on GitHub.

- Level up your GitHub Actions skillset with our learning pathways on automation.

- Explore how investing in a better developer experience frees developers to do what matters most: building great software.

- Learn how generative AI is changing the developer experience.

- Modernize your software development environment and transform your business with GitHub.

The post How GitHub uses GitHub Actions and Actions larger runners to build and test GitHub.com appeared first on The GitHub Blog.

The post Scaling merge-ort across GitHub appeared first on The GitHub Blog.

Our requirements for a merge strategy

There are a few non-negotiable parts of any merge strategy we want to employ:

- It has to be fast. At GitHub’s scale, even a small slowdown is multiplied by the millions of activities going on in repositories we host each day.

- It has to be correct. For merge strategies, what’s “correct” is occasionally a matter of debate. In those cases, we try to match what users expect (which is often whatever the Git command line does).

- It can’t check out the repository. There are both scalability and security implications to having a working directory, so we simply don’t.

Previously, we used libgit2 to tick these boxes: it was faster than Git’s default merge strategy and it didn’t require a working directory. On the correctness front, we either performed the merge or reported a merge conflict and halted. However, because of additional code related to merge base selection, sometimes a user’s local Git could easily merge what our implementation could not. This led to a steady stream of support tickets asking why the GitHub web UI couldn’t merge two files when the local command line could. We weren’t meeting those users’ expectations, so from their perspective, we weren’t correct.

A new strategy emerges

Two years ago, Git learned a new merge strategy, merge-ort. As the author details on the mailing list, merge-ort is fast, correct, and addresses many shortcomings of the older default strategy. Even better, unlike merge-recursive, it doesn’t need a working directory. merge-ort is much faster even than our optimized, libgit2-based strategy. What’s more, merge-ort has since become Git’s default. That meant our strategy would fall even further behind on correctness.

It was clear that GitHub needed to upgrade to merge-ort. We split this effort into two parts: first deploy merge-ort for merges, then deploy it for rebases.

merge-ort for merges

Last September, we announced that we’re using merge-ort for merge commits. We used Scientist to run both code paths in production so we could compare timing and correctness without much risk. The customer still gets the result of the old code path, while the GitHub feature team gets to compare and contrast the behavior of the new code path. Our process was:

- Create and enable a Scientist experiment with the new code path.

- Roll it out to a fraction of traffic. In our case, we started with some GitHub-internal repositories first before moving to a percentage-based rollout across all of production.

- Measure gains, check correctness, and fix bugs iteratively.
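The shape of such an experiment can be sketched without the Scientist gem. The code below is a hand-rolled stand-in and not Scientist's API: `run_experiment`, `Observation`, and the lambdas are hypothetical names used only to show the control/candidate pattern described above.

```ruby
# Hand-rolled stand-in for a Scientist-style experiment (not the gem's API).
Observation = Struct.new(:name, :matched, :control_seconds, :candidate_seconds)

# Runs the block and returns [value, elapsed seconds].
def timed
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  value = yield
  [value, Process.clock_gettime(Process::CLOCK_MONOTONIC) - started]
end

def run_experiment(name, control:, candidate:, observations:)
  control_value, control_seconds = timed { control.call }

  candidate_value, candidate_seconds =
    begin
      timed { candidate.call }
    rescue StandardError => e
      [e, nil] # a failing candidate is recorded, never surfaced to the user
    end

  matched = control_value == candidate_value
  observations << Observation.new(name, matched, control_seconds, candidate_seconds)

  control_value # callers always receive the old code path's result
end

observations = []
result = run_experiment(
  "merge",
  control:   -> { "tree-abc123" }, # stands in for the libgit2-based merge
  candidate: -> { "tree-abc123" }, # stands in for the merge-ort merge
  observations: observations
)

puts "returned #{result}, matched: #{observations.last.matched}"
```

Because the candidate's result is only recorded and never returned, mismatches and exceptions show up in the observations without changing what the customer sees.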

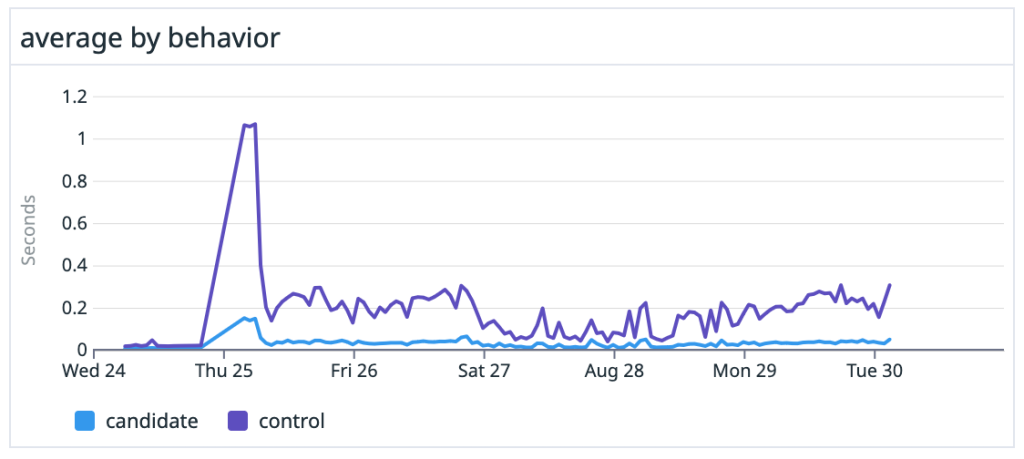

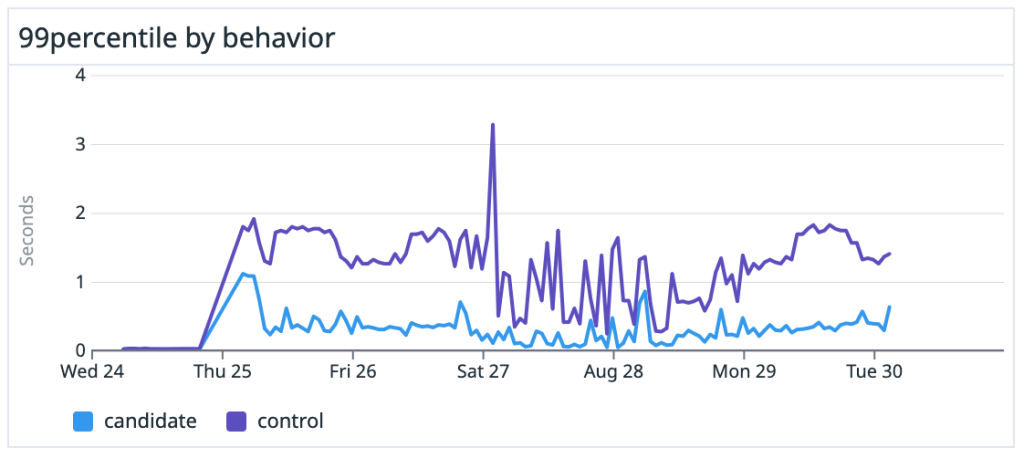

We saw dramatic speedups across the board, especially on large, heavily-trafficked repositories. For our own github/github monolith, we saw a 10x speedup in both the average and P99 case. Across the entire experiment, our P50 saw the same 10x speedup and P99 case got nearly a 5x boost.

merge-ort for rebases

Like merges, we also do a huge number of rebases. Customers may choose rebase workflows in their pull requests. We also perform test rebases and other “behind the scenes” operations, so we also brought merge-ort to rebases.

This time around, we powered rebases using a new Git subcommand: git-replay. git replay was written by the original author of merge-ort, Elijah Newren (a prolific Git contributor). With this tool, we could perform rebases using merge-ort and without needing a worktree. Once again, the path was pretty similar:

- Merge git-replay into our fork of Git. (We were running the experiment with Git 2.39, which didn’t include the git-replay feature.)

- Before shipping, leverage our test suite to detect discrepancies between the old and the new implementations.

- Write automation to flush out bugs by performing test rebases of all open pull requests in github/github and comparing the results.

- Set up a Scientist experiment to measure the performance delta between libgit2-powered and merge-ort-powered rebases, and to monitor for unexpected mismatches in behavior.

- Measure gains, check correctness, and fix bugs iteratively.

Once again, we were amazed at the results. The following is a great anecdote from testing, as relayed by @wincent (one of the GitHub engineers on this project):

Another way to think of this is in terms of resource usage. We ran the experiment over 730k times. In that interval, our computers spent 2.56 hours performing rebases with libgit2, but under 10 minutes doing the same work with merge-ort. And this was running the experiment for 0.5% of actors. Extrapolating those numbers out to 100%, if we had done all rebases during that interval with merge-ort, it would have taken us 2,000 minutes, or about 33 hours. That same work done with libgit2 would have taken 512 hours!
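The extrapolation in the quote is a straight scale-up from 0.5% of actors to 100%, which can be checked in a few lines. The variable names are ours, and 10 minutes is used as the upper bound for merge-ort because the quote says "under 10 minutes".

```ruby
sample_percent    = 0.5   # share of actors included in the experiment
libgit2_hours     = 2.56  # time spent on rebases with libgit2
merge_ort_minutes = 10.0  # upper bound for the same work with merge-ort

scale = 100 / sample_percent # going from 0.5% to 100% is a factor of 200

merge_ort_total_minutes = (merge_ort_minutes * scale).round
merge_ort_total_hours   = (merge_ort_total_minutes / 60.0).round
libgit2_total_hours     = (libgit2_hours * scale).round

puts "merge-ort: #{merge_ort_total_minutes} minutes, about #{merge_ort_total_hours} hours"
puts "libgit2:   #{libgit2_total_hours} hours"
```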

What’s next

While we’ve covered the most common uses, this is not the end of the story for merge-ort at GitHub. There are still other places in which we can leverage its superpowers to bring better performance, greater accuracy, and improved availability. Squashing and reverting are on our radar for the future, as well as considering what new product features it could unlock down the road.

Appreciation

Many thanks to all the GitHub folks who worked on these two projects. Also, GitHub continues to be grateful for the hundreds of volunteer contributors to the Git open source project, including Elijah Newren for designing, implementing, and continually improving merge-ort.


The post Experiment: The hidden costs of waiting on slow build times appeared first on The GitHub Blog.

When you ask a developer whether they’d prefer more or less powerful hardware, the answer is almost always the same: they want more powerful hardware. That’s because more powerful hardware means less time waiting on builds, and that means more time to build the next feature or fix a bug.

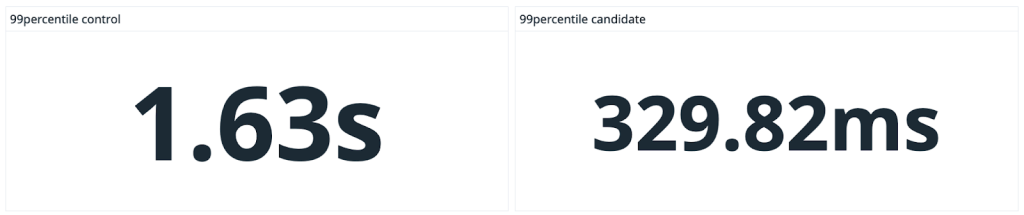

But even if the upfront cost is higher for higher-powered hardware, what’s the actual cost when you consider the impact on developer productivity?

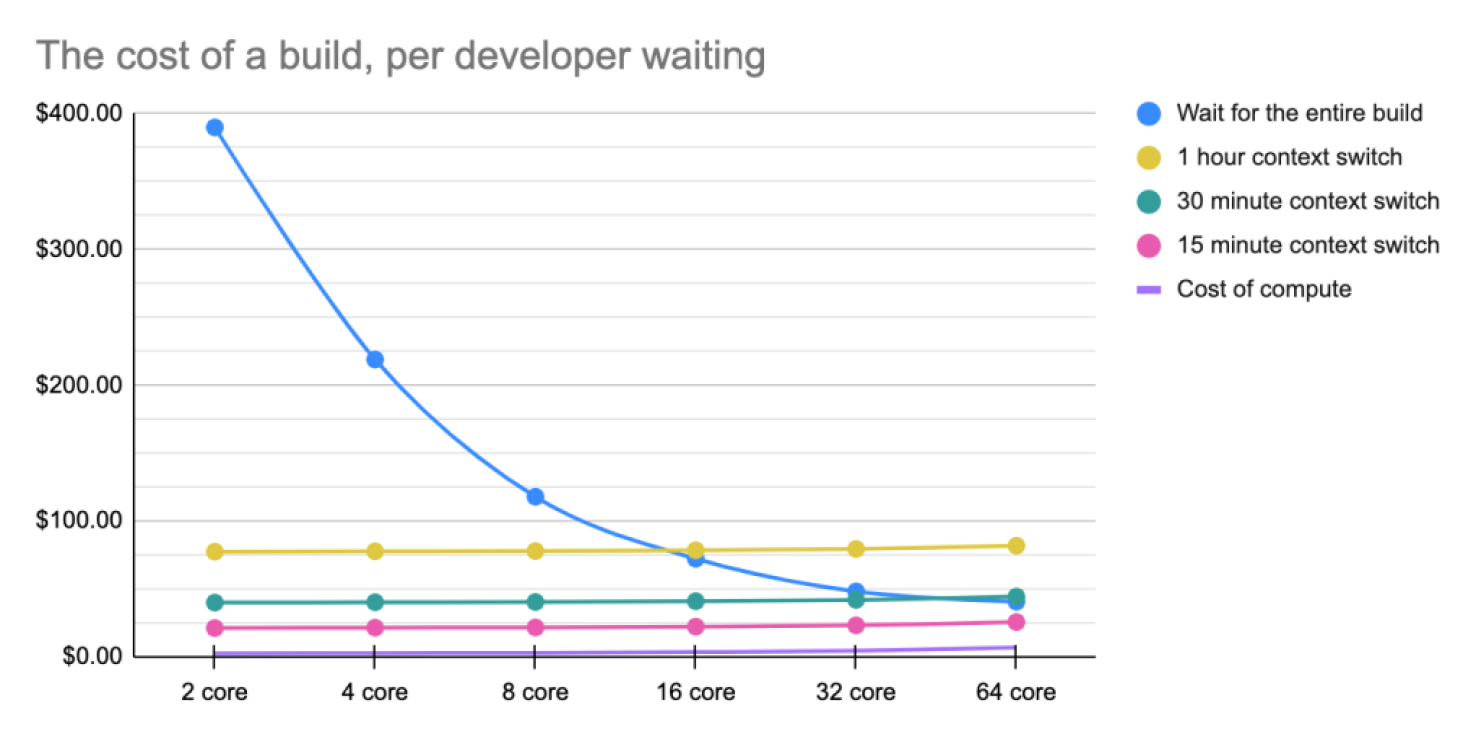

To find out, I set up an experiment using GitHub’s new, larger hosted runners, which offer powerful cloud-based compute resources, to execute a large build at each compute tier from 2 cores to 64 cores. I wanted to see what the cost of each build time would be, and then compare that with the average hourly cost of a United States-based developer to figure out the actual operational expense for a business.

The results might surprise you.

Testing build times vs. cost by core size on compute resources

For my experiment, I used my own personal project where I compile the Linux kernel (seriously!) for Fedora 35 and Fedora 36. For background, I need a non-standard patch to play video games on my personal desktop without having to deal with dual booting.

Beyond being a fun project, it’s also a perfect case study for this experiment. As a software build, it takes a long time to run—and it’s a great proxy for more intensive software builds developers often navigate at work.

Now comes the fun part: our experiment. Like I said above, I’m going to initiate builds of this project at each compute tier from 2 cores to 64 cores, and then determine how long each build takes and its cost on GitHub’s larger runners. Last but not least: I’ll compare how much time we save during the build cycle and square that with how much more time developers would have to be productive to find the true business cost.

The logic here is that developers could either be waiting the entire time a build runs or end up context-switching to work on something else while a build runs. Both of these impact overall productivity (more on this below).

To simplify my calculations, I took the average runtimes of two builds per compute tier.

| Pro tip: You can find my full spreadsheet for these calculations here if you want to copy it and play with the numbers yourself using other costs, times for builds, developer salaries, etc. |

How much slow build times cost companies

In scenario number one of our experiment, we’ll assume that developers may just wait for a build to run and do nothing else during that time frame. That’s not a great outcome, but it happens.

So, what does this cost a business? According to Stack Overflow’s 2022 Developer Survey, the average annual cost of a developer in the United States is approximately $150,000, including fringe benefits, taxes, and so on. That breaks down to around $75 (USD) an hour, which implies roughly 2,000 working hours a year. In short, if a developer is waiting on a build to run for one hour and doing nothing in that timeframe, the business is still spending $75 on average for that developer’s time, and potentially losing out on time that developer could spend writing more code.

Now for the fun part: calculating the runtimes and cost to execute a build using each tier of compute power, plus the cost of a developer’s time spent waiting on the build. (And remember, I ran each of these twice at each tier and then averaged the results together.)

You end up with something like this:

| Compute power | Fedora 35 build (h:mm:ss) | Fedora 36 build (h:mm:ss) | Average time (minutes) | Cost/minute for compute | Total cost of 1 build | Developer cost (1 dev) | Developer cost (5 devs) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 2 core | 5:24:27 | 4:54:02 | 310 | $0.008 | $2.48 | $389.98 | $1,939.98 |
| 4 core | 2:46:33 | 2:57:47 | 173 | $0.016 | $2.77 | $219.02 | $1,084.02 |
| 8 core | 1:32:13 | 1:30:41 | 92 | $0.032 | $2.94 | $117.94 | $577.94 |
| 16 core | 0:54:31 | 0:54:14 | 55 | $0.064 | $3.52 | $72.27 | $347.27 |
| 32 core | 0:36:21 | 0:32:21 | 35 | $0.128 | $4.48 | $48.23 | $223.23 |
| 64 core | 0:29:25 | 0:24:24 | 27 | $0.256 | $6.91 | $40.66 | $175.66 |
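The developer cost columns can be reproduced from the other columns: each figure is the compute cost of the build plus the developers' time for the full build duration at $75 an hour. A small sketch (the method and constant names are ours, not taken from the linked spreadsheet):

```ruby
DEVELOPER_HOURLY_RATE = 75.0 # USD, from the salary estimate above

# Cost of one build when `developers` people wait for its full duration:
# compute cost plus labor cost, rounded to cents.
def waiting_cost(minutes:, compute_rate_per_minute:, developers: 1)
  compute = minutes * compute_rate_per_minute
  labor   = developers * (minutes / 60.0) * DEVELOPER_HOURLY_RATE
  (compute + labor).round(2)
end

tiers = {
  "2 core"  => { minutes: 310, compute_rate_per_minute: 0.008 },
  "64 core" => { minutes: 27,  compute_rate_per_minute: 0.256 },
}

tiers.each do |name, tier|
  one  = waiting_cost(**tier)
  five = waiting_cost(**tier, developers: 5)
  puts format("%-8s 1 dev: $%.2f   5 devs: $%.2f", name, one, five)
end
```

For the 2-core tier this is $2.48 of compute plus 310 minutes of developer time ($387.50), which gives the $389.98 in the table.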

You can immediately see how much faster each build completes on more powerful hardware—and that’s hardly surprising. But it’s striking how much money, on average, a business would be paying their developers in the time it takes for a build to run.

When you plot this out, you end up with a pretty compelling case for spending more money on stronger hardware.

The bottom line: The cost of hardware is much, much less than the total cost for developers, and giving your engineering teams more CPU power means they have more time to develop software instead of waiting on builds to complete. And the bigger the team you have in a given organization, the more upside you have to invest in more capable compute resources.

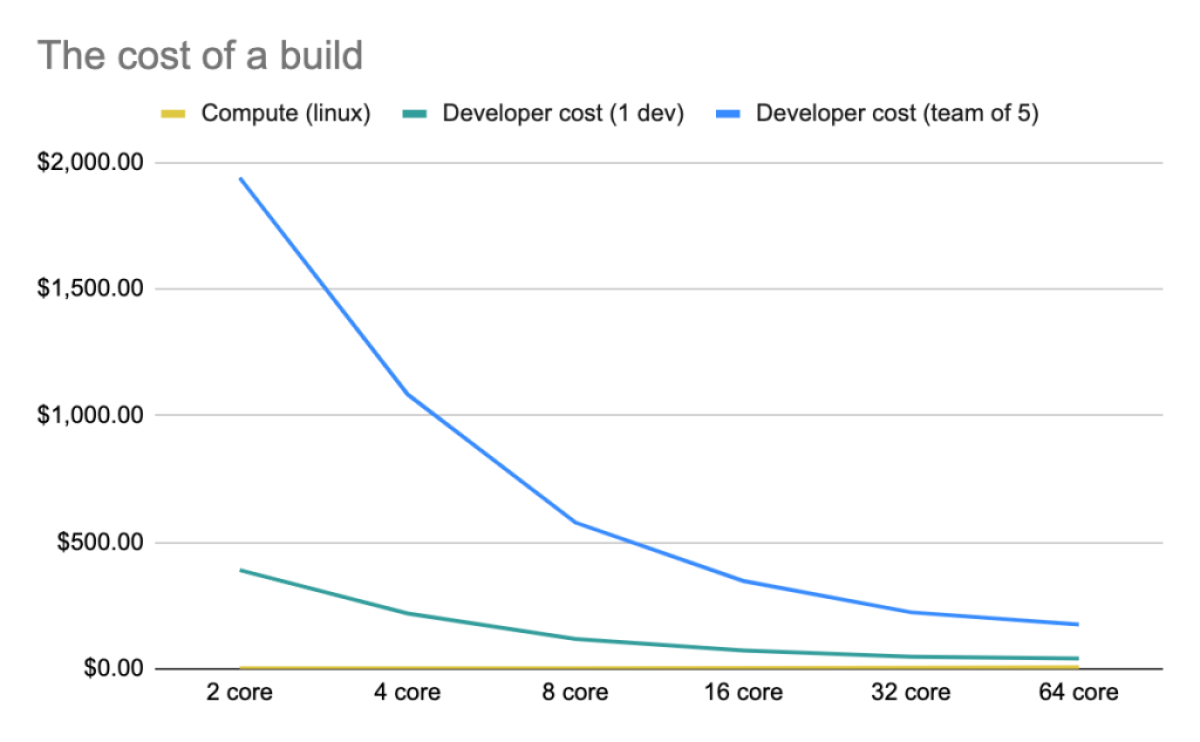

How much context switching costs companies

Now let’s change the scenario in our experiment: Instead of assuming that developers are sitting idly while waiting for a build to finish, let’s consider they instead start working on another task while a build runs.

This is a classic example of context switching, and it comes with a cost, too. Research has found that context switching is both distracting and an impediment to focused and productive work. In fact, Gloria Mark, a professor of informatics at the University of California, Irvine, has found it takes about 23 minutes for someone to get back to their original task after context switching—and that isn’t even specific to development work, which often entails deeply involved work.

Based on my own experience, switching from one focused task to another takes at least an hour so that’s what I used to run the numbers against. Now, let’s break down the data again:

| Compute power | Minutes | Cost of 1 build | Partial developer cost (1 dev) | Partial developer cost (5 devs) |
| --- | --- | --- | --- | --- |
| 2 core | 310 | $2.48 | $77.48 | $377.48 |
| 4 core | 173 | $2.77 | $77.77 | $377.77 |
| 8 core | 92 | $2.94 | $77.94 | $377.94 |
| 16 core | 55 | $3.52 | $78.52 | $378.52 |
| 32 core | 35 | $4.48 | $79.48 | $379.48 |
| 64 core | 27 | $6.91 | $81.91 | $381.91 |

Here, the numbers tell a different story—that is, if you’re going to switch tasks anyways, the speed of build runs doesn’t significantly matter. Labor is much, much more expensive than compute resources. And that means spending a few more dollars to speed up the build is inconsequential in the long run.

Of course, this assumes it will take an hour for developers to get back on track after context switching. But according to the research we cited above, some people can get back on track in 23 minutes (and, additional research from Cornell found that it sometimes takes as little as 10 minutes).

To account for this, let’s try shortening the time frames to 30 minutes and 15 minutes:

| Compute power | Minutes | Cost of 1 build | Partial dev cost (1 dev, 30 mins) | Partial dev cost (5 devs, 30 mins) | Partial dev cost (1 dev, 15 mins) | Partial dev cost (5 devs, 15 mins) |
| --- | --- | --- | --- | --- | --- | --- |
| 2 core | 310 | $2.48 | $39.98 | $189.98 | $21.23 | $96.23 |
| 4 core | 173 | $2.77 | $40.27 | $190.27 | $21.52 | $96.52 |
| 8 core | 92 | $2.94 | $40.44 | $190.44 | $21.69 | $96.69 |
| 16 core | 55 | $3.52 | $41.02 | $191.02 | $22.27 | $97.27 |
| 32 core | 35 | $4.48 | $41.98 | $191.98 | $23.23 | $98.23 |
| 64 core | 27 | $6.91 | $44.41 | $194.41 | $25.66 | $100.66 |
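In the context-switching scenario the developer cost no longer depends on how long the build runs. Each figure is the build's compute cost plus a fixed recovery time (60, 30, or 15 minutes) per developer at $75 an hour. A sketch of that calculation, with names of our own choosing:

```ruby
DEV_RATE_PER_HOUR = 75.0 # USD

# Cost of one build when developers switch tasks instead of waiting:
# compute cost plus a fixed recovery time per developer, rounded to cents.
def context_switch_cost(build_cost:, switch_minutes:, developers: 1)
  labor = developers * (switch_minutes / 60.0) * DEV_RATE_PER_HOUR
  (build_cost + labor).round(2)
end

[60, 30, 15].each do |switch_minutes|
  two_core   = context_switch_cost(build_cost: 2.48, switch_minutes: switch_minutes)
  sixty_four = context_switch_cost(build_cost: 6.91, switch_minutes: switch_minutes)
  puts format("%2d min switch: 2 core $%.2f, 64 core $%.2f", switch_minutes, two_core, sixty_four)
end
```

The gap between the cheapest and most expensive tier stays at the difference in compute cost ($6.91 minus $2.48), which is why the rows in these tables differ so little.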

And when you visualize this data on a graph, the cost for a single developer waiting on a build or switching tasks looks like this:

When you assume the average hourly rate of a developer is $75 (USD), the graph above shows that it almost always makes sense to pay more for more compute power so your developers aren’t left waiting or context switching. Even the most expensive compute option, about $15 an hour for 64 cores and 256GB of RAM, accounts for only a fifth of the hourly cost of a single developer’s time. And if developer salaries rise, hardware costs fall, or jobs run faster, the ratio tilts even further in favor of buying better equipment.

That’s something to consider.

The bottom line

It’s cheaper—and less frustrating for your developers—to pay more for better hardware to keep your team on track.

In this case, spending an extra $4-5 on build compute saves about $40 per build for an individual developer, or a little over $200 per build for a team of five, and the frustration of switching tasks with a productivity cost of about an hour. That’s not nothing. Of course, spending that extra $4-5 at scale can quickly compound—but so can the cost of sunk productivity.

Even though we used GitHub’s larger runners as an example here, these findings are applicable to any type of hardware—whether self-hosted or in the cloud. So remember: The upfront cost for more CPU power pays off over time. And your developers will thank you (trust us).

Want to try our new high-performance GitHub-hosted runners? Sign up for the beta today.


The post How GitHub converts previously encrypted and unencrypted columns to ActiveRecord encrypted columns appeared first on The GitHub Blog.

In the first post in this series, we detailed how we designed our easy‐to‐use column encryption paved path. We found during the rollout that the bulk of time and effort was spent in robustly supporting the reading and upgrading of previous encryption formats/plaintext and key rotation. In this post, we’ll explain the design decisions we made in our migration plan and describe a simplified migration pattern you can use to encrypt (or re-encrypt) existing records in your Rails application.

We have two cases for encrypted column data migration: upgrading plaintext or previously encrypted data to our new standard, and key rotation.

Upon consulting the Rails documentation to see if there was any prior art we could use, we found the previous encryptor strategy, but exactly how to migrate existing data is, as they say, an “exercise left for the reader.”

Dear reader, lace up your sneakers because we are about to exercise.

To convert plaintext columns or columns encrypted with our deprecated internal encryption library, we used ActiveRecord::Encryption’s previous encryptor strategy, our existing feature flag mechanism and our own type of database migration called a transition. Transitions are used by GitHub to modify existing data, as opposed to migrations that are mainly used to add or change columns. To simplify things and save time, in the example migration strategy, we’ll rely on the Ruby gem, MaintenanceTasks.

Previous encryptor strategy

ActiveRecord::Encryption provides a config option, config.active_record.encryption.support_unencrypted_data, that allows plaintext values in an encrypted attribute to be read without error. This is enabled globally and could be a good strategy to use if you are migrating only plaintext columns and you are going to migrate them all at once. We chose not to use this option because we want to migrate columns to ActiveRecord::Encryption without exposing the ciphertext of other columns if decryption fails. By using a previous encryptor, we can isolate this “plaintext mode” to a single model.

In addition to this, GitHub’s previous encryptor uses a schema validator and regex to make sure that the “plaintext” being returned does not have the same shape as Rails encrypted columns data.
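A simplified version of that shape check might look like the following. It is a sketch and not GitHub's validator: it assumes the default Rails message format, a JSON object with the payload under `"p"` and headers under `"h"`, and the method names are hypothetical.

```ruby
require "json"

# Assumption: Rails' default message serializer stores encrypted values as
# JSON with the payload under "p" and the headers under "h".
def looks_like_rails_ciphertext?(value)
  parsed = JSON.parse(value)
  parsed.is_a?(Hash) && parsed.key?("p") && parsed["h"].is_a?(Hash)
rescue JSON::ParserError, TypeError
  false
end

# Hypothetical decrypt step of a previous encryptor: pass plaintext through,
# but refuse anything shaped like ciphertext.
def read_legacy_plaintext(value)
  if looks_like_rails_ciphertext?(value)
    raise ArgumentError, "value looks encrypted, refusing to treat it as plaintext"
  end

  value
end

puts read_legacy_plaintext("recovery-code-1")
puts looks_like_rails_ciphertext?('{"p":"abc","h":{"iv":"def","at":"ghi"}}')
```

Rejecting ciphertext-shaped values here means a failed decryption elsewhere cannot silently fall through and be returned as if it were plaintext.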

Feature flag strategy

We wanted to have fine-grained control to safely roll out our new encryption strategy, as well as the ability to completely disable it in case something went wrong, so we created our own custom type using the ActiveModel::Type API, which would only perform encryption when the feature flag for our new column encryption strategy was disabled.

A common feature flag strategy would be to start a feature flag at 0% and gradually ramp it up to 100% while you observe and verify the effects on your application. Once a flag is verified at 100%, you would remove the feature flag logic and delete the flag. To gradually increase a flag on column encryption, we would need to have an encryption strategy that could handle plaintext and encrypted records both back and forth because there would be no way to know if a column was encrypted without attempting to read it first. This seemed like unnecessary additional and confusing work, so we knew we’d want to use flagging as an on/off switch.

While a feature flag should generally not be long running, we needed the feature flag logic to be long running because we want it to be available for GitHub developers who will want to upgrade existing columns to use ActiveRecord::Encryption.

This is why we chose to invert the usual feature flag default to give us the flexibility to upgrade columns incrementally without introducing unnecessary long‐running feature flags. This means we set the flag at 100% to prevent records from being encrypted with the new standard and set it to 0% to cause them to be encrypted with our new standard. If for some reason we are unable to prioritize upgrading a column, other columns do not need to be flagged at 100% to continue to be encrypted on our new standard.

We added this logic to our monkeypatch of ActiveRecord::Base::encrypts method to ensure our feature flag serializer is used:

Code sample 1

    self.attribute(attribute) do |cast_type|
      GitHub::Encryption::FeatureFlagEncryptedType.new(cast_type: cast_type, attribute_name: attribute, model_name: self.name)
    end

Which instantiates our new ActiveRecord Type that checks for the flag in its serialize method:

Code sample 2

    # frozen_string_literal: true

    module GitHub
      module Encryption
        class FeatureFlagEncryptedType < ::ActiveRecord::Type::Text
          attr_accessor :cast_type, :attribute_name, :model_name

          # delegate: a method to make a call to `this_object.foo.bar` into `this_object.bar` for convenience
          # deserialize: Take a value from the database, and make it suitable for Rails
          # changed_in_place?: determine if the value has changed and needs to be rewritten to the database
          delegate :deserialize, :changed_in_place?, to: :cast_type

          def initialize(cast_type:, attribute_name:, model_name:)
            raise RuntimeError, "Not an EncryptedAttributeType" unless cast_type.is_a?(ActiveRecord::Encryption::EncryptedAttributeType)

            @cast_type = cast_type
            @attribute_name = attribute_name
            @model_name = model_name
          end

          # Take a value from Rails and make it suitable for the database
          def serialize(value)
            if feature_flag_enabled?("encrypt_as_plaintext_#{model_name.downcase}_#{attribute_name.downcase}")
              # Fall back to plaintext (ignore the encryption serializer)
              cast_type.cast_type.serialize(value)
            else
              # Perform encryption via active record encryption serializer
              cast_type.serialize(value)
            end
          end
        end
      end
    end

A caveat to this implementation is that we extended from ActiveRecord::Type::Text, which extends from ActiveModel::Type::String, which implements changed_in_place? by checking whether the new_value is a string and, if it is, doing a string comparison to determine whether the value was changed.

We ran into this caveat during the rollout of our new encrypted columns. When migrating a column previously encrypted with our internal encryption library, we found that changed_in_place? would compare the decrypted plaintext value to the encrypted value stored in the database, always marking the record as changed in place because the two were never equal. When we migrated one of our fields related to 2FA recovery codes, this had the unexpected side effect of causing them all to appear changed in our audit log logic, which created false alerts in customer-facing security logs. Fortunately, though, there was no impact to data, and our authentication team annotated the false alerts to indicate this to affected customers.

To address the cause, we delegated the changed_in_place? to the cast_type, which in this case will always be ActiveRecord::Encryption::EncryptedAttributeType that attempts to deserialize the previous value before comparing it to the new value.
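The difference between the two comparisons can be shown without Rails. In this sketch the `toy_encrypt` and `toy_decrypt` helpers are hypothetical stand-ins (reversing a string is not encryption); they only make the stored value differ from the plaintext, which is all the comparison depends on.

```ruby
# Toy stand-in for encryption so the example runs without Rails.
def toy_encrypt(plaintext)
  "enc:" + plaintext.reverse
end

def toy_decrypt(raw)
  raw.delete_prefix("enc:").reverse
end

# What a plain string type does: compare the raw database value as-is.
def string_changed_in_place?(raw_old_value, new_value)
  raw_old_value != new_value
end

# What delegating to the encrypted type achieves: decrypt first, then compare.
def encrypted_changed_in_place?(raw_old_value, new_value)
  toy_decrypt(raw_old_value) != new_value
end

stored  = toy_encrypt("recovery-code-1") # value as it sits in the database
current = "recovery-code-1"              # attribute was read, not modified

puts string_changed_in_place?(stored, current)    # true: a false positive
puts encrypted_changed_in_place?(stored, current) # false: correctly unchanged
```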

Key rotation

ActiveRecord::Encryption accommodates a list of keys so that the most recent one is used to encrypt records, while every entry in the list is tried on decryption until one succeeds or an ActiveRecord::Encryption::Errors::Decryption error is raised. On its own, this ensures that once you add a new key, records updated afterward are automatically re-encrypted with the new key.

This functionality allows us to reuse our migration strategy (see code sample 5) to re-encrypt all records on a model with the new encryption key. We do this simply by adding a new key and running the migration to re-encrypt.
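The key list behavior can be illustrated with a toy key ring built on Ruby's OpenSSL bindings. This is not ActiveRecord::Encryption's implementation: the `KeyRing` class and its message hash are hypothetical, and only the rule "encrypt with the newest key, try every key to decrypt" is taken from the description above.

```ruby
require "openssl"

# Toy key ring: NOT ActiveRecord::Encryption, just the same idea.
class KeyRing
  CIPHER = "aes-256-gcm"

  def initialize(keys)
    @keys = keys # oldest first, newest last
  end

  # Always encrypt with the newest key.
  def encrypt(plaintext)
    cipher = OpenSSL::Cipher.new(CIPHER).encrypt
    cipher.key = @keys.last
    iv = cipher.random_iv
    cipher.auth_data = ""
    data = cipher.update(plaintext) + cipher.final
    { iv: iv, tag: cipher.auth_tag, data: data }
  end

  # Try every key, newest first, until one decrypts the message.
  def decrypt(message)
    @keys.reverse_each do |key|
      begin
        return decrypt_with(key, message)
      rescue OpenSSL::Cipher::CipherError
        next # wrong key, try the next one
      end
    end
    raise ArgumentError, "no key could decrypt the message"
  end

  private

  def decrypt_with(key, message)
    cipher = OpenSSL::Cipher.new(CIPHER).decrypt
    cipher.key = key
    cipher.iv = message[:iv]
    cipher.auth_tag = message[:tag]
    cipher.auth_data = ""
    cipher.update(message[:data]) + cipher.final
  end
end

old_key = OpenSSL::Random.random_bytes(32)
new_key = OpenSSL::Random.random_bytes(32)

legacy  = KeyRing.new([old_key]).encrypt("s3cret")  # written before rotation
rotated = KeyRing.new([old_key, new_key])           # new key appended

# The "migration": read with any key, write back with the newest one.
reencrypted = rotated.encrypt(rotated.decrypt(legacy))
puts KeyRing.new([new_key]).decrypt(reencrypted)
```

Once every record has been rewritten this way, the old key is no longer needed to read any of them, which is what makes it safe to retire.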

Example migration strategy

This section will describe a simplified version of our migration process you can replicate in your application. We use a previous encryptor to implement safe plaintext support and the maintenance_tasks gem to backfill the existing records.

Set up ActiveRecord::Encryption and create a previous encryptor

Because this is a simplified example of our own migration strategy, we recommend using a previous encryptor to restrict the “plaintext mode” of ActiveRecord::Encryption to the specific model(s) being migrated.

Set up ActiveRecord::Encryption by generating random key set:

bin/rails db:encryption:init

And adding it to the encrypted Rails.application.credentials using:

bin/rails credentials:edit

If you do not have a master.key, this command will generate one for you. Remember never to commit your master key!

Create a previous encryptor. Remember, when you provide a previous strategy, ActiveRecord::Encryption will use the previous to decrypt and the current (in this case ActiveRecord’s default encryptor) to encrypt the records.

Code sample 3

app/lib/encryption/previous_encryptor.rb

# frozen_string_literal: true

module Encryption
  class PreviousEncryptor
    def encrypt(clear_text, key_provider: nil, cipher_options: {})
      raise NotImplementedError.new("This method should not be called")
    end

    def decrypt(previous_data, key_provider: nil, cipher_options: {})
      # JSON schema validation
      previous_data
    end
  end
end

Add the previous encryptor to the encrypted column

Code sample 4

app/models/secret.rb

class Secret < ApplicationRecord
  encrypts :code, previous: { encryptor: Encryption::PreviousEncryptor.new }
end

The PreviousEncryptor will allow plaintext records to be read as plaintext but will encrypt all new records up until and while the task is running.

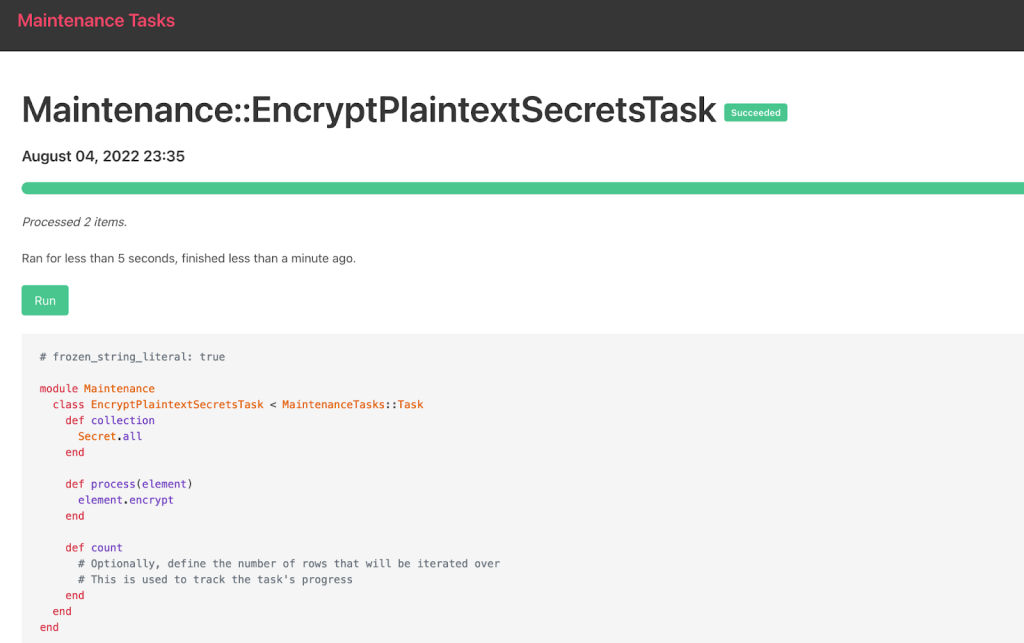
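The read path described above can be sketched roughly in plain Ruby. Everything here is a hypothetical stand-in: ToyCurrentEncryptor uses a Base64 placeholder instead of real encryption, and ToyScheme only imitates the "try the current encryptor, then fall back to the previous one" idea.

```ruby
# Stand-in for the current encryptor. The "enc:" prefix and Base64 body are
# placeholders so that encrypted values can be told apart from plaintext.
class ToyCurrentEncryptor
  PREFIX = "enc:"

  def encrypt(clear_text)
    PREFIX + [clear_text].pack("m0")
  end

  def decrypt(stored)
    raise ArgumentError, "not in the current format" unless stored.start_with?(PREFIX)

    stored.delete_prefix(PREFIX).unpack1("m0")
  end
end

# Mirrors the previous encryptor above: it never encrypts and returns
# legacy plaintext unchanged.
class ToyPreviousEncryptor
  def encrypt(_clear_text)
    raise NotImplementedError, "This method should not be called"
  end

  def decrypt(previous_data)
    previous_data
  end
end

class ToyScheme
  def initialize(current:, previous:)
    @current = current
    @previous = previous
  end

  # New writes always go through the current encryptor.
  def write(value)
    @current.encrypt(value)
  end

  # Reads try the current encryptor first and fall back to the previous one.
  def read(stored)
    @current.decrypt(stored)
  rescue ArgumentError
    @previous.decrypt(stored)
  end
end

scheme = ToyScheme.new(current: ToyCurrentEncryptor.new, previous: ToyPreviousEncryptor.new)
puts scheme.read("legacy plaintext")         # legacy row is returned unchanged
puts scheme.read(scheme.write("new secret")) # new rows round-trip through the current encryptor
```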

Install the Maintenance Tasks gem and create a task

Install the Maintenance Tasks gem per the instructions and you will be ready to create the maintenance task.

Create the task.

bin/rails generate maintenance_tasks:task encrypt_plaintext_secrets

In day‐to‐day use, you shouldn’t ever need to call secret.encrypt because ActiveRecord handles the encryption before inserting into the database, but we can use this API in our task:

Code sample 5

app/tasks/maintenance/encrypt_plaintext_secrets_task.rb

# frozen_string_literal: true

module Maintenance
  class EncryptPlaintextSecretsTask < MaintenanceTasks::Task
    def collection
      Secret.all
    end

    def process(element)
      element.encrypt
    end

    …
  end
end

Run the Maintenance Task

Maintenance Tasks provides several options to run the task, but we use the web UI in this example:

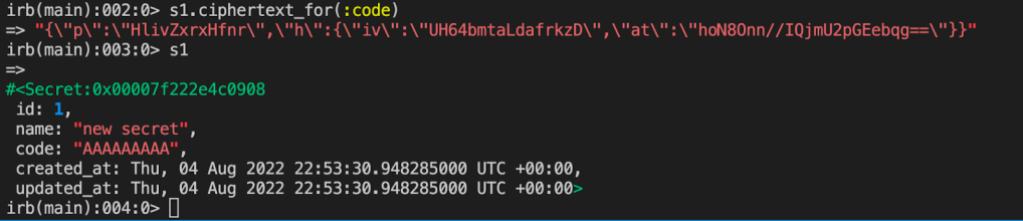

Verify your encryption and cleanup

You can verify encryption in the Rails console, if you like.

And now you should be able to safely remove your previous encryptor leaving the model of your newly encrypted column looking like this:

Code sample 6

app/models/secret.rb

class Secret < ApplicationRecord
  encrypts :code
end

And so can you!

Encrypting database columns is a valuable extra layer of security that can protect sensitive data during exploits, but it’s not always easy to migrate data in an existing application. We wrote this series in the hope that more organizations will be able to plot a clear path forward to using ActiveRecord::Encryption to start encrypting existing sensitive values.

The post How GitHub converts previously encrypted and unencrypted columns to ActiveRecord encrypted columns appeared first on The GitHub Blog.

The post Why and how GitHub encrypts sensitive database columns using ActiveRecord::Encryption appeared first on The GitHub Blog.

You may know that GitHub encrypts your source code at rest, but you may not have known that we also encrypt sensitive database columns in our Ruby on Rails monolith. We do this to provide an additional layer of defense in depth to mitigate concerns, such as:

- Reading or tampering with sensitive fields if a database is inappropriately accessed

- Accidentally exposing sensitive data in logs

Motivation

Until recently, we used an internal library called Encrypted Attributes. GitHub developers would declare that a column should be encrypted using an API that might look familiar if you have used ActiveRecord::Encryption:

class TotpAppRegistration
  encrypted_attribute :encrypted_otp_secret, :plaintext_otp_secret
end

Given that we had an existing implementation, you may be wondering why we chose to take on the work of converting our columns to ActiveRecord::Encryption. Our main motivation was to ensure that developers did not have to learn a GitHub-specific pattern to encrypt their sensitive data.

We believe strongly that using familiar, intuitive patterns results in better adoption of security tools and, by extension, better security for our users.

In addition to exposing some of the implementation details of the underlying encryption, this API did not provide an easy way for developers to encrypt existing columns. Our internal library required a separate encryption key to be generated and stored in our secure environment variable configuration—for each new database column. This created a bottleneck, as most developers don’t work with encryption every day and needed support from the security team to make changes.

When assessing ActiveRecord::Encryption, we were particularly interested in its ease of use for developers. We wanted a developer to be able to write one line of code, and no matter if their column was previously plaintext or used our previous solution, their column would magically start using ActiveRecord::Encryption. The final API looks something like this:

class TotpAppRegistration
  encrypts :encrypted_otp_secret
end

This API is exactly the same as the one used by standard ActiveRecord::Encryption, while hiding all the complexity of making it work at GitHub scale.

How we implemented this

As part of implementing ActiveRecord::Encryption in our monolith, we worked with our architecture and infrastructure teams to make sure the solution met GitHub’s scalability and security requirements. Below is a brief list of some of the customizations we made to fit the implementation to our infrastructure.

As always, there are specific nuances that must be considered when modifying existing encryption implementations, and it is always a good practice to review any new cryptography code with a security team.

Secure primary key storage

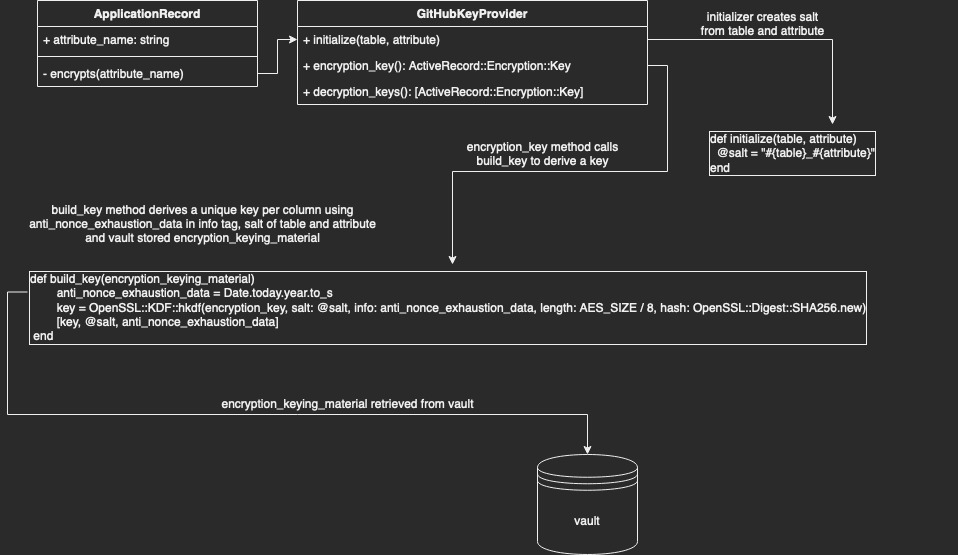

By default, Rails uses its built-in credentials.yml.enc file to securely store the primary key and static salt used for deriving the column encryption key in ActiveRecord::Encryption.

GitHub’s key management strategy for ActiveRecord::Encryption differs from the Rails default in two key ways: deriving a separate key per column and storing the key in our centralized secret management system.

Deriving per-column keys from a single primary key

As explained above, one of the goals of this transition was to no longer bottleneck teams by managing keys manually. We did, however, want to maintain the security properties of separate keys. Thankfully, cryptography experts have created a primitive known as a Key Derivation Function (KDF) for this purpose. These functions take (roughly) three important parameters: the primary key, a unique salt, and a string termed “info” by the spec.

Our salt is simply the table name, an underscore, and the attribute name. So for TotpAppRegistration#encrypted_otp_secret the salt would be totp_app_registrations_encrypted_otp_secret. This ensures the key is different per column.

Due to the specifics of the ActiveRecord::Encryption algorithm (AES-256-GCM), we need to be careful not to encrypt too many values using the same key (to avoid nonce reuse). We use the “info” string parameter to ensure the key for each column changes automatically at least once per year: we populate the info input with the current year, which acts as a nonce during key derivation.
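As a rough illustration of these inputs, the sketch below derives a per-column key with Ruby's OpenSSL::KDF.hkdf. The choice of HKDF with SHA-256, the 32-byte output length, and the derive_column_key helper are assumptions made for this example; the random primary key is a placeholder for a key that would come from a secret store.

```ruby
require "openssl"

# Hypothetical helper: derives a 32-byte key for one column and one year.
def derive_column_key(primary_key, table:, attribute:, year: Time.now.utc.year)
  OpenSSL::KDF.hkdf(
    primary_key,
    salt: "#{table}_#{attribute}", # e.g. totp_app_registrations_encrypted_otp_secret
    info: year.to_s,               # changes the derived key once per year
    length: 32,                    # AES-256 needs a 32-byte key
    hash: "SHA256"
  )
end

primary_key = OpenSSL::Random.random_bytes(32) # placeholder primary key

this_year = derive_column_key(primary_key, table: "totp_app_registrations",
                                           attribute: "encrypted_otp_secret", year: 2022)
next_year = derive_column_key(primary_key, table: "totp_app_registrations",
                                           attribute: "encrypted_otp_secret", year: 2023)

puts this_year.bytesize     # 32
puts this_year == next_year # false: a new year yields a new key
```

Because the derivation is deterministic, no per-column key has to be stored: the same primary key, salt, and year always produce the same column key.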

The applications that make up GitHub store secrets in HashiCorp Vault. To conform with this pre-existing pattern, we wanted to pull our primary key from Vault instead of the credentials.yml.enc file. To accommodate this, we wrote a custom key provider that behaves similarly to the default DerivedSecretKeyProvider, retrieving the key from Vault and deriving the key with our KDF (see Diagram 1).

Making new behavior the default

One of our team’s key principles is that solutions we develop should be intuitive and not require implementation knowledge on the part of the product developer. ActiveRecord::Encryption includes functionality to customize the Encryptor used to encrypt data for a given column. This functionality would allow developers to optionally use the strategies described above, but to make it the default for our monolith we needed to override the encrypts model helper to automatically select an appropriate GitHub-specific key provider for the user.

def self.encrypts(*attributes, key_provider: nil, previous: nil, **options)
  # snip: ensure only one attribute is passed
  # ...

  # pull out the sole attribute
  attribute = attributes.sole

  # snip: ensure if a key provider is passed, that it is a GitHubKeyProvider
  # ...

  # If no key provider is set, instantiate one
  kp = key_provider || GitHub::Encryption::GitHubKeyProvider.new(table: table_name.to_sym, attribute: attribute)

  # snip: logic to ensure previous encryption formats and plaintext are supported for smooth transition (see part 2)
  # github_previous = ...

  # call to rails encryption
  super(attribute, key_provider: kp, previous: github_previous, **options)
end

Currently, we only provide this API to developers working on our internal github.com codebase. As we work with the library, we are experimenting with upstreaming this strategy to ActiveRecord::Encryption by replacing the per-class encryption scheme with a per-column encryption scheme.

Turn off compression by default

Compressing values prior to encryption can reveal some information about the content of the value. For example, a value with more repeated bytes, such as “abcabcabc,” will compress better than a string of the same length with no repetition, such as “abcdefghi”. In addition to the common encryption property that ciphertext generally exposes the length, this exposes additional information about the entropy (randomness) of the underlying plaintext.
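A quick way to see this effect is to compare compressed sizes for the two example strings. The sketch below uses Ruby's Zlib purely as a convenient compressor; deflated_size is a hypothetical helper for this example.

```ruby
require "zlib"

# Returns the size in bytes of the zlib-deflated form of a value.
def deflated_size(value)
  Zlib::Deflate.deflate(value).bytesize
end

repetitive = "abcabcabc"
varied     = "abcdefghi"

[repetitive, varied].each do |value|
  puts "#{value}: #{value.bytesize} bytes raw, #{deflated_size(value)} bytes deflated"
end

# Same input length, but the repetitive value deflates to fewer bytes, so an
# observer who sees only output lengths learns something about the plaintext.
puts deflated_size(repetitive) < deflated_size(varied)
```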

ActiveRecord::Encryption compresses data by default for storage efficiency purposes, but since the values we are encrypting are relatively small, we did not feel this tradeoff was worth it for our use case. This is why we replaced the default behavior of compressing values before encryption with a flag that makes compression optional.

Migrating to a new encryption standard: the hard parts

This post illustrates some of the design decisions and tradeoffs we encountered when choosing ActiveRecord::Encryption, but it’s not quite enough information to guide developers of existing applications to start encrypting columns. In the next post in this series we’ll show you how we handled the hard parts—how to upgrade existing columns in your application from plaintext or possibly another encryption standard.


The post Improve Git monorepo performance with a file system monitor appeared first on The GitHub Blog.

In a very large worktree, Git commands such as git status and git add can feel slow. These commands are slow because they need to search the entire worktree looking for changes. When the worktree is very large, Git needs to do a lot of work.

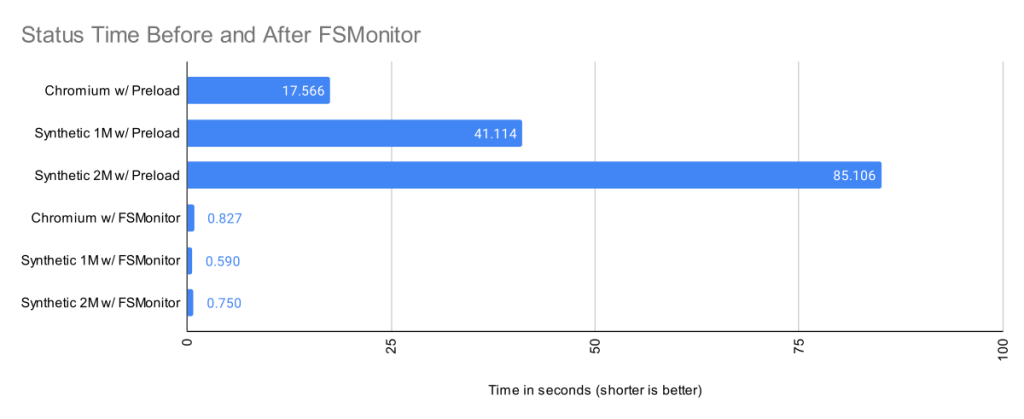

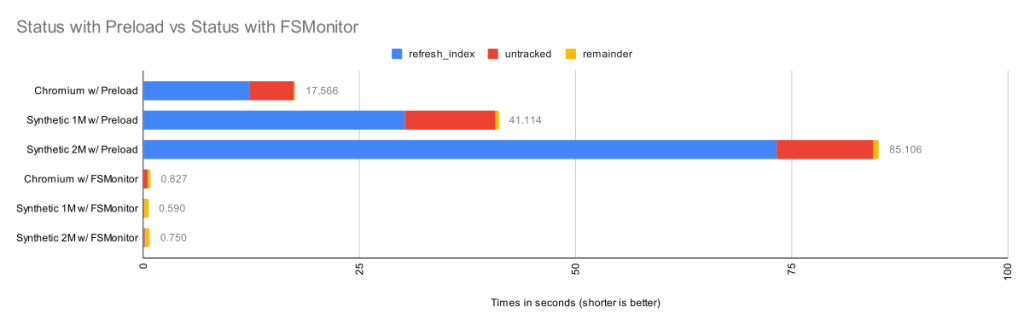

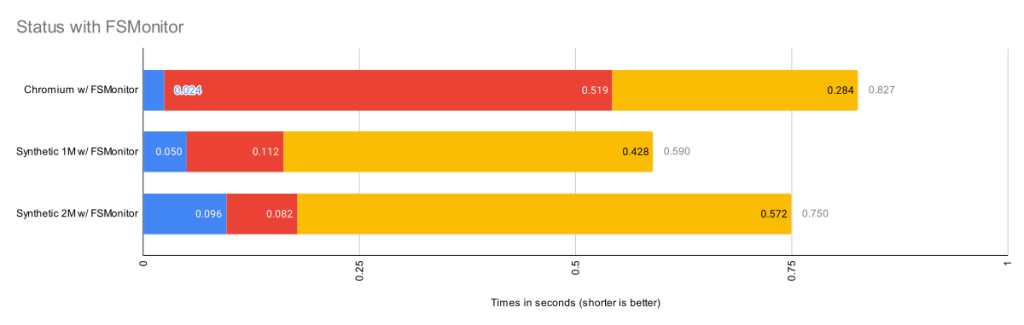

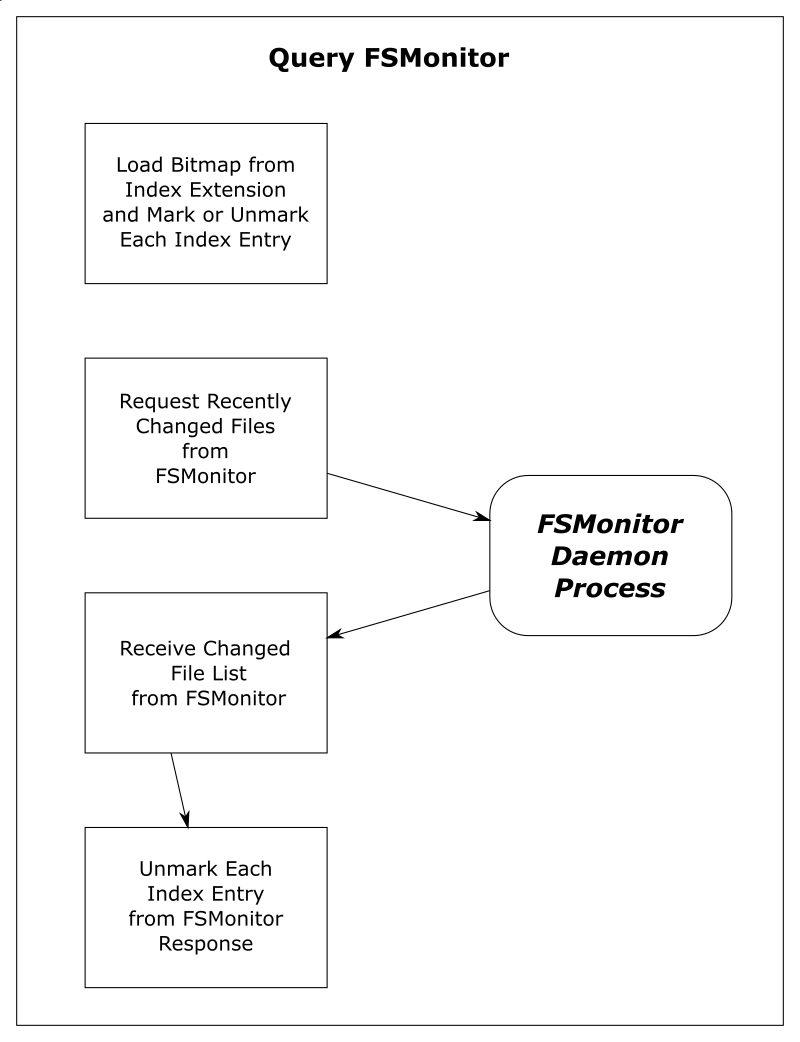

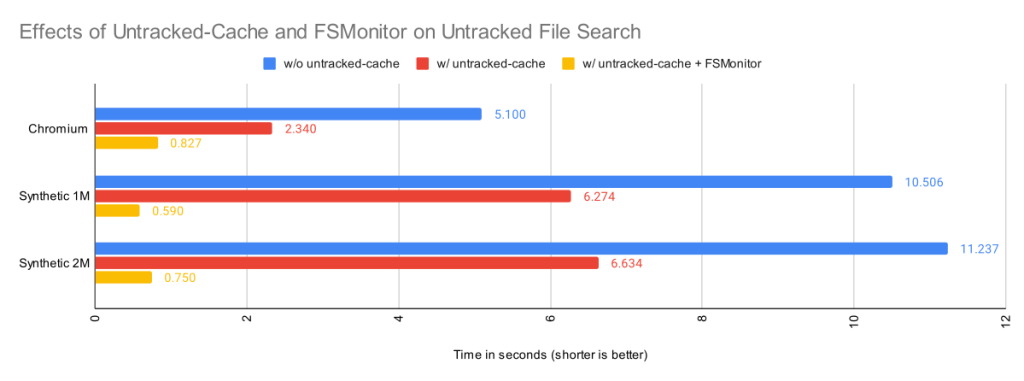

The Git file system monitor (FSMonitor) feature can speed up these commands by reducing the size of the search, and this can greatly reduce the pain of working in large worktrees. For example, this chart shows status times dropping to under a second on three different large worktrees when FSMonitor is enabled!

In this article, I want to talk about the new builtin FSMonitor, git fsmonitor--daemon, added in Git version 2.37.0. It is easy to set up and use since it is “in the box” and does not require any third-party tooling or additional software. It only requires a config change to enable it. It is currently available on macOS and Windows.

To enable the new builtin FSMonitor, just set core.fsmonitor to true. A daemon will be started automatically in the background by the next Git command.

FSMonitor works well with core.untrackedcache, so we’ll also turn it on for the FSMonitor test runs. We’ll talk more about the untracked-cache later.

$ time git status

On branch main

Your branch is up to date with 'origin/main'.

It took 5.25 seconds to enumerate untracked files. 'status -uno'

may speed it up, but you have to be careful not to forget to add

new files yourself (see 'git help status').

nothing to commit, working tree clean

real 0m17.941s

user 0m0.031s

sys 0m0.046s

$ git config core.fsmonitor true

$ git config core.untrackedcache true

$ time git status

On branch main

Your branch is up to date with 'origin/main'.

It took 6.37 seconds to enumerate untracked files. 'status -uno'

may speed it up, but you have to be careful not to forget to add

new files yourself (see 'git help status').

nothing to commit, working tree clean

real 0m19.767s

user 0m0.000s

sys 0m0.078s

$ time git status

On branch main

Your branch is up to date with 'origin/main'.

nothing to commit, working tree clean

real 0m1.063s

user 0m0.000s

sys 0m0.093s

$ git fsmonitor--daemon status

fsmonitor-daemon is watching 'C:/work/chromium'

Note that when the daemon first starts up, it needs to synchronize with the state of the index, so the next git status command may be just as slow (or slightly slower) than before, but subsequent commands should be much faster.

In this article, I’ll introduce the new builtin FSMonitor feature and explain how it improves performance on very large worktrees.

How FSMonitor improves performance

Git has a “What changed while I wasn’t looking?” problem. That is, when you run a command that operates on the worktree, such as git status, it has to discover what has changed relative to the index. It does this by searching the entire worktree. Whether you immediately run it again or run it again tomorrow, it has to rediscover all of that same information by searching again. Whether you edit zero, one, or a million files in the meantime, the next git status command has to do the same amount of work to rediscover what (if anything) has changed.
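The cost difference can be illustrated with a toy model in Ruby. This is not how Git's index or FSMonitor is implemented: the hashes, full_scan, monitored_scan, and the reported_paths list (standing in for whatever a monitor would report) are all hypothetical.

```ruby
# Toy model: the "worktree" maps path => content version, and the "index"
# records the version that was last seen for each path.

# Checks every path, no matter how few files actually changed.
def full_scan(index, worktree)
  checked = 0
  changed = worktree.each_with_object([]) do |(path, version), acc|
    checked += 1
    acc << path if index[path] != version
  end
  [changed, checked]
end

# Checks only the paths that a monitor reported as touched.
def monitored_scan(index, worktree, reported_paths)
  checked = 0
  changed = reported_paths.select do |path|
    checked += 1
    index[path] != worktree[path]
  end
  [changed, checked]
end

index = (1..100_000).to_h { |i| ["src/file#{i}.c", 1] }
worktree = index.dup
worktree["src/file42.c"] = 2 # a single edit

full_changed, full_checked = full_scan(index, worktree)
fast_changed, fast_checked = monitored_scan(index, worktree, ["src/file42.c"])

puts "full scan:      #{full_checked} paths checked, changed: #{full_changed.inspect}"
puts "monitored scan: #{fast_checked} paths checked, changed: #{fast_changed.inspect}"
```

Both scans find the same changed file, but the full scan's work grows with the size of the worktree while the monitored scan's work grows with the number of reported paths.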