Peer Reviewed

Breaking Harmony Square: A game that “inoculates” against political misinformation

We present Harmony Square, a short, free-to-play online game in which players learn how political misinformation is produced and spread. We find that the game confers psychological resistance against manipulation techniques commonly used in political misinformation: players from around the world find social media content making use of these techniques significantly less reliable after playing, are more confident in their ability to spot such content, and less likely to report sharing it with others in their network.

Research Questions

- Does playing Harmony Square make people better at spotting manipulation techniques commonly used in political misinformation?

- Does playing the game increase people’s confidence in their ability to spot such manipulation techniques in social media content?

- Does playing the game reduce people’s self-reported willingness to share manipulative social media content with people in their network?

Essay Summary

- In collaboration with the Dutch media collective DROG, design agency Gusmanson, Park Advisors, the U.S. Department of State’s Global Engagement Center and the Department of Homeland Security, we created a 10-minute, free online browser game called Harmony Square.

- Drawing on “inoculation theory,” the game functions as a psychological “vaccine” by exposing people to weakened doses of the common techniques used in political misinformation, especially during elections.

- The game incorporates active experiential learning through a perspective-taking exercise: players are tasked with spreading misinformation and fomenting internal divisions in the quiet, peaceful neighborhood of Harmony Square.

- Over the course of 4 levels, players learn about 5 manipulation techniques commonly used to spread political misinformation: trolling, using emotional language, polarizing audiences, spreading conspiracy theories, and artificially amplifying the reach of their content through bots and fake likes.

- In a mixed randomized controlled trial (international sample, N = 681), we tested whether playing Harmony Square (a) improves people’s ability to spot both “real” and “fictional” misinformation, (b) increases their confidence in their own judgments, and (c) makes them less likely to report sharing such content within their network.

- Overall, we find that people who play the game find misinformation significantly less reliable after playing, are significantly more confident in their assessment, and are significantly less likely to report sharing misinformation, supporting Harmony Square’s effectiveness as a tool to inoculate people against online manipulation.

Implications

Harmony Square is an interactive social impact game about election misinformation (it can be played in any browser at https://www.harmonysquare.game). The goal of the game is to reveal the tactics and manipulation techniques that fake news producers use to mislead their audience, build a following, and exploit societal tensions to achieve a political goal. The game is set in Harmony Square, a peaceful place whose residents have a healthy obsession with democracy. At the start of the game, players are hired as the square’s Chief Disinformation Officer. Their job is to ruin the square’s idyllic state by fomenting internal divisions and pitting its residents against each other, all while gathering as many “likes” as they can. To keep the doses of the informational “virus” sufficiently weakened, Harmony Square uses humor throughout. For example, players can share humorous messages in a fictional social network, and are shown entertaining headlines in a news ticker at the top of the screen (see the second panel in Figure 1). Besides increasing the game’s entertainment value, the use of humor in inoculation interventions may also decrease reactance, i.e., resistance to voluntarily engaging with the intervention (Compton, 2018; Vraga et al., 2019). Over the course of 4 levels (Trolling, Emotion, Amplification and Escalation), the player’s misinformation campaign gradually turns the square from a peaceful place into full-blown mayhem. Figure 1 shows screenshots of the game’s landing page and game environment.

The game was produced with, and based on, the approach of the US Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA) to understanding foreign interference in elections. In an effort to “strengthen the national immune system for disinformation,” CISA launched a campaign in 2019 that sought to expose common misinformation tactics through the lens of an ostensibly innocent and non-partisan issue: whether or not to put pineapple on pizza (Ward, 2019). Like the “pineapple pizza” campaign, Harmony Square exposes the 5 steps of the election misinformation playbook: targeting divisive issues (in line with the “trolling” and “emotion” scenarios in Harmony Square, in which players learn how to turn an ostensibly neutral issue into a heated and polarizing debate); moving accounts into place; amplifying and distorting the conversation (the “amplification” scenario, in which players learn how bots and fake accounts can be used to amplify the reach of manipulative content); making the mainstream; and taking the conversation into the real world (the “escalation” scenario, in which players learn how to escalate online debates into real-world action) (CISA, 2019).

Harmony Square builds on the success of our other gamified anti-misinformation interventions, such as the Bad News game (Roozenbeek & van der Linden, 2019; Roozenbeek, van der Linden, et al., 2020), which can be played at https://www.getbadnews.com. Like Bad News, Harmony Square builds cognitive resistance against common forms of manipulation that people may encounter online by preemptively warning people and exposing them to weakened doses of these techniques in a controlled environment. Unlike Bad News, Harmony Square is an election game that focuses specifically on how misinformation can be used to achieve political polarization (Bessi et al., 2016; Shao et al., 2017), for example by fueling outgroup hostility, a critical element of both organic misinformation and targeted disinformation campaigns, particularly during contentious political events such as the 2020 US presidential election (Groshek & Koc-Michalska, 2017; Hindman & Barash, 2018; Iyengar & Massey, 2018; Keller et al., 2020).

The idea of psychological “vaccines” is known as inoculation theory and was developed in the 1960s by the psychologist William McGuire (McGuire, 1964; McGuire & Papageorgis, 1961b). It follows a medical analogy: a vaccine is usually a weakened version of a particular pathogen which, after being introduced to the body, induces the production of antibodies, preventing an individual from becoming sick when exposed to the real illness. Inoculation theory posits that the same can be achieved with (malicious) persuasion attempts: preemptively exposing someone to a weakened version of a particular misleading argument prompts a process akin to the production of “mental antibodies,” which make it less likely that the person is persuaded by the “real” manipulation later on (Compton, 2013; van der Linden et al., 2017). During gameplay, players are exposed to weakened doses of manipulation techniques by stepping into the shoes of a fake news producer, with the aim of triggering the production of psychological antibodies.

Instead of focusing on specific examples, also known as issue-based inoculation (which has been the standard in inoculation research), Harmony Square builds cognitive resistance against the techniques that underpin a whole range of political misinformation, in an attempt to achieve broad-spectrum resistance against manipulation (Basol et al., 2020; Cook et al., 2017). The game functions as a perspective-taking exercise (i.e., it puts the player in the position of a fake news creator), an approach known as “active inoculation” (McGuire & Papageorgis, 1961a; Roozenbeek & van der Linden, 2018). Actively involving individuals in the inoculation process by playing a game, as opposed to subjecting them to a more passive reading exercise, has the potential advantage of improving retention in memory and increasing the longevity of the inoculation effect (Compton & Pfau, 2005; Maertens et al., 2020; Roozenbeek & van der Linden, 2019).

By playing through the 4 levels in Harmony Square, players learn about 5 manipulation techniques, all of which are common features of political misinformation (van der Linden & Roozenbeek, 2020):

- Trolling people, i.e., deliberately provoking people to react emotionally, thus evoking outrage (see McCosker, 2014; Roozenbeek & van der Linden, 2019).

- Exploiting emotional language, i.e., trying to make people afraid or angry about a particular topic (Brady et al., 2017; Zollo et al., 2015).

- Artificially amplifying the reach and popularity of certain messages, for example through social media bots or by buying fake followers (McKew, 2018; Shao et al., 2017).

- Creating and spreading conspiracy theories, i.e., blaming a small, secretive and nefarious organization for events going on in the world (Lewandowsky et al., 2013; van der Linden, 2015).

- Polarizing audiences by deliberately emphasizing and magnifying inter-group differences (Iyengar & Massey, 2018; Prior, 2013).

In this study, we find that people who played Harmony Square rated manipulative social media posts making use of the above techniques as less reliable after playing, were more confident in their ability to spot such content, and, importantly, were less likely to report sharing it in their social network. This finding held both for “real” manipulative content that has gone viral online in the past and for “fictional” content that participants in our study had never seen before. These findings highlight the potential of social impact games as an effective approach to countering misleading, fake, or manipulative content proliferating online (Basol et al., 2020; Maertens et al., 2020; Roozenbeek, Schneider, et al., 2020).

In addition, we find that political ideology did not interact with the learning effect conferred by Harmony Square, meaning that the game was effective at teaching manipulation techniques for both liberals and conservatives. This finding that the intervention can be effective across partisan lines is particularly important in light of the polarization of not just the US media landscape, but of the term “fake news” itself (van der Linden et al., 2020).

Specifically, we tested whether people became better at spotting troll posts (i.e., posts that “bait” people into responding emotionally), exploitative emotional language, conspiratorial content, and content that deliberately seeks to polarize different groups. These are all important manipulation techniques used in political misinformation (Roozenbeek & van der Linden, 2019; van der Linden & Roozenbeek, 2020), and they are key components of many organized disinformation campaigns (Bertolin et al., 2017; Cook et al., 2017; Hindman & Barash, 2018). Building cognitive resistance against these techniques at scale could therefore be a powerful tool for reducing the risk that disinformation campaigns affect the democratic process. Recent research has examined the longevity of the inoculation effect conferred by “fake news” games such as Bad News (Maertens et al., 2020), finding that significant inoculation effects remain detectable for at least one week, and much longer when participants are presented with very short reminders or “booster shots.”

In short, we show that Harmony Square is effective at reducing some of the harmful effects that manipulative content can have on individuals. Taking only around 10 minutes to complete, the game conveys critical information about key manipulation techniques in a fun and interactive manner. We note that Harmony Square focuses mostly on political misinformation, and the game is set in a fictional democratic society. Because (political) misinformation is also a significant problem in non-democratic countries, one limitation of the game is its limited applicability in countries that lack free elections. In addition, although research has shown that gamified inoculation effects can persist for months (Maertens et al., 2020), the longevity of the effect of Harmony Square was not evaluated here.

Findings

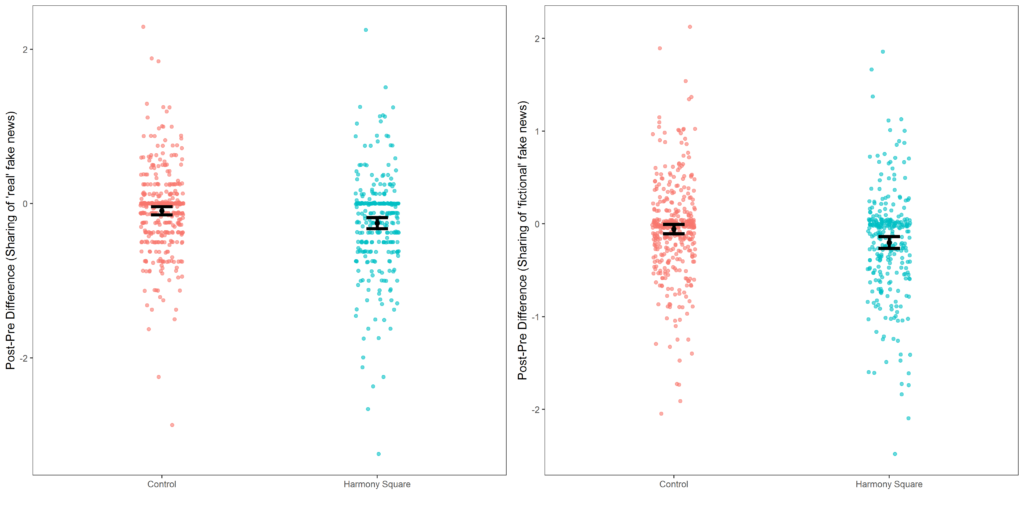

The purpose of this study was to find out whether people who play Harmony Square, compared to a gamified control group (which played Tetris for roughly as long as it takes to complete Harmony Square), 1) find manipulative social media content less reliable after playing; 2) are more confident in their ability to spot such manipulative content; and 3) are less likely to indicate that they are willing to share manipulative social media content in their network. To answer these questions, we first computed, for each participant and each of the 3 outcome measures, the average score across the 8 “real fake news” posts and across the 8 “fictional fake news” posts used as measures (see the “methods” section), both before and after the intervention, and then took the difference between the two. We call this the “pre-post difference score.” We then used an Analysis of Variance (ANOVA) to test whether these difference scores differed significantly between the treatment and control groups for each outcome variable.
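The difference-score procedure just described can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the authors' analysis code (their R scripts are on the OSF); the function names and the toy ratings are hypothetical. For a two-group design, a one-way ANOVA on post-minus-pre difference scores yields the same F as the condition × time interaction in a 2 × 2 mixed ANOVA.

```python
from statistics import mean


def difference_scores(pre, post):
    """Post-minus-pre difference of each participant's item average.

    pre, post: one list of item ratings (e.g. the 8 "real" posts) per
    participant, in the same participant order. Negative values mean the
    participant gave lower ratings after the intervention.
    """
    return [mean(after) - mean(before) for before, after in zip(pre, post)]


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical toy data: 1-7 reliability ratings on 3 items, 3 participants per group.
control_pre = [[4, 5, 4], [3, 4, 4], [5, 5, 4]]
control_post = [[4, 4, 4], [3, 4, 3], [5, 5, 4]]
treatment_pre = [[4, 5, 5], [4, 4, 3], [5, 4, 4]]
treatment_post = [[2, 3, 3], [3, 3, 2], [4, 3, 3]]

d_control = difference_scores(control_pre, control_post)
d_treatment = difference_scores(treatment_pre, treatment_post)
f, df1, df2 = one_way_anova_f(d_control, d_treatment)
print(f"F({df1},{df2}) = {f:.2f}")
```

With the study's 681 participants split into two groups, the same computation gives the F(1,679) degrees of freedom reported below.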

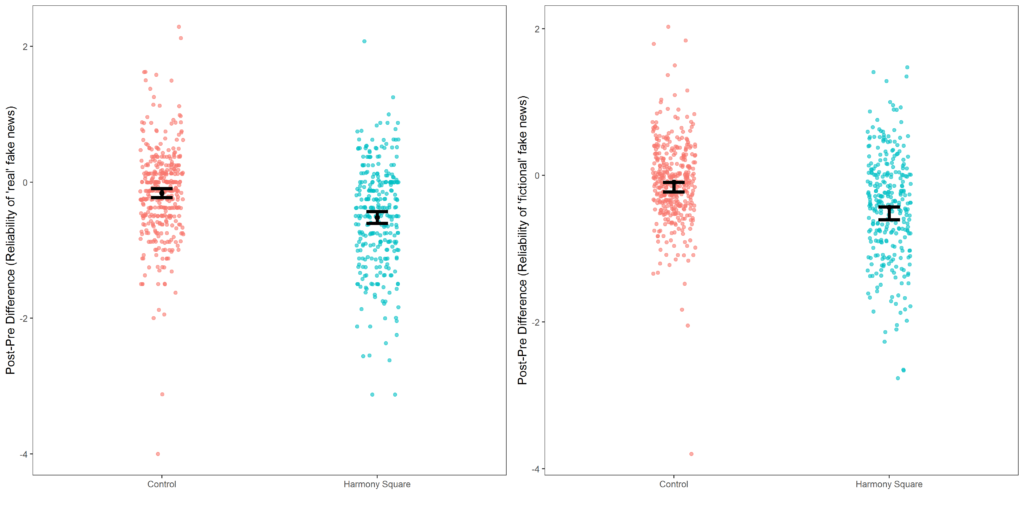

Finding 1: People who play Harmony Square find manipulative social media content significantly less reliable after playing compared to a control group.

For all the “real fake news” social media posts combined (see the “methods” section), we find a significant main effect of the treatment condition (i.e., playing Harmony Square) on aggregate reliability judgments, meaning that playing the game significantly reduces the perceived reliability of “real fake news” compared to the control group (F(1,679) = 43.21, p < .001, η² = .060, d = 0.51; Figure 2). Specifically, compared to the control condition, the post-pre difference scores for reliability judgments were significantly more negative for the treatment group (control: Mdiff = -0.16, SDdiff = 0.66; treatment: Mdiff = -0.52, SDdiff = 0.77; d = 0.51). We found neither a main effect (F(2,675) = 0.486, p = .62) nor an interaction effect (F(2,675) = 0.663, p = .52) for political ideology, meaning that ideology does not make a significant difference to the inoculation effect. We find the same result for the “fictitious fake news” items (F(1,679) = 48.79, p < .001, η² = .067, d = 0.54): as with “real” fake news, the post-pre difference scores for reliability judgments were significantly more negative for the treatment group (control: Mdiff = -0.095, SDdiff = 0.55; treatment: Mdiff = -0.44, SDdiff = 0.72; d = 0.54). Here, too, we found neither a main effect (F(2,675) = 1.154, p = .32) nor an interaction effect (F(2,675) = 0.810, p = .45) for political ideology. Importantly, the effect sizes are nearly identical, which suggests that whether real or fictitious fake news items are used makes little difference to the assessment. The results are visualized in Figure 2.
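The reported effect sizes can be related to the summary statistics with standard formulas. The sketch below is a hedged illustration: the text does not state exactly how d was computed, so we assume Cohen's d with a pooled standard deviation, and we use the pooled group sizes from the methods section (385 control, 296 treatment). Under these assumptions it reproduces the η² and d reported for the “real” reliability items; other rows may differ in the last digit if a different d formula was used. The function names are hypothetical.

```python
from math import sqrt


def eta_squared_from_f(f, df_effect, df_error):
    """Eta squared for a one-way ANOVA effect: SS_effect / (SS_effect + SS_error).

    Because F = (SS_effect / df_effect) / (SS_error / df_error), this equals
    F * df_effect / (F * df_effect + df_error).
    """
    return f * df_effect / (f * df_effect + df_error)


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d (group 1 minus group 2) using a pooled standard deviation."""
    pooled_sd = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled_sd


# Reported values for the "real fake news" reliability judgments.
eta_sq = eta_squared_from_f(43.21, 1, 679)
d = cohens_d(-0.16, 0.66, 385, -0.52, 0.77, 296)  # control minus treatment
print(f"eta^2 = {eta_sq:.3f}, d = {d:.2f}")  # prints: eta^2 = 0.060, d = 0.51
```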

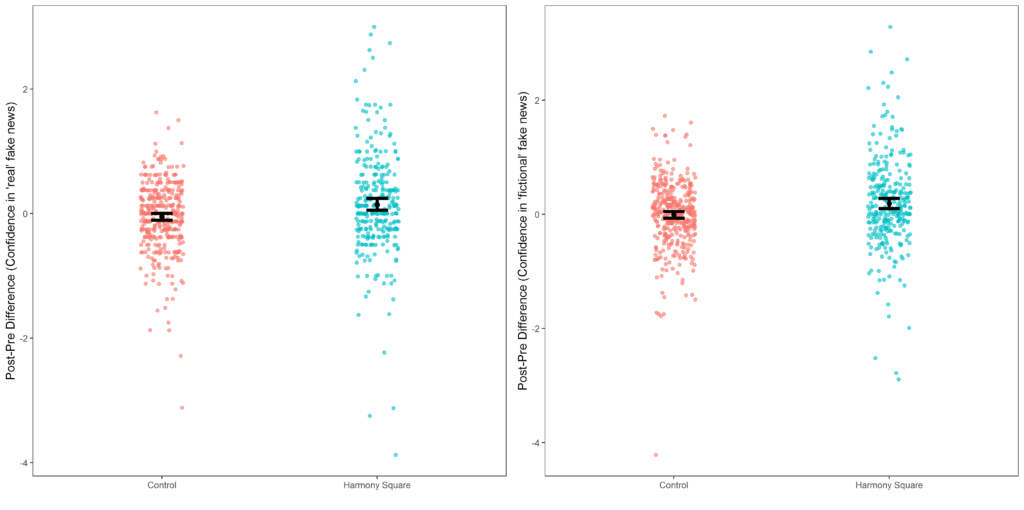

Finding 2: People who played Harmony Square are significantly more confident in their ability to spot manipulative content in social media posts, compared to a control group.

Next, we checked whether playing Harmony Square increases people’s confidence in spotting manipulative content. We used the same method as above: first, we calculated the difference between the average scores before and after the intervention for both the treatment and control group, and then conducted an ANOVA to test whether the differences between treatment and control were significant. Participants who played Harmony Square became significantly more confident in their ability to spot both “real” (F(1,679) = 14.52, p < .001, η² = .021, d = 0.30) and “fictional” (F(1,679) = 14.55, p < .001, η² = .021, d = 0.30) manipulative content. Compared to the control condition, the post-pre difference scores for confidence judgments were significantly more positive for the treatment group, both for “real” items (control: Mdiff = -0.051, SDdiff = 0.55; treatment: Mdiff = 0.15, SDdiff = 0.82; d = 0.30) and for “fictional” items (control: Mdiff = -0.011, SDdiff = 0.59; treatment: Mdiff = 0.19, SDdiff = 0.79; d = 0.30). Figure 3 visualizes the results.

Finding 3: People who played Harmony Square are significantly less likely to report sharing manipulative social media content with others.

Finally, we checked whether playing Harmony Square reduces participants’ self-reported willingness to share manipulative content with people in their network. Using the same method as above, we found that people who played the game reported being significantly less likely to share both “real” (F(1,679) = 12.85, p < .001, η² = .019, d = 0.28) and “fictional” (F(1,679) = 12.62, p < .001, η² = .018, d = 0.27) manipulative content that they encounter online. Compared to the control condition, the post-pre difference scores for willingness to share were significantly more negative for the treatment group, both for “real” items (control: Mdiff = -0.09, SDdiff = 0.53; treatment: Mdiff = -0.25, SDdiff = 0.64; d = 0.28) and for “fictional” items (control: Mdiff = -0.06, SDdiff = 0.51; treatment: Mdiff = -0.20, SDdiff = 0.56; d = 0.27). Figure 4 visualizes the results.

To limit multiple testing, we present results only for the aggregated fake news indices here. However, analyses per manipulation technique featured in the game (trolling, emotion, conspiracy and polarization) show that players improve on each technique as well. The full list of ANOVAs per manipulation technique, including effect size estimates, can be found in Supplementary Table S3. A bar plot summarizing the pre- and post-test results separately in a single figure can be found in Supplementary Figure S1. We conducted two robustness checks to verify the main analyses presented here: a linear regression with the post-test score as the dependent variable, the pre-test score as a covariate, and condition as a between-subject factor (see the Supplementary Methods and Analyses section and Supplementary Table S4); and a robust linear regression clustering scores at the participant and rating level (see the Supplementary Methods and Analyses section and Supplementary Table S5). Both approaches yield the same results as the main analyses presented above.
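The first robustness check (post-test regressed on pre-test and condition) is an ANCOVA-style linear model. A minimal pure-Python sketch of its point estimate follows, solving the ordinary least squares normal equations directly. It rests on our own assumptions (hypothetical function names, a 0/1 treatment dummy, toy data) and is not the authors' R code; it omits standard errors, p-values, and the participant- and rating-level clustering used in the second check.

```python
def ols(X, y):
    """Ordinary least squares coefficients via the normal equations (X'X) b = X'y.

    X is a list of rows (include a leading 1.0 for the intercept). The system
    is solved by Gauss-Jordan elimination with partial pivoting, which is
    adequate for a handful of well-conditioned predictors.
    """
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        lead = a[col][col]
        a[col] = [v / lead for v in a[col]]
        for r in range(k):
            if r != col:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][k] for i in range(k)]


def condition_effect(pre, post, treated):
    """Coefficient on the treatment dummy in post ~ 1 + pre + condition."""
    X = [[1.0, float(p), 1.0 if t else 0.0] for p, t in zip(pre, treated)]
    _intercept, _b_pre, b_condition = ols(X, post)
    return b_condition


# Hypothetical toy data: average reliability ratings before and after playing.
pre = [3.2, 4.1, 3.8, 3.5, 4.0, 3.9]
post = [3.1, 4.0, 3.9, 2.9, 3.3, 3.4]
treated = [False, False, False, True, True, True]
print(f"adjusted condition effect: {condition_effect(pre, post, treated):.2f}")
```

A negative coefficient on the condition dummy corresponds to lower post-test reliability ratings for players after adjusting for their pre-test ratings.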

Methods

To test whether Harmony Square improves people’s ability to spot manipulative online content, we conducted a 2 (treatment vs control) by 2 (pre vs post) mixed-design randomized controlled trial. The full dataset, the “real” and “fictional” social media posts, and the R scripts used in this study are available on the OSF (https://osf.io/r89h3/). The treatment group played Harmony Square from beginning to end; the control group played Tetris for about 10 minutes. We chose Tetris because it is in the public domain, most people know how it works without practice, and it requires about the same amount of cognitive effort as playing Harmony Square. Following the methodology established in prior research on “fake news” games (Basol et al., 2020; Maertens et al., 2020; Roozenbeek, Maertens, et al., 2020; Roozenbeek & van der Linden, 2019), we measured reliability judgments of social media posts containing misinformation on a 1-7 Likert scale, both before and after the intervention.

Measures

We sought to answer three questions about the effectiveness of Harmony Square as an anti-misinformation tool:

- Does playing Harmony Square make people better at spotting manipulation techniques commonly used in political misinformation?

- Does playing the game increase people’s confidence in their ability to spot such manipulation techniques in social media content?

- Does playing the game reduce people’s self-reported willingness to share manipulative social media content with people in their network?

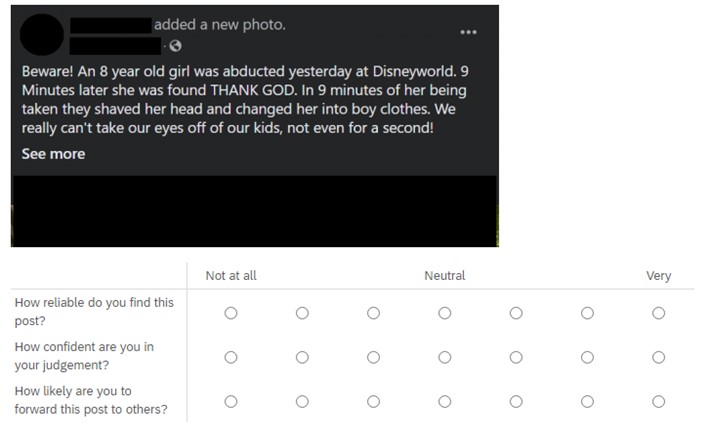

To address these questions, we showed the participants in our study 16 social media posts, each of which made use of one of 4 manipulation techniques learned while playing Harmony Square: trolling, using emotional language, conspiratorial reasoning, and group polarization. These posts were selected to be a mix of politically partisan and politically neutral content. Politically neutral items covered topics such as a kidnapping at an amusement park. Since Harmony Square is about political misinformation, we also included several ideologically or politically charged items. These items were balanced overall, with an equal number of right-leaning and left-leaning items. All items are available on our OSF page as well as in Supplementary Table S6.

In total, 8 of these posts were examples of “real” manipulative content found “in the wild” on social media and in fake news articles. The other 8 were social media posts that we created (“fictional fake news”) and that were validated in previous research (Basol et al., 2020; Maertens et al., 2020; Roozenbeek, Maertens, et al., 2020). We did not hypothesize any significant differences between participants’ assessments of “real” and “fictional” misinformation, but chose to include both types for three reasons: 1) including “real” items increases the ecological validity of the study, as participants are tested on information that they could have encountered “in the wild”; 2) including “fictional” items maximizes experimental control, allowing us to better isolate each manipulation technique and ensure political neutrality; and 3) including “fictional” items accounts for the possibility that participants had seen the “real” manipulative content before, a memory confound that could bias their assessment (Roozenbeek & van der Linden, 2019). The Supplementary Methods & Analyses appendix contains further information on the item selection procedure and a Principal Component Analysis for both the real and fake social media posts. Following Basol et al. (2020), we deliberately chose to include only manipulative content, as opposed to a mix of manipulative and non-manipulative content. The purpose of Harmony Square is not to teach people how to distinguish high-quality from low-quality content, but rather to teach them how to spot common types of misinformation on social media. We therefore focused on whether Harmony Square is effective at reducing susceptibility to political misinformation, rather than on truth discernment (Pennycook et al., 2020), although we note that media literacy interventions can affect the rating of both credible and non-credible items (Guess et al., 2020).
For a more detailed discussion on how gamified inoculation interventions affect people’s perception of “real” (high-quality) news we refer the reader to Roozenbeek, Maertens et al. (2020).

Figure 5 shows an example of what our items look like in the survey environment. The social media post in the figure is a real example of a viral rumor about a girl being kidnapped in a theme park, an example of the “emotional language” technique learned in the game (AFP Canada, 2019; Pennycook et al., 2019). The full list of items can be found on the OSF (https://osf.io/r89h3/) and in the supplement (Table S6).

For each of the 16 items, we asked participants 3 questions, which they answered on a 1-7 scale (1 being “not at all”, 4 being “neutral” and 7 being “very”). A reliability analysis shows acceptable to good internal consistency for all 3 outcome measures, for both the “real” and the “fictional” fake news item sets (reliability: Mreal = 3.26, SDreal = 0.98, α = 0.68; Mfake = 2.99, SDfake = 1.06, α = 0.78; confidence: Mreal = 5.20, SDreal = 1.06, α = 0.84; Mfake = 5.13, SDfake = 1.08, α = 0.86; sharing: Mreal = 2.26, SDreal = 1.17, α = 0.85; Mfake = 2.15, SDfake = 1.19, α = 0.88). The three questions were:

- How reliable do you find this post?

- How confident are you in your judgment?

- How likely are you to forward this post to others?
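The internal-consistency figures reported for the three outcome measures are α coefficients, conventionally Cronbach's alpha: for k items, α = k / (k - 1) × (1 - sum of item variances / variance of the summed score). The following is a minimal sketch under that assumption, with a hypothetical function name and toy data; it is not the authors' code.

```python
from statistics import pvariance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a participants-by-items matrix of scores.

    ratings: one list of k item scores per participant (k >= 2).
    Population variances are used throughout; the n/(n-1) correction would
    cancel in the ratio, so sample variances give the same alpha.
    """
    k = len(ratings[0])
    item_variances = [pvariance([row[i] for row in ratings]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical toy data: 5 participants rating 4 posts on the 1-7 reliability scale.
toy = [
    [2, 3, 2, 3],
    [4, 4, 5, 4],
    [1, 2, 1, 2],
    [5, 6, 5, 5],
    [3, 3, 4, 3],
]
print(f"alpha = {cronbach_alpha(toy):.2f}")
```

In the study, each item set would be an n × 8 matrix (8 “real” or 8 “fictional” posts), computed separately for the reliability, confidence, and sharing questions.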

We asked these questions for all 16 social media posts both before and after the intervention (Harmony Square for the treatment group, and Tetris for the control group). This allowed us to measure the difference between the “before score” and the “after score” for each group (the “pre-post difference score”). We thus arrive at our hypotheses: if Harmony Square is effective as an anti-misinformation tool, participants who played it should 1) find manipulative content significantly less reliable after playing, 2) be significantly more confident in their judgment, and 3) be significantly less likely to report sharing such content with people in their network, whereas the control group, who did not learn anything about manipulative content while playing Tetris, should show no significant pre-post differences on any of the three questions. To control for multiple testing, we evaluated only the aggregate indices for each dependent variable, but for completeness we report effects for all 4 manipulation techniques separately in the supplementary information (Supplementary Table S3). Descriptive statistics for each individual item can be found in Supplementary Table S2.

In total, 681 people were recruited in 2 separate data collections: a US-only sample (n = 312) and an international sample (n = 369). We pooled the results here (effect sizes are slightly larger for the US-only sample). In total, 296 participants played Harmony Square (the treatment group) and 385 played Tetris (the control group). A detailed overview of the sample selection process and study participants, as well as several robustness checks for the main analyses, can be found in the Supplementary Methods & Analyses appendix.

Bibliography

AFP Canada. (2019). Tale of thwarted child abduction returns with Canadian theme park twist. AFP Fact Check. https://factcheck.afp.com/tale-thwarted-child-abduction-returns-canadian-theme-park-twist

Basol, M., Roozenbeek, J., & van der Linden, S. (2020). Good news about bad news: Gamified inoculation boosts confidence and cognitive immunity against fake news. Journal of Cognition, 3(1), 2, 1–9. http://doi.org/10.5334/joc.91

Bertolin, G., Agarwal, N., Bandeli, K., Biteniece, N., & Sedova, K. (2017). Digital hydra: Security implications of false information online. https://www.stratcomcoe.org/digital-hydra-security-implications-false-information-online

Bessi, A., Zollo, F., Del Vicario, M., Puliga, M., Scala, A., Caldarelli, G., Uzzi, B., & Quattrociocchi, W. (2016). Users polarization on Facebook and YouTube. PLoS ONE, 11(8), 1–24. https://doi.org/10.1371/journal.pone.0159641

Brady, W. J., Wills, J. A., Jost, J. T., Tucker, J. A., & Van Bavel, J. J. (2017). Emotion shapes the diffusion of moralized content in social networks. Proceedings of the National Academy of Sciences, 114(28), 7313–7318. https://doi.org/10.1073/pnas.1618923114

CISA. (2019). The war on pineapple: Understanding foreign interference in 5 steps. DHS. https://www.dhs.gov/sites/default/files/publications/19_0717_cisa_the-war-on-pineapple-understanding-foreign-interference-in-5-steps.pdf

Compton, J. (2013). Inoculation theory. In J. P. Dillard & L. Shen (Eds.), The SAGE Handbook of Persuasion: Developments in Theory and Practice (2nd ed., pp. 220–236). https://doi.org/10.4135/9781452218410

Compton, J. (2018). Inoculation against/with political humor. In J. C. Baumgartner & A. B. Becker (Eds.), Political humor in a changing media landscape: A new generation of research (pp. 95–113). London: Lexington Books.

Compton, J., & Pfau, M. (2005). Inoculation theory of resistance to influence at maturity: Recent progress in theory development and application and suggestions for future research. Annals of the International Communication Association, 29(1), 97–145. https://doi.org/10.1207/s15567419cy2901_4

Cook, J., Lewandowsky, S., & Ecker, U. K. H. (2017). Neutralizing misinformation through inoculation: Exposing misleading argumentation techniques reduces their influence. PLoS ONE, 12(5), 1–21. https://doi.org/10.1371/journal.pone.0175799

Groshek, J., & Koc-Michalska, K. (2017). Helping populism win? Social media use, filter bubbles, and support for populist presidential candidates in the 2016 US election campaign. Information, Communication & Society, 20(9). https://doi.org/10.1080/1369118X.2017.1329334

Hindman, M., & Barash, V. (2018). Disinformation, “fake news” and influence campaigns on Twitter. https://kf-site-production.s3.amazonaws.com/media_elements/files/000/000/238/original/KF-DisinformationReport-final2.pdf

Iyengar, S., & Massey, D. S. (2018). Scientific communication in a post-truth society. Proceedings of the National Academy of Sciences, 116(16), 7656–7661. https://doi.org/10.1073/PNAS.1805868115

Keller, F. B., Schoch, D., Stier, S., & Yang, J. (2020). Political astroturfing on Twitter: How to coordinate a disinformation campaign. Political Communication, 37(2), 256–280. https://doi.org/10.1080/10584609.2019.1661888

Lewandowsky, S., Oberauer, K., & Gignac, G. E. (2013). NASA faked the moon landing—Therefore, (climate) science is a hoax: An anatomy of the motivated rejection of science. Psychological Science, 24(5), 622–633. https://doi.org/10.1177/0956797612457686

Maertens, R., Roozenbeek, J., Basol, M., & van der Linden, S. (2020). Long-term effectiveness of inoculation against misinformation: Three longitudinal experiments. Journal of Experimental Psychology: Applied. https://doi.org/10.1037/xap0000315

McCosker, A. (2014). Trolling as provocation: YouTube’s agonistic publics. Convergence, 20(2), 201–217. https://doi.org/10.1177/1354856513501413

McGuire, W. J. (1964). Inducing resistance against persuasion: Some contemporary approaches. Advances in Experimental Social Psychology, 1, 191–229. https://doi.org/10.1016/S0065-2601(08)60052-0

McGuire, W. J., & Papageorgis, D. (1961a). Resistance to persuasion conferred by active and passive prior refutation of the same and alternative counterarguments. Journal of Abnormal and Social Psychology, 63, 326–332.

McGuire, W. J., & Papageorgis, D. (1961b). The relative efficacy of various types of prior belief-defense in producing immunity against persuasion. Journal of Abnormal and Social Psychology, 62(2), 327–337.

McKew, M. K. (2018, February 4). How Twitter bots and Trump fans made #ReleaseTheMemo go viral. Politico. https://www.politico.com/magazine/story/2018/02/04/trump-twitter-russians-release-the-memo-216935

Pennycook, G., Martel, C., & Rand, D. G. (2019). Knowing how fake news preys on your emotions can help you spot it. CBC. https://www.cbc.ca/news/canada/saskatchewan/analysis-fake-news-appeals-to-emotion-1.5274207

Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G., & Rand, D. G. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science, 31(7), 770–780. https://doi.org/10.1177/0956797620939054

Prior, M. (2013). Media and political polarization. Annual Review of Political Science, 16(1), 101–127. https://doi.org/10.1146/annurev-polisci-100711-135242

Roozenbeek, J., Maertens, R., McClanahan, W., & van der Linden, S. (2020). Differentiating item and testing effects in inoculation research on online misinformation. Educational and Psychological Measurement, 1–23. https://doi.org/10.1177/0013164420940378

Roozenbeek, J., Schneider, C. R., Dryhurst, S., Kerr, J., Freeman, A. L. J., Recchia, G., van der Bles, A. M., & van der Linden, S. (2020). Susceptibility to misinformation about COVID-19 around the world. Royal Society Open Science, 7(10), 201199. https://doi.org/10.1098/rsos.201199

Roozenbeek, J., & van der Linden, S. (2018). The fake news game: actively inoculating against the risk of misinformation. Journal of Risk Research, 22(5), 570–580. https://doi.org/10.1080/13669877.2018.1443491

Roozenbeek, J., & van der Linden, S. (2019). Fake news game confers psychological resistance against online misinformation. Humanities and Social Sciences Communications, 5(65), 1–10. https://doi.org/10.1057/s41599-019-0279-9

Roozenbeek, J., van der Linden, S., & Nygren, T. (2020). Prebunking interventions based on “inoculation” theory can reduce susceptibility to misinformation across cultures. Harvard Kennedy School (HKS) Misinformation Review, 1(2). https://doi.org/10.37016/mr-2020-008

Shao, C., Ciampaglia, G. L., Flammini, A., & Menczer, F. (2017). The spread of fake news by social bots. arXiv:1707.07592 (cs.SI). http://arxiv.org/abs/1707.07592

van der Linden, S. (2015). The conspiracy-effect: Exposure to conspiracy theories (about global warming) decreases pro-social behavior and science acceptance. Personality and Individual Differences, 87, 171–173. http://www.sciencedirect.com/science/article/pii/S0191886915005024

van der Linden, S., Panagopoulos, C., & Roozenbeek, J. (2020). You are fake news: The emergence of political bias in perceptions of fake news. Media, Culture & Society, 42(3), 460–470. https://doi.org/10.1177/0163443720906992

van der Linden, S., & Roozenbeek, J. (2020). Psychological inoculation against fake news. In R. Greifeneder, M. Jaffé, E. Newman, & N. Schwarz (Eds.), The Psychology of Fake News: Accepting, Sharing, and Correcting Misinformation. Routledge. https://doi.org/10.4324/9780429295379-11

Vraga, E. K., Kim, S. C., & Cook, J. (2019). Testing logic-based and humor-based corrections for science, health, and political misinformation on social media. Journal of Broadcasting & Electronic Media, 63(3), 393–414. https://doi.org/10.1080/08838151.2019.1653102

Ward, J. (2019, July 27). U.S. cybersecurity agency uses pineapple pizza to demonstrate vulnerability to foreign influence. NBC News. https://www.nbcnews.com/news/us-news/u-s-cybersecurity-agency-uses-pineapple-pizza-demonstrate-vulnerability-foreign-n1035296

Zollo, F., Novak, P. K., Del Vicario, M., Bessi, A., Mozetič, I., Scala, A., Caldarelli, G., & Quattrociocchi, W. (2015). Emotional dynamics in the age of misinformation. PLoS ONE, 10(9), 1–22. https://doi.org/10.1371/journal.pone.0138740

Funding

The Department of State’s Global Engagement Center (GEC) has, in collaboration with DROG and the University of Cambridge, assisted in the development and tailoring of Harmony Square within the scope of addressing foreign adversarial propaganda and disinformation and its impact on foreign audiences and elections overseas. The GEC seeks to “facilitate the use of a wide range of technologies and techniques by sharing expertise among federal departments and agencies, seeking expertise from external sources and implementing best practices” in order to “recognize, understand, expose, and counter foreign state and foreign non-state propaganda and disinformation” (NDAA section 1287).

In support of this mission, the GEC’s Technology Engagement Team collaborated in tailoring Harmony Square to address the challenges of foreign adversarial propaganda and disinformation that could impact foreign elections. Harmony Square is an example of how the GEC’s programmatic initiatives (the Tech Demo Series and the Testbed) assist in identifying and testing tools to facilitate unique technological approaches to countering propaganda and disinformation.

Harmony Square is freely available to play at: https://www.harmonysquare.game

Competing Interests

J.R. and S.v.d.L. were responsible for the content of the Harmony Square game. J.R. was financially compensated for this work by DROG. Neither DROG, the GEC, nor DHS was involved in any way in the design, analysis, or write-up of this study.

Ethics

This study was approved by the Department of Psychology Research Ethics Committee at the University of Cambridge (PRE2020.052).

We asked participants to indicate their gender in this study. In line with established survey research practice in psychology, participants could indicate whether they self-identified as male, female, or other (in which case they could fill in a text box to specify how they self-identify).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

The data, items, and scripts used in this study are available on the OSF: https://osf.io/r89h3/

Acknowledgements

We thank Cecilie Steenbuch Traberg for designing several items (social media posts) used in this study.