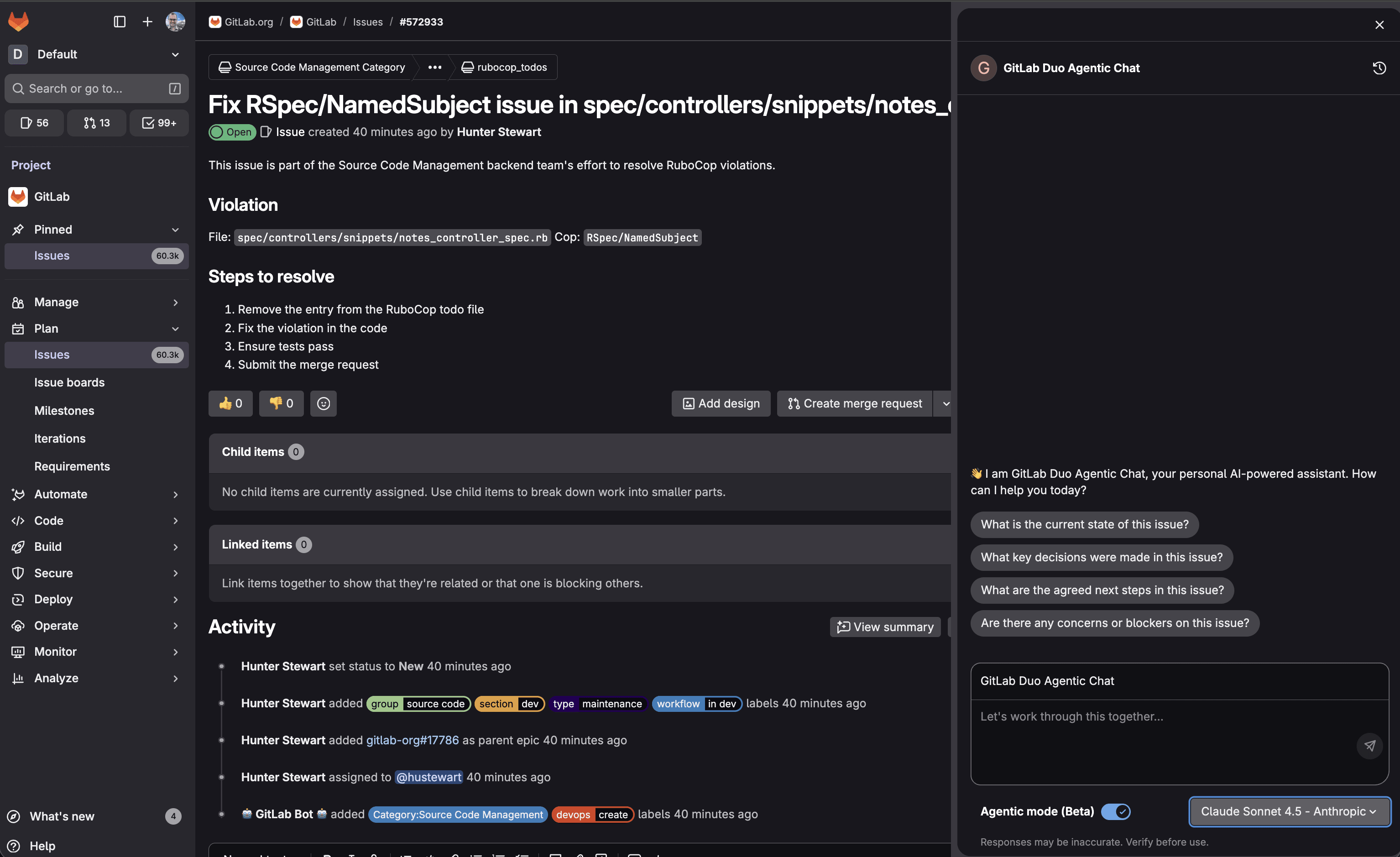

Users have the flexibility to choose Claude Haiku 4.5 alongside other leading models, enhancing their GitLab Duo experience with near-frontier performance at remarkable speed. With strong performance on SWE-bench Verified (73.3%) and more than 2x the speed of Claude Sonnet 4.5, GitLab users can apply Claude Haiku 4.5 to accelerate their development workflows with rapid, intelligent responses.

GitLab Duo Agent Platform + Claude Haiku 4.5

GitLab Duo Agent Platform extends the value of Claude Haiku 4.5 by enabling multi-agent orchestration, where Claude Haiku 4.5 can serve as a fast sub-agent executing parallel tasks while more powerful models handle high-level planning. This combination creates efficient agentic workflows, where speed meets intelligence across the software development lifecycle. The result is faster iterations, cost-effective AI assistance, and responsive experiences, all delivered inside the GitLab workflow developers already use every day.

Where you can use Claude Haiku 4.5

Claude Haiku 4.5 is now available as a model option in GitLab Duo Agent Platform Agentic Chat on GitLab.com. You can choose Claude Haiku 4.5 from the model selection dropdown to leverage its speed and coding capabilities for your development tasks.

Note: Ability to select Claude Haiku 4.5 in supported IDEs will be available soon.

Key capabilities:

- Superior coding performance: Achieves 73% on SWE-bench Verified, matching the intelligence level of models that were cutting-edge just months ago.

- Lightning-fast responses: More than 2x faster than Sonnet 4.5, perfect for real-time pair programming.

- Enhanced computer use: Outperforms Claude Sonnet 4 at autonomous task execution.

- Context awareness: First Haiku model with native context window tracking for better task persistence.

- Extended thinking: Pause and reason through complex problems before generating responses.

Get started today

GitLab Duo Pro and Enterprise customers can access Claude Haiku 4.5 today. Visit our documentation to learn more about GitLab Duo capabilities and models.

Questions or feedback? Share your experience with us through the GitLab community.

Want to try GitLab Ultimate with Duo Enterprise? Sign up for a free trial today.

Read more

]]>CI/CD pipelines can help with the challenge of building and validating software projects consistently, but, much like the software itself, these pipelines can become complex with many dependencies. This is where ideas like parent-child pipelines and data exchange in CI/CD setups become incredibly important.

In this article, we will cover common CI/CD data exchange challenges users may encounter with parent-child pipelines in GitLab — and how to solve them. You'll learn how to turn complex CI/CD processes into more manageable setups.

Using parent-child pipelines

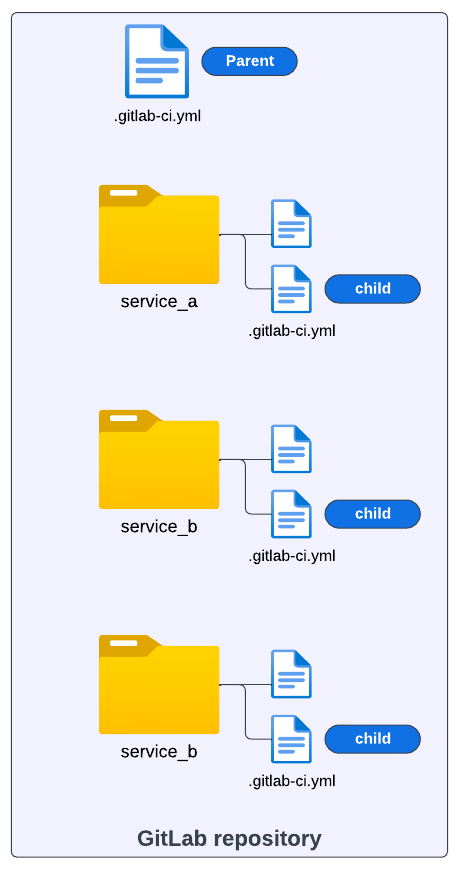

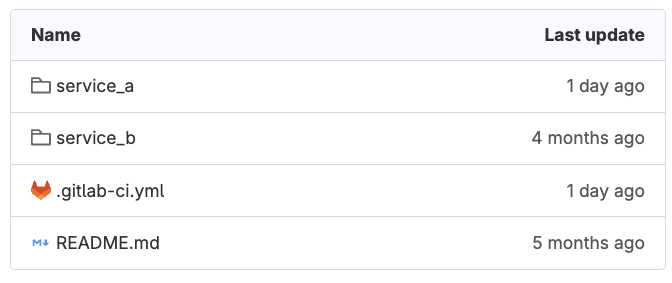

The pipeline setup in the image below illustrates a scenario where a project could require a large, complex pipeline. The whole project resides in one repository and contains different modules. Each module requires its own set of build and test automation steps.

One approach to address the CI/CD configuration in a scenario like this is to break down the larger pipeline into smaller ones (i.e., child pipelines) and keep a common CI/CD process that is shared across all modules in charge of the whole orchestration (i.e., parent pipeline).

The parent-child pipeline pattern allows a single pipeline to orchestrate one or many downstream pipelines. Similar to how a single pipeline coordinates the execution of multiple jobs, the parent pipeline coordinates the running of full pipelines with one or more jobs.

This pattern has been shown to be helpful in a variety of use cases:

-

Breaking down large, complex pipelines into smaller, manageable pieces

-

Conditionally executing certain pipelines as part of a larger CI/CD process

-

Executing pipelines in parallel

-

Helping manage user permissions to access and run certain pipelines

GitLab’s current CI/CD structure supports this pattern and makes it simple to implement parent-child pipelines. While there are many benefits when using the parent-child pipeline pattern with GitLab, one question we often get is how to share data between the parent and child pipelines. In the next sections, we’ll go over how to make use of GitLab variables and artifacts to address this concern.

Sharing variables

There are cases where it is necessary to pass the output from a parent pipeline job to a child pipeline. These outputs can be shared as variables, artifacts, and inputs.

Consider a case where we create a custom variable var_1 during the runtime of a job:

stages:

- build

- triggers

# This job only creates a variable

create_var_job:

stage: build

script:

- var_1="Hi, I'm a Parent pipeline variable"

- echo "var_1=$var_1" >> var.env

artifacts:

reports:

dotenv: var.env

Notice that the variable is created as part of the script steps in the job (during runtime). In this example, we are using a simple string "Hi, I'm a Parent pipeline variable" to illustrate the main syntax required to later share this variable with a child pipeline. Let's break down the create_var_job and analyze the main steps from this GitLab job

First, we need to save var_1 as dotenv:

script:

- var_1="Hi, I'm a pipeline variable"

- echo "var_1=$var_1" >> var.env

After saving var_1 as var.env, the next important step is to make this variable available as an artifact produced by the create_var_job. To do that, we use the following syntax:

artifacts:

reports:

dotenv: var.env

Up to this point, we have created a variable during runtime and saved it as a dotenv report. Now let's add the job that should trigger the child pipeline:

telco_service_a:

stage: triggers

trigger:

include: service_a/.gitlab-ci.yml

rules:

- changes:

- service_a/*

The goal of telco_service_a job is to find the .gitlab-ci.yml configuration of the child pipeline, which is defined in this case as service_a, and trigger its execution. Let's examine this job:

telco_service_a:

stage: triggers

trigger:

include: service_a/.gitlab-ci.yml

We see it belongs to another stage of the pipeline named triggers.This job will run only after create_var_job from the first stage successfully finishes and where the variable var_1 we want to pass is created.

After defining the stage, we use the reserved words trigger and include to tell GitLab where to search for the child pipeline configuration, as illustrated in the YAML below:

trigger:

include: service_a/.gitlab-ci.yml

Our child-pipeline YAML configuration is under service_a/.gitlab-ci.yml folder in the GitLab repository, for this example.

<p></p>

<center><i>Child pipelines folders with configurations</i></center>

<p></p>

Take into consideration that the repository structure depicted above can vary. What matters is properly pointing the triggers: include properties at the location of your child-pipeline configuration in your repository.

Finally, we use rules: changes to indicate to GitLab that this child pipeline should be triggered only if there is any change in any file in the service_a/.gitlab-ci.yml directory, as illustrated in the following code snippet:

rules:

- changes:

- service_a/*

Using this rule helps to optimize cost by triggering the child pipeline job only when necessary. This approach is particularly valuable in a monorepo architecture where specific modules contain numerous components, allowing us to avoid running their dedicated pipelines when no changes have been made to their respective codebases.

Configuring the parent pipeline

Up to this point, we have put together our parent pipeline. Here's the full code snippet for this segment:

# Parent Pipeline Configuration

# This pipeline creates a custom variable and triggers a child pipeline

stages:

- build

- trigger

create_var_job:

stage: build

script:

- var_1="Hi, I'm a Parent pipeline variable"

- echo "var_1=$var_1" >> var.env

artifacts:

reports:

dotenv: var.env

telco_service_a:

stage: triggers

trigger:

include: service_a/.gitlab-ci.yml

rules:

- changes:

- service_a/*

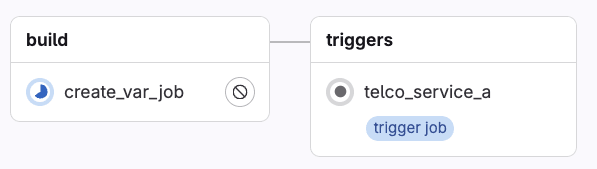

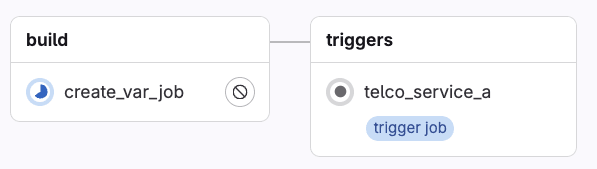

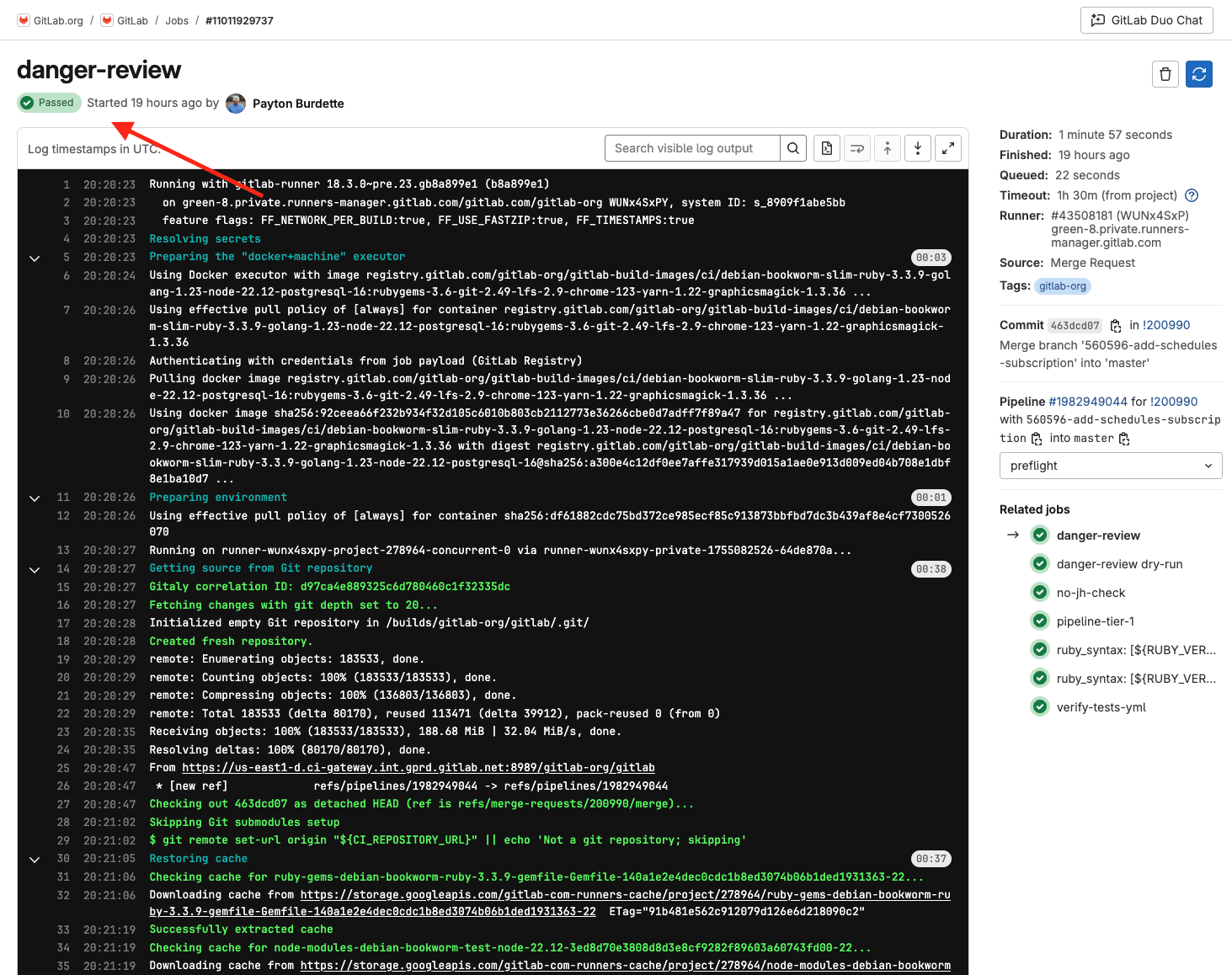

When GitLab executes the YAML configuration in the GitLab UI, the parent pipeline gets rendered as follows:

Notice the label "trigger job," which indicates this job will start the execution of another pipeline configuration.

Configuring the child pipeline

Moving forward, let's now focus on the child pipeline configuration, where we expect to inherit and print the value of the var_1 created in the parent pipeline.

The pipeline configuration in service_a/.gitlab_ci.yml has the following definition:

stages:

- build

build_a:

stage: build

script:

- echo "this job inherits the variable from the Parent pipeline:"

- echo $var_1

needs:

- project: gitlab-da/use-cases/7-4-parent-child-pipeline

job: create_var_job

ref: main

artifacts: true

Like before, let's break down this pipeline and highlight the main parts to achieve our goal. This pipeline only contains one stage (i.e., build) and one job (i.e., build_a). The script in the job contains two steps:

build_a:

stage: build

script:

- echo "this job inherits the variable from the Parent pipeline:"

- echo $var_1

These two steps print output during the execution. The most interesting one is the second step, echo $var_1, where we expect to print the variable value inherited from the parent pipeline. Remember, this was a simple string with value: "Hi, I'm a Parent pipeline variable."

Inheriting variables using needs

To set and link this job to inherit variables from the parent pipeline, we use the reserved GitLab CI properties needs as depicted in the following snippet:

needs:

- project: gitlab-da/use-cases/7-4-parent-child-pipeline

job: create_var_job

ref: main

artifacts: true

Using the "needs" keyword, we define dependencies that must be completed before running this job. In this case, we pass four different values. Let's walk through each one of them:

-

Project: The complete namespace of the project where the main

gitlab-ci.ymlcontaining the parent pipeline YAML is located. Make sure to include the absolute path. -

Job: The specific job name in the parent pipeline from where we want to inherit the variable.

-

Ref: The name of the branch where the main

gitlab-ci.ymlcontaining the parent pipeline YAML is located. -

Artifacts: Where we set a boolean value, indicating that artifacts from the parent pipeline job should be downloaded and made available to this child pipeline job.

Note: This specific approach using the needs property is only available to GitLab Premium and Ultimate users. We will cover another example for GitLab community users later on.

Putting it all together

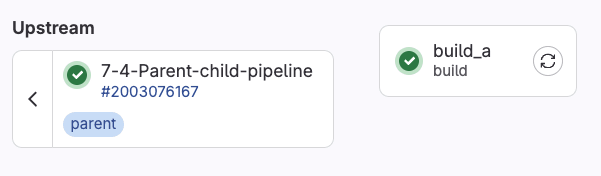

Now let's assume we make a change to any of the files under service_a folder and commit the changes to the repository. When GitLab detects the change, the rule we set up will trigger the child job pipeline execution. This gets displayed in the GitLab UI as follows:

Clicking on the telco_service_a will take us to the jobs in the child pipeline:

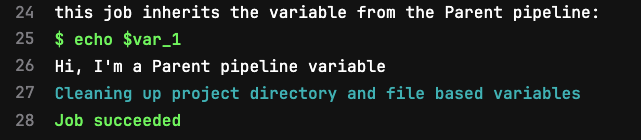

We can see the parent-child relationship, and finally, by clicking on the build_a job, we can visually verify the variable inheritance in the job execution log:

This output confirms the behavior we expected. The custom runtime variable var_1 created in the parent job is inherited in the child job, unpacked from the dotenv report, and its value accessible as can be confirmed in Line 26 above.

This use case illustrates how to share custom variables that can contain any value between pipelines. This example is intentionally simple and can be extrapolated to more realistic scenarios. Take, for instance, the following CI/CD configuration, where the custom variable we need to share is the tag of a Docker image:

# Pipeline

build-prod-image:

tags: [ saas-linux-large-amd64 ]

image: docker:20.10.16

stage: build

services:

- docker:20.10.16-dind

script:

- docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY

- docker build -t $PRODUCTION_IMAGE .

- docker push $PRODUCTION_IMAGE

- echo "UPSTREAM_CONTAINER_IMAGE=$PRODUCTION_IMAGE" >> prodimage.env

artifacts:

reports:

dotenv: prodimage.env

rules:

- if: '$CI_COMMIT_BRANCH == "main"'

when: always

- when: never

And use the variable with the Docker image tag, in another job that updates a Helm manifest file:

update-helm-values:

stage: update-manifests

image:

name: alpine:3.16

entrypoint: [""]

before_script:

- apk add --no-cache git curl bash yq

- git remote set-url origin https://${CI_USERNAME}:${GITOPS_USER}@${SERVER_PATH}/${PROJECT_PATH}

- git config --global user.email "gitlab@gitlab.com"

- git config --global user.name "GitLab GitOps"

- git pull origin main

script:

- cd src

- echo $UPSTREAM_CONTAINER_IMAGE

- yq eval -i ".spec.template.spec.containers[0].image |= \"$UPSTREAM_CONTAINER_IMAGE\"" store-deployment.yaml

- cat store-deployment.yaml

- git pull origin main

- git checkout -B main

- git commit -am '[skip ci] prod image update'

- git push origin main

needs:

- project: gitlab-da/use-cases/devsecops-platform/simply-find/simply-find-front-end

job: build-prod-image

ref: main

artifacts: true

Mastering how to share variables between pipelines while maintaining the relationship between them enables us to create more sophisticated workflow orchestration that can meet our software building needs.

Using GitLab Package Registry to share artifacts

While the needs feature mentioned above works great for Premium and Ultimate users, GitLab also has features to help achieve similar results for Community Edition users. One suggested approach is to store artifacts in the GitLab Package Registry.

Using a combination of the variables provided in GitLab CI/CD jobs and the GitLab API, you can upload artifacts to the GitLab Package Registry from a parent pipeline. In the child pipeline, you can then access the uploaded artifact from the package registry using the same variables and API to access the artifact. Let’s take a look at the example pipeline and some supplementary scripts that illustrate this:

gitlab-ci.yml (parent pipeline)

# Parent Pipeline Configuration

# This pipeline creates an artifact, uploads it to Package Registry, and triggers a child pipeline

stages:

- create-upload

- trigger

variables:

PACKAGE_NAME: "pipeline-artifacts"

PACKAGE_VERSION: "$CI_PIPELINE_ID"

ARTIFACT_FILE: "artifact.txt"

# Job 1: Create and upload artifact to Package Registry

create-and-upload-artifact:

stage: create-upload

image: alpine:latest

before_script:

- apk add --no-cache curl bash

script:

- bash scripts/create-artifact.sh

- bash scripts/upload-to-registry.sh

rules:

- if: $CI_PIPELINE_SOURCE == "push"

# Job 2: Trigger child pipeline

trigger-child:

stage: trigger

trigger:

include: child-pipeline.yml

strategy: depend

variables:

PARENT_PIPELINE_ID: $CI_PIPELINE_ID

PACKAGE_NAME: $PACKAGE_NAME

PACKAGE_VERSION: $PACKAGE_VERSION

ARTIFACT_FILE: $ARTIFACT_FILE

rules:

- if: $CI_PIPELINE_SOURCE == "push"

child-pipeline.yml

# Child Pipeline Configuration

# This pipeline downloads the artifact from Package Registry and processes it

stages:

- download-process

variables:

# These variables are passed from the parent pipeline

PACKAGE_NAME: "pipeline-artifacts"

PACKAGE_VERSION: "$PARENT_PIPELINE_ID"

ARTIFACT_FILE: "artifact.txt"

# Job 1: Download and process artifact from Package Registry

download-and-process-artifact:

stage: download-process

image: alpine:latest

before_script:

- apk add --no-cache curl bash

script:

- bash scripts/download-from-registry.sh

- echo "Processing downloaded artifact..."

- cat $ARTIFACT_FILE

- echo "Artifact processed successfully!"

upload-to-registry.sh

#!/bin/bash

set -e

# Configuration

PACKAGE_NAME="${PACKAGE_NAME:-pipeline-artifacts}"

PACKAGE_VERSION="${PACKAGE_VERSION:-$CI_PIPELINE_ID}"

ARTIFACT_FILE="${ARTIFACT_FILE:-artifact.txt}"

# Validate required variables

if [ -z "$CI_PROJECT_ID" ]; then

echo "Error: CI_PROJECT_ID is not set"

exit 1

fi

if [ -z "$CI_JOB_TOKEN" ]; then

echo "Error: CI_JOB_TOKEN is not set"

exit 1

fi

if [ -z "$CI_API_V4_URL" ]; then

echo "Error: CI_API_V4_URL is not set"

exit 1

fi

if [ ! -f "$ARTIFACT_FILE" ]; then

echo "Error: Artifact file '$ARTIFACT_FILE' not found"

exit 1

fi

# Construct the upload URL

UPLOAD_URL="${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/packages/generic/${PACKAGE_NAME}/${PACKAGE_VERSION}/${ARTIFACT_FILE}"

# Upload the file using curl

response=$(curl -w "%{http_code}" -o /tmp/upload_response.json \

--header "JOB-TOKEN: $CI_JOB_TOKEN" \

--upload-file "$ARTIFACT_FILE" \

"$UPLOAD_URL")

if [ "$response" -eq 201 ]; then

echo "Upload successful!"

else

echo "Upload failed with HTTP code: $response"

exit 1

fi

download-from-regsitry.sh

#!/bin/bash

set -e

# Configuration

PACKAGE_NAME="${PACKAGE_NAME:-pipeline-artifacts}"

PACKAGE_VERSION="${PACKAGE_VERSION:-$PARENT_PIPELINE_ID}"

ARTIFACT_FILE="${ARTIFACT_FILE:-artifact.txt}"

# Validate required variables

if [ -z "$CI_PROJECT_ID" ]; then

echo "Error: CI_PROJECT_ID is not set"

exit 1

fi

if [ -z "$CI_JOB_TOKEN" ]; then

echo "Error: CI_JOB_TOKEN is not set"

exit 1

fi

if [ -z "$CI_API_V4_URL" ]; then

echo "Error: CI_API_V4_URL is not set"

exit 1

fi

if [ -z "$PACKAGE_VERSION" ]; then

echo "Error: PACKAGE_VERSION is not set"

exit 1

fi

# Construct the download URL

DOWNLOAD_URL="${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/packages/generic/${PACKAGE_NAME}/${PACKAGE_VERSION}/${ARTIFACT_FILE}"

# Download the file using curl

response=$(curl -w "%{http_code}" -o "$ARTIFACT_FILE" \

--header "JOB-TOKEN: $CI_JOB_TOKEN" \

--fail-with-body \

"$DOWNLOAD_URL")

if [ "$response" -eq 200 ]; then

echo "Download successful!"

else

echo "Download failed with HTTP code: $response"

exit 1

fi

In this example, the parent pipeline uploads a file to the GitLab Package Registry by calling a script named upload-to-registry.sh. The script gives the artifact a name and version and constructs the API call to upload the file to the package registry. The parent pipeline is able to authenticate using a $CI_JOB_TOKEN to push the artifact.txt file to the registry.

The child pipeline operates the same as the parent pipeline by using a script to construct the API call to download the artifact.txt file from the package registry. It also is able to authenticate to the registry using the $CI_JOB_TOKEN.

Since the GitLab Package Registry is available to all GitLab users, it helps to serve as a central location for storing and versioning artifacts. It is a great option for users working with many kinds of artifacts and needing to version artifacts for workflows even beyond CI/CD.

Using inputs to pass variables to a child pipeline

If you made it this far in this tutorial, and you have plans to start creating new pipeline configurations, you might want to start by evaluating if your use case can benefit from using inputs to pass variables to other pipelines.

Using inputs is a recommended way to pass variables when you need to define specific values in a CI/CD job and have those values remain fixed during the pipeline run. Inputs might offer certain advantages over the method we implemented before. For example, with inputs, you can include data validation through options (i.e., values must be one of these: [‘staging', ‘prod’]), variable descriptions, type checking, and assign default values before the pipeline run.

Configuring CI/CD inputs

Consider the following parent pipeline configuration:

# .gitlab-ci.yml (main file)

stages:

- trigger

trigger-staging:

stage: trigger

trigger:

include:

- local: service_a/.gitlab-ci.yml

inputs:

environment: staging

version: "1.0.0"

Let's zoom in at the main difference between the code snippet above and the previous parent pipeline examples in this tutorial:

trigger:

include:

- local: service_a/.gitlab-ci.yml

inputs:

environment: staging

version: "1.0.0"

The main difference is using the reserved word "inputs". This part of the YAML configuration can be read in natural language as: “trigger the child pipeline defined in service_a.gitlab-ci.yml and make sure to pass ‘environment: staging’ and ‘version:1.0.0’ as input variables that the child pipeline will know how to use.

Reading CI/CD inputs in child pipelines

Moving to the child pipeline, it must contain in its declaration a spec that defines the inputs it can take. For each input, it is possible to add a little description, a set of predefined options the input value can take, and the type of value it will take. This is illustrated as follows:

# target pipeline or child-pipeline in this case

spec:

inputs:

environment:

description: "Deployment environment"

options: [staging, production]

version:

type: string

description: "Application version"

---

stages:

- deploy

# Jobs that will use the inputs

deploy:

stage: deploy

script:

- echo "Deploying version $[[ inputs.version ]] to $[[ inputs.environment ]]"

Notice from the code snippet that after defining the spec, there is a YAML document separator "---" followed by the actual child pipeline definition where we access the variables $[[ inputs.version ]] and $[[ inputs.environment ]]" from the defined inputs using input interpolation.

Get hands-on with parent-child pipelines, artifacts, and more

We hope this article has helped with navigating the challenge of sharing variables and artifacts in parent-child pipeline setups.

To try these examples for yourself, feel free to view or fork the Premium/Ultimate and the GitLab Package Registry examples of sharing artifacts.

You can also sign up for a 30-day free trial of GitLab Ultimate to experience all the features GitLab has to offer. Thanks for reading!

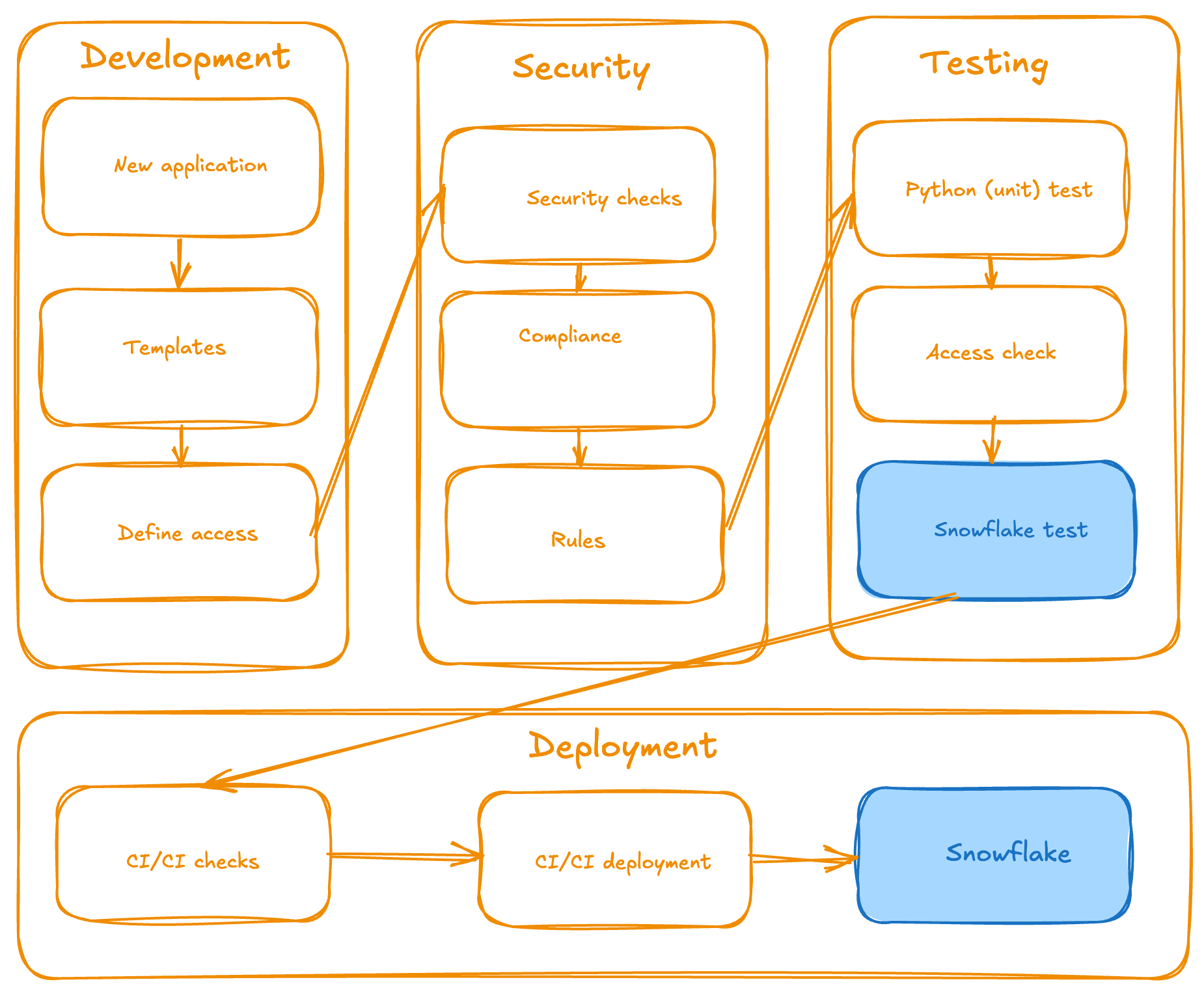

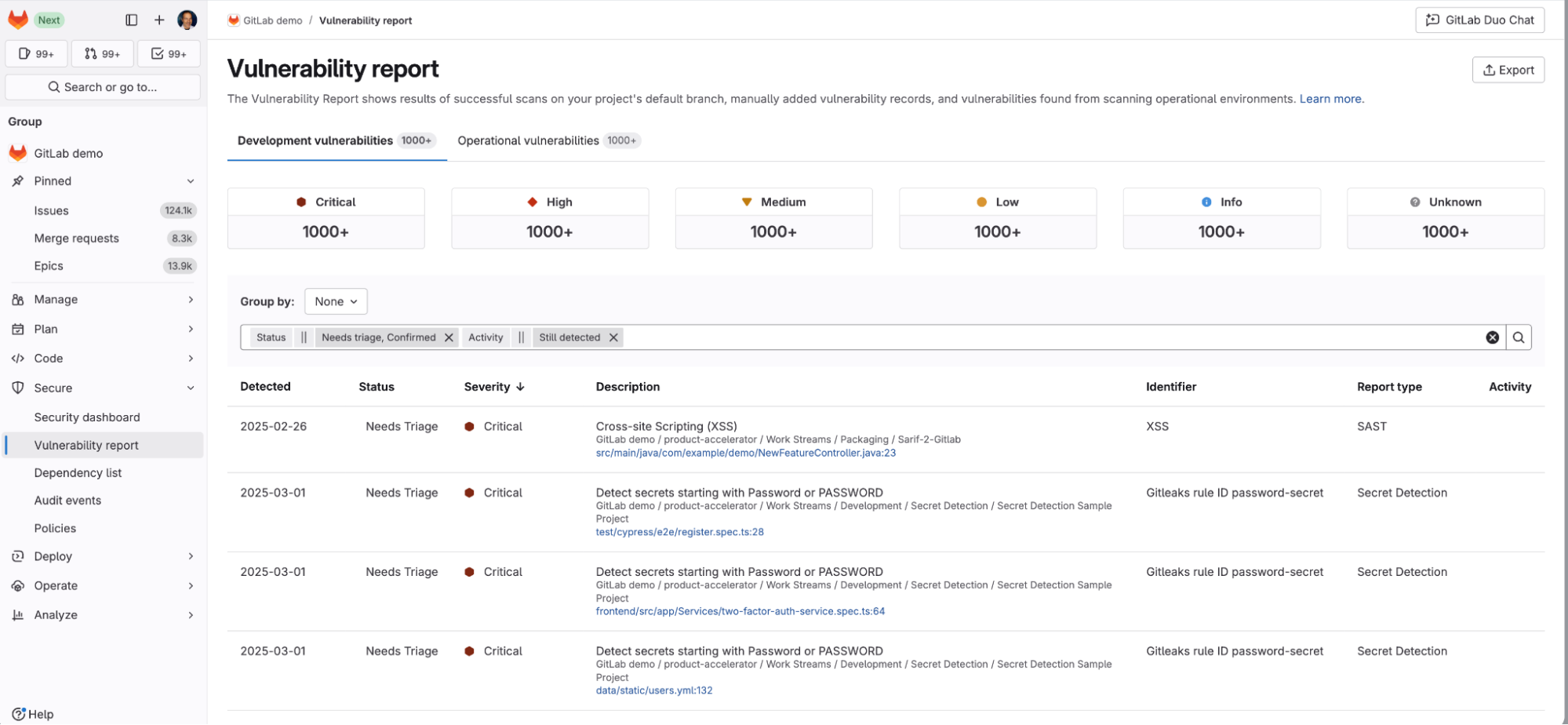

]]>The challenge

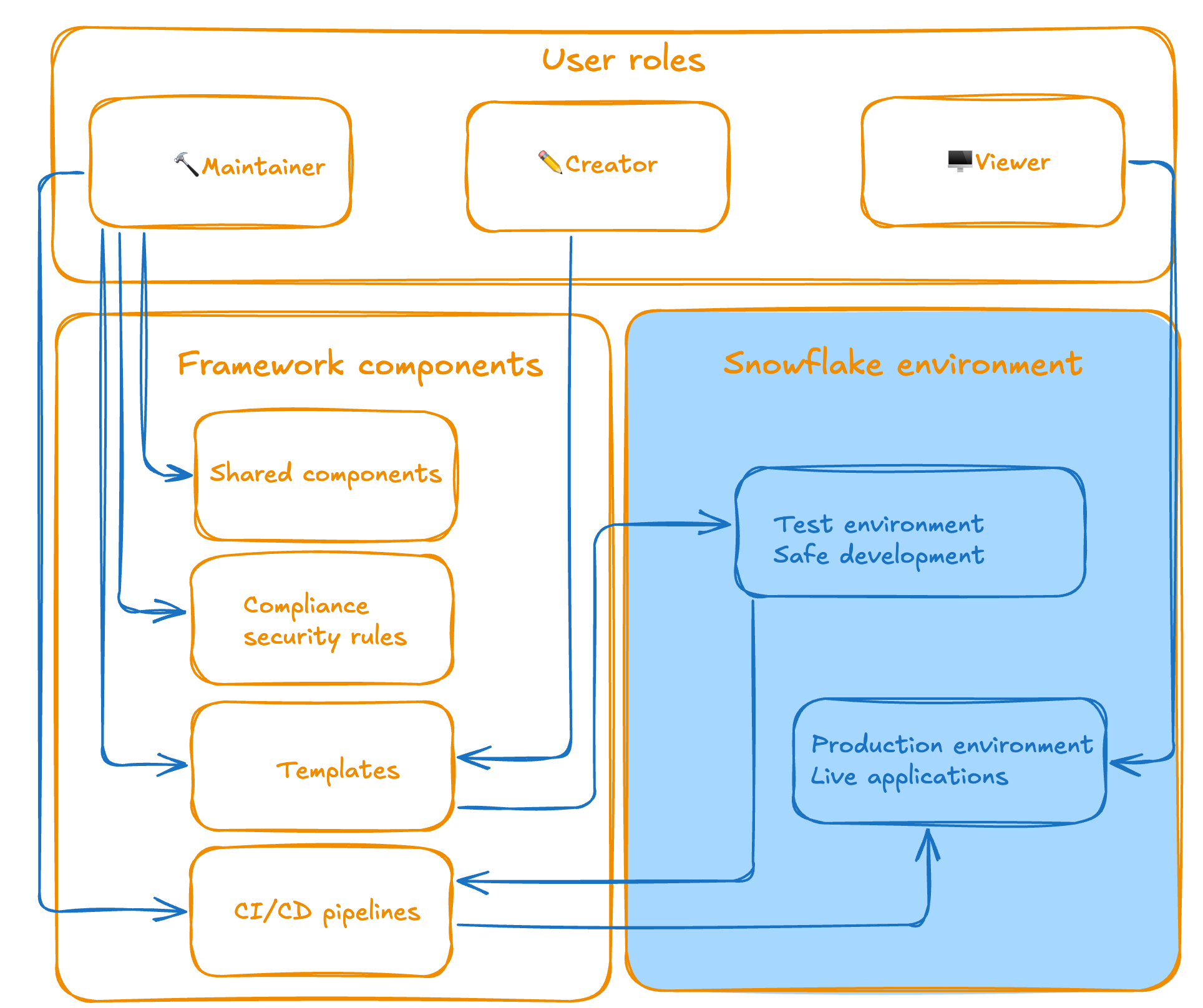

Imagine this scenario: Your organization has dozens of Streamlit applications across different environments, running various Python versions, connecting to sensitive data with inconsistent security practices. Some apps work, others break mysteriously, and nobody knows who built what or how to maintain them.

This was exactly the challenge our data team faced. Applications were being created in isolation, with no standardization, no security oversight, and no clear deployment process. The result? A compliance nightmare and a maintenance burden that was growing exponentially.

<p></p>

<center><i>Functional architectural design (high level)</i></center>

How we started

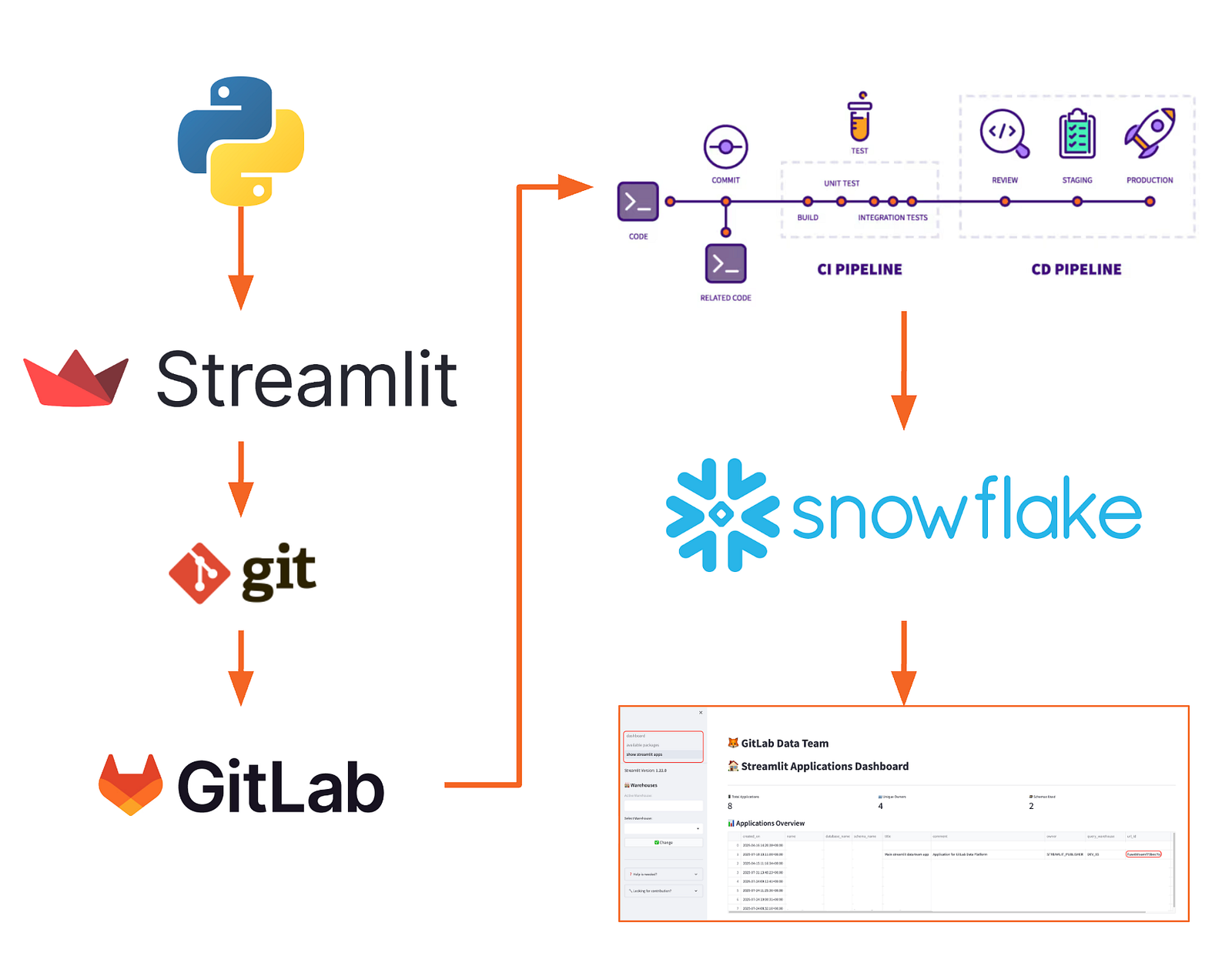

We leveraged our unique position as customer zero by building this entire framework on GitLab's own CI/CD infrastructure and project management tools. Here are the ingredients we started with:

-

GitLab (product)

-

Snowflake - our single source of truth (SSOT) for the data warehouse activities (and more than that)

-

Streamlit - an open-source tool for visual applications that has pure Python code under the hood

This provided us with immediate access to enterprise-grade DevSecOps capabilities, enabling us to implement automated testing, code review processes, and deployment pipelines from the outset. By utilizing GitLab's built-in features for issue tracking, merge requests, and automated deployments (CI/CD pipelines), we can iterate rapidly and validate the framework against real-world enterprise requirements. This internal-first approach ensured our solution was battle-tested on GitLab's own infrastructure before any external implementation.

The lessons we learned

The most critical lesson we learned from building the Streamlit Application Framework in Snowflake is that structure beats chaos every time — implement governance early rather than retrofitting it later when maintenance becomes exponential.

You also need to clearly define roles and responsibilities, separating infrastructure concerns from application development, so that each team can focus on its strengths.

Security and compliance cannot be afterthoughts; they must be built into templates and automated processes from day one, as it's far easier to enforce consistent standards upfront than to force them after the fact. Invest heavily in automation and CI/CD pipelines, as manual processes don't scale and introduce human error.

<p></p>

<center><i>Architecture of the framework (general overview)</i></center>

How the Streamlit Application Framework changes everything

The Streamlit Application Framework turns a scattered approach into a structure. It gives developers freedom within secure guardrails, while automating deployment and eliminating maintenance complexity.

Three clear roles, one unified process

The framework introduces a structured approach with three distinct roles:

-

Maintainers (Data team members and contributors) handle the infrastructure, including CI/CD pipelines, security templates, and compliance rules. They ensure the framework runs smoothly and stays secure.

-

Creators (those who need to build applications) can focus on what they do best: creating visualizations, connecting to Snowflake data, and building user experiences. They have full flexibility to create new applications from scratch, add new pages to existing apps, integrate additional Python libraries, and build complex data visualisations — all without worrying about deployment pipelines or security configurations.

-

Viewers (end users) access polished, secure applications without any technical overhead. All they need is Snowflake access.

<p></p>

<center><i>Overview of roles and their functions</i></center>

Automate everything

By implementing CI/CD, days of manual deployments and configuration headaches are gone. The framework provides:

- One-click environment preparation: With a set of

makecommands, the environment is installed and ready in a few seconds.

================================================================================

✅ Snowflake CLI successfully installed and configured!

Connection: gitlab_streamlit

User: YOU@GITLAB.COM

Account: gitlab

================================================================================

Using virtualenv: /Users/YOU/repos/streamlit/.venv

📚 Installing project dependencies...

Installing dependencies from lock file

No dependencies to install or update

✅ Streamlit environment prepared!

-

Automated CI/CD pipelines: Handle testing, code review, and deployment from development to production.

-

Secure sandbox environments: Provide for safe development and testing before production deployment.

╰─$ make streamlit-rules

🔍 Running Streamlit compliance check...

================================================================================

CODE COMPLIANCE REPORT

================================================================================

Generated: 2025-07-09 14:01:16

Files checked: 1

SUMMARY:

✅ Passed: 1

❌ Failed: 0

Success Rate: 100.0%

APPLICATION COMPLIANCE SUMMARY:

📱 Total Applications Checked: 1

⚠️ Applications with Issues: 0

📊 File Compliance Rate: 100.0%

DETAILED RESULTS BY APPLICATION:

...

- Template-based application creation: Ensures consistency across all applications and pages.

╰─$ make streamlit-new-page STREAMLIT_APP=sales_dashboard STREAMLIT_PAGE_NAME=analytics

📝 Generating new Streamlit page: analytics for app: sales_dashboard

📃 Create new page from template:

Page name: analytics

App directory: sales_dashboard

Template path: page_template.py

✅ Successfully created 'analytics.py' in 'sales_dashboard' directory from template

-

Poetry-based dependency management: Prevents version conflicts and maintains clean environments.

-

Organized project structure: Has dedicated folders for applications, templates, compliance rules, and configuration management.

├── src/

│ ├── applications/ # Folder for Streamlit applications

│ │ ├── main_app/ # Main dashboard application

│ │ ├── components/ # Shared components

│ │ └── <your_apps>/ # Your custom application

│ │ └── <your_apps2>/ # Your 2nd custom application

│ ├── templates/ # Application and page templates

│ ├── compliance/ # Compliance rules and checks

│ └── setup/ # Setup and configuration utilities

├── tests/ # Test files

├── config.yml # Environment configuration

├── Makefile # Build and deployment automation

└── README.md # Main README.md file

- Streamlined workflow: Takes local development through testing schema to production, all automated through GitLab CI/CD pipelines.

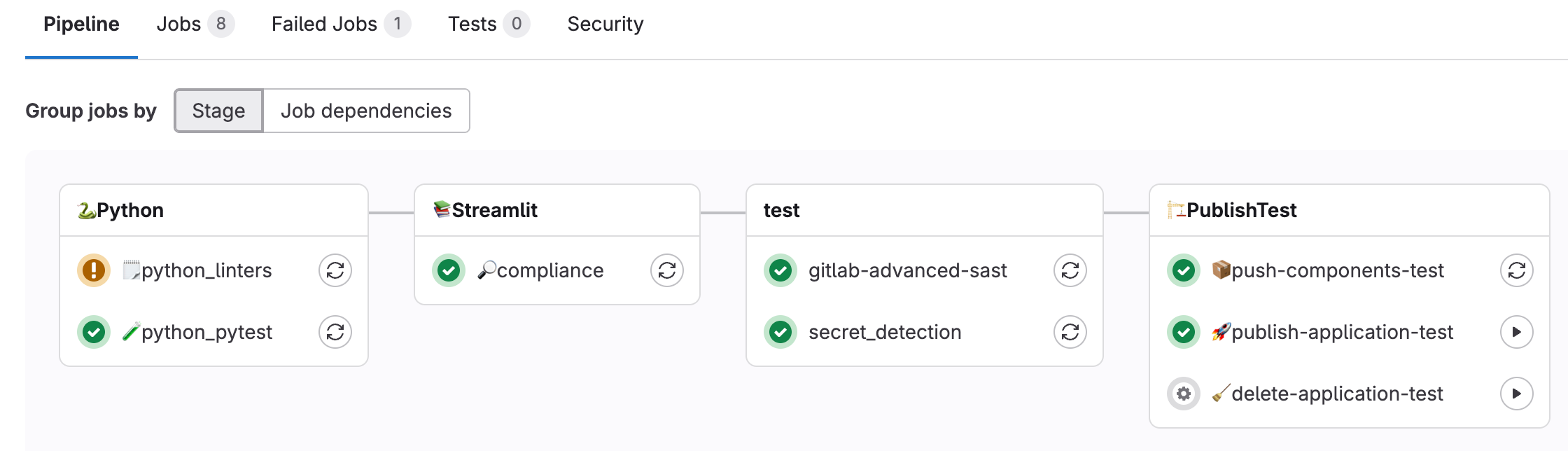

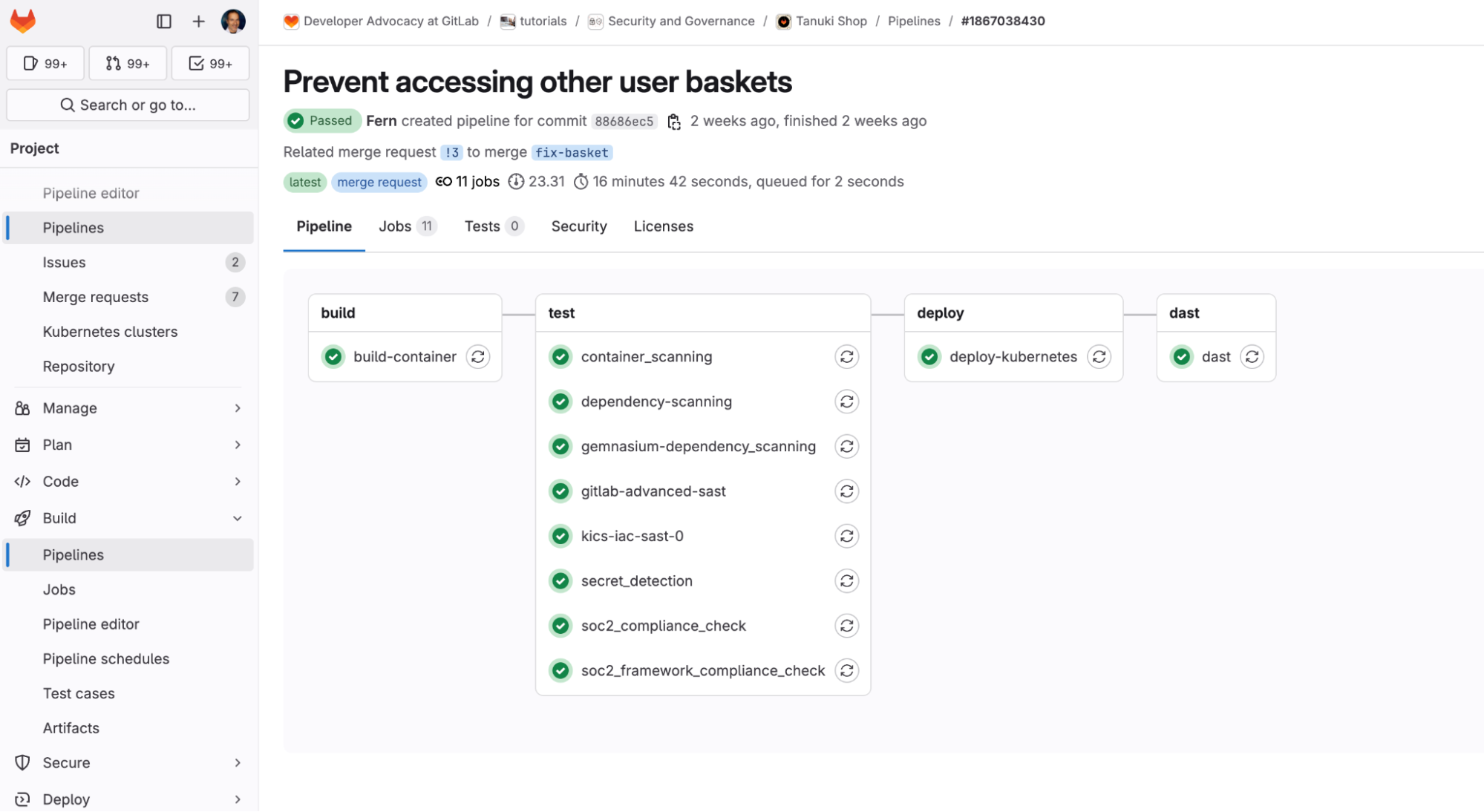

<p></p> <center><i>GitLab CI/CD pipelines for full automation of the process</i></center>

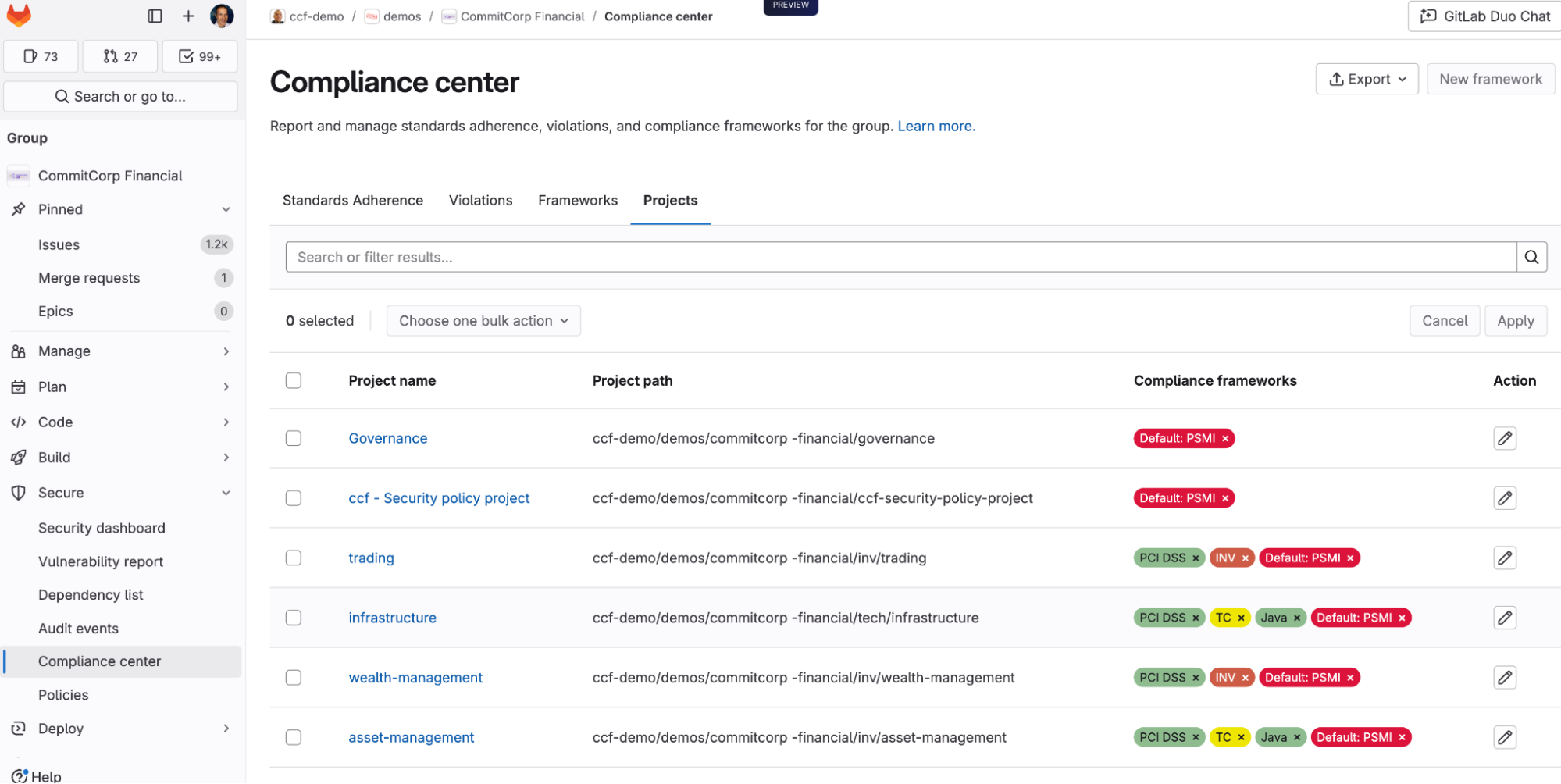

Security and compliance by design

Instead of bolting on security as an afterthought, the structured Streamlit Application Framework builds it in from the ground up. Every application adheres to the same security standards, and compliance requirements are automatically enforced. Audit trails are maintained throughout the development lifecycle.

We introduce our compliance rules and verify them with a single command. For instance, we can list which classes and methods are mandatory to use, which files you should have, and which roles are allowed and which are forbidden to share the application with. The rules are flexible and descriptive; all you need to do is define them in a YAML file:

class_rules:

- name: "Inherit code for the page from GitLabDataStreamlitInit"

description: "All Streamlit apps must inherit from GitLabDataStreamlitInit"

severity: "error"

required: true

class_name: "*"

required_base_classes:

- "GitLabDataStreamlitInit"

required_methods:

- "__init__"

- "set_page_layout"

- "setup_ui"

- "run"

function_rules:

- name: "Main function required"

description: "Must have a main() function"

severity: "error"

required: true

function_name: "main"

import_rules:

- name: "Import GitLabDataStreamlitInit"

description: "Must import the mandatory base class"

severity: "error"

required: true

module_name: "gitlab_data_streamlit_init"

required_items:

- "GitLabDataStreamlitInit"

- name: "Import streamlit"

description: "Must import streamlit library"

severity: "error"

required: true

module_name: "streamlit"

file_rules:

- name: "Snowflake configuration required (snowflake.yml)"

description: "Each application must have a snowflake.yml configuration file"

severity: "error"

required: true

file_pattern: "**/applications/**/snowflake.yml"

base_path: ""

- name: "Snowflake environment required (environment.yml)"

description: "Each application must have a environment.yml configuration file"

severity: "error"

required: true

file_pattern: "**/applications/**/environment.yml"

base_path: ""

- name: "Share specification required (share.yml)"

description: "Each application must have a share.yml file"

severity: "warning"

required: true

file_pattern: "**/applications/**/share.yml"

base_path: ""

- name: "README.md required (README.md)"

description: "Each application should have a README.md file with a proper documentation"

severity: "error"

required: true

file_pattern: "**/applications/**/README.md"

base_path: ""

- name: "Starting point recommended (dashboard.py)"

description: "Each application must have a dashboard.py as a starting point"

severity: "warning"

required: true

file_pattern: "**/applications/**/dashboard.py"

base_path: ""

sql_rules:

- name: "SQL files must contain only SELECT statements"

description: "SQL files and SQL code in other files should only contain SELECT statements for data safety"

severity: "error"

required: true

file_extensions: [".sql", ".py"]

select_only: true

forbidden_statements:

- ....

case_sensitive: false

- name: "SQL queries should include proper SELECT statements"

description: "When SQL is present, it should contain proper SELECT statements"

severity: "warning"

required: false

file_extensions: [".sql", ".py"]

required_statements:

- "SELECT"

case_sensitive: false

share_rules:

- name: "Valid functional roles in share.yml"

description: "Share.yml files must contain only valid functional roles from the approved list"

severity: "error"

required: true

file_pattern: "**/applications/**/share.yml"

valid_roles:

- ...

safe_data_roles:

- ...

- name: "Share.yml file format validation"

description: "Share.yml files must follow the correct YAML format structure"

severity: "error"

required: true

file_pattern: "**/applications/**/share.yml"

required_keys:

- "share"

min_roles: 1

max_roles: 10

With one command running:

╰─$ make streamlit-rules

We can verify all the rules we have created and validate that the developers (who are building a Streamlit application) are following the policy specified by the creators (who determine the policies and building blocks of the framework), and that all the building blocks are in the right place. This ensures consistent behavior across all Streamlit applications.

🔍 Running Streamlit compliance check...

================================================================================

CODE COMPLIANCE REPORT

================================================================================

Generated: 2025-08-18 17:05:12

Files checked: 4

SUMMARY:

✅ Passed: 4

❌ Failed: 0

Success Rate: 100.0%

APPLICATION COMPLIANCE SUMMARY:

📱 Total Applications Checked: 1

⚠️ Applications with Issues: 0

📊 File Compliance Rate: 100.0%

DETAILED RESULTS BY APPLICATION:

================================================================================

✅ PASS APPLICATION: main_app

------------------------------------------------------------

📁 FILES ANALYZED (4):

✅ dashboard.py

📦 Classes: SnowflakeConnectionTester

🔧 Functions: main

📥 Imports: os, pwd, gitlab_data_streamlit_init, snowflake.snowpark.exceptions, streamlit

✅ show_streamlit_apps.py

📦 Classes: ShowStreamlitApps

🔧 Functions: main

📥 Imports: pandas, gitlab_data_streamlit_init, snowflake_session, streamlit

✅ available_packages.py

📦 Classes: AvailablePackages

🔧 Functions: main

📥 Imports: pandas, gitlab_data_streamlit_init, streamlit

✅ share.yml

👥 Share Roles: snowflake_analyst_safe

📄 FILE COMPLIANCE FOR MAIN_APP:

✅ Required files found:

✓ snowflake.yml

✓ environment.yml

✓ share.yml

✓ README.md

✓ dashboard.py

RULES CHECKED:

----------------------------------------

Class Rules (1):

- Inherit code for the page from GitLabDataStreamlitInit (error)

Function Rules (1):

- Main function required (error)

Import Rules (2):

- Import GitLabDataStreamlitInit (error)

- Import streamlit (error)

File Rules (5):

- Snowflake configuration required (snowflake.yml) (error)

- Snowflake environment required (environment.yml) (error)

- Share specification required (share.yml) (warning)

- README.md required (README.md) (error)

- Starting point recommended (dashboard.py) (warning)

SQL Rules (2):

- SQL files must contain only SELECT statements (error)

🗄 SELECT-only mode enabled

🚨 Forbidden: INSERT, UPDATE, DELETE, DROP, ALTER...

- SQL queries should include proper SELECT statements (warning)

Share Rules (2):

- Valid functional roles in share.yml (error)

👥 Valid roles: 15 roles defined

🔒 Safe data roles: 11 roles

- Share.yml file format validation (error)

------------------------------------------------------------

✅ Compliance check passed

-----------------------------------------------------------

Developer experience that works

Whether you prefer your favorite IDE, a web-based development environment, or Snowflake Snowsight, the experience remains consistent. The framework provides:

- Template-driven development: New applications and pages are created through standardized templates, ensuring consistency and best practices from day one. No more scattered design and elements.

╰─$ make streamlit-new-app NAME=sales_dashboard

🔧 Configuration Environment: TEST

📝 Configuration File: config.yml

📜 Config Loader Script: ./setup/get_config.sh

🐍 Python Version: 3.12

📁 Applications Directory: ./src/applications

🗄 Database: ...

📊 Schema: ...

🏗 Stage: ...

🏭 Warehouse: ...

🆕 Creating new Streamlit app: sales_dashboard

Initialized the new project in ./src/applications/sales_dashboard

- Poetry package management: All dependencies are managed through Poetry, creating isolated environments that won't disrupt your existing Python setup.

[tool.poetry]

name = "GitLab Data Streamlit"

version = "0.1.1"

description = "GitLab Data Team Streamlit project"

authors = ["GitLab Data Team <*****@gitlab.com>"]

readme = "README.md"

[tool.poetry.dependencies]

python = "<3.13,>=3.12"

snowflake-snowpark-python = "==1.32.0"

snowflake-connector-python = {extras = ["development", "pandas", "secure-local-storage"], version = "^3.15.0"}

streamlit = "==1.22.0"

watchdog = "^6.0.0"

types-toml = "^0.10.8.20240310"

pytest = "==7.0.0"

black = "==25.1.0"

importlib-metadata = "==4.13.0"

pyyaml = "==6.0.2"

python-qualiter = "*"

ruff = "^0.1.0"

types-pyyaml = "^6.0.12.20250516"

jinja2 = "==3.1.6"

[build-system]

requires = ["poetry-core"]

build-backend = "poetry.core.masonry.api"

- Multi-page application support: Creators can easily build complex applications with multiple pages and add new libraries as needed. Multi-page applications are part of the framework and a developer is focusing on the logic, not the design and structuring.

<p></p>

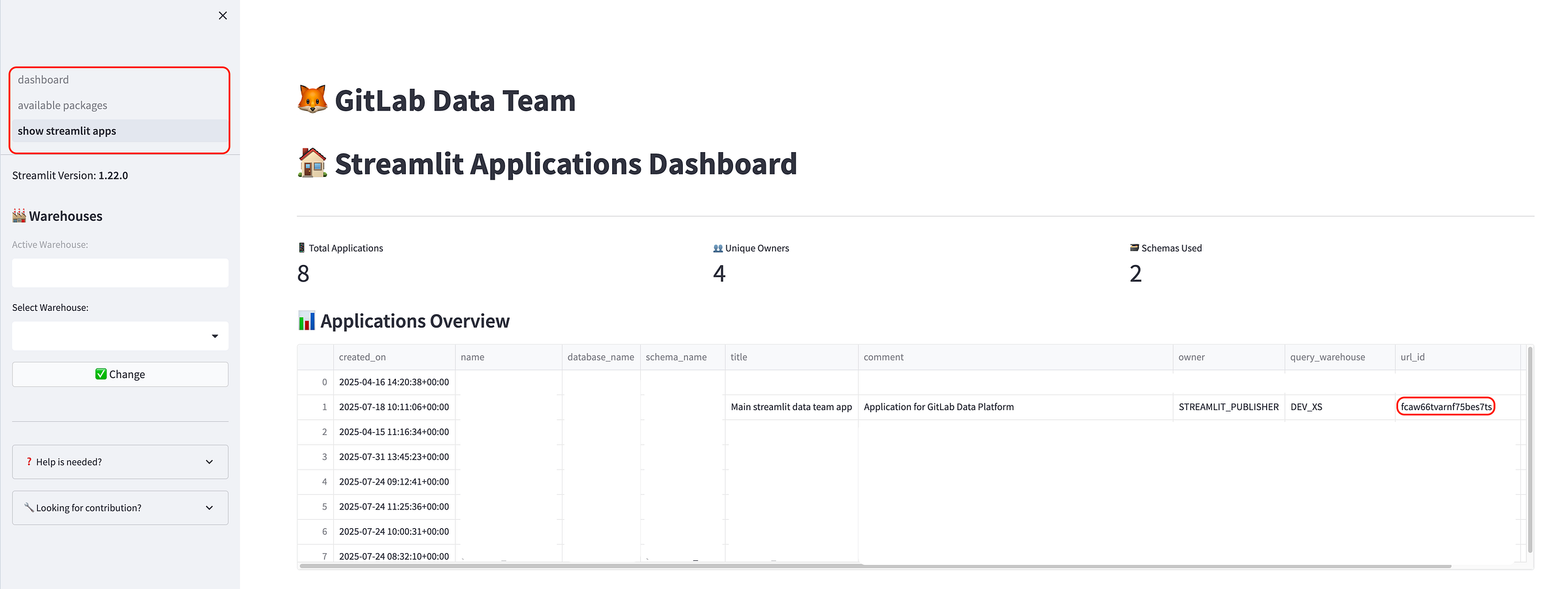

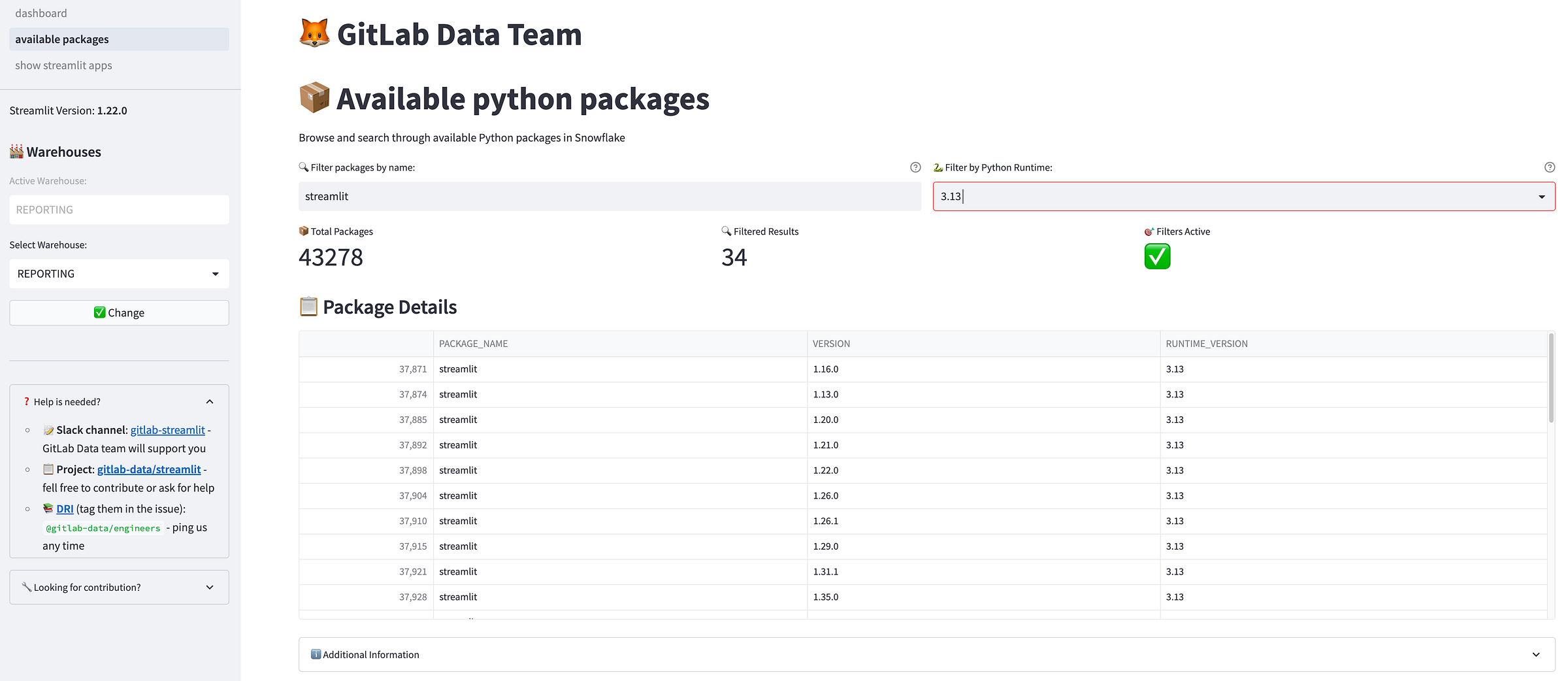

<center><i>Multipage application example (in Snowflake)</i></center>

<p></p>

- Seamless Snowflake integration: Built-in connectors and authentication handling for secure data access provide the same experience, whether in local development or directly in Snowflake.

make streamlit-push-test APPLICATION_NAME=sales_dashboard

📤 Deploying Streamlit app to test environment: sales_dashboard

...

------------------------------------------------------------------------------------------------------------

🔗 Running share command for application: sales_dashboard

Running commands to grant shares

🚀 Executing: snow streamlit share sales_dashboard with SOME_NICE_ROLE

✅ Command executed successfully

📊 Execution Summary: 1/1 commands succeeded

-

Comprehensive Makefile: All common commands are wrapped in simple Makefile commands, from local development to testing and deployment, including CI/CD pipelines.

-

Safe local development: Everything runs in isolated Poetry environments, protecting your system while providing production-like experiences.

<p></p>

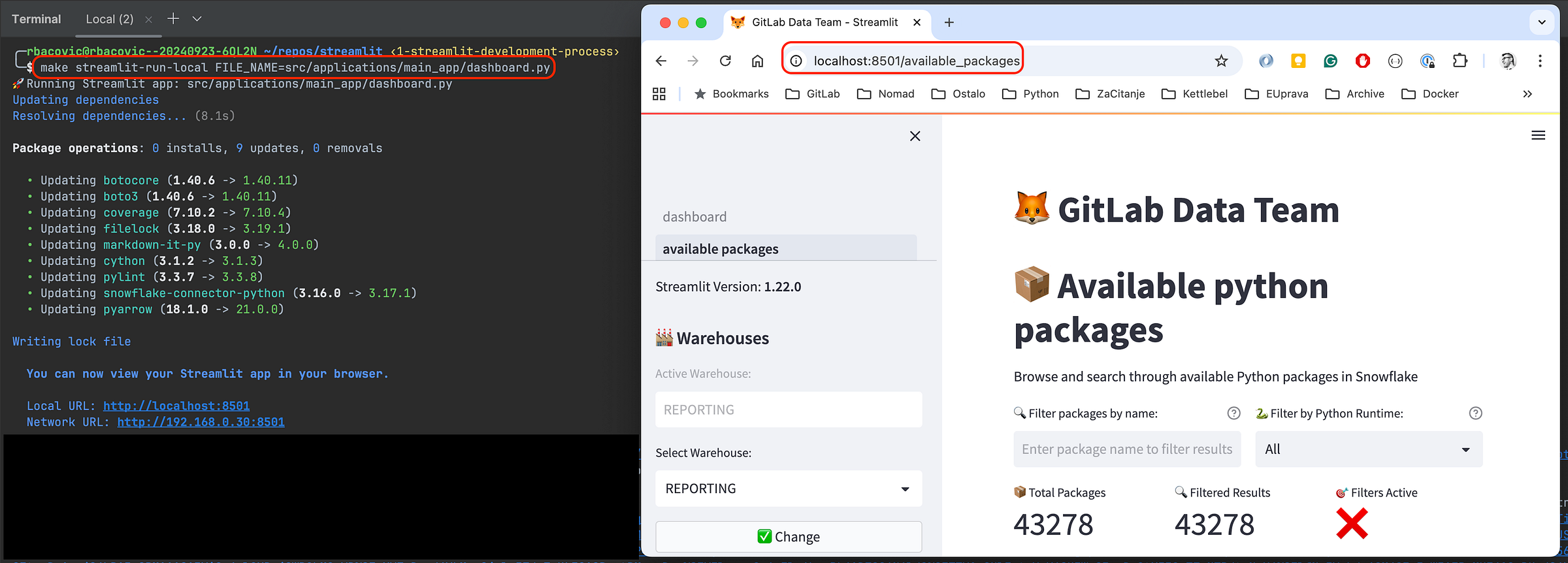

<center><i>Same experience despite the environment (example of the local development)</i></center>

<p></p>

- Collaboration via code: All applications and components are wrapped up in one repository, which allows the entire organization to collaborate on the same resources and avoid double work and redundant setup.

How you can get started

If you're facing similar challenges with scattered Streamlit applications, here's how to begin and move quickly:

-

Assess your current state: Inventory your existing applications and identify pain points.

-

Define your roles: Separate maintainer responsibilities from creator and end users' needs.

-

Start with templates: Create standardized application templates that enforce your security and compliance requirements.

-

Implement CI/CD: Automate your deployment pipeline to reduce manual errors and ensure consistency.

<p></p>

<center><i>The application deployed in Snowflake</i></center>

The bigger picture

This framework represents more than just a technical solution — it's a paradigm shift toward treating data applications as first-class citizens in your enterprise (data) architecture.

By providing structure without sacrificing flexibility, the GitLab Data team created an environment where anyone in the company with minimal technical knowledge can innovate rapidly while maintaining the highest standards of security and compliance.

What's next?

We're continuing to enhance the framework based on user feedback and emerging needs. Future improvements include expanded template libraries, enhanced monitoring capabilities, more flexibility, and a smoother user experience.

The goal isn't just to solve today's problems, but to create a foundation that scales with your organization's growing data application needs.

Summary

The GitLab Data Team transformed dozens of scattered, insecure Streamlit applications with no standardization into a unified, enterprise-grade framework that separates roles cleanly:

-

Maintainers handle infrastructure and security.

-

Creators focus on building applications without deployment headaches.

-

Viewers access polished, compliant apps.

And we used these building blocks:

-

Automated CI/CD pipelines

-

Fully collaborative and versioned code in git

-

Template-based development

-

Built-in security compliance, testing

-

Poetry-managed environments

We eliminated the maintenance nightmare while enabling rapid innovation — proving that you can have both structure and flexibility when you treat data applications as first-class enterprise assets rather than throwaway prototypes.

]]>Here's how to configure object storage for maximum performance, security,

and reliability across your GitLab components.

Use consolidated form for GitLab components

For artifacts, LFS, uploads, packages, and other GitLab data, eliminate credential duplication with the consolidated form:

gitlab_rails['object_store']['enabled'] = true

gitlab_rails['object_store']['connection'] = {

'provider' => 'AWS',

'region' => 'us-east-1',

'use_iam_profile' => true

}

gitlab_rails['object_store']['objects']['artifacts']['bucket'] = 'gitlab-artifacts'

gitlab_rails['object_store']['objects']['lfs']['bucket'] = 'gitlab-lfs'

# ... additional buckets for each object type

This reduces complexity while enabling encrypted S3 buckets and proper Content-MD5 headers.

Configure container registry separately

The container registry requires its own configuration since it doesn't support the consolidated form:

registry['storage'] = {

's3_v2' => { # Use the new v2 driver

'bucket' => 'gitlab-registry',

'region' => 'us-east-1',

# Omit access keys to use IAM roles

}

}

Note: The s3_v1 driver is deprecated and will be removed in GitLab 19.0. Migrate to s3_v2 for better performance and reliability.

Disable proxy download for performance

Set proxy_download to false (default) for direct downloads:

# For GitLab objects - can be set globally

gitlab_rails['object_store']['proxy_download'] = false

# Or configure per bucket for granular control

gitlab_rails['object_store']['objects']['artifacts']['proxy_download'] = false

gitlab_rails['object_store']['objects']['lfs']['proxy_download'] = false

gitlab_rails['object_store']['objects']['uploads']['proxy_download'] = true # Example: keep proxy for uploads

# Container registry defaults to redirect mode (direct downloads)

# Only disable if your environment requires it:

registry['storage']['redirect']['disable'] = false # Keep as false

Important: The proxy_download option can be configured globally at the object-store level or individually per bucket. This gives you flexibility to optimize based on your specific use case — for example, you might want direct downloads for large artifacts and LFS files, but proxy smaller uploads through GitLab for additional security controls.

This dramatically reduces server load and egress costs by letting clients download directly from object storage.

Choose identity-based authentication

AWS: Use IAM roles instead of access keys:

# GitLab objects

gitlab_rails['object_store']['connection'] = {

'provider' => 'AWS',

'use_iam_profile' => true

}

# Container registry

registry['storage'] = {

's3_v2' => {

'bucket' => 'gitlab-registry',

'region' => 'us-east-1'

# No access keys = IAM role authentication

}

}

Google Cloud Platform: Enable application default credentials:

gitlab_rails['object_store']['connection'] = {

'provider' => 'Google',

'google_application_default' => true

}

Azure: Use workload identities by omitting storage access keys.

Add encryption layers

Enable server-side encryption for additional security:

# GitLab objects

gitlab_rails['object_store']['storage_options'] = {

'server_side_encryption' => 'AES256'

}

# Container registry

registry['storage'] = {

's3_v2' => {

'bucket' => 'gitlab-registry',

'encrypt' => true

}

}

For AWS KMS encryption, specify the key ARN in server_side_encryption_kms_key_id.

Use separate buckets for organization

Create dedicated buckets for each component:

-

gitlab-artifacts - CI/CD job artifacts

-

gitlab-lfs - Git LFS objects

-

gitlab-uploads - User uploads

-

gitlab-packages - Package registry

-

gitlab-registry - Container images

This isolation improves security, enables granular access controls, and simplifies cost tracking.

Key configuration differences

| Component | Consolidated Form | Identity Auth | Encryption | Direct Downloads |

|---|---|---|---|---|

| Artifacts, LFS, Packages | ✅ Supported | ✅ use_iam_profile | ✅ storage_options | ✅ proxy_download: false |

| Container Registry | ❌ Separate config | ✅ Omit access keys | ✅ encrypt: true | ✅ redirect enabled by default |

Migration path

-

Start with GitLab objects: Use the consolidated form for immediate complexity reduction.

-

Configure registry separately: Use s3_v2 driver with IAM authentication.

-

Enable encryption: Add server-side encryption for both components.

-

Optimize performance: Ensure direct downloads are enabled with appropriate

proxy_downloadsettings. -

Set up lifecycle policies: Configure S3 lifecycle rules to clean up incomplete multipart uploads.

Additional resources

For a complete AWS S3 configuration example, see the GitLab documentation on AWS S3 object storage setup.

For more details on configuring proxy_download parameters per bucket, refer to the GitLab object storage configuration documentation.

These configurations will scale with your growth while maintaining security and performance. The separation between GitLab object storage and container registry configurations reflects their different underlying architectures, but both benefit from the same optimization principles.

]]>I recently spoke with a platform engineer at a Fortune 500 company who told me, "I spend more time managing artifact repositories than I do on actual platform improvements." That conversation reminded me why we need an honest discussion about the real costs of fragmented artifact management — and what platform teams can realistically do about it. This article will help you better understand the problem and how GitLab can help you solve it through strategic consolidation.

Real-world impact: The numbers

Based on data from our customers and industry research, fragmented artifact management typically results in the following costs for a midsize organization (500+ developers):

- Licensing: $50,000-200,000 annually across multiple tools

- Operational overhead: 2-3 FTE's equivalent time spent on artifact management tasks

- Storage inefficiency: 20%-30% higher storage costs due to duplication and poor lifecycle management

- Developer productivity loss: 15-20 minutes daily per developer due to artifact-related friction

For large enterprises, these numbers multiply significantly. One customer calculated they were spending over $500,000 annually just on the operational overhead of managing seven different artifact storage systems.

The hidden costs compound daily:

Time multiplication: Every lifecycle policy, security rule, or access control change must be implemented across multiple systems. What should be a 15-minute configuration becomes hours of work.

Security gap risks: Managing security policies across disparate systems creates blind spots. Vulnerability scanning, access controls, and audit trails become fragmented.

Context switching tax: Developers lose productivity when they can't find artifacts or need to remember which system stores what.

The multiplication problem

The artifact management landscape has exploded. Where teams once managed a single Maven repository, today's platform engineers juggle:

- Container registries (Docker Hub, ECR, GCR, Azure ACR)

- Package repositories (JFrog Artifactory, Sonatype Nexus)

- Language-specific registries (npm, PyPI, NuGet, Conan)

- Infrastructure artifacts (Terraform modules, Helm charts)

- ML model registries (MLflow, Weights & Biases)

Each tool comes with its own authentication system, lifecycle policies, security scanning, and operational requirements. For organizations with hundreds or thousands of projects, this creates an exponential management burden.

GitLab's strategic approach: Depth over breadth

When we started building GitLab's artifact management capabilities six years ago, we faced a classic product decision: support every artifact format imaginable or go deep on the formats that matter most to enterprise teams. We chose depth, and that decision has shaped everything we've built since.

Our core focus areas

Instead of building shallow support for 20+ formats, we committed to delivering enterprise-grade capabilities for a strategic set:

- Maven (Java ecosystem)

- npm (JavaScript/Node.js)

- Docker/OCI (container images)

- PyPI (Python packages)

- NuGet (C#/.NET packages)

- Generic packages (any binary artifact)

- Terraform modules (infrastructure as code)

These seven formats account for approximately 80% of artifact usage in enterprise environments, based on our customer data.

What 'enterprise-grade' actually means

By focusing on fewer formats, we can deliver capabilities that work in production environments with hundreds of developers, terabytes of artifacts, and strict compliance requirements:

Virtual registries: Proxy and cache upstream dependencies for reliable builds and supply chain control. Currently production-ready for Maven, with npm and Docker coming in early 2026.

Lifecycle management: Automated cleanup policies that prevent storage costs from spiraling while preserving artifacts for compliance. Available at the project level today, organization-level policies planned for mid-2026.

Security integration: Built-in vulnerability scanning, dependency analysis, and policy enforcement. Our upcoming Dependency Firewall (planned for late 2026) will provide supply chain security control across all formats.

Deep CI/CD integration: Complete traceability from source commit to deployed artifact, with build provenance and security scan results embedded in artifact metadata.

Current capabilities: Battle-tested features

Maven virtual registries: Our flagship enterprise capability, proven with 15+ enterprise customers. Most complete Maven virtual registry setup within two months, with minimal GitLab support required.

Locally-hosted repositories: All seven supported formats offer complete upload, download, versioning, and access control capabilities supporting critical workloads at organizations with thousands of developers.

Protected artifacts: Comprehensive protection preventing unauthorized modifications, supporting fine-grained access controls across all formats.

Project-level lifecycle policies: Automated cleanup and retention policies for storage cost control and compliance.

Performance and scale characteristics

Based on current production deployments:

- Throughput: 10,000+ artifact downloads per minute/per instance

- Storage: Customers successfully managing 50+ TB of artifacts

- Concurrent users: 1,000+ developers accessing artifacts simultaneously

- Availability: 99.99% uptime for GitLab.com for more than 2 years

Strategic roadmap: Next 18 months

Q1 2026

- npm virtual registries: Enterprise proxy/cache for JavaScript packages

- Docker virtual registries: Container registry proxy capabilities

Q2 2026

- Organization-level lifecycle policies (Beta): Centralized cleanup policies with project overrides

- NuGet virtual registries (Beta): .NET package proxy support

- PyPI virtual registries (Beta): Completing virtual registry support for Python

Q3 2026

- Advanced Analytics Dashboard: Storage optimization and usage insights

Q4 2026

- Dependency Firewall (Beta): Supply chain security control for all artifact types

When to choose GitLab: Decision framework

GitLab is likely the right choice if:

- 80%+ of your artifacts are in our seven supported formats

- You're already using GitLab for source code or CI/CD

- You value integrated workflows over standalone feature richness

- You want to reduce the operational complexity of managing multiple systems

- You need complete traceability from source to deployment

Migration considerations

Typical timeline: 2-4 months for complete migration from Artifactory/Nexus

Common challenges: Virtual registry configuration, access control mapping, and developer workflow changes

Success factors: Phased approach, comprehensive testing, and developer training

Most successful migrations follow this pattern:

- Assessment (2-4 weeks): Catalog current artifacts and usage patterns

- Pilot (4-6 weeks): Migrate one team/project end-to-end

- Rollout (6-12 weeks): Gradual migration with parallel systems

- Optimization (ongoing): Implement advanced features and policies

Better artifact management can start today

GitLab's artifact management isn't trying to be everything to everyone. We've made strategic trade-offs: deep capabilities for core enterprise formats rather than shallow support for everything.

If your artifact needs align with our supported formats and you value integrated workflows, we can significantly reduce your operational overhead while improving developer experience.

Our goal is to help you make informed decisions about your artifact management strategy with a clear understanding of capabilities and our roadmap.

Please reach out to me at trizzi@gitlab.com to learn more about GitLab artifact management. I can discuss specific requirements and connect you with our technical team for a deeper evaluation.

This blog contains information related to upcoming products, features, and functionality. It is important to note that the information in this blog post is for informational purposes only. Please do not rely on this information for purchasing or planning purposes. As with all projects, the items mentioned in this blog and linked pages are subject to change or delay. The development, release, and timing of any products, features, or functionality remain at the sole discretion of GitLab.

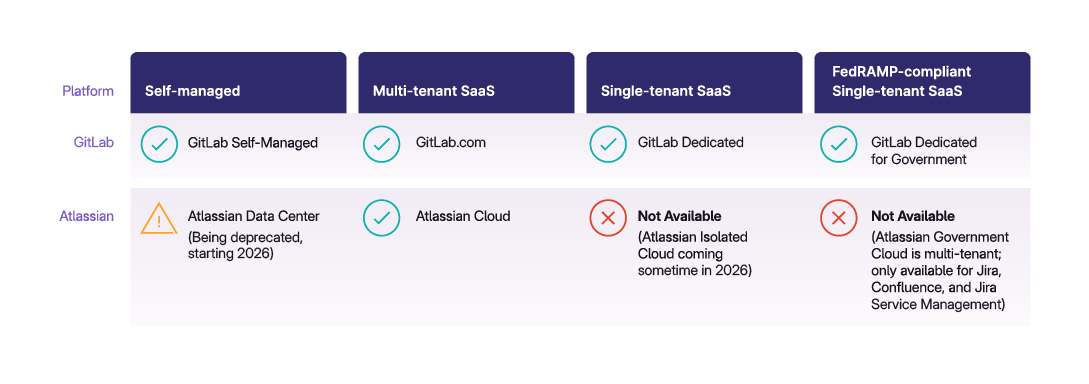

]]>While other vendors force migrations to cloud-only architectures, GitLab remains committed to supporting the deployment choices that match your business needs. Whether you're managing sensitive government data, operating in air-gapped environments, or simply prefer the control of self-managed deployments, we understand that one size doesn't fit all.

The cloud isn't the answer for everyone

For the many companies that invested millions of dollars in Data Center deployments, including those that migrated to Data Center after its Server products were discontinued, this announcement represents more than a product sunset. It signals a fundamental shift away from customer-centric architecture choices, forcing enterprises into difficult positions: accept a deployment model that doesn't fit their needs, or find a vendor that respects their requirements.

Many of the organizations requiring self-managed deployments represent some of the world's most important organizations: healthcare systems protecting patient data, financial institutions managing trillions in assets, government agencies safeguarding national security, and defense contractors operating in air-gapped environments.

These organizations don't choose self-managed deployments for convenience; they choose them for compliance, security, and sovereignty requirements that cloud-only architectures simply cannot meet. Organizations operating in closed environments with restricted or no internet access aren't exceptions — they represent a significant portion of enterprise customers across various industries.

The real cost of forced cloud migration goes beyond dollars

While cloud-only vendors frame mandatory migrations as "upgrades," organizations face substantial challenges beyond simple financial costs:

-

Lost integration capabilities: Years of custom integrations with legacy systems, carefully crafted workflows, and enterprise-specific automations become obsolete. Organizations with deep integrations to legacy systems often find cloud migration technically infeasible.

-

Regulatory constraints: For organizations in regulated industries, cloud migration isn't just complex — it's often not permitted. Data residency requirements, air-gapped environments, and strict regulatory frameworks don't bend to vendor preferences. The absence of single-tenant solutions in many cloud-only approaches creates insurmountable compliance barriers.

-

Productivity impacts: Cloud-only architectures often require juggling multiple products: separate tools for planning, code management, CI/CD, and documentation. Each tool means another context switch, another integration to maintain, another potential point of failure. GitLab research shows 30% of developers spend at least 50% of their job maintaining and/or integrating their DevSecOps toolchain. Fragmented architectures exacerbate this challenge rather than solving it.

GitLab offers choice, commitment, and consolidation

Enterprise customers deserve a trustworthy technology partner. That's why we've committed to supporting a range of deployment options — whether you need on-premises for compliance, hybrid for flexibility, or cloud for convenience, the choice remains yours. That commitment continues with GitLab Duo, our AI solution that supports developers at every stage of their workflow.

But we offer more than just deployment flexibility. While other vendors might force you to cobble together their products into a fragmented toolchain, GitLab provides everything in a comprehensive AI-native DevSecOps platform. Source code management, CI/CD, security scanning, Agile planning, and documentation are all managed within a single application and a single vendor relationship.

This isn't theoretical. When Airbus and Iron Mountain evaluated their existing fragmented toolchains, they consistently identified challenges: poor user experience, missing functionalities like built-in security scanning and review apps, and management complexity from plugin troubleshooting. These aren't minor challenges; they're major blockers for modern software delivery.

Your migration path: Simpler than you think

We've helped thousands of organizations migrate from other vendors, and we've built the tools and expertise to make your transition smooth:

-

Automated migration tools: Our Bitbucket Server importer brings over repositories, pull requests, comments, and even Large File Storage (LFS) objects. For Jira, our built-in importer handles issues, descriptions, and labels, with professional services available for complex migrations.

-

Proven at scale: A 500 GiB repository with 13,000 pull requests, 10,000 branches, and 7,000 tags is likely to take just 8 hours to migrate from Bitbucket to GitLab using parallel processing.

-

Immediate ROI: A Forrester Consulting Total Economic Impact™ study commissioned by GitLab found that investing in GitLab Ultimate confirms these benefits translate to real bottom-line impact, with a three-year 483% ROI, 5x time saved in security related activities, and 25% savings in software toolchain costs.

Start your journey to a unified DevSecOps platform

Forward-thinking organizations aren't waiting for vendor-mandated deadlines. They're evaluating alternatives now, while they have time to migrate thoughtfully to platforms that protect their investments and deliver on promises.

Organizations invest in self-managed deployments because they need control, compliance, and customization. When vendors deprecate these capabilities, they remove not just features but the fundamental ability to choose environments matching business requirements.

Modern DevSecOps platforms should offer complete functionality that respects deployment needs, consolidates toolchains, and accelerates software delivery, without forcing compromises on security or data sovereignty.

Talk to our sales team today about your migration options, or explore our comprehensive migration resources to see how thousands of organizations have already made the switch.

You also can try GitLab Ultimate with GitLab Duo Enterprise for free for 30 days to see what a unified DevSecOps platform can do for your organization.

]]>GitLab's managed lifecycle environments solve these virtual testing challenges. Through virtual environment automation, GitLab accelerates embedded development cycles without the configuration complexity and cost overruns.

Virtual testing challenges

Virtual testing environments — simulated hardware setups that replicate embedded system behavior and real-world conditions — offer the potential to reduce hardware bottlenecks. Teams can test firmware on simulated processors, run model-in-the-loop (MIL) tests in MATLAB/Simulink, or verify software on virtual embedded systems without waiting for physical hardware access.

However, teams often implement virtual environments using one of two common approaches, both of which create unsustainable challenges.

Flawed approach 1: Pipeline lifecycle environments

Pipeline lifecycle environments re-create the entire testing setup for every CI/CD run. When code changes trigger your CI/CD pipeline, the system provisions infrastructure, installs software simulations, and configures everything from scratch before running tests.

This approach works for simple scenarios but becomes inefficient as complexity rises. Consider software-in-the-loop (SIL) testing in a complex virtual environment, for example. Each pipeline run requires complete environment re-creation, including virtual processor provisioning, toolchain installations, and target configurations. These processes can eat up considerable time.

Moreover, as embedded systems require more sophisticated virtual hardware configurations, the provisioning costs quickly add up.

To avoid these rebuild costs and delays, many teams turn to long-lived environments that persist between test runs. But they come with downsides.

Flawed approach 2: Long-lived environments

Long-lived environments persist indefinitely to avoid constant rebuilding. Developers request these environments from IT or DevOps teams, wait for approval, then need someone to manually provision the infrastructure. These environments are then tied to individual developers/teams rather than specific code changes, and they support ongoing development work across multiple projects.

While this eliminates rebuild overhead, it creates environment sprawl. Environments accumulate without a clear termination date. Infrastructure costs climb as environments consume resources indefinitely.

Long-lived environments also suffer from "config rot" — environments retain settings, cached data, or software versions from previous tests that can affect subsequent results. A test that should fail ends up passing due to the residue of previous testing.

Ultimately, managing long-lived environments is a manual process that slows development velocity and increases operational overhead.

GitLab offers a third approach through “managed lifecycle environments.” This approach captures the benefits of both long-lived and pipeline lifecycle environments while avoiding the drawbacks.

Solution: Managed lifecycle environments

GitLab's managed lifecycle environments tie virtual testing setups to merge requests (MRs) rather than pipeline runs or individual developers. You can also think of them as “managed MR test environments.” When you create an MR for a new feature, GitLab automatically orchestrates the provisioning of necessary virtual testing environments. These environments persist throughout the entire feature development process.

Key benefits

-

Persistent environments without rebuilding: The same virtual environment handles multiple pipeline runs as you iterate on your feature. Whether you're running MIL tests in MATLAB/Simulink or SIL tests on specialized embedded processors, the environment remains configured and ready.

-

Automatic cleanup: When you merge your feature and delete the branch, GitLab automatically triggers environment cleanup, eliminating environment sprawl.

-

Single source of truth: The MR records all build results, test outcomes, and environment metadata in one location. Team members can track progress and collaborate without shuffling between different tools or spreadsheets.

Watch this overview video to see how managed lifecycle environments work in practice:

<!-- blank line --> <figure class="video_container"> <iframe src="https://www.youtube.com/embed/9tfyVPK5DuI?si=Kj_xXNo02bnFBDhy" frameborder="0" allowfullscreen="true"> </iframe> </figure> <!-- blank line -->

GitLab automates the entire testing workflow. Each time you run firmware tests, GitLab orchestrates testing in the appropriate virtual environment, records results, and provides full visibility into every pipeline run. This approach transforms complex virtual testing from a manual, error-prone process into automated, reliable workflows.

The result: Teams get reusable environments without runaway costs. And they increase efficiency while maintaining clean, isolated testing setups for each feature.

See a demonstration of managed lifecycle environments for testing firmware on virtual hardware:

<!-- blank line --> <figure class="video_container"> <iframe src="https://www.youtube.com/embed/iWdY-kTlpH4?si=D6rpoulr9sv6Sl6E" frameborder="0" allowfullscreen="true"> </iframe> </figure> <!-- blank line -->

Business impact

GitLab's managed lifecycle environments deliver measurable improvements across embedded development workflows. Teams running MIL testing in MATLAB/Simulink and SIL testing on specialized processors like Infineon AURIX or BlackBerry QNX systems no longer face the tradeoff between constant environment rebuilds or uncontrolled environment sprawl. Instead, these complex virtual testing setups persist throughout feature development while automatically cleaning up when complete, enabling:

- Faster product development cycles

- Shorter time-to-market

- Lower infrastructure costs

- Higher quality assurance

Start transforming virtual testing today

Download “Unlocking agility and avoiding runaway costs in embedded development” for a deeper exploration of managed lifecycle environments and learn how to accelerate embedded development workflows dramatically.

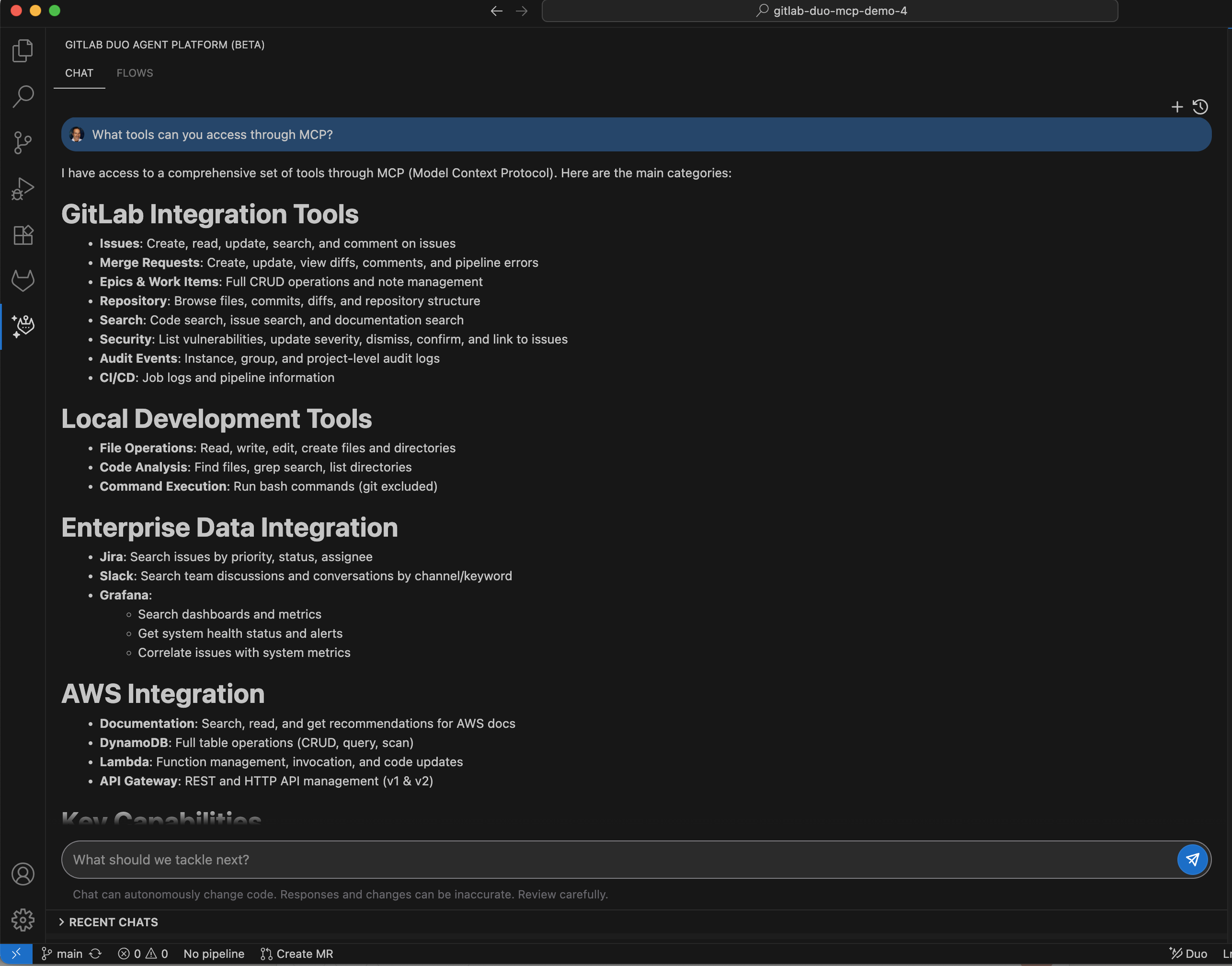

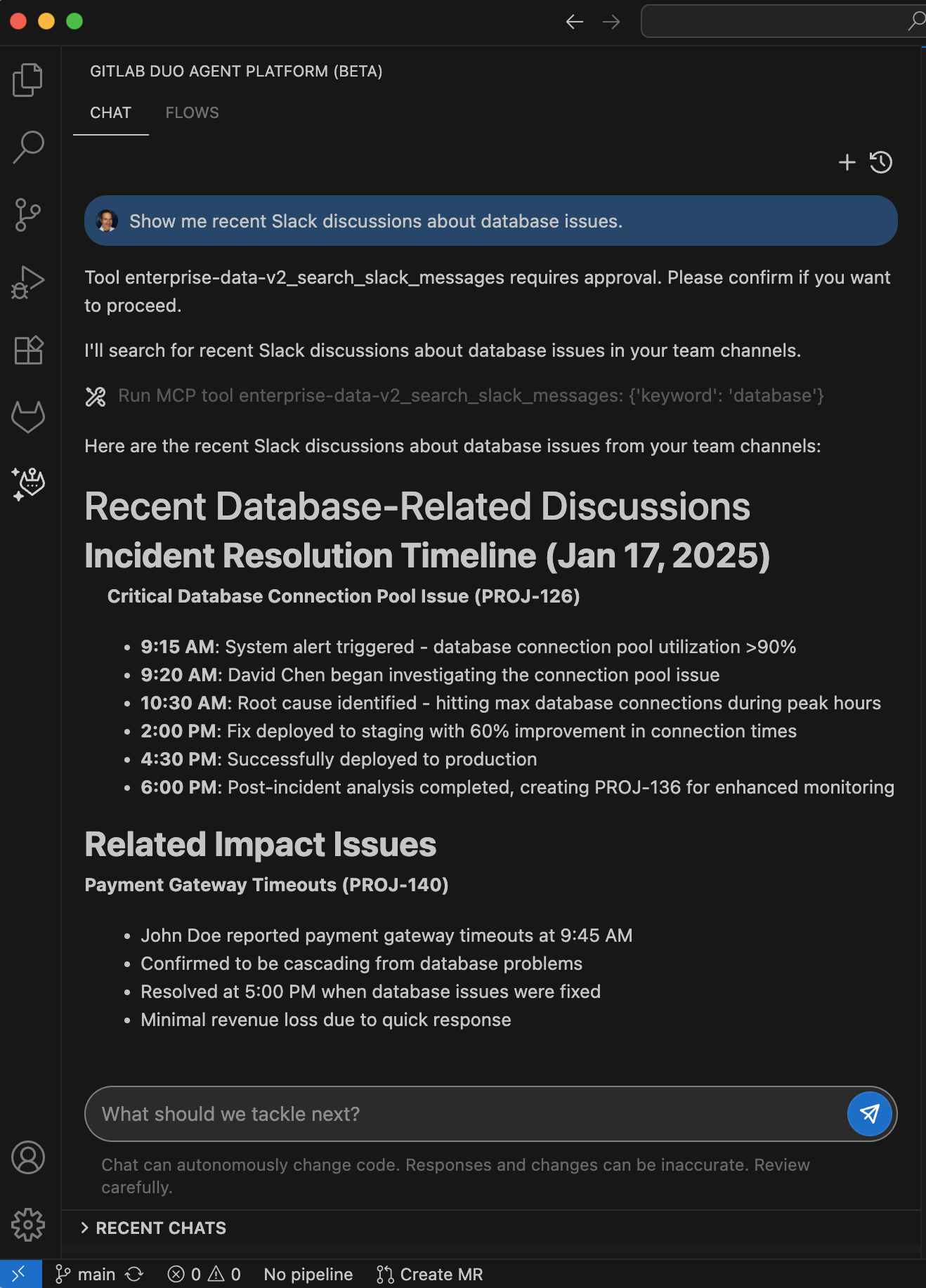

]]>Users now have the flexibility to choose Claude Sonnet 4.5 alongside other leading models, enhancing their GitLab Duo experience with upgrades in tool orchestration, context editing, and domain-specific capabilities. With top performance on SWE-bench Verified (77.2%) and strengths in cybersecurity, finance, and research-heavy workflows, GitLab users can apply Claude Sonnet 4.5 to bring sharper insights and deeper context to their development work.

"Having Claude Sonnet 4.5 in GitLab is a big win for developers. It’s a really capable coding model, and, when you use it with the GitLab Duo Agent Platform, you get smarter help right in your workflows. It’s the kind of step that makes development easier," said Taylor McCaslin, Principal, Strategy and Operations for AI Partnerships at GitLab.

GitLab Duo Agent Platform + Claude Sonnet 4.5

GitLab Duo Agent Platform extends the value of Claude Sonnet 4.5 by orchestrating agents, connecting them to internal systems, and integrating them throughout the software lifecycle. This combination creates a uniquely GitLab experience — where advanced reasoning and problem-solving meet platform-wide context and security. The result is faster development, more accurate outcomes, and stronger organizational coverage, all delivered inside the GitLab workflow developers already use every day.

Where you can use Claude Sonnet 4.5

Claude Sonnet 4.5 is now available as a model option in GitLab Duo Agent Platform Agentic Chat on GitLab.com. You can choose Claude Sonnet 4.5 from the model selection dropdown to leverage its advanced coding capabilities for your development tasks.

Note: Ability to select Claude Sonnet 4.5 in supported IDEs will be available soon.

Get started

GitLab Duo Pro and Enterprise customers can access Claude Sonnet 4.5 today. Visit our documentation to learn more about GitLab Duo capabilities and models.

Questions or feedback? Share your experience with us through the GitLab community.

]]>Want to try GitLab Ultimate with Duo Enterprise? Sign up for a free trial today.

Agentic AI is a type of artificial intelligence that leverages advanced language models and natural language processing to take independent action. Unlike traditional generative AI tools that require constant human direction, these systems can understand requests, make decisions, and execute multi-step plans to achieve goals. They tackle complex tasks by breaking them into manageable steps and employ adaptive learning to modify their approach when facing challenges.

Agentic AI insights

- Transform development with agentic AI: The enterprise guide

- GitLab 18.4: AI-native development with automation and insight With GitLab 18.4, teams create custom agents, unlock Knowledge Graph context, and auto-fix pipelines so developers stay focused and in flow.

- GitLab 18.3: Expanding AI orchestration in software engineering Learn how we're advancing human-AI collaboration with enhanced Flows, enterprise governance, and seamless tool integration.

- GitLab Duo Agent Platform Public Beta: Next-gen AI orchestration and more — Introducing the DevSecOps orchestration platform designed to unlock asynchronous collaboration between developers and AI agents.

- GitLab Duo Agent Platform: What's next for intelligent DevSecOps — GitLab Duo Agent Platform, a DevSecOps orchestration platform for humans and AI agents, leverages agentic AI for collaboration across the software development lifecycle.

- From vibe coding to agentic AI: A roadmap for technical leaders — Discover how to implement vibe coding and agentic AI in your development process to increase productivity while maintaining code quality and security.

- Emerging agentic AI trends reshaping software development — Discover how agentic AI transforms development from isolated coding to intelligent workflows that enhance productivity while maintaining security.

- Agentic AI: Unlocking developer potential at scale — Explore how agentic AI is transforming software development, moving beyond code completion to create AI partners that proactively tackle complex tasks.

- Agentic AI, self-hosted models, and more: AI trends for 2025 — Discover key trends in AI for software development, from on-premises model deployments to intelligent, adaptive AI agents.

- How agentic AI unlocks platform engineering potential — Explore how agentic AI elevates platform engineering by automating complex workflows and scaling standardization.

The agentic AI ecosystem

- AI-driven code analysis: The new frontier in code security

- DevOps automation & AI agents

- AI-augmented software development: Agentic AI for DevOps

Best practices for implementing agentic AI

- Implementing effective guardrails for AI agents — Discover essential security guardrails for AI agents in DevSecOps, from compliance controls and infrastructure protection to user access management.

GitLab's agentic AI offerings

GitLab Duo with Amazon Q

- GitLab Duo with Amazon Q: Agentic AI optimized for AWS generally available — The comprehensive AI-powered DevSecOps platform combined with the deepest set of cloud computing capabilities speeds dev cycles, increases automation, and improves code quality.

- DevSecOps + Agentic AI: Now on GitLab Self-Managed Ultimate on AWS — Start using AI-powered, DevSecOps-enhanced agents in your AWS GitLab Self-Managed Ultimate instance. Enjoy the benefits of GitLab Duo and Amazon Q in your organization.

- GitLab Duo with Amazon Q partner page

Watch GitLab Duo with Amazon Q in action:

<div style="padding:56.25% 0 0 0;position:relative;"><iframe src="https://player.vimeo.com/video/1075753390?badge=0&autopause=0&player_id=0&app_id=58479" frameborder="0" allow="autoplay; fullscreen; picture-in-picture; clipboard-write; encrypted-media" style="position:absolute;top:0;left:0;width:100%;height:100%;" title="Technical Demo: GitLab Duo with Amazon Q"></iframe></div><script src="https://player.vimeo.com/api/player.js"></script>

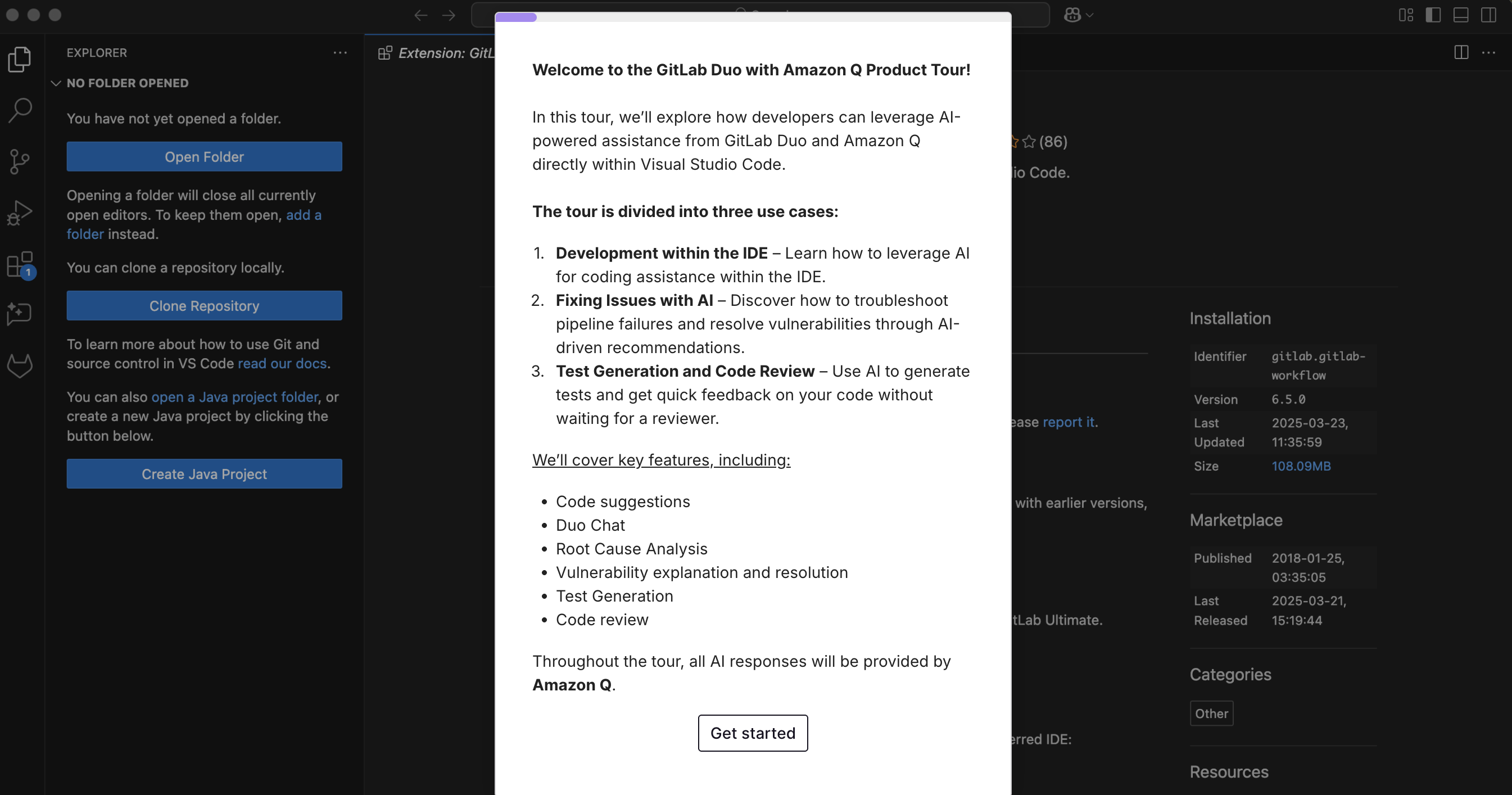

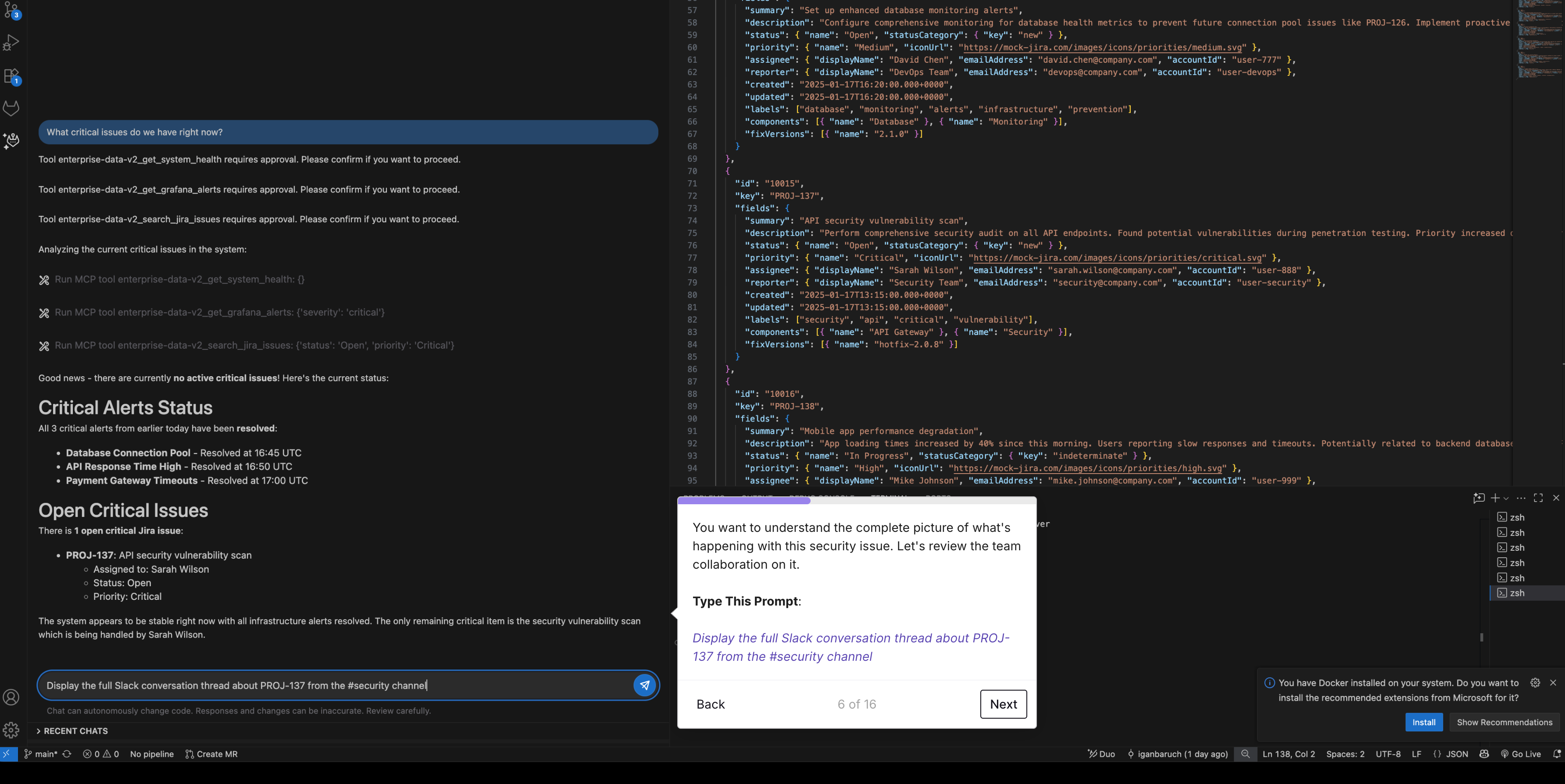

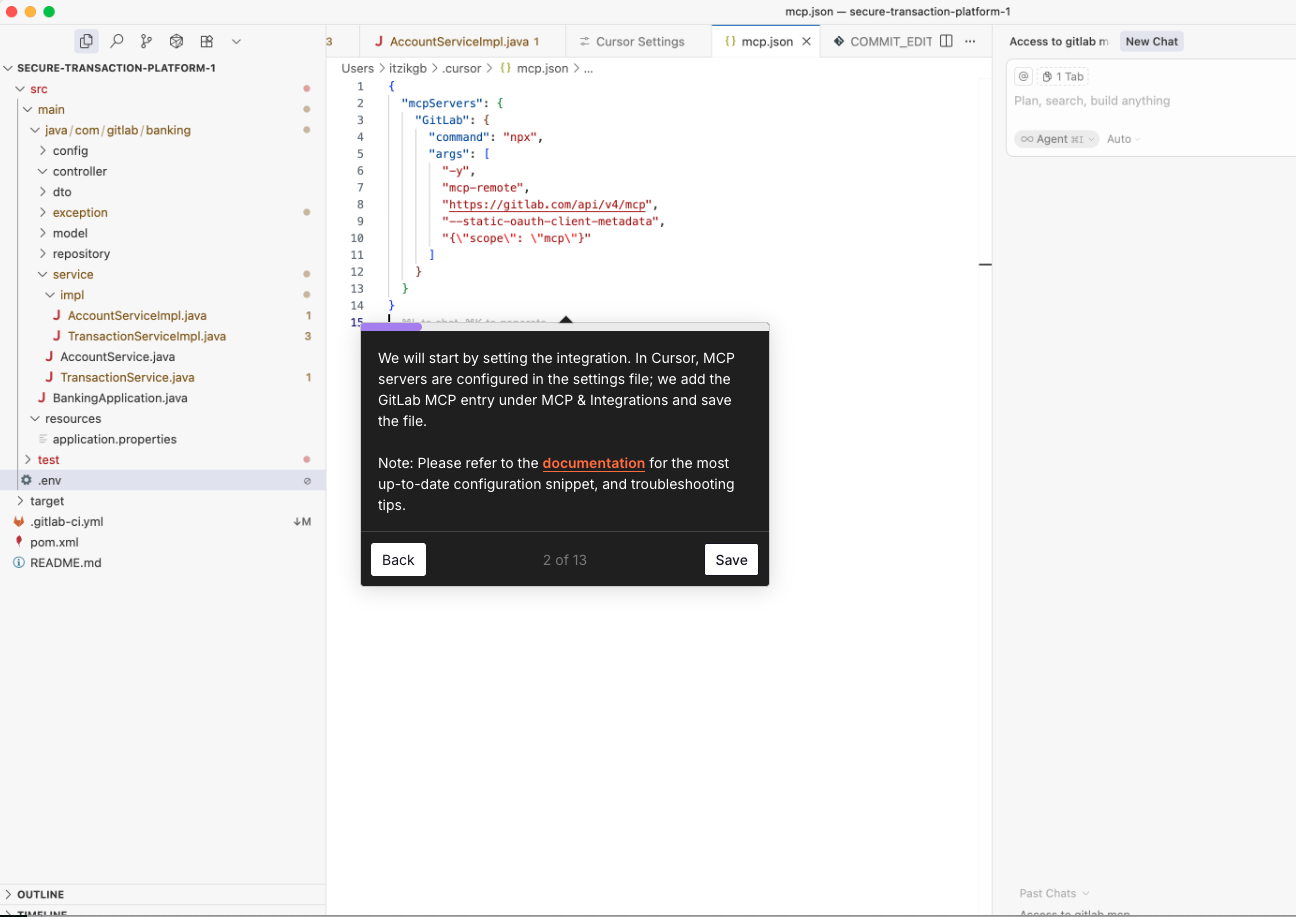

Guided tour

Click on the image to start a tour of GitLab Duo with Amazon Q:

GitLab Duo with Amazon Q tutorials

- Enhance application quality with AI-powered test generation — Learn how GitLab Duo with Amazon Q improves the QA process by automatically generating comprehensive unit tests.

- GitLab Duo + Amazon Q: Transform ideas into code in minutes — The new GitLab Duo with Amazon Q integration analyzes your issue descriptions and automatically generates complete working code solutions, accelerating development workflows.

- Accelerate code reviews with GitLab Duo and Amazon Q — Use AI-powered agents to optimize code reviews by automatically analyzing merge requests and providing comprehensive feedback on bugs, readability, and coding standards.

- Speed up code reviews: Let AI handle the feedback implementation — Discover how GitLab Duo with Amazon Q automates the implementation of code review feedback through AI, transforming a time-consuming manual process into a streamlined workflow.

GitLab Duo Agent Platform

- GitLab Duo Chat gets agentic AI makeover — Our new Duo Chat experience, currently an experimental release, helps developers onboard to projects, understand assignments, implement changes, and more. Watch GitLab Duo Agent Platform in action: <div style="padding:56.25% 0 0 0;position:relative;"><iframe src="https://player.vimeo.com/video/1095679084?badge=0&autopause=0&player_id=0&app_id=58479" frameborder="0" allow="autoplay; fullscreen; picture-in-picture; clipboard-write; encrypted-media; web-share" style="position:absolute;top:0;left:0;width:100%;height:100%;" title="Agent Platform Demo Clip"></iframe></div><script src="https://player.vimeo.com/api/player.js"></script>

GitLab Agent Platform tutorials and use cases

- Vibe coding with GitLab Duo Agent Platform: Issue to MR Flow - Learn how to update your application in minutes with our newest agent Flow that takes developers from idea to code.

- Get started with GitLab Duo Agentic Chat in the web UI - Learn about our new GitLab Duo AI feature that automates tasks by breaking down complex problems and executing operations across multiple sources.