Image Annotation on the Semantic Web

Editors' Draft $Date: 2007/02/15 16:03:24 $ $Revision: 1.226 $

- This version:

-

http://www.w3.org/2001/sw/BestPractices/MM/image_annotation.html

- Latest version:

-

http://www.w3.org/2005/Incubator/mmsem/XGR-image-annotation/

- Previous version:

-

http://www.w3.org/TR/2006/WD-swbp-image-annotation-20060322/

- Editors:

- Jacco van Ossenbruggen, Center for Mathematics and Computer Science (CWI Amsterdam)

- Raphaël Troncy, Center for Mathematics and Computer Science (CWI Amsterdam)

- Giorgos Stamou, IVML, National Technical University of Athens

- Jeff Z. Pan, University of Aberdeen (Formerly University of Manchester)

- Contributors:

- Christian Halaschek-Wiener, University of Maryland

- Nikolaos Simou, IVML, National Technical University of Athens

- Vassilis Tzouvaras, IVML, National Technical University of Athens

-

- Also see Acknowledgements.

Copyright

© 2006

W3C

®

(MIT, ERCIM, Keio), All Rights

Reserved. W3C liability,

trademark

and document

use rules apply.

Many applications that process multimedia content make use of

some form of metadata that describe the multimedia content. The

goals of this document are to explain the advantages of using

Semantic Web languages and technologies for the creation,

storage, manipulation, interchange and processing of image

metadata. In addition, it provides guidelines for Semantic

Web-based image annotation, illustrated by use cases. Relevant

RDF and OWL vocabularies are discussed, along with a short

overview of publicly available tools.

After reading this document, readers may

turn to separate documents discussing individual image

annotation vocabularies,

tools,

and other relevant

resources.

Most of the current approaches to image annotation are not based on Semantic

Web languages. Interoperability between these technologies and

RDF and OWL-based approaches is not the topic of this document.

This document is target at everybody with an interest in image

annotation, ranging from non-professional end-users that are

annotating their personal digital photos to professionals

working with digital pictures in image and video banks,

audiovisual archives, museums, libraries, media production and

broadcast industry, etc.

-

To illustrate the benefits of using semantic technologies in image annotations.

-

To provide guidelines for applying semantic technologies in this area.

-

To collect currently used vocabularies for Semantic Web-based

image annotation.

-

To provide use cases with examples of Semantic Web-based

image annotation.

This is an editors' draft intended to become a

Working Group Note produced by the Multimedia

Annotation on the Semantic Web Task Force of the W3C Semantic

Web Best Practices & Deployment Working Group, which

is part of the W3C

Semantic Web activity.

Discussion of this document

is invited on the public mailing list public-swbp-wg@w3.org

(public

archives). Public comments should include "comments: [MM]"

at the start of the Subject header.

Publication as a

Working Group Note does not imply endorsement by the W3C

Membership. This is a draft document and may be updated,

replaced or obsoleted by other documents at any time. It is

inappropriate to cite this document as other than work in

progress. Other documents may supersede this document.

The need for annotating digital image data is recognized in a wide

variety of different applications, covering both professional and

personal usage of image data. At the time of writing, most work

done in this area does not yet use semantic-based technologies.

This document explains the advantages of using Semantic Web

languages and technologies for image annotations and provides

guidelines for doing so. It is organized around a number of

representative use cases, and a description of Semantic Web

vocabularies and tools that could be used to help accomplish the

task mentioned in the uses cases.

The use cases are intended as a representative set of examples,

providing both personal and industrial, and open and closed domain

examples. They will be used later to discuss the vocabularies and

tools that are relevant for image annotation on the Semantic

Web. Example scenarios are given in Section

5. The use cases differ in the topics depicted by the images

or their usage community. These criteria often determine the tools

and vocabularies used in the annotation process.

Many personal users have thousands of digital photos from vacations,

parties, traveling, friends and family, everyday life, etc. Typically, the

photos are stored on personal computer hard drives in a simple

directory structure without any metadata. The user wants generally

to easily access this content, view it, use it in his homepage,

create presentations, make part of it accessible for other people

or even sell part of it to image banks. Too often, however, the

only way for this content to be accessed is by browsing the

directories, their name providing usually the date and the

description with one or two words of the original event captured by

the specific photos. Obviously, this access becomes more and more

difficult as the number of photos increases and the content becomes

quickly unused in practice. More sophisticated users leverage

simple photo organizing tools allowing them to provide keyword

metadata, possibly along with a simple taxonomy of categories. This

is a first step towards a semantically-enabled solution. Section 5.1 provides an example

scenario for this use case using Semantic Web technologies.

Let us imagine that a museum in fine arts has asked a specialized

company to produce high resolution digital scans of the most

important art works of their collections. The museum's quality

assurance requires the possibility to track when, where and by whom

every scan was made, with what equipment, etc. The museum's

internal IT department, maintaining the underlying image database,

needs the size, resolution and format of every resulting image. It

also needs to know the repository ID of the original work of

art. The company developing the museum's website additionally

requires copyright information (that varies for every scan,

depending on the age of the original work of art and the collection

it originates from). It also want to give the users of the website

access to the collection, not only based on the titles of the

paintings and names of their painters, but also based on the topics

depicted ('sun sets'), genre ('self portraits'), style

('post-impressionism'), period ('fin de siècle'), region

('west European'). Section 5.2

shows how all these requirements can be fulfilled using Semantic

Web technologies.

Audiovisual archive centers are used to manage very large

multimedia databases. For instance, INA, the French Audiovisual

National Institute, has been archiving TV documents since the fifties,

the radio documents since the forties, and stores more than 1 million

hours of broadcast programs. They have recently put online and in free access

more than 10000 hours of French TV programs. The images and sound archives kept at

INA are primarily intended for professional use (journalists, film

directors, producers, audiovisual and multimedia programmers and

publishers, in France and worldwide) or communicated for research

purposes (for a public of students, research workers, teachers and

writers), but they are, now, more and more available for the general public.

In order to allow an efficient access to the data stored,

most of the parts of these video documents are described and

indexed by their content. The global multimedia information system

should then be fine-grain enough detailed to support some very

complex and precise queries. For example, a journalist or a film

director client might ask for an excerpt of a previously

broadcasted program showing the first goal of a given football

player in its national team, scored with its head. The query could

additionally contain some more technical requirements such that the

goal action should be available according to both the front camera

view and the reverse angle camera view. Finally, the client might

or might not remember some general information about this football

game, such that the date, the place and the final score. Section 5.3 gives a possible

solution for this use case using Semantic Web technologies.

Many organizations maintain extremely large-scale image

collections. The National Aeronautics and Space Administration

(NASA), for example, has hundreds of thousands of images, stored in

different formats, levels of availability and resolution, and with

associated descriptive information at various levels of detail and

formality. Such an organization also generates thousands of images

on an ongoing basis that are collected and cataloged. Thus, a

mechanism is needed to catalog all the different types of image

content across various domains. Information about both the image

itself (e.g., its creation date, dpi, source) and about the

specific content of the image is required. Additionally, the

associated metadata must be maintainable and extensible so that

associated relationships between images and data can evolve

cumulatively. Lastly, management functionality should provide

mechanisms flexible enough to enforce restriction based on content

type, ownership, authorization, etc. Section 5.4 gives an example solution for

this use case.

An health-care provider might be interested in annotating medical

images for clinical applications, education or other purposes.

Diagnostic images involved in patient care need to be properly

annotated from different points of view: the image modality

(radiograph, MRI, etc) and its acquisition parameters (including

time), the patient and anatomical body part depicted by the

image, the associated report data such as diagnosis reports and

electronic patient records. When such images are used for

medical education, anonymization is needed in order

to not reveal personal data to unauthorized users. Educational

images need a clear description of modality, anatomical parts,

and morphological and diagnostic comments. Such metadata may

refer to the complete image or, often, to just one or more

subregions, which need to be identified in some way. Educational

metadata could be directly shown to users or maintained hidden

to develop self-learning skills.

Section 5.5 gives an example solution addressing

these requirements.

Before discussing example solutions to the use cases, this section

provides a short overview of image annotation in general, focussing

on the issues that often make image annotation much more complex

that initially expected. In addition, it briefly discusses the

basic Semantic Web concepts that are required to understand the

solutions provided later.

Annotating images on a small scale for personal usage, as described

in the first use case above, can be relatively simple, as

examplified by popular keyword-based tagging systems such as Flickr.

Unfortunately, for more ambitious annotation tasks, the situation

quickly becomes less simple. Larger scale industrial strength

image annotation is notoriously complex. Trade offs along several

dimensions make the professional multimedia annotations difficult:

-

Production versus post-production annotation

A general rule is that it is much easier to annotate earlier

rather than later. Typically, most of the information that is

needed for making the annotations is available during production

time. Examples include time and date, lens settings and other

EXIF metadata added to JPEG images by most digital cameras at the

time a picture is taken, experimental data in scientific and

medical images, information from scripts, story boards and edit

decision lists in creative industry, etc. Indeed, maybe the

single most best practice in image annotation is that in general,

adding metadata during the production process is much cheaper and

yields higher quality annotations than adding metadata in a later

stage (such as by automatic analysis of the digital artifact or

by manual post-production data).

-

Generic vs task-specific annotation

Annotating images without having a specific context, goal or task

in mind is often not cost effective: after the target application

has been developed, it may turn out that images have been

annotated with information that insufficiently covers the new

requirements. Redoing the annotations is then an unavoidable,

but costly solution. On the other hand, annotating with

only the target application in mind may also not be cost

effective. The annotations may work well with that one

application, but if the same metadata is to be reused in the

context of other applications, it may turn out to be too

specific, and unsuited for reuse in a different context. In most

situations the range of applications in which the metadata will

be used in the future is unknown at the time of annotation. When

lacking a crystal ball, the best the annotator can do in practice

is use an approach that is sufficiently specific for the

application under development, while avoiding unnecessary

application-specific assumptions as much as possible.

-

Manual versus automatic annotation and the "Semantic Gap"

In general, manual annotation can provide image descriptions at

the right level of abstraction. It is, however, time consuming

and thus expensive. In addition, it proves to be highly

subjective: different human annotators tend to "see" different

things in the same image. On the other hand, annotation based on

automatic feature extraction is relatively fast and cheap, and

can be more systematic. It tends to result, however, in image

descriptions that are too low level for many applications. The

difference between the low level feature descriptions provided by

image analysis tools and the high level content descriptions

required by the applications is often referred to, in the

literature, as the Semantic Gap. In the remainder, we

will discuss use cases solutions, vocabularies and tools for both

manual and automatic image annotation.

-

Different types of metadata

While various classifications of metadata have been described in

the literature, every annotator should at least be aware of the

difference between annotations describing properties of the image

itself, and those describing the subject matter of the image,

that is, the properties of the objects, persons or concepts

depicted by the image. In the first category, typical

annotations provide information about title, creator, resolution,

image format, image size, copyright, year of publication, etc.

Many applications use a common, predefined and relatively small

vocabulary defining such properties. Examples include the Dublin Core and VRA

Core vocabularies. The second category describes what is

depicted by the image, which can vary wildly with the type of

image at hand. In many applications, it is also useful to

distinguish between objective observations ('the person in the

white shirt moves his arm from left to right') versus subjective

interpretations ('the person seems to perform a martial arts

exercise'). As a result, one sees a large variation in

vocabularies used for this purpose. Typical examples vary from

domain-specific vocabularies (for example, with terms that are

very specific for astronomy images or sport images) to

domain-independent ones (for example, a vocabulary with terms

sufficiently generic to describe any news photo). In addition,

vocabularies tend to differ in size, granularity, formality etc.

In the remainder, we discuss the above metadata categories. Note

that in the first type it is not uncommon that a vocabulary only

defines the properties and defers the definitions of the values

of those properties to another vocabulary. This is true, for

example, for both Dublin Core and VRA Core. This means that,

typically, in order to annotate a single image one needs terms

from multiple vocabularies.

-

Lack of Syntactic and Semantic Interoperability

Many different file formats and tools for image annotations are

currently in use. Reusing metadata created by another tool is

often hindered by a lack of interoperability. First, a tool may

use a different syntax for its file formats. As a consequence,

other tools are not able to read in the annotations produced by

this tool. Second, a particular tool may assign a different

meaning (semantics) to the same annotation. In this situation,

the tool may be able to read annotations from other tools, but

will fail to process them in the way originally intended.

Both problems can be solved by using Semantic Web technology.

First, the Semantic Web provides developers with means to

explicitly specify the syntax and semantics of their metadata.

Second, it allows tool developers to make explicit how their

terminology relates to that of other tools. The document will

provide examples of both cases.

This section briefly describe the role of Semantic Web technologies

in image annotations. The aim of the Semantic Web is to augment the

existing Web so that resources (Web pages, images etc.) are more

easily interpreted by programs (or "intelligent agents"). The idea

is to associate Web resources with semantic categories which

describe the contents and/or functionalities of Web resources.

Annotations alone do not establish the semantics of what is being

marked-up. One way generally followed to introduce semantics to

annotations is to get a community to agree on what a set of

concepts mean, and what terms have to be used for them.

Informal versus formal semantics

Consider the following example of an image anntotated using the Dublin Core Metadata Element Set. The

Dublin Core provides 15 "core" information properties, such as

"Title", "Creator" or "Date". The following RDF/XML example code

represents the statements: 'There is an image Ganesh.jpg

created by Jeff Z. Pan whose title is An image of

the Elephant Ganesh'. The first four lines define the XML namespaces used in this description. A good

starting point for more information on RDF is the RDF Primer.

<rdf:RDF xml:base="http://example.org/"

xmlns="http://example.org/"

xmlns:dc="http://purl.org/dc/elements/1.1/"

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">

<rdf:Description rdf:about="Ganesh.jpg">

<dc:title>An image of the Elephant Ganesh</dc:title>

<dc:creator>Jeff Z. Pan</dc:creator>

</rdf:Description>

</rdf:RDF>

The disadvantage of the example above is that the meaning of all

the metadata values are strings, which cannot easily be interpreted

by automated procedures. Applications that need to process the

image based on this meaning, need to have this processing hardwired

into their code. For example, it would be hard for an application

to find out that this image could match queries such as "show me

all images with animals" or "show me all images of Indian deities".

An approach to solve this is to take the informal agreement about

the meaning of the terms used in the domain as a starting point and

to convert this into a formal, machine processable version. This

version is than typically referred to as an ontology. It

provides, for a specific domain, a shared and common

vocabulary, including important concepts, their

properties, mutual relations and constraints. This formal

agreement can be communicated between people and heterogeneous,

distributed application systems. The ontology-based approach might

be more difficult to develop. It is, however, more powerful than

the approach that is based only on informal agreement. For example,

machines can use the formal meaning for reasoning about, completing

and validating the annotations. In addition, human users can more

thoroughly define and check the vocabulary using axioms expressed

in a logic language. Ideally, the concepts and properties of an

ontology should have both formal definitions and natural language

descriptions to be unambiguously used by humans and software

applications.

For example, suppose the community that is interested in this

picture has agreed to use concepts from the WordNet vocabulary to annotate its images. One

could simple add two lines of RDF under the dc:creator line

of the example above:

<dc:subject rdf:resource="http://www.w3.org/2006/03/wn/wn20/instances/synset-Indian_elephant-noun-1"/>

<dc:subject rdf:resource="http://www.w3.org/2006/03/wn/wn20/instances/synset-Ganesh-noun-1"/>

Now, by refering to the formally defined concepts of "Indian

Elephant" and "Ganesh" in WordNet, even applications that have

never encountered these concepts before could request more

information about the WordNet definitions of the concepts and find

out, for example, that "Ganesh" is a hyponym of the concept "Indian

Deity", and that "Indian Elephant" is a member of the "Elephas"

genus of the "Elephantidae" family in the animal kingdom.

Choosing which vocabularies to use for annotating image is a key

decision in an annotation project. Typically, one needs more

than a single vocabulary to cover the different relevant aspects

of the images. A separate document named

Vocabularies

Overview discusses a number of individual vocabularies that

are relevant for images annotation. The remainder of this

section discusses more general issues.

Many of the relevant vocabularies have been developed prior to

the Semantic Web, and Vocabularies

Overview lists

many translations of such vocabularies to RDF or OWL. Most

notably, the key International Standard in this area, the Multimedia Content Description standard,

widely known as MPEG-7, is defined using XML Schema. At the

time of writing, there is no commonly accepted mapping from the

XML Schema definitions in the standard to RDF or OWL. Several

alternative mappings, however, have been developed so far and

are discussed in the overview.

Another relevant vocabulary is the VRA

Core. Where the Dublin Core (DC)

specifies a small and commonly used vocabulary for on-line

resources in general, VRA Core defines a similar set targeted

especially at visual resources, specializing the DC elements.

Dublin Core and VRA Core both refer to terms in their

vocabularies as elements, and both use

qualifiers to refine elements in similar way. All the

elements of VRA Core have either direct mappings to comparable

fields in Dublin Core or are defined as specializations of one

or more DC elements. Furthermore, both vocabularies are defined

in a way that abstracts from implementation issues and

underlying serialization languages. A key difference, however,

is that for Dublin Core, there exists a commonly accepted

mapping to RDF, along with the associated schema. At the time of

writing, this is not the case for VRA Core, and the overview

discusses the pros and cons of the alternative mappings.

Many annotations on the Semantic Web are about an entire

resource. For example, a <dc:title> property

applies to the entire document. For images and other multimedia

documents, one often needs to annotate a specific part of a

resource (for example, a region in an image). Sharing the

metadata dealing with the localization of some specific part of

multimedia content is important since it allows to have multiple

annotations (potentially from multiple users) referring to the

same content.

There are at least two possibilities to annotate a spatially-localized

region of an image:

-

In the ideal situation, the target image already specifies this specific

part, using a (anchor) name that is addressable in the URI fragment

identifier. This can be done, for example, in SVG.

-

Otherwise, the region needs to be described in the metadata itself, as

it is done in MPEG-7. The drawback of this strategy is that

various different annotations of a single region have then to redefine each time

the borders of this region. The region definition could be defined once for all in

an independant and identified file, but then, the annotation will be about

this XML snippet definition and not about the image region content.

The next section discusses general characteristics of the existing annotation

tools for images.

Among the numerous tools used for image archiving and description, some of them

may be used for semantic annotation. The aim of this section is to

identify some key characteristics of semantic image annotation tools, such as the type of

content they can handle or if they allow fine-grained annotations, so as to provide

some guidelines for their proper use. Using these characteristics as criteria, users of these

tools could choose the most appropriate for a specific application.

Type of Content. A tool can annotate different type of content.

Usually, the raw content is an image, whose format can be jpg, png, tif, etc. but there

are tools that can annotate videos as well.

Type of Metadata. An annotation can be targeted for different use.

Following the categorization

provided by The Making of

America II project, the metadata can be descriptive (for description

and identification of information), structural

(for navigation and presentation), or administrative (for management and

processing). Most of the tools can be used in order to provide

descriptive metadata and for some of them, the user can also provide structural and

administrative information.

Format of Metadata. An annotation can be expressed in different format.

This format is important since it should ensure

interoperability with other (semantic web) applications. MPEG-7 is often used as

the metadata format for exchanging automatic analysis results whereas OWL and RDF are

better appropriate in the Semantic Web world.

Annotation level. Some tools give to the user the

opportunity to annotate an image using vocabularies while others allow free text

annotation only. When ontologies are used (in RDF or OWL format),

the annotation level is considered to be controlled since the semantics is generally

provided in a more formal way, whereas if they are not, the annotation level is

considered to be free.

Client-side Requirement. This characteristic refers to

whether users can use a Web browser to access the service(s) or need to install

a stand-alone application.

License Conditions. Some of the tools are open source while some

others are not. It is important for the user and for potential researchers and

developers in the area of multimedia annotation to know this issue

before choosing a particular tool.

Collaborative or individual. This characteristic refers

to the possible usage of the tool as an annotation framework for web-shared image

databases or as an individual user multimedia content annotation tool.

Granularity. Granularity specifies whether annotation is

segment based or file based. This is an important characteristic since in some applications,

it could be crucial to provide the structure of the image. For example, it is

useful to provide annotations for different regions of the image, describing several cues of

information (like a textual part or sub-images) or defining and describing different objects

visualized in the image (e.g. people).

Threaded or unthreaded. This characteristic refers to the

ability of the tool to respond or add to a previous annotation and to stagger/structure

the presentation of annotations to reflect this.

Access control. This refers to the

access provided for different users to the metadata. For example, it is important to

distinguish between users that have simple access (just view) and users that have full

access (view or change).

Concluding, the appropriateness of a tool depends on the nature of annotation that the user

requires and cannot be predetermined. A separate web page is maintained with

Semantic Web

Image Annotation Tools, and categorizes most of the annotation tools found in the Internet,

according to the characteristics described above. Any comments, suggestions or new tools

annoucements will be added to this separate document. The tools can be used for different

types of annotations, depending on the use cases, as shown in the following section.

This section describes possible scenarios for how

Semantic Web technology could be used for supporting the

use cases presented in Section 1.

These scenarios are provided purely as illustrative examples and do not imply

endorsement by the W3C membership or the Semantic Web Best

Practices and Deployment Working Group.

Possible Semantic Web-based solution

A photo from a personal collection

The proposed scenario for the use case of the management of Personal Digital

Photo Collections, described in Section 1.1,

is based on the annotation of the photos using multiple vocabularies to

describe their properties and their content. The properties of the image itself,

e.g. the creator, the resolution, the date of capture, are described with terms

from the DC and VRA vocabularies. For the description of the content of the images

a variety of existing vocabularies are used and few domain specific ontologies

are created, because the potential subject of a photo from a personal digital

collection is very wide, and may include notions such as vacations, special events

of the personal history, landscapes, locations, people, objects, etc.

As a sample from a personal digital photo collection, a photo that depicts the vacations

of a person named Katerina Tzouvara in Thailand is chosen. The FOAF vocabulary is

then used to describe the person depicted in the image. Three domain specific

ontologies: the location, the landscape and the event ontology are used to describe the

location, the landscape and the event depicted on the photo. The RDF format is chosen

as the most appropriate for all the vocabularies, the ontologies and the annotation file.

Any existing editor can be used for editing the ontologies and the annotation file.

The corresponding RDF annotation file is available here.

The Annotation process

Firstly, a decision about the format of the annotation file has to be made.

The RDF/XML syntax of RDF is chosen in order to gain interoperability.

The RDF/XML syntax of RDF is well-formed XML with an overlay of constraints

and is parsable with XML technology.

The first line of the annotation file is the XML declaration line. The next

element is the RDF element that encloses the RDF based content. Within the

RDF element, the various namespaces associated with

each element in the file are declared as attributes. These namespaces reference existing

RDF schemas and ontologies from which particular elements have been used to

annotate the target image. Specifically, the following schemas are used:

<!-- Declaration of the namespaces of the schemas and ontologies being used -->

<?xml version="1.0" encoding="UTF-8"?>

<rdf:RDF

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:owl="http://www.w3.org/2002/07/owl#"

xmlns:xsd="http://www.w3.org/2001/XMLSchema#"

xmlns:dc="http://purl.org/dc/elements/1.1/"

xmlns:foaf="http://xmlns.com/foaf/0.1/"

xmlns:event="http://www.altova.com/ontologies/personal_history_event#"

xmlns:locat="http://www.altova.com/ontologies/location#"

xmlns:lsc="http://www.altova.com/ontologies/landscape#"

xmlns:perph="http://www.altova.com/ontologies/usecase_personal_photo_management#"

xmlns:vra="http://www.vraweb.org/vracore/vracore3#" >

The inner element that follows (rdf:Description), wraps the subject-predicate-object

triples. The subject, declared by the rdf:about attribute is the image

(identified by its URI) that will be annotated, the predicates are properties of the image

and the objects are the values of these properties.

<rdf:Description rdf:about="http://www.w3.org/2001/sw/BestPractices/MM/images/examples/Personal.jpg">

The following block of triples provides general information about the entire photo.

The Dublin Core terms are mainly used to provide this kind of information,

since they are general enough and widely accepted by the metadata communities.

The first predicate-object pair states that the object of the annotation file is an image,

using the DC term-property type (dc:type) as the predicate, and the DCMI Type

term-concept Image as the object.

The DC term-property description (dc:description) in the next line

provides a short linguistic human-understandable description of the subject of the image.

The DC term-property creator (dc:creator) is then used for specifying the

creator of the image. In order to define that the creator is a person

(and e.g. not an organization or a company), the FOAF term-class Person (foaf:Person)

is used. The name of the person is defined using the FOAF term-properties familyname

(foaf:familyname) and firstname (foaf:firstname). The values of these

properties are RDF Literal. Note that these two properties are preferred to the general

foaf:name property since the absence of any further rules or standards for the

construction of individual names could mislead the reasoning engines

that would not be able to discriminate the first name from the family name.

The DC property date (dc:date) completes the description describing the capture day

of the photo. The RDF Literal value of this property

is conformed to the ISO 8601 specifications for representing dates and times.

<!-- Description and general information about the entire photo, e.g. description, creator, date -->

<!-- using Dublin Core and FOAF -->

<dc:type rdf:resource="http://purl.org/dc/dcmitype/Image"/>

<dc:description>Photo of Katerina Tzouvara during Vacations in Thailand</dc:description>

<dc:creator>

<foaf:Person>

<foaf:familyname>Stabenaou</foaf:familyname>

<foaf:firstname>Arne</foaf:firstname>

</foaf:Person>

</dc:creator>

<dc:date>2002-12-40</dc:date>

Some more technical information about the format and the resolution of the photo

are included in the annotation file using the DC property format (dc:format) and

the term JPEG of the MIME vocabulary, and the VRA property measurements.resolution

(vra:measurements.resolution). Though they may not seem really important for this

specific use case, these properties should exist for the purpose of interchanging

personal digital photos among users with different hardware and software capabilities.

<!-- Technical information, e.g. format, resolution, using DC, VRA, MIME -->

<dc:format>JPEG</dc:format>

<vra:measurements.resolution>300 x 225px</vra:measurements.resolution>

The rest of the annotation file focuses on the description of the content of the

image. In order to provide a complete description of the content, it is often

useful to try to answer the questions "when", "where" and "why" the photo has been taken,

and "who" and "what" is depicted on the photo, because these are the most probable questions

that the end-user would like to be queried during the retrieval process.

Firstly, the location and the landscape depicted on the photo are described.

To do so, two domain ontologies are built as simple as needed for the

purposes of the annotation of this image: the location ontology, whose prefix is

locat, and the landscape ontology, whose prefix is lsc. The location ontology

provides concepts such as continent (locat:Continent), country (locat:Country),

city (locat:City), and properties for the representation

of the fact that an image is taken in a specific continent (locat:located_in_Continent),

country (locat:located_in_Country) and city (locat:located_in_City).

The landscape ontology classes represent concepts such as mountain (lsc:Mountain),

beach (lsc:Beach), sand (lsc:Sand) and tree (lsc:Tree).

The FOAF property depicts (foaf:depicts) stands for the relation

that an image depicts something or someone. The FOAF property name (foaf:name)

informs that any kind of location or landscape has a specific name. The

TGN vocabulary

is used for the names of the locations and landscapes, e.g. Thailand, and the

AGROVOC thesaurus

is used for the names related to agriculture, e.g. Phoenix Dactyliphera.

For the representation of the relation that a city belongs to a specific country, the property

locat:belongs_to_Country has been used.

<!-- Information about the location and the objects depicted using a landscape ontology -->

<!-- and the TGN and AGROVOC thesaurus -->

<locat:located_in_Continent>

<locat:Continent>Asia</locat:Continent>

</locat:located_in_Continent>

<locat:located_in_Country>

<locat:Country>Thailand</locat:Country>

</locat:located_in_Country>

<locat:located_in_City>

<locat:City>

<foaf:name>Phi Phi</foaf:name>

<locat:belongs_to_Country>

<locat:Country>Thailand</locat:Country>

</locat:belongs_to_Country>

</locat:City>

</locat:located_in_City>

<foaf:depicts>

<lsc:Beach/>

</foaf:depicts>

<foaf:depicts>

<lsc:Palm_Tree>Phoenix Dactyliphera</lsc:Palm_Tree>

</foaf:depicts>

<foaf:depicts rdf:resource="http://www.altova.com/ontologies/landscape#Sand"/>

The advantages of using Semantic Web technologies in this use case can be already shown

at this stage. Suppose that the annotation file did not include the lines that

fully declare explicitly in which country the picture has been taken, but that it included

only the lines that state that the photo was taken in the city Phi Phi which belongs to the

country Thailand. When the end-user will query for retrieving photos depicting Thailand,

the application for the management of the digital photo collection using reasoning techniques

should return as a result all the photos that are explicitly declared to show Thailand,

but also all the photos that are implicitly declared to portray Thailand, e.g. by being explicitly

declared to be captured in a city that belongs to Thailand.

To describe the events captured on the image, a simple ontology representing the important

events for a person is created. This ontology conceptualizes occasions such as business

traveling (event:Business_Traveling or event:Conference),

vacations (event:Vacations), sports activities

(event:Sports_Activity), celebrations (event:Celebration or

event:Birthday_Party), etc.

<!-- Information about the kind of event depicted by the image -->

<foaf:depicts rdf:resource="http://www.altova.com/ontologies/event#Vacations"/>

Finally, the foaf:depicts property and the foaf:Person concept are used

for identifying the person shown on the image.

<!-- Information about the persons depicted by the image -->

<foaf:depicts>

<foaf:Person>

<foaf:familyname>Tzouvara</foaf:familyname>

<foaf:firstname>Katerina</foaf:firstname>

</foaf:Person>

</foaf:depicts>

</rdf:Description>

</rdf:RDF>

Conclusion

It is obvious, from this analysis of the annotation process, that there

are many important issues under discussion in order to improve the efficiency

of Semantic Web technologies. Firstly, the various vocabularies, such as FOAF,

VRA, TGN, do not have yet a standardized representation in a standardized

ontology language, such as OWL or RDF. Secondly, there do not exist many domain

specific vocabularies about the description of the content of visual document,

e.g. there are not any landscape, personal history event vocabularies, nor

vocabularies about the possible objects depicted by an image. This deficiency

of annotation standards provokes interoperability problems between the different

types of annotated content during the management and retrieval process.

Finally, another important concern is to which depth the annotation process

should proceed. In the example above, one might find it important to annotate

the clothing of the person too, but someone else may believe that it is more

important to annotate the objects on the beach, etc. This is a matter of the

annotation approach which should be defined according to the end-user needs.

In conclusion, there is still much work to be done for improving the use of

Semantic Web technologies for describing multimedia material.

However, as shown in this use case analysis, deploying

ontology-based annotations on personal computers applications will

enable a much more focused and integrated content management

and ease the exchange of personal photos.

Possible Semantic Web-based solution

Claude Monet, Garden at Sainte-Adresse.

Image courtesy of

Mark

Harden, used with permission.

Many of the requirements of the use case described in Section 1.2

can be met by using the vocabulary developed by the VRA in

combination with domain-specific vocabularies such as Getty's AAT

and ULAN.

In this section, we provide as an example a set of RDF annotations

of a painting by Claude Monet, which is in English known as "Garden

at Sainte-Adresse". It is part of the collection of the

Metropolitan Museum of Art in New York.

The corresponding RDF file

is available as a

separate document. No special annotation tools where used to

create the annotations. We assume that cultural heritage

organizations that need to publish similar metadata will do so by

exporting existing information from their collection database to

RDF. Below, we discuss the different annotations used in this

file.

House keeping

The file starts as a typical RDF/XML file, by defining the XML

version and encoding and defining entities for the RDF and VRA

namespaces that will be used later. Note that we use the RDF/OWL schema of VRA Core developed by Mark

van Assem.

<?xml version='1.0' encoding='ISO-8859-1'?>

<!DOCTYPE rdf:RDF [

<!ENTITY rdf "http://www.w3.org/1999/02/22-rdf-syntax-ns#">

<!ENTITY vra "http://www.vraweb.org/vracore/vracore3#">

Work versus Image

The example includes annotations about two different images of the

same painting. An important distinction made by VRA vocabulary is

the distinction between annotations describing a work of art itself

and annotations describing (digital) images of that work. This

example also uses this distinction. In RDF, to say something about

a resource, that resource needs to have a URI. We will thus not

only need the URIs of the two images, but also a URI for the

painting itself:

<!ENTITY image1 "http://www.metmuseum.org/Works_Of_Art/images/ep/images/ep67.241.L.jpg">

<!ENTITY image2 "http://www.artchive.com/artchive/m/monet/adresse.jpg">

<!ENTITY painting "http://thing-described-by.org/?http://www.metmuseum.org/Works_Of_Art/images/ep/images/ep67.241.L.jpg">

]>

URI and ID conventions

VRA Core does not specify how works, images or annotation records

should be identified. For the two images, we have chosen for the

most straightforward solution and use the URI of the image as the

identifying URI. We did not have, however, a similar URI that

identifies the painting itself. We could not reuse the URI of one

of the images. This is not only conceptually wrong, but would also

lead to technical errors: it would make the existing instance of

vra:Image also an instance of the vra:Work class,

while this is not allowed by the schema.

In the example, we have decided to 'mint' the URI of the painting

by arbitrary selecting the URI of one of the images, and prefixing

it by

http://thing-described-by.org/?. This creates a new URI

that is distinct from the image itself, but when a the browser

resolves it, it will be redirected to the image URI by the

thing-described-by.org web server (one could argue if the

use of an http-based URI is actually appropriate here. See What do HTTP URIs Identify? and [httpRange-14] for more details on this

discussion).

Warning: The annotations described below also contain a

vra:idNumber.currentRepository element, that defines the

identifier used locally in the museum's repositories.

These local identifiers should not be confused with the globally

unique identifier that is provided by the URI.

More housekeeping: starting the RDF block

The next line opens the RDF block, declares the namespaces using

the XML entities defined above. Out of courtesy, it uses

rdf:seeAlso to help agents find the VRA schema that is

used.

<rdf:RDF xmlns:rdf="&rdf;" xmlns:vra="&vra;"

rdf:seeAlso="http://www.w3.org/2001/sw/BestPractices/MM/vracore3.rdfs"

>

Description of the work (painting)

The following lines describe properties of the painting itself: we

will deal with the properties of the two images later. First, we

provide general information about the painting such as the title,

its creator and the date of creation. For these properties, the VRA

closely follows the Dublin Core conventions:

<!-- Description of the painting -->

<vra:Work rdf:about="&painting1;">

<!-- General information -->

<vra.title>Jardin à; Sainte-Adresse</vra.title>

<vra:title.translation>Garden at Sainte-Adresse</vra:title.translation>

<vra:creator>Monet, Claude</vra:creator> <!-- ULAN ID:500019484 -->

<vra:creator.role>artist</vra:creator.role> <!-- ULAN ID:31100 -->

<vra:date.creation>1867</vra:date.creation>

Text fields and controlled vocabularies

Many values are filled with RDF Literals, of which the value is not

further constraint by the schema. But many of these values are

actually terms from other controlled vocabularies, such as the

Getty AAT, ULAN or a image

type defined by MIME. Using controlled

vocabularies solves many problems associated with free text

annotations. For example, ULAN recommends a spelling when an

artist's name is used for indexing, so for the vra:creator

field we have exactly used this spelling ("Monet, Claude"). The

ULAN identifiers of the records describing Claude Monet and the

"artist" class are given in XML comments above. The use of

controlled vocabulary can avoid confusion and the need for

"smushing" different spellings for the same name later.

However, using controlled vocabularies does not solve the problem

of ambiguous terms. The annotations below use three different meanings

for "oil paint", "oil paintings" and "oil painting (technique)".

The first refers to the type of paint used on the canvas, the

second to the type of work (e.g. the work is an oil painting, and

not an etching) and the last to the painting technique used by

artist. All three terms refer to different concepts that are part

of different branches of the AAT term hierarchy (the AAT

identifiers of these concepts are mentioned in XML comments).

However, the use of terms that are so similar for different

concepts is bound to lead to confusion. Instead, one could switch

from using owl:datatypeProperties to using

owl:objectProperties, and replace the literal text by a reference

to the URI of the concept used. For example, one could change:

<vra:material.medium>oil paint</vra:material.medium>

to

<vra:material.medium rdf:resource="http://www.getty.edu/aat#300015050"/>

This approach requires, however, that an unambiguous URI-based

naming scheme is defined for all terms in the target vocabulary

(and in this case, such a URI-based naming scheme does not yet

exist for AAT terms). Additional Semantic Web-based processing is

also only possible once these vocabularies become available in RDF

or OWL.

<!-- Technical information -->

<vra:measurements.dimensions>98.1 x 129.9 cm</vra:measurements.dimensions>

<vra:material.support>unprimed canvas</vra:material.support> <!-- AAT ID:300238097 -->

<vra:material.medium>oil paint</vra:material.medium> <!-- AAT ID:300015050 -->

<vra:type>oil paintings</vra:type> <!-- AAT ID:300033799 -->

<vra.technique>oil painting (technique)</vra.technique> <!-- AAT ID:300178684 -->

<!-- Associated style, etc. -->

<vra:stylePeriod>Impressionist</vra:stylePeriod> <!-- AAT ID:300021503 -->

<vra:culture>French</vra:culture> <!-- AAT ID:300111188 -->

Annotating subject matter

For many applications, it is useful to know what is actually

depicted by the painting. One could add annotations of this style

to an arbitrary level of detail. To keep the example simple, we

have chosen to record only the names of the people that are

depicted on the painting, using the vra:subject field.

Also for simplicity, we have chosen not to annotate specific parts

or regions of the painting. This might have been appropriate, for

example, to identify the associated regions that depict the various

people in the painting:

<!-- Subject matter: (who/what is depicted by this work -->

<vra:subject>Jeanne-Marguerite Lecadre (artist's cousin)</vra:subject>

<vra:subject>Madame Lecadre (artist's aunt)</vra:subject>

<vra:subject>Adolphe Monet (artist's father)</vra:subject>

Provenance: annotating the past

Many of the fields below do not contain information about the

current situation of the painting, but information about places and

collections the painting has been in the past. This provides

provenance information that is important in this domain.

<!-- Provenance -->

<vra:location.currentSite>Metropolitan Museum of Art, New York</vra:location.currentSite>

<vra:location.formerSite>Montpellier</vra:location.formerSite>

<vra:location.formerSite>Paris</vra:location.formerSite>

<vra:location.formerSite>New York</vra:location.formerSite>

<vra:location.formerSite>Bryn Athyn, Pa.</vra:location.formerSite>

<vra:location.formerSite>London</vra:location.formerSite>

<vra:location.formerRepository>

Victor Frat, Montpellier (probably before 1870 at least 1879;

bought from the artist); his widow, Mme Frat, Montpellier (until 1913)

</vra:location.formerRepository>

<vra:location.formerRepository>Durand-Ruel, Paris, 1913</vra:location.formerRepository>

<vra:location.formerRepository>Durand-Ruel, New York, 1913</vra:location.formerRepository>

<vra:location.formerRepository>

Reverend Theodore Pitcairn and the Beneficia Foundation, Bryn Athyn, Pa. (1926-1967),

sale, Christie's, London, December 1, 1967, no. 26 to MMA

</vra:location.formerRepository>

<vra:idNumber.currentRepository>67.241</vra:idNumber.currentRepository> <!-- MMA ID number -->

Copyright and origin of metadata

The remaining properties describe the origin the sources used for

creating the metadata and a rights management statement. We have

used the vra:description element to provide a link to a

web page with additional descriptive information:

<!-- extra information, source of this information and copyright issues -->

<vra:description>For more information, see

http://www.metmuseum.org/Works_Of_Art/viewOne.asp?dep=11&viewmode=1&item=67%2E241§ion=description#a</vra:description>

<vra:source>Metropolitan Museum of Art, New York</vra:source>

<vra:rights>Metropolitan Museum of Art, New York</vra:rights>

Image properties

Finally, we define the properties that are specific to the two

images of the painting, which differ in resolution, copyright, etc.

The first set of annotations describe a 500x300 pixel image that is

located at the website of the Metropolitan itself, while the second

set describes the properties of a larger resolution (1075 x 778px)

image at Mark Harden's Artchive website.

Note that VRA Core does not specify how Works and their associated Images

should be related. In the example we follow Van

Assem's suggestion and use vra.relation.depicts to

explicitly link the Image to the Work it depicts.

<!-- Description of the first online image of the painting -->

<vra:Image rdf:about="&image1a;">

<vra:type>digital images</vra:type> <!-- AAT ID: 300215302 -->

<vra:relation.depicts rdf:resource="&painting1;"/>

<vra.measurements.format>image/jpeg</vra.measurements.format> <!-- MIME -->

<vra.measurements.resolution>500 x 380px</vra.measurements.resolution>

<vra.technique>Scanning</vra.technique>

<vra:creator>Anonymous employee of the museum</vra:creator>

<vra:idNumber.currentRepository>ep67.241.L.jpg</vra:idNumber.currentRepository>

<vra:rights>Metropolitan Museum of Art, New York</vra:rights>

</vra:Image>

<!-- Description of the second online image of the painting -->

<vra:Image rdf:about="&image1b;">

<vra:type>digital images</vra:type> <!-- AAT ID: 300215302 -->

<vra:relation.depicts rdf:resource="&painting1;"/>

<vra:creator>Mark Harden</vra:creator>

<vra.technique>Scanning</vra.technique>

<vra.measurements.format>image/jpeg</vra.measurements.format> <!-- MIME -->

<vra.measurements.resolution>1075 x 778px</vra.measurements.resolution>

<vra:idNumber.currentRepository>adresse.jpg</vra:idNumber.currentRepository>

<vra:rights>Mark Harden, The Artchive, http://www.artchive.com/</vra:rights>

</vra:Image>

</rdf:RDF>

Conclusion and discussion

The example above reveals several technical issues that are still

open. For example, the way the URI for the painting was minted is

rather arbitrary. Preferably, there would have been a commonly

accepted URI scheme for paintings (c.f. the LSID

scheme used to identify concepts from the life sciences). At the

time of writing, the VRA, AAT and ULAN vocabulary used have

currently no commonly agreed upon RDF or OWL representation, which

reduces the interoperability of the chosen approach. Tool support

is another issue. While some major database vendors already start

to support RDF, generating the type of RDF as shown here from

existing collection databases will in many cases require non

trivial custom conversion software.

From a modeling point of view, subject matter annotations are

always non-trivial. As stated above, it is hard to give general

guidelines about what should be annotated and to what depth, as

this can be very application dependent. Note that in this example,

we annotated the persons that appear in the painting, and we

modeled this information as properties of the painting URI, not of

the two image URIs. But if we slightly modify our use case and

assume one normal image and one X-ray image that reveals an older

painting under this one, it might make more sense to model more

specific subject matter annotations as properties of the specific

images.

Nevertheless, the example shows that a large part of issues

described by the use case can be solved using current Semantic Web

technology. It shows how RDF can be used together with existing

vocabularies to annotate various aspects of paintings and the

images that depict them.

Possible Semantic Web-based solution

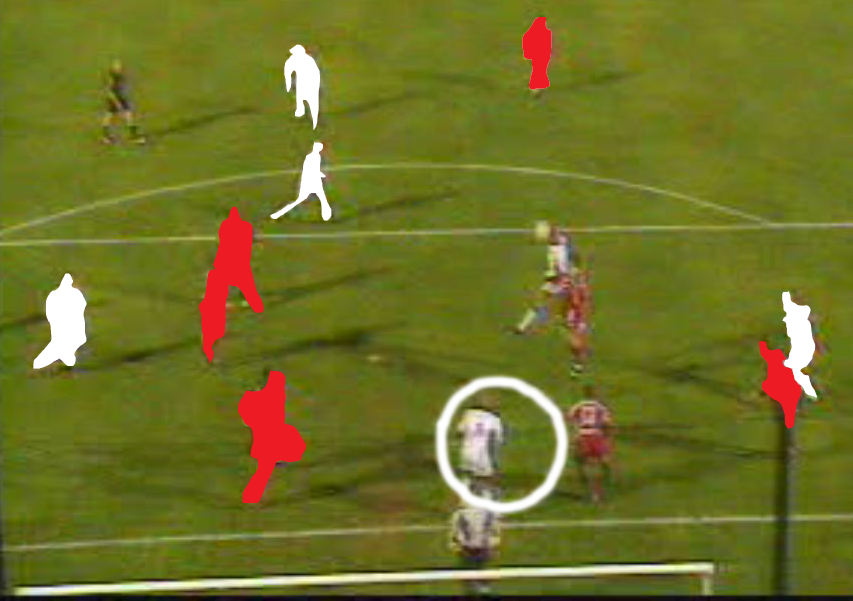

Drawing representing an offside position of a football player while his partner is

scoring with his head during a game.

Personal artwork, used with permission.

The use case described in Section 1.3

is typically one that requires the use of multiple

vocabularies. Let us imagine that the image to be described is about

a refused goal of a given soccer player (e.g.

J.A Boumsong) for

an active offside position during a particular game (e.g. Auxerre-Metz played on 2002/03/16).

First, the image can be extracted from a weekly sports

magazine broadcasted on a TV channel. This program may be fully

described using the vocabulary developed by the

[TV Anytime forum]. Second, this image shows the player

Jean-Alain Boumsong scoring with his head during the game

Auxerre-Metz. The context of this football game could be described

using the [MPEG-7] vocabulary while the

action itself might be described by a soccer ontology such as the

one developed by [Tsinaraki]. Finally, a

soccer fan may notice that this goal was actually refused for an

active offside position of another player. On the image, a circle

could highlight this player badly positioned. Again, the description

could merge MPEG-7 vocabulary for delimiting the relevant image

region and a domain specific ontology for describing the action

itself.

In the following, we provide as an example a set of RDF annotations

illustrating these three levels of description as well as the

vocabularies involved.

The image context

Let us consider that the image comes from a weekly sports magazine named Stade 2 broadcasted on

March, 17th 2002 on the French public channel France 2. This context can be

represented using the TV Anytime vocabulary which allows for a TV

(or radio) broadcaster to publish its program listings on the web

or in an electronic program guide. Therefore, this vocabulary

provides the necessary concepts and relations for cataloging the

programs, giving their intended audience, format and genre, or some

parental guidance. The vocabulary contains also the vocabulary for

describing afterwards the real audience and the peak viewing times

which are of crucial importance for the broadcasters in order to

adapt their advertisement rates.

<?xml version='1.0' encoding='ISO-8859-1'?>

<!DOCTYPE rdf:RDF [

<!ENTITY rdf "http://www.w3.org/1999/02/22-rdf-syntax-ns#">

<!ENTITY xsd "http://www.w3.org/2001/XMLSchema#">

]>

<rdf:RDF

xmlns:rdf="&rdf;"

xmlns:xsd="&xsd;"

xmlns:tva="urn:tva:metadata:2002">

<tva:Program rdf:about="program1">

<tva:hasTitle>Stade 2</tva:hasTitle>

<tva:hasSynopsis>Weekly Sports Magazine broadcasted every Sunday</tva:hasSynopsis>

<tva:Genre rdf:resource="urn:tva:metadata:cs:IntentionCS:2002:Entertainment"/>

<tva:Genre rdf:resource="urn:tva:metadata:cs:FormatCS:2002:Magazine"/>

<tva:Genre rdf:resource="urn:tva:metadata:cs:ContentCS:2002:Sports"/>

<tva:ReleaseInformation>

<rdf:Description>

<tva:ReleaseDate xsd:date="2002-03-17"/>

<tva:ReleaseLocation>fr</tva:ReleaseLocation>

</rdf:Description>

</tva:ReleaseInformation>

</tva:Program>

</rdf:RDF>

Apollo 7 Saturn rocket launch -

October, 10th 1968. Image courtesy of NASA, available at

GRIN,

used with permission.

Possible Semantic Web-based solution

One possible solution for the requirements expressed in the use case description

in Section 1.4

is an annotation environment that enables users to annotate information

about images and/or their regions using concepts in ontologies

(OWL and/or RDFS). More specifically, subject matter experts will

be able to assert metadata elements about images and their

specific content. Multimedia related ontologies can be used to

localize and represent regions within particular images. These

regions can then be related to the image via a

depiction (or annotation) property. This functionality

(to represent images, image regions, etc.) can be provided,

for example, by the MINDSWAP

digital-media ontology, in conjunction with FOAF (to assert image

depictions). Additionally, in order to represent the low level

image features of regions, the aceMedia

Visual Descriptor Ontology can be used.

Domain Specific Ontologies

In order to describe the content of such images, a mechanism to

represent the domain specific content depicted within them is

needed. For this use case, domain ontologies that define space

specific concepts and relations can be used. Such ontologies are

freely available and include, but are not limited to the following:

- Shuttle related

(OWL /

RDFS)

- Space vehicle system related

(OWL /

RDFS)

Visual Ontologies

As discussed above, this scenario requires the ability to state

that images (and possibly their regions) depict certain things. For

example, consider a picture of the Apollo

7 Saturn rocket launch. One would want to make assertions that

include that the image depicts the Apollo 7 launch, the

Apollo 7 Saturn IB space vehicle is depicted in a rectangular

region around the rocket, the image creator is NASA,

etc. One possible way to accomplish this is to use a combination of

various multimedia related ontologies, including FOAF and the MINDSWAP

digital-media ontology. More specifically, image depictions can

be asserted via a depiction property (a sub-property of

foaf:depiction) defined in the MINDSWAP Digital Media

ontology. Thus, images can be semantically linked to instances

defined on the Web. Image regions can defined via an

ImagePart concept (also defined in the MINDSWAP Digital

Media ontology). Additionally, regions can be given a bounding box

by using a property named svgOutline, allowing localizing of

image parts. Essentially SVG outlines (SVG XML literals) of the

regions can be specified using this property. Using the Dublin Core

and the EXIF

Schema more general annotations about the image can be asserted

including its creator, size, etc. A subset of these sample

annotations are shown in the RDF graph below in Figure 2.

Figure 2 illustrates how the approach links metadata to the image:

- image content, e.g., Apollo 7 Launch, is identified by

http://www.mindswap.org/2005/owl/digital-media#depicts

- image subparts are identified by the property

http://www.mindswap.org/2005/owl/digital-media#hasRegion

- image regions are localized using

http://www.mindswap.org/2005/owl/digital-media#svgOutline and an SVG snippet

Additionally, the entire annotations of the Apollo 7 launch are shown below in RDF/XML.

<rdf:RDF

xmlns:j.0="http://www.w3.org/2003/12/exif/ns#"

xmlns:j.1="http://www.mindswap.org/2005/owl/digital-media#"

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:j.2="http://semspace.mindswap.org/2004/ontologies/System-ont.owl#"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:owl="http://www.w3.org/2002/07/owl#"

xmlns:dc="http://purl.org/dc/elements/1.1/"

xmlns:j.3="http://semspace.mindswap.org/2004/ontologies/ShuttleMission-ont.owl#"

xml:base="http://example.org/NASA-Use-Case" >

<rdf:Description rdf:about="A0">

<j.1:depicts rdf:resource="#Saturn_1B"/>

<rdf:type rdf:resource="http://www.mindswap.org/~glapizco/technical.owl#ImagePart"/>

<rdfs:label>region2407</rdfs:label>

<j.1:regionOf rdf:resource="http://grin.hq.nasa.gov/IMAGES/SMALL/GPN-2000-001171.jpg"/>

<j.1:svgOutline>

<svg xml:space="preserve" width="451" heigth="640" viewBox="0 0 451 640">

<image xlink:href="http://grin.hq.nasa.gov/IMAGES/SMALL/GPN-2000-001171.jpg" x="0" y="0" width="451" height="640" />

<rect x="242.0" y="79.0" width="46.0" height="236.0" style="fill:none; stroke:yellow; stroke-width:1pt;"/>

</svg>

</j.1:svgOutline>

</rdf:Description>

<rdf:Description rdf:about="http://grin.hq.nasa.gov/IMAGES/SMALL/GPN-2000-001171.jpg">

<j.0:imageLength>640</j.0:imageLength>

<dc:date>10/11/1968</dc:date>

<dc:description>Taken at Kennedy Space Center in Florida</dc:description>

<j.1:depicts rdf:resource="#Apollo_7_Launch"/>

<j.1:hasRegion rdf:nodeID="A0"/>

<dc:creator>NASA</dc:creator>

<rdf:type rdf:resource="http://www.mindswap.org/~glapizco/technical.owl#Image"/>

<j.0:imageWidth>451</j.0:imageWidth>

</rdf:Description>

<rdf:Description rdf:about="#Apollo_7_Launch">

<j.3:launchDate>10/11/1968</j.3:launchDate>

<j.3:codeName>Apollo 7 Launch</j.3:codeName>

<j.3:has_shuttle rdf:resource="#Saturn_1B"/>

<rdfs:label>Apollo 7 Launch</rdfs:label>

<j.1:depiction rdf:resource="http://grin.hq.nasa.gov/IMAGES/SMALL/GPN-2000-001171.jpg"/>

<rdf:type rdf:resource="http://semspace.mindswap.org/2004/ontologies/ShuttleMission-ont.owl#Launch"/>

</rdf:Description>

<rdf:Description rdf:about="#Saturn_1B">

<rdfs:label>Saturn_1B</rdfs:label>

<j.1:depiction rdf:nodeID="A1"/>

<rdfs:label>Saturn 1B</rdfs:label>

<rdf:type rdf:resource="http://semspace.mindswap.org/2004/ontologies/System-ont.owl#ShuttleName"/>

<j.1:depiction rdf:nodeID="A0"/>

</rdf:Description>

</rdf:RDF>

In order to represent the low level features of images, the aceMedia

Visual Descriptor Ontology can be used. This ontology contains

representations of MPEG-7 visual descriptors and models Concepts

and Properties that describe visual characteristics of objects. For

example, the dominant color descriptor can be used to describe the

number and value of dominant colors that are present in a region of

interest and the percentage of pixels that each associated color

value has.

Available Annotation Tools

Existing toolkits, such as PhotoStuff and M-OntoMat-Annotizer, currently provide

graphical environments to accomplish the annotation tasks mentioned

above. Using such tools, users can load images, create regions

around parts of the image, automatically extract low-level features

of selected regions (via M-OntoMat-Annotizer), assert statements

about the selected regions, etc. Additionally, the resulting

annotations can be exported as RDF/XML (as shown above), thus

allowing them be shared, indexed, and used by advanced

annotation-based browsing (and searchable) environments.

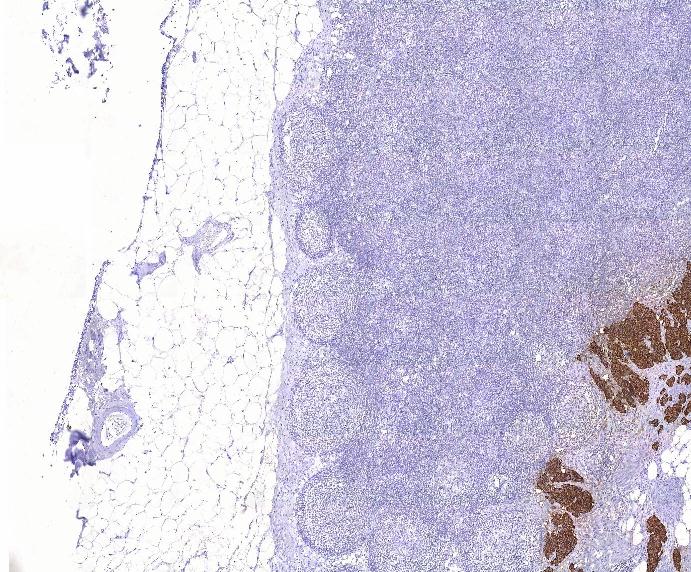

Possible Semantic Web-based solution

Microscopic image of lymph tissue.

Image courtesy of prof. C.A. Beltrami, University of Udine,

used with permission.

A solution for the requirements expressed in the use case

described in Section 1.5 includes support

for annotating information about images and their regions.

Given the numerous controlled vocabularies (including thesauri and

ontologies such as ICD9,

MeSH,

SNOMED) that are already in use in

the biomedical domain, the annotations should be

able to make use of the concepts of these controlled vocabularies, and

not be free text or keywords only. And given the many data stores that are already

available (including electronic patient records), annotation should

not be a fully manual process. Instead, metadata should be

(semi)automatically linked to, or extracted from, these existing

sources.

For some medical imaging, image annotation is already done through

image formats able to carry metadata, like

DICOM for

radiology. Preferably, Semantic Web-solutions should be made

interoperable with such embedded metadata image formats.

Domain Specific Ontologies

In order to describe the content of such images, a mechanism to

represent the domain specific content depicted within them is

needed. For biomedical concepts, many vocabularies are available,

including, but not limited to:

-

The ICD9-CM classification includes pathology and procedures

concepts.

-

SNOMED aims at the complete description of a clinical

record. Eleven modules include terms for anatomy, diagnosis,

morphological aspects, organisms, procedures and techniques, and more.

-

MeSH is a terminology developed for describing scientific literature

items.

-

NCI Thesaurus, more recently developed, is a complete ontology

devoted to concepts related to oncology.

The National Library of Medicine also developed

UMLS (Unified Medical Language System),

including a meta-thesaurus where terms coming from over 60

biomedical terminologies in many languages are connected in a

semantic network. The orginal formats of all these ontologies are

not in RDF or OWL. However, they can be (or already have been)

converted into these languages. All metadata described above is

covered by one or more of these vocabularies. Furthermore, thanks

to UMLS, it is also possible to move from one vocabulary to another

using the meta-thesaurus as a bridge.

As a sample, the gastric mucosa can be brought from MeSH, where it

has unique ID D005753, and is placed in the digestive system

subtree as a part of the stomach as well as in the tissue subtree

among the membranes.

Using semantic annotations

Such kind of metadata may help in retrieving images with greater

recall than usual. In fact, a student might search for images of

the stomach. If annotated as suggested, the student will be able to

discover this image of the gastric mucosa, since it is a part of the

stomach according to the MeSH ontology. On the other side, when looking for

samples of membranes, she will again find also this image, since it

is also depicting a specific kind of membrane. The same could be

said for queries involving epithelium, which is not the main

subject of the image, but it is still a part of it.

A possible scenario

As a scenario, let's consider an image from the medical field of

histology, a field that studies the microscopic structure of

tissue. The image is taken using a microscope, and stored for

educational aims. Due to this specific purpose, there is no need

to annotate the image with sensible patient' data. However, some

personal data are needed to interpret the image meaning: for example, age

and gender meaning are sometimes useful variables, as also patient personal and familiar

history are. These could become annotations of the image and

eventually also of other images of the same patient, acquired with

the same tool or even some other modality. This may correspond to

the following RDF code, where we also consider that to the same

patient, many images could be associated (in this case, image1.jpg

and image2.jpg):

<?xml version="1.0"?>

<rdf:RDF

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#"

xmlns:dc="http://purl.org/dc/elements/1.1/"

xmlns:dcterms="http://purl.org/dc/terms/">

<rdf:Description rdf:about="http://www.telemed.uniud.it/cases/case1/">

<dc:creator>Beltrami, Carlo Alberto</dc:creator>

<dc:contributor>Della Mea, Vincenzo</dc:contributor>

<dc:title>Sample annotated image</dc:title>

<dc:subject>

<dcterms:MESH>

<rdf:value>A10.549.400</rdf:value>

<rdfs:label>lymph nodes</rdfs:label>

</dcterms:MESH>

</dc:subject>

<dc:subject>

<dcterms:MESH>

<rdf:value>C04.697.650.560</rdf:value>

<rdfs:label>lymphatic metastasis</rdfs:label>

</dcterms:MESH>

</dc:subject>

<dc:subject>

<dcterms:MESH>

<rdf:value>C04.557.470.200.025.200</rdf:value>

<rdfs:label>carcinoid tumor</rdfs:label>

</dcterms:MESH>

</dc:subject>

<dc:date>2006-05-30</dc:date>

<dc:language>EN</dc:language>

<dc:description>Female, 86 yrs. Some clinical history. This field might be further structured.</dc:description>

<dc:identifier>http://www.telemed.uniud.it/cases/case1/</dc:identifier>

<dc:relation.hasPart rdf:resource="http://www.telemed.uniud.it/cases/case1/image1.jpg"/>

<dc:relation.hasPart rdf:resource="http://www.telemed.uniud.it/cases/case1/image2.jpg"/>

</rdf:Description>

</rdf:RDF>

The subject has been described using terms from MeSH. Another

level of description regards the biological sample and its

preparation for microscopy. The biological sample comes from a body

organ. It has been sectioned and stained using some specific

chemical product. Associated to the sample, there are one or more

diagnostic hypotheses, which derive from both patient data and

image (or images) content. Let's consider to have a chromogranin

stained section of a lymph node (chromogranin is an

immunohistochemical antibody), showing signs of metastatis from a

carcinoid tumor. A final level of description regards the

acquisition device, constituted by a microscope with specific

optical properties (the most important of which are objective

magnification and numerical aperture, which influence the optical

resolution and thus the visible detail), and the camera, with its

pixel resolution and color capabilities. In this scenario, the

image might have been acquired with a 10x objective, 0.20 NA, at a

resolution of 1600x1200 pixel.

<rdf:Description rdf:about="http://www.telemed.uniud.it/cases/case1/image1.jpg">

<dp:image dp:staining="chromogranin" dp:magnification="10" dp:na="0.20"

dp:xsize="1600" dp:ysize="1200" dp:depth="24" dp:xres="1.2" dp:yres="1.2"/>

<dc:Description>chromogranin-stained section of lymph node</dc:Description>

<dc:subject>

<dcterms:MESH>

<rdf:value>C030075</rdf:value>

<rdfs:label>chromogranin A</rdfs:label>

</dcterms:MESH>

</dc:subject>

<dc:subject>

<dcterms:MESH>

<rdf:value>E05.200.750.551.512</rdf:value>

<rdfs:label>immunohistochemistry</rdfs:label>

</dcterms:MESH>

</dc:subject>

<dc:subject>

<dcterms:MESH>

<rdf:value>E05.595</rdf:value>

<rdfs:label>microscopy</rdfs:label>

</dcterms:MESH>

</dc:subject>

</rdf:Description>

In addition to global metadata referring to the whole image,

multimedia related ontologies can be used to localize and represent

regions within particular images. Annotations can provide for a

description of specific morphological features, including a

specification of the depicted anatomical details, presence and kind

of abnormal cells, and generally speaking, features supporting the

above mentioned diagnostic hypotheses. In such an image, metastatic

cells are evidentiated in brown by the staining, and can be

semantically annotated. In this case, the MeSH terminology does not

provide sufficient detail, so that we must chose another source of

terms: the NCI Thesaurus is one possibility.

<rdf:Description id="area1">

<dp:rectangle>some rectangle definition</dp:rectangle>

</rdf:Description>

<rdf:Description about="#area1">

<dc:Description>metastatic cells</dc:Description>

<dc:subject>

<dcterms:NCI>

<rdf:value>C4904</rdf:value>

<rdfs:label>Metastatic_Neoplasm_to_Lymph_Nodes</rdfs:label>

</dcterms:NCI>

</dc:subject>

<dc:subject>

<dcterms:NCI>

<rdf:value>C12917</rdf:value>

<rdfs:label>Malignant_Cell</rdfs:label>

</dcterms:NCI>

</dc:subject>

</rdf:Description>

Current Semantic Web technologies are sufficiently generic to support

annotation of a wide variety of Web resources, including image

resources. This document provides examples of the use of Semantic

Web languages and tools for image annotation, based on use cases

for a wide variety of domains. It also briefly surveys some

currently available vocabularies and tools that can be used to