Projects

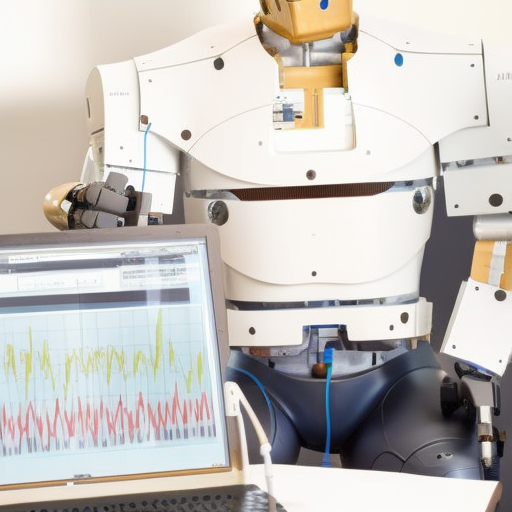

As models get smarter, humans won't always be able to independently check whether a model's claims are true or false. We aim to circumvent this issue by directly eliciting latent knowledge (ELK) from the model's activations.

Alignment-MineTest is a research project that uses the open-source Minetest voxel engine as a platform for studying AI alignment.
