This new Docker extension lets you run Docker Model Runner as the inference service behind Open WebUI, powering a richer chat experience. This post walks you through why Docker Model Runner and Open WebUI make a powerful duo, how to set up your own local AI assistant, and what’s happening under the hood.

Local LLMs are no longer just experimental toys. Thanks to rapid advances in model optimization and increasingly powerful consumer hardware, local large language models have gone from proof-of-concept curiosities to genuinely useful tools.

Even a MacBook with an M-series chip can now run models that deliver fast, meaningful responses offline, without an internet connection or API keys.

Docker Model Runner is available in Docker Desktop and as a plugin for Docker CE, and it is fully open source. It makes getting started easy: just pick a model in the UI or run a single docker model run from the CLI.

You’ll have a fully operational model up and running in seconds.

Docker Model Runner + Open WebUI: A powerful duo for running richer, local AI chat experiences

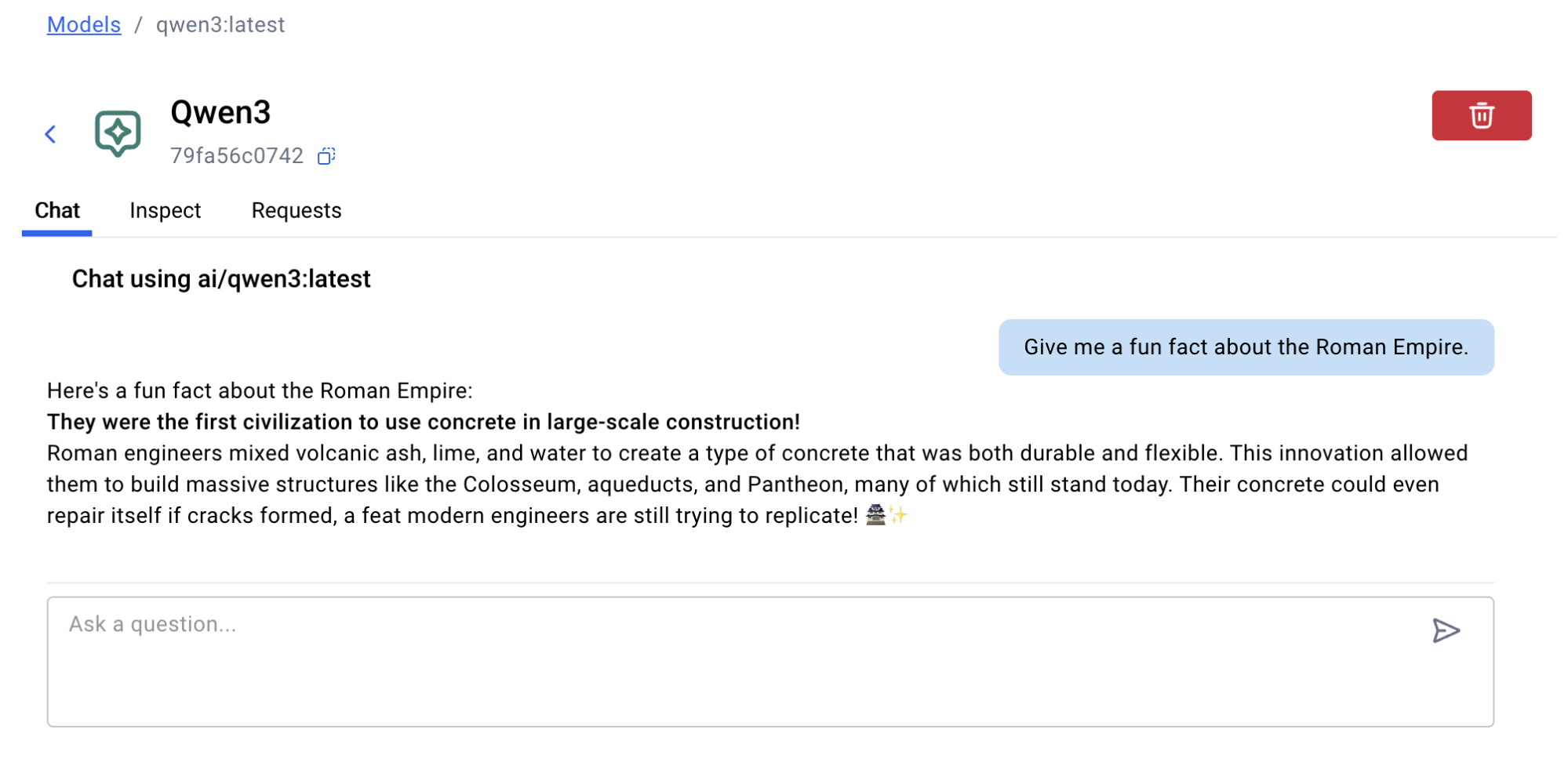

Docker Model Runner is designed as an inference service in Docker Desktop or the CLI, allowing developers to run models locally with the familiar workflows and commands they know and trust. From a design perspective, this means it provides only the bare minimum: a prompt box and a response field. There’s no memory. No file upload. No chat flow. No interface that feels like a real assistant. This is deliberate: there is no intention to replicate within Docker Desktop an experience that other offerings in the wider ecosystem already implement well.

That’s where Open WebUI comes in: a modern, self-hosted interface designed specifically for working with local LLMs.

It brings chat history, file uploads, prompt editing, and more. All local. All private.

That’s why an extension was created: to combine the two.

This Docker Extension launches Open WebUI and hooks it directly into your running model via Docker Model Runner. No configuration. No setup. Just open and go.

Let’s see how it works.

From Zero to Local AI Assistant in a Few Clicks

If you already have Docker Desktop installed, you’re almost there.

Head over to the Models tab and pick any model from the Docker Hub section: GPT-OSS, Gemma, LLaMA 3, Mistral or others.

One click, and Docker Model Runner will pull the model and start serving it locally.

Prefer the CLI? A single docker model pull does the same job.
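Once a model is being served, other programs can talk to it as well. The sketch below shows what a chat request could look like, assuming Docker Model Runner exposes an OpenAI-compatible API on the host at http://localhost:12434/engines/v1 (host-side TCP access may need to be enabled in the settings). The base URL, the helper names, and the model name in the usage note are assumptions for illustration, not something this extension requires.

```python
import json
import urllib.request

# Assumption: Docker Model Runner's OpenAI-compatible API is reachable here.
BASE_URL = "http://localhost:12434/engines/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def ask(model: str, prompt: str) -> str:
    """Send the request; needs a model that has already been pulled."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With a model already pulled, a call such as ask("ai/gemma3", "Say hello") would return the reply text; replace the model name with whichever one you downloaded.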

Next, you might want something more capable than a single input box.

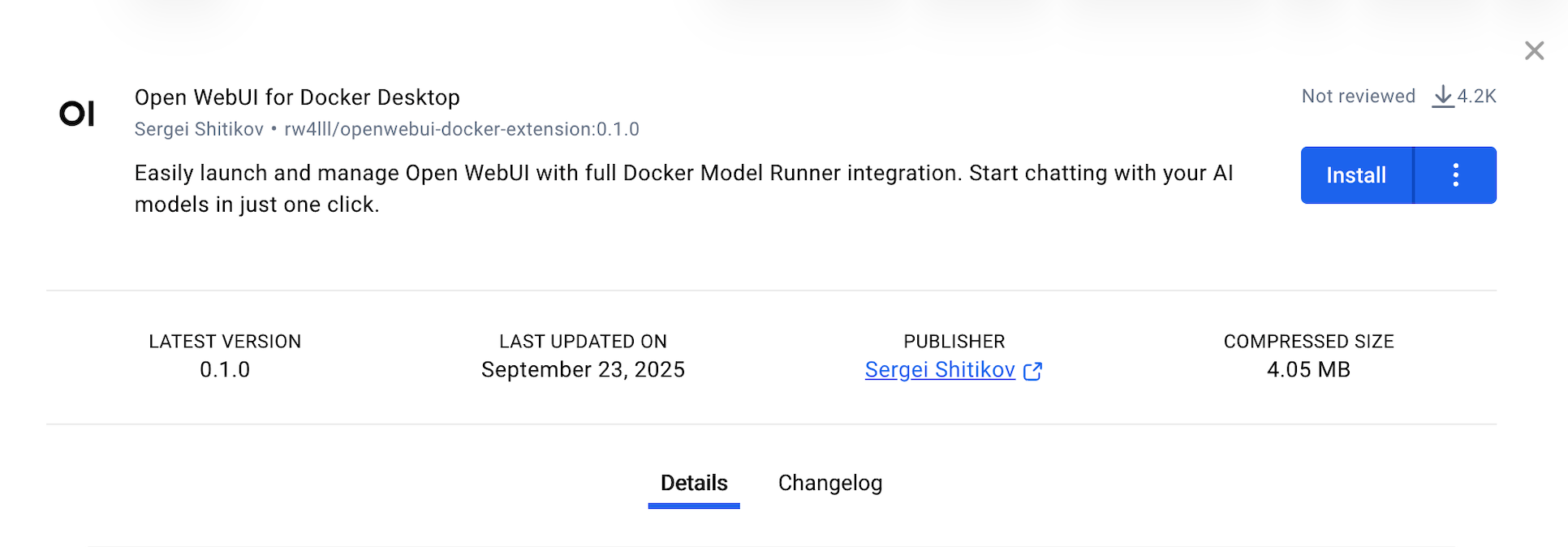

Open the Extensions Marketplace inside Docker Desktop and install the Open WebUI extension, a feature-rich interface for local LLMs.

It automatically provisions the container and connects to your local Docker Model Runner.

All models you’ve downloaded will appear in the WebUI, ready to use; no manual config, no environment variables, no port mapping.

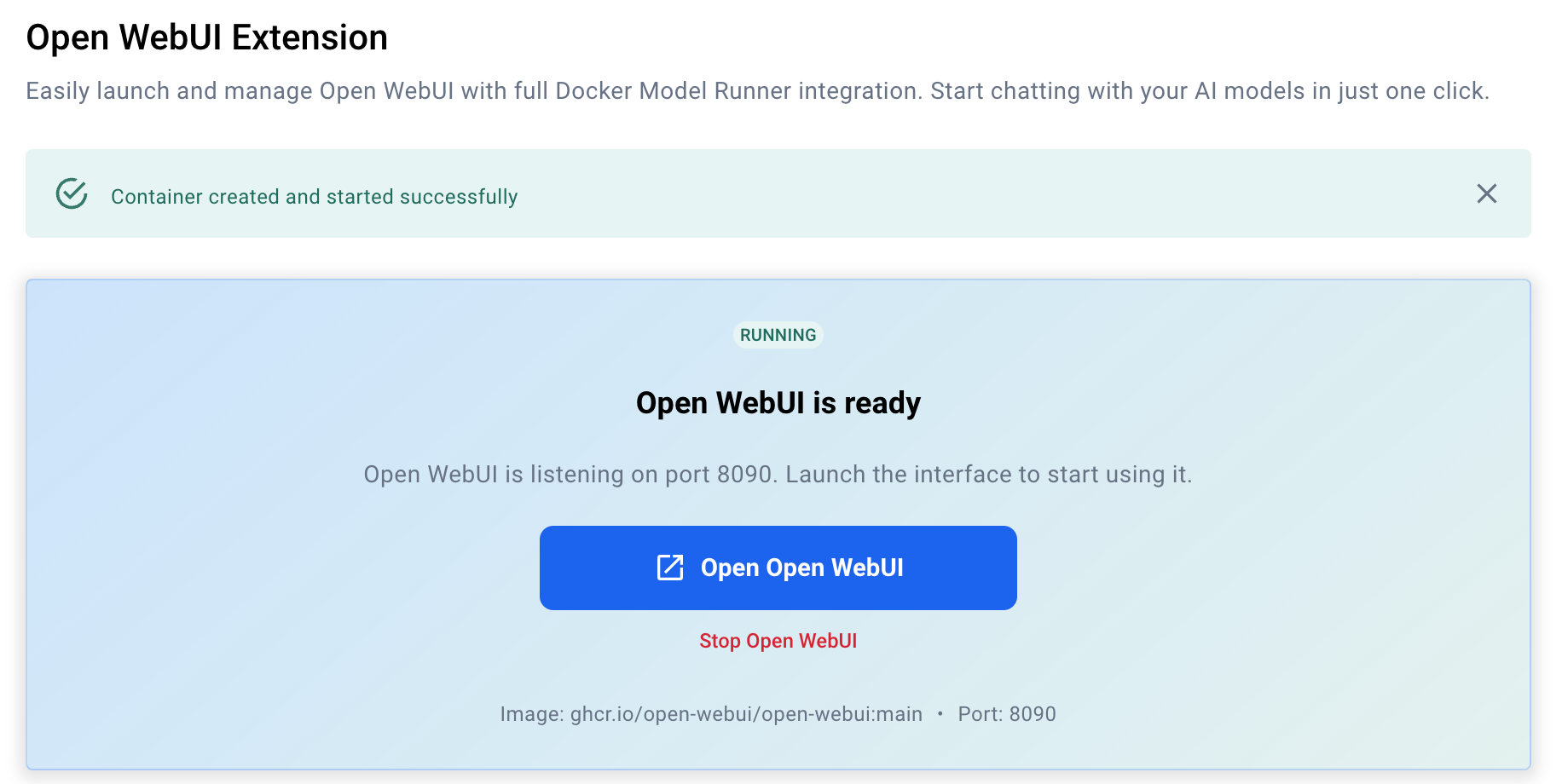

Once setup completes, you’ll see a screen confirming the extension is running, along with a button to launch the interface in your browser.

Alternatively, you can open it manually at http://localhost:8090 (default port) or bookmark it for future use.

Note: The first-time startup may take a couple of minutes as Open WebUI installs required components and configures integration.

Subsequent launches are much faster – nearly instant.
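If you script around the extension, that first slow startup is worth accounting for. Here is a minimal sketch that polls the default address until the interface answers. The retry counts and helper names are arbitrary choices for illustration.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with a successful HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status: not ready yet.
        return False


def wait_until_ready(
    url: str,
    probe: Callable[[str], bool] = is_up,
    attempts: int = 60,
    delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Probe the URL up to `attempts` times, pausing `delay` seconds between tries."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

Calling wait_until_ready("http://localhost:8090") blocks for at most about five minutes with these defaults, which comfortably covers a first launch that takes a couple of minutes.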

What You Can Do with Open WebUI

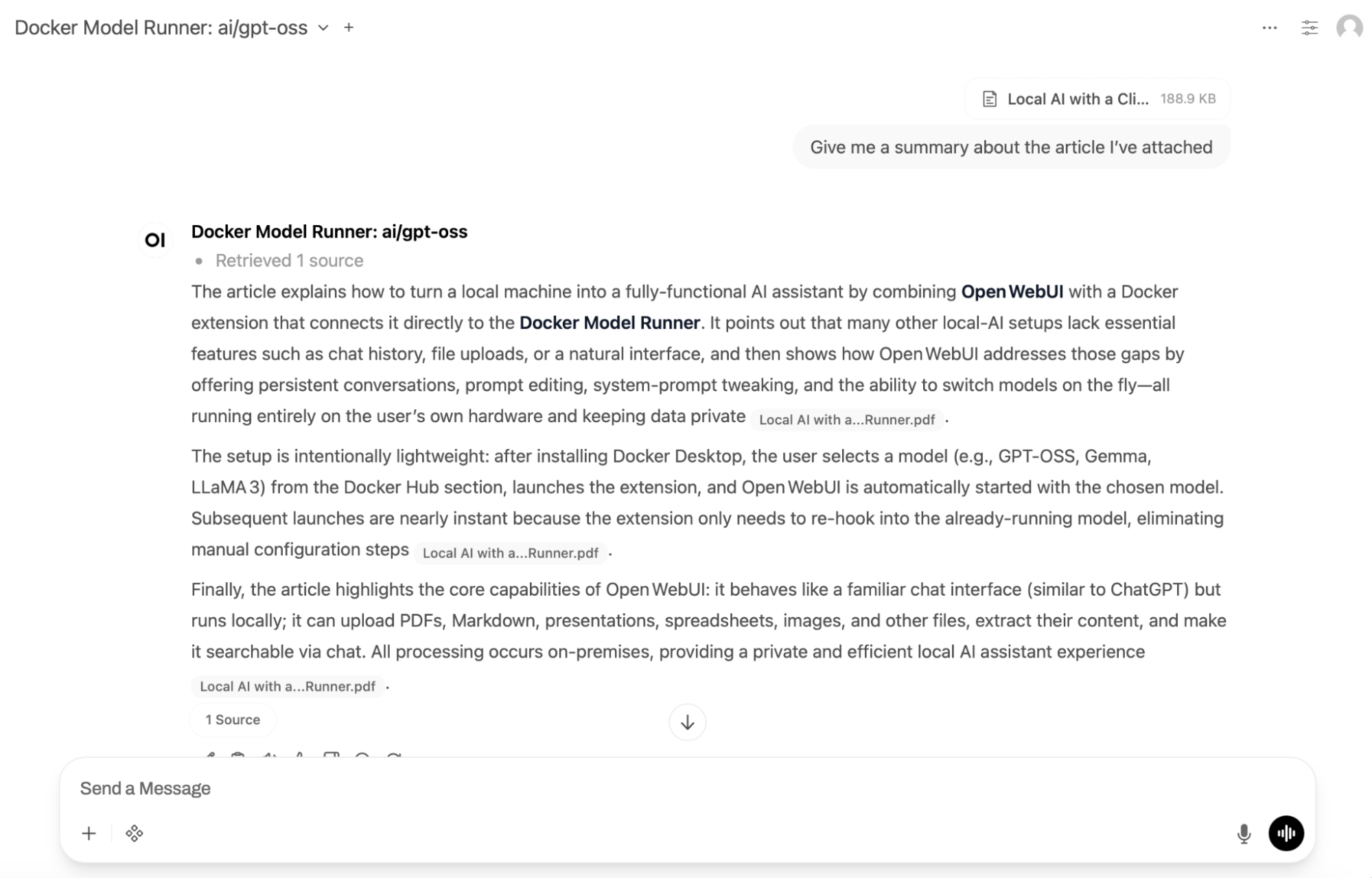

Once installed, Open WebUI feels instantly familiar, like using ChatGPT, but running entirely on your own machine.

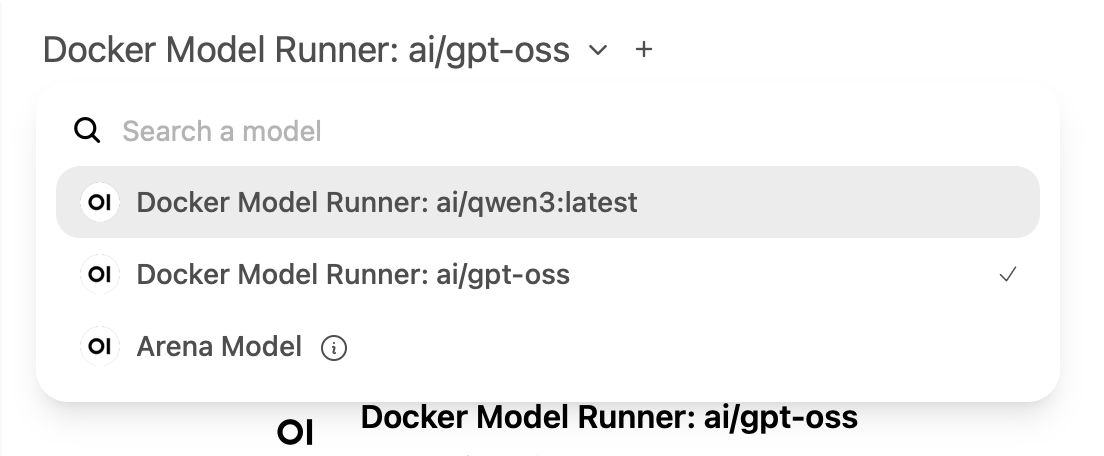

You get a full chat experience, with persistent conversations, system prompt editing, and the ability to switch between models on the fly.

Upload files and chat with them

Drop in PDFs, Markdown files, presentations, spreadsheets, or even images.

Open WebUI extracts the content and makes it queryable through the chat.

Need a summary, quick answer, or content overview? Just ask: all processing happens locally.

Speak instead of type

With voice input turned on, you can talk to your assistant right from the browser.

This is great for hands-free tasks, quick prompts, or just demoing your local AI setup to a friend.

This requires granting your browser permission to use the microphone.

Define how your model behaves

Open WebUI supports full control over system prompts with templates, variables, and chat presets.

Whether you’re drafting code, writing blog posts, or answering emails, you can fine-tune how the model thinks and responds.

Switch between models instantly

Already downloaded multiple models using Docker Model Runner?

Open WebUI detects them automatically. Pick any model from the dropdown and start chatting; no restart required.

Save insights to memory

Want the model to remember something specific?

You can store facts or reminders manually in the personal memory panel and edit or remove them at any time.

More Things You Can Do

Open WebUI goes beyond chat with advanced tools that power real workflows:

- Function Calling & Plugins: Use prebuilt tools or define your own Python functions via the Pipelines framework, ideal for automations, translations, or data lookups.

- Multilingual UI: Open WebUI supports a wide range of interface languages and is fully localizable, perfect for international teams or non-English users.

- Secure, local-first by design: No sign-up and no cloud storage. Everything stays on your machine, under your control.

Note: Not all features are universally available. Some depend on the model’s capabilities (e.g., function calling, image understanding), your current Open WebUI settings (e.g., voice input, plugins), or the hardware you’re running on (e.g., GPU acceleration, local RAG performance).

Open WebUI aims to provide a flexible platform, but actual functionality may vary based on your setup.
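To give a feel for the "define your own Python functions" part, here is a rough sketch of a small tool collection. It assumes the convention, as we understand it, that Open WebUI tools are plain Python classes whose type-hinted, documented methods become callable tools. The class and method names below are illustrative, so check the Open WebUI documentation for the exact structure your version expects.

```python
from datetime import datetime, timezone


class Tools:
    """Hypothetical tool collection; each method is a candidate tool for the model."""

    def current_utc_time(self) -> str:
        """Return the current UTC time as an ISO 8601 string."""
        return datetime.now(timezone.utc).isoformat(timespec="seconds")

    def word_count(self, text: str) -> str:
        """Count the whitespace-separated words in a piece of text."""
        return f"{len(text.split())} words"
```

Whether the model actually calls these depends on its function-calling support, as noted above.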

How it works inside

Under the hood, the extension brings together two key components: integration between Open WebUI and Docker Model Runner, and a dynamic container provisioner built into the Docker extension.

Open WebUI supports Python-based “functions”, lightweight plugins that extend model behavior.

This extension includes a function that connects to Docker Model Runner via its local API, allowing the interface to list and access all downloaded models automatically.
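The extension's real function is not reproduced here, but the idea can be sketched. The example below is a hypothetical stand-in that loosely follows what we understand to be Open WebUI's pipe-function convention (a Pipe class whose pipes() method returns the selectable models). It assumes Docker Model Runner answers an OpenAI-style model listing at the address shown; both the address and the response shape are assumptions.

```python
import json
import urllib.request
from typing import Callable

# Assumption: address at which a container can reach Docker Model Runner.
MODELS_URL = "http://model-runner.docker.internal/engines/v1/models"


def fetch_json(url: str) -> dict:
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


class Pipe:
    """Hypothetical stand-in for the extension's function, not its actual code."""

    def __init__(self, fetch: Callable[[str], dict] = fetch_json, models_url: str = MODELS_URL):
        self._fetch = fetch
        self._models_url = models_url

    def pipes(self) -> list[dict]:
        """Return one entry per downloaded model for the model dropdown."""
        payload = self._fetch(self._models_url)
        return [{"id": m["id"], "name": m["id"]} for m in payload.get("data", [])]
```

Injecting the fetch function keeps the listing logic testable without a running Model Runner.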

When you install the extension, Docker spins up the Open WebUI container on demand. It’s not a static setup: the container is configured dynamically based on your environment. You can:

- Switch to a different Open WebUI image (e.g., CUDA-enabled or a specific version)

- Change the default port

- Use custom environments and advanced flags (coming soon)

The extension handles all of this behind the scenes, but gives you full control when needed.

Conclusion

You’ve just seen how the Docker Open WebUI Extension turns Docker Model Runner from a simple model launcher into a fully-featured local AI assistant with memory, file uploads, multi-model support, and more.

What used to require custom configs, manual ports, or third-party scripts now works out of the box, with just a few clicks.

Next steps

- Install the Open WebUI Extension from the Docker Desktop Marketplace

- Download a model via Docker Model Runner (e.g., GPT-OSS, Gemma, LLaMA 3, Mistral)

- Launch the interface at http://localhost:8090 and start chatting locally

- Explore advanced features: file chat, voice input, system prompts, knowledge, plugins

- Switch models anytime or try new ones without changing your setup

The future of local AI is modular, private, and easy to use.

This extension brings us one step closer to that vision, and it’s just getting started.

Get involved

- Star or contribute to Open WebUI Docker Extension on GitHub

- Follow updates and releases in the Docker Extensions Marketplace

- Contribute to the Docker Model Runner repo: it’s open source and community-driven

- Share feedback or use cases with the Docker and Open WebUI communities

Enter the Docker MCP Toolkit.

The Model Context Protocol (MCP) Toolkit acts as a secure bridge, allowing AI models like Codex to safely discover and use any of the 200+ MCP servers from the trusted MCP catalog curated by Docker.

In this post, we’ll walk through an end-to-end demo, just like our Claude Code and Gemini CLI tutorials. But this time, we’re pairing Codex with Neo4j MCP servers.

First, we’ll connect Codex to the Neo4j server using the MCP Toolkit. Then, we’ll show a fun example: building a graph of Pokémon species and their types, and exploring the data visually. While playful, this example highlights how Codex + MCP can be applied to real-world, semi-structured data pipelines.

Read on to see how a generic AI assistant, when supercharged with Docker and MCP, can evolve into a specialized data engineering powerhouse!

Why use Codex with Docker MCP

While Codex provides powerful AI capabilities and MCP provides the protocol, Docker MCP Toolkit makes automated data modeling and graph engineering practical. Without containerization, building a knowledge graph means managing local Neo4j installations, dealing with database driver versions, writing boilerplate connection and authentication code, and manually scripting the entire data validation and loading pipeline. A setup that should take minutes can easily stretch into hours for each developer.

Docker MCP Toolkit eliminates this friction:

- 200+ pre-built MCP servers in the Catalog

- One-click deployment through Docker Desktop

- Neo4j Data Modeling MCP for schema design and validation

- Neo4j Cypher MCP for direct database queries and ingestion

- Secure credential management for database passwords

- Consistent configuration across Mac, Windows, and Linux

- Automatic updates when new server versions are released

We built Docker MCP Toolkit to meet developers where they are. If you’re using Codex, you should be able to engineer a knowledge graph without wrestling with database infrastructure.

The Setup: Connecting Codex to Neo4j Tools

Prerequisites

First, we need to give Codex access to the specialized Neo4j tools.

- Install Codex and run it at least once to get authentication out of the way

- Install Docker Desktop 4.40 or later

- Enable MCP Toolkit

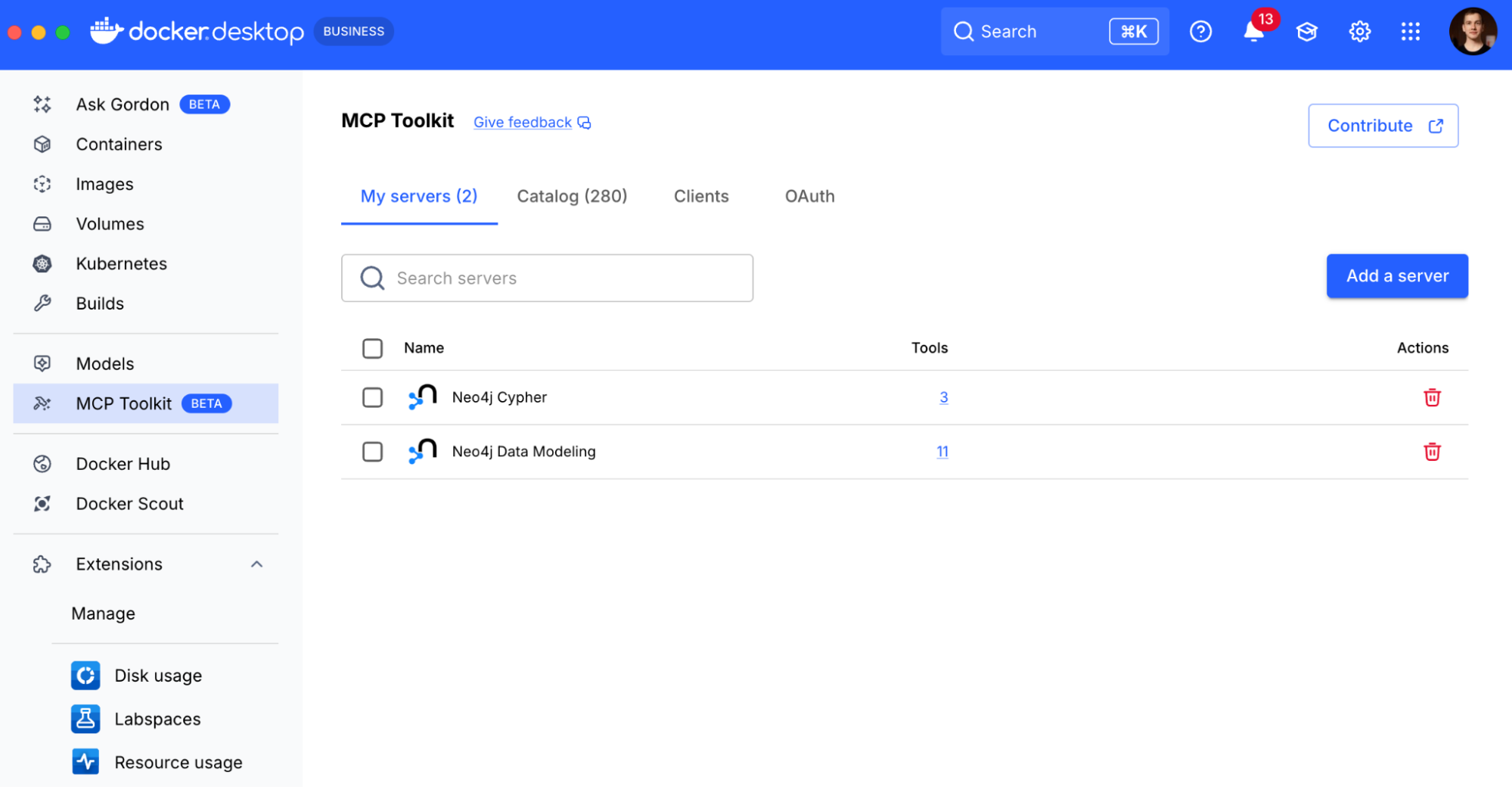

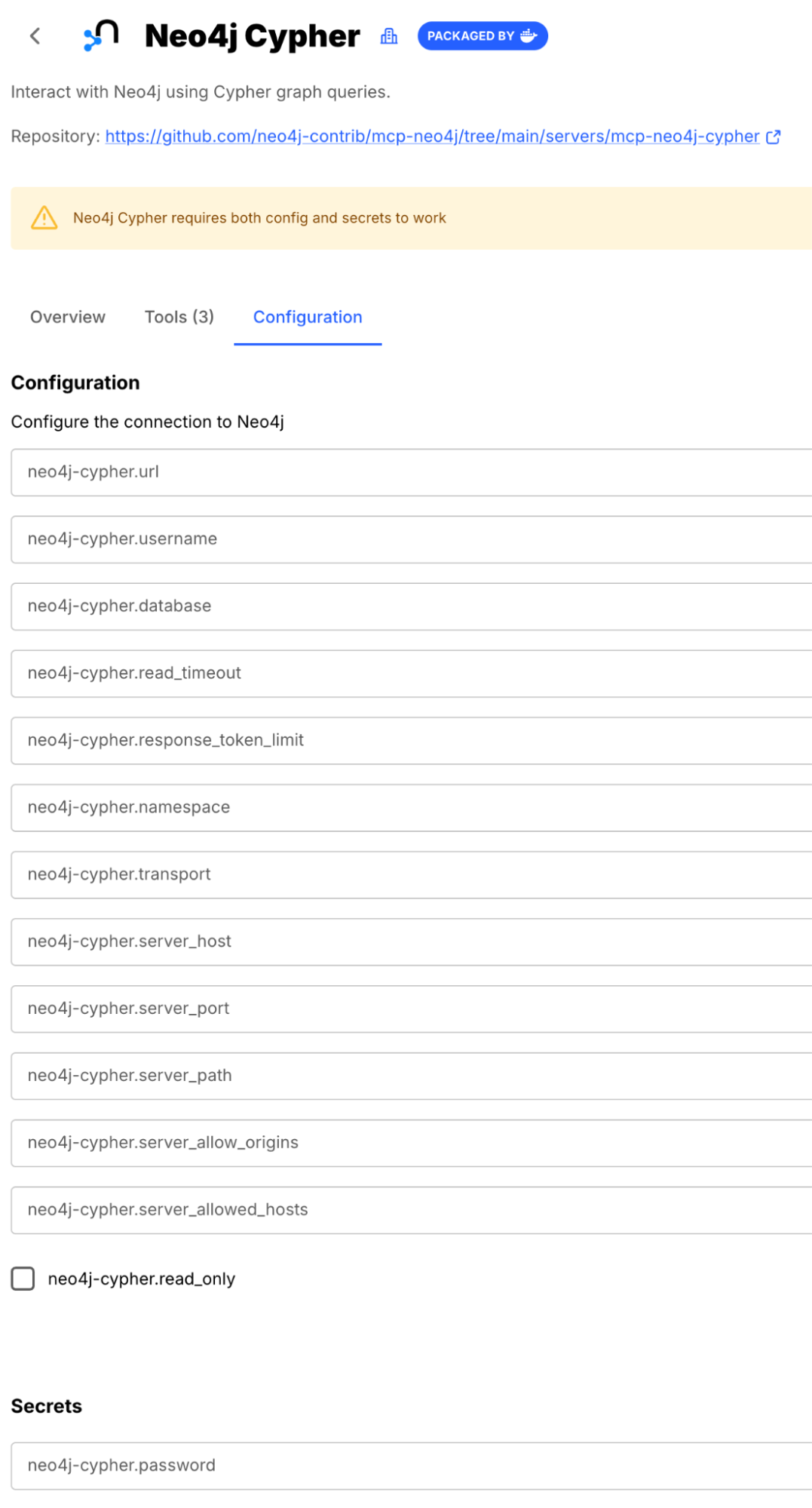

Step 1: Add the Neo4j MCP Servers

The Neo4j Cypher and Data Modeling servers are available out-of-the-box in the main MCP Toolkit catalog.

- In Docker Desktop, navigate to the MCP Toolkit tab.

- Click the Catalog tab.

- Search for “Neo4j” and click + Add for both the Neo4j Cypher and Neo4j Data Modeling servers.

- They will now appear in your “My servers” list.

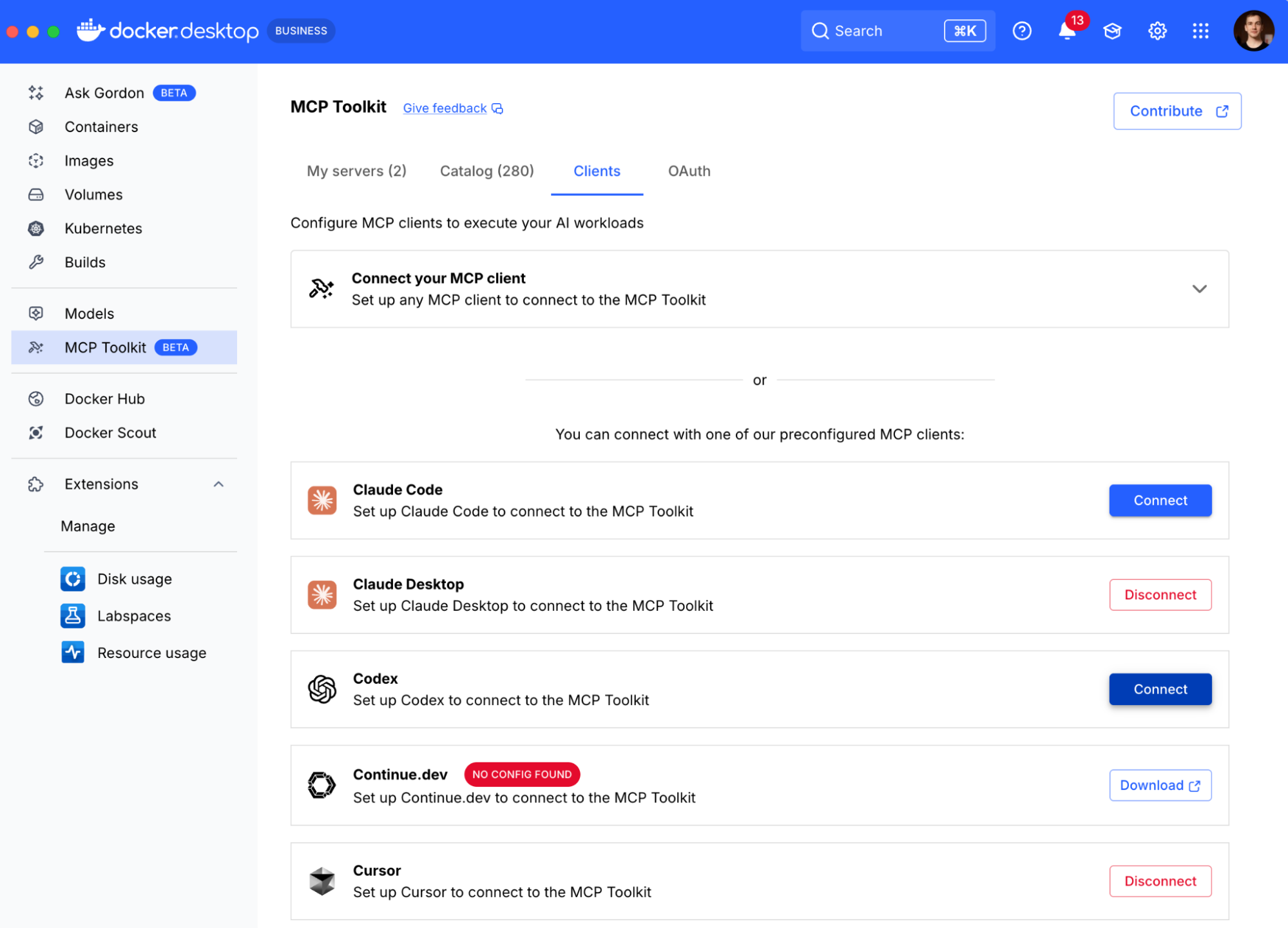

Step 2: Connect Codex to the MCP Toolkit

With our tools ready, we run a one-time command to make Codex aware of the MCP Toolkit:

docker mcp-client configure codex

We can also do that from the Docker Desktop UI: navigate to the Clients tab and smash that Connect button next to Codex and any other assistants you use.

Docker will edit the corresponding configuration files, and the next time Codex starts, it’ll connect to the MCP Toolkit and you’ll have the tools at your disposal!

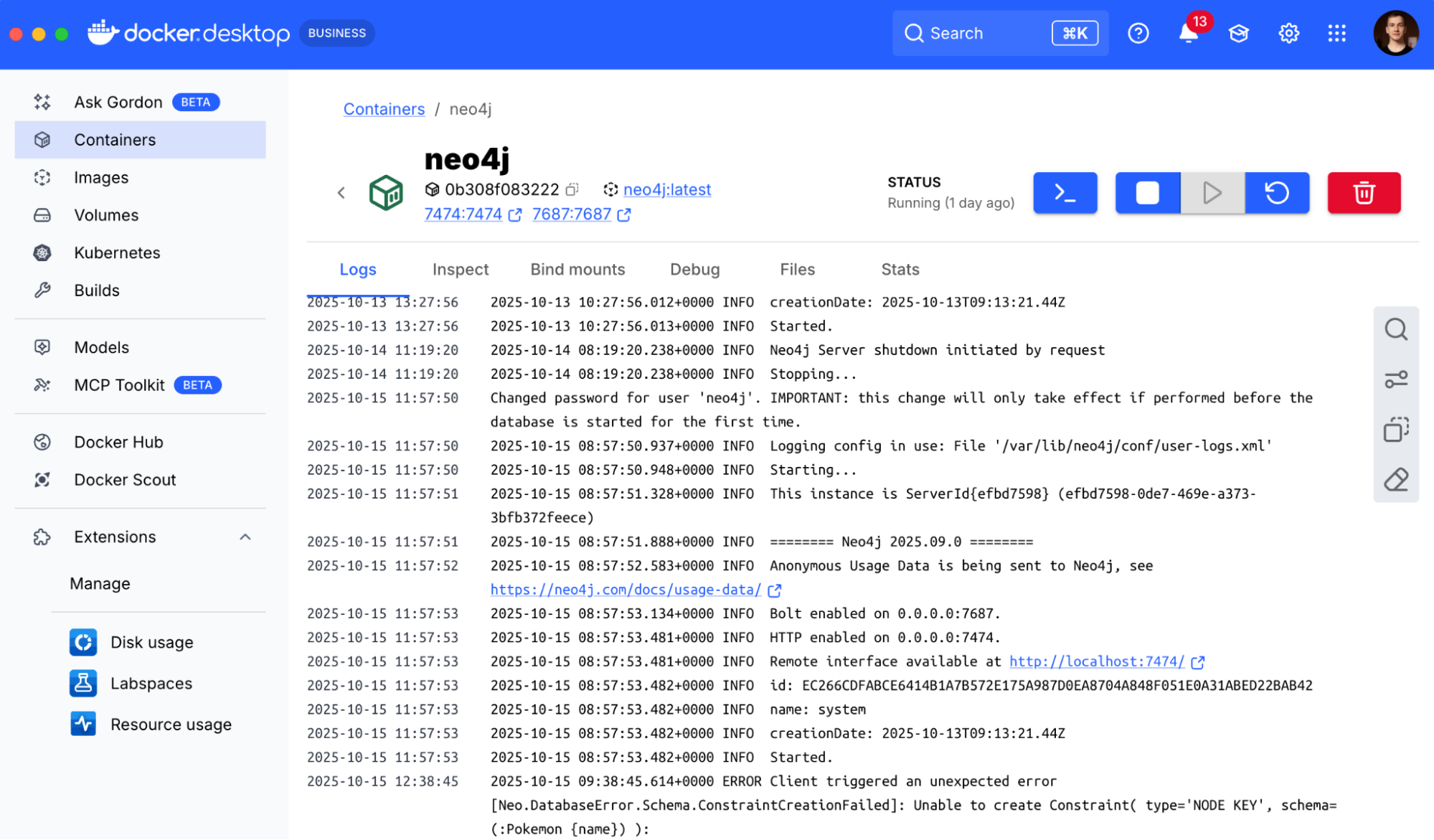

Step 3: Start and Configure Neo4j

We still need to configure the Neo4j Cypher MCP server to connect to the Neo4j database, so we’ll set this up now. We’ll use Codex to start our Neo4j database and configure the connection. First, we ask Codex to create the container:

› Spin up a Neo4j container for me in Docker please.

Codex will run the necessary Docker command and get our Neo4j container running. You can of course do this manually, use a cloud service, or download the Neo4j Desktop application, but since we’re having fun in Codex, why not make it do these mundane things for us?
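For reference, the command Codex ends up running is probably close to the following sketch. The exact flags Codex chooses may differ. The container name and image tag here are assumptions, while the ports and the neo4j/password credentials match the ones used later in this post.

```python
import subprocess


def neo4j_run_command(
    password: str = "password",
    name: str = "neo4j",          # assumed container name
    image: str = "neo4j:latest",  # assumed image tag
) -> list[str]:
    """Build the docker run invocation for a throwaway local Neo4j instance."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--publish", "7474:7474",  # HTTP console (Neo4j Browser)
        "--publish", "7687:7687",  # Bolt port used by drivers and the MCP server
        "--env", f"NEO4J_AUTH=neo4j/{password}",
        image,
    ]


def start_neo4j(**kwargs) -> str:
    """Run the command and return the new container ID."""
    result = subprocess.run(
        neo4j_run_command(**kwargs), check=True, capture_output=True, text=True
    )
    return result.stdout.strip()
```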

With Neo4j available in the container, we now need to configure the Neo4j Cypher MCP server to connect to it. The Neo4j Data Modeling MCP server works without any configuration. To simplify this, you can take a screenshot of the configuration form and ask Codex how to fill it in to connect to the container we just started.

Next, we asked Codex for help, pointing it to a screenshot of the configuration page:

› There is a screenshot in this directory. It has the information we need to configure, MCP server to connect to the Neo4j database. Please look at it and give me the information to configure the MCP server to connect to our Neo4j container.

Codex found the running container, inspected it, and determined its authentication details (e.g., NEO4J_AUTH=neo4j/password). It then provided the correct configuration values. We just need to update the Neo4j Cypher MCP server configuration in the Docker Desktop UI with the neo4j-cypher.url (neo4j://localhost:7687) and the password.

Fill in the form in the Docker MCP Toolkit for the Neo4j Cypher MCP server, then restart Codex.
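What Codex did here boils down to reading the container's environment and translating it into connection settings. A minimal sketch of that translation follows, assuming the environment arrives as a list of KEY=value strings (the form docker inspect reports). The keys of the returned dictionary are hypothetical names, not the form's actual field identifiers.

```python
def cypher_config_from_env(env: list[str], host: str = "localhost", bolt_port: int = 7687) -> dict:
    """Derive connection settings from a container's KEY=value environment list."""
    settings = dict(item.split("=", 1) for item in env if "=" in item)
    auth = settings.get("NEO4J_AUTH", "")
    if "/" not in auth:
        # Covers a missing variable as well as values such as "none".
        raise ValueError("NEO4J_AUTH is missing or not in 'user/password' form")
    username, password = auth.split("/", 1)
    return {
        "url": f"neo4j://{host}:{bolt_port}",
        "username": username,
        "password": password,
    }
```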

Use Case: Building a Pokémon Graph with Codex + Neo4j

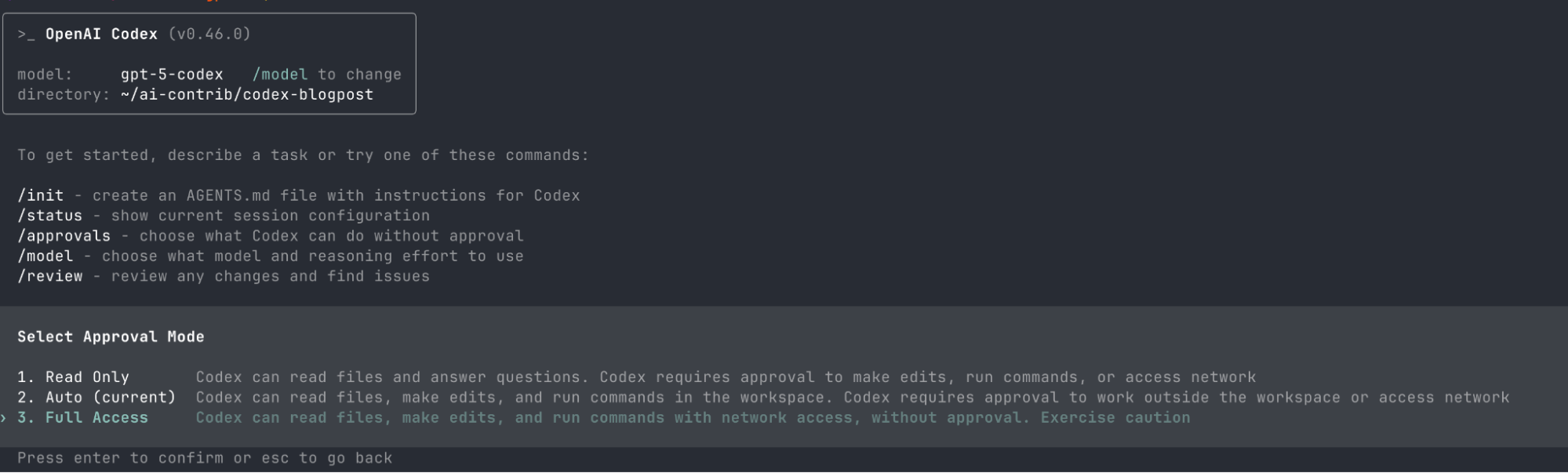

Now we’re ready to tackle our original problem of building the graph of Pokémon species. First of all, enter YOLO mode by typing /approvals and selecting the mode where Codex has the freedom to do whatever it pleases.

Next, we can throw the full power of Codex models at our task. We give it the full problem statement:

› Here's the page with all pokemon in generation 1: https://bulbapedia.bulbagarden.net/wiki/List_of_Pok%C3%A9mon_by_index_number_in_Generation_I

and here's the types information: https://bulbapedia.bulbagarden.net/wiki/Type

we're going to create a graph with data about these pokemon (ignore MissingNo entries - they are a glitch and ignore other glitched pokemon).

you have a neo4j container running, and the MCP tools for modelling and cypher.

Codex will accept the task, break it down into a plan, and start cranking away at it.

There are a few interesting moments in how it approaches it, though it largely depends on the model you configure Codex with. For me it was “model: gpt-5-codex” and it tends to use scripting more than chaining curl commands for example.

Here’s the plan you might see if you’re following along:

• I’m preparing to build a Pokemon graph by fetching data from a website using network access. Since this is a complex task, I’ll create a detailed plan involving data gathering, modeling

entities like Pokémon and Types, defining relationships such as evolutions and type effectiveness, and then using tools like MCP Docker to build the Neo4j graph. The first step is to draft

• Updated Plan

└ □ Inspect requirements and gather Generation I Pokémon data with types and evolution chains

□ Define Neo4j graph data model for Pokémon, Types, and relationships

□ Load data into Neo4j via Cypher ingestion and validate

Then it immediately started cranking out Python scripts with requests, BeautifulSoup, and other web scraping friends to fetch the data from the provided wiki. Interestingly, the Pokémon data is not actually on a single page but spread across subpages for every Pokémon species, so scraping with Python makes a lot more sense than curling the pages and spending tokens on processing the data in the model. It also leaves the scripts behind, so you can verify and reproduce the results if needed.
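The scripts Codex wrote are not reproduced in this post, but the core of such a scraper is small. The sketch below uses only the standard library instead of requests and BeautifulSoup, and it parses a made-up table snippet. The real wiki markup is more involved, so treat the column layout and field names as assumptions.

```python
from html.parser import HTMLParser

# Hypothetical sample markup; the real pages are laid out differently.
SAMPLE = """
<table>
  <tr><th>#</th><th>Name</th><th>Type</th></tr>
  <tr><td>001</td><td><a href="/x">Bulbasaur</a></td><td>Grass</td><td>Poison</td></tr>
  <tr><td>004</td><td>Charmander</td><td>Fire</td></tr>
</table>
"""


class TableRows(HTMLParser):
    """Collect every table row as a list of stripped cell strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None outside a row
        self._cell = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.rows.append(self._row)
            self._row, self._cell = None, None


def parse_species(html: str) -> list[dict]:
    """Turn data rows into dictionaries, skipping header rows."""
    parser = TableRows()
    parser.feed(html)
    return [
        {"number": int(row[0]), "name": row[1], "types": row[2:]}
        for row in parser.rows
        if len(row) >= 3 and row[0].isdigit()
    ]
```

Running parse_species(SAMPLE) yields one dictionary per species, each with a number, a name, and a list of one or two types, which is the shape an ingestion step can consume directly.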

Eventually the model proceeds to analyze the data, and here the MCP tools become really helpful. You can see below that it’s calling the MCP_DOCKER.validate_data_model from the server we configured earlier.

I'm outlining a comprehensive data model for Pokemon, types, and their relationships with precise property types and units converted for better clarity. I plan to generate ingestion-ready

JSON files via a Python script to integrate cleanly with Neo4j ingestion tools, ensuring the data pipeline is robust and structured.

└ wrote data model to data/gen1_pokemon_model.json

• Called MCP_DOCKER.validate_data_model({"data_model":"data/gen1_pokemon_model.json","return_validated":true})

└ Input validation error: 'data/gen1_pokemon_model.json' is not of type 'object'

....

It took Codex a few attempts to figure out the data model JSON schema and produce one for the Pokémon that satisfied the Neo4j Data Modeling MCP server.
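The first error in the log above is easy to read in hindsight: the tool expects the data model itself as a JSON object, and Codex passed the path to the file. A sketch of the fix follows, reusing the argument names visible in the log; the helper names are ours, and the contents of the model are left untouched because the schema is not shown in this post.

```python
import json
from pathlib import Path


def arguments_from_json_text(model_json: str) -> dict:
    """Build tool-call arguments from the model's JSON text."""
    data_model = json.loads(model_json)
    if not isinstance(data_model, dict):
        raise TypeError("the data model must be a JSON object at the top level")
    # Pass the parsed object, not the file path that triggered the error.
    return {"data_model": data_model, "return_validated": True}


def arguments_from_file(model_path: str) -> dict:
    """Same as above, reading the JSON text from disk first."""
    return arguments_from_json_text(Path(model_path).read_text(encoding="utf-8"))
```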

Then it returned to Python for creating the data ingestion script and loaded the data into the Neo4j instance.
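We don't show Codex's ingestion script here, but a common pattern for this step is a single parameterized Cypher statement fed with batches of rows. The sketch below assumes the Pokemon and Type labels and the HAS_TYPE relationship used elsewhere in this post; the number property, the row shape, and the batch size are our own assumptions.

```python
from typing import Iterator

# One statement handles any number of rows passed in as the $rows parameter.
INGEST_QUERY = """
UNWIND $rows AS row
MERGE (p:Pokemon {name: row.name})
SET p.number = row.number
WITH p, row
UNWIND row.types AS typeName
MERGE (t:Type {name: typeName})
MERGE (p)-[:HAS_TYPE]->(t)
"""


def batches(rows: list[dict], size: int = 50) -> Iterator[list[dict]]:
    """Yield the rows in chunks so each transaction stays small."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]
```

Each batch could then be sent with the official Neo4j Python driver, for example through a session's run(INGEST_QUERY, rows=batch). Using MERGE rather than CREATE keeps the load idempotent, so rerunning the script does not duplicate nodes.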

A few MCP tool calls later, it was querying the data with Cypher (the query language for graph databases), which it can do because it has access to the Neo4j Cypher MCP server. With that, Codex and the MCP servers can answer analytical questions about our data. For example:

- Greedy type-coverage search suggests trios such as (Rhydon, Parasect, Dragonite) or (Rhydon, Parasect, Jynx) hit 13 of the 15 defending types super-effectively; no trio can cover Normal/Rock simultaneously because Normal has no offensive 2× matchup.
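The "greedy type-coverage search" in that answer is a classic greedy set-cover heuristic: repeatedly pick the team member that adds the most uncovered defending types. Here is a minimal sketch of the idea. The coverage map is passed in by the caller, and in our case it would come from Cypher queries against the graph. The function name and the tie-breaking rule are our own choices, not what Codex wrote.

```python
def greedy_cover(coverage: dict[str, set[str]], team_size: int = 3) -> tuple[list[str], set[str]]:
    """Pick up to team_size members, each time maximizing newly covered types."""
    team: list[str] = []
    covered: set[str] = set()
    for _ in range(team_size):
        best = max(
            (name for name in coverage if name not in team),
            # Rank by number of new types; break ties by name for determinism.
            key=lambda name: (len(coverage[name] - covered), name),
            default=None,
        )
        if best is None or not coverage[best] - covered:
            break  # nobody left, or nobody adds anything new
        team.append(best)
        covered |= coverage[best]
    return team, covered
```

Greedy selection is fast but not guaranteed to find the best possible trio, which is why the answer above says the search "suggests" trios rather than proving them optimal.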

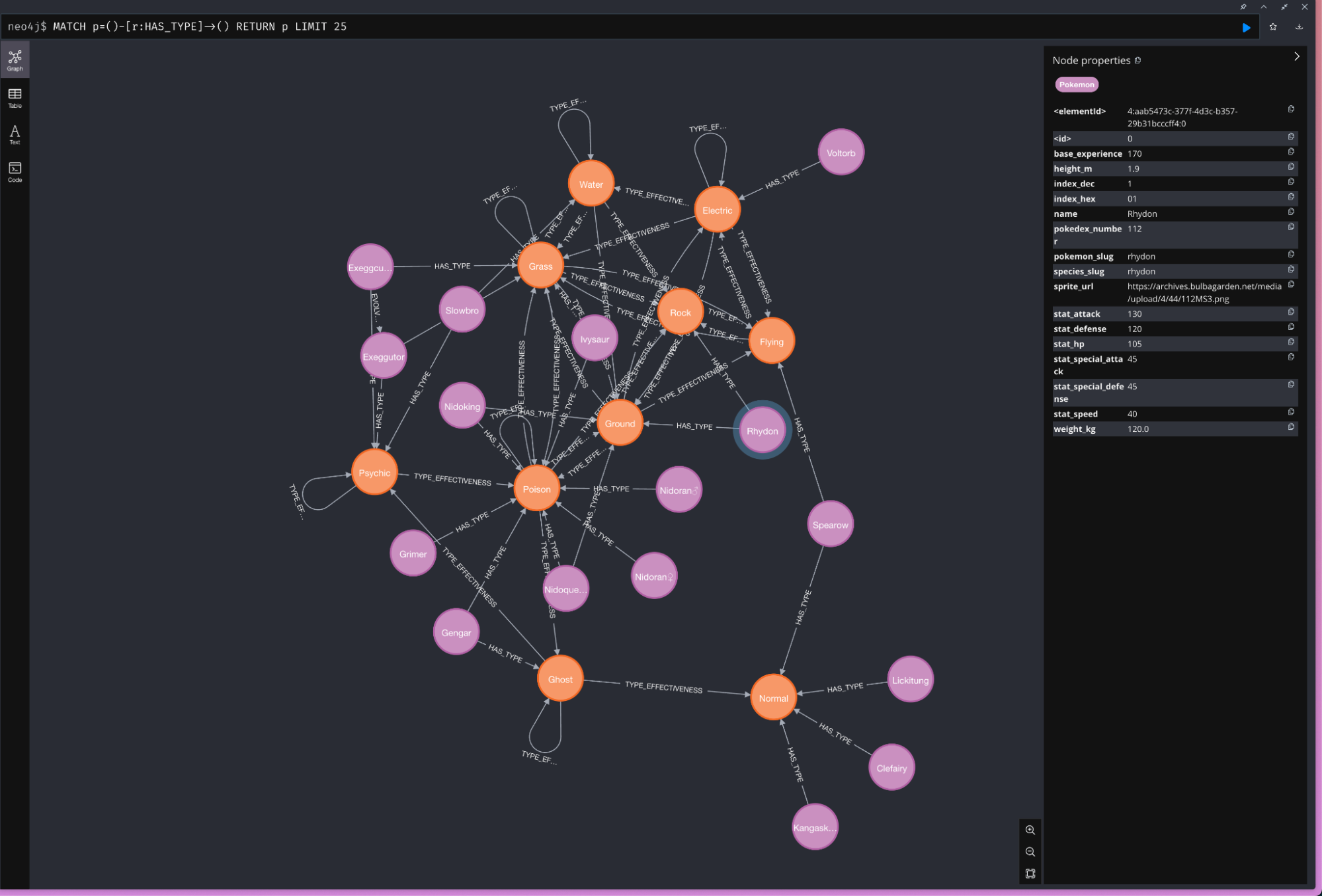

Now what’s really fun about Neo4j is that it comes with a terrific console where you can explore the data.

While our Neo4j container with the Pokémon data is still running we can go to http://localhost:7474, enter neo4j/password credentials and get to explore the data in a visual way.

Here for example is a subset of the Pokémon and their type relationships.

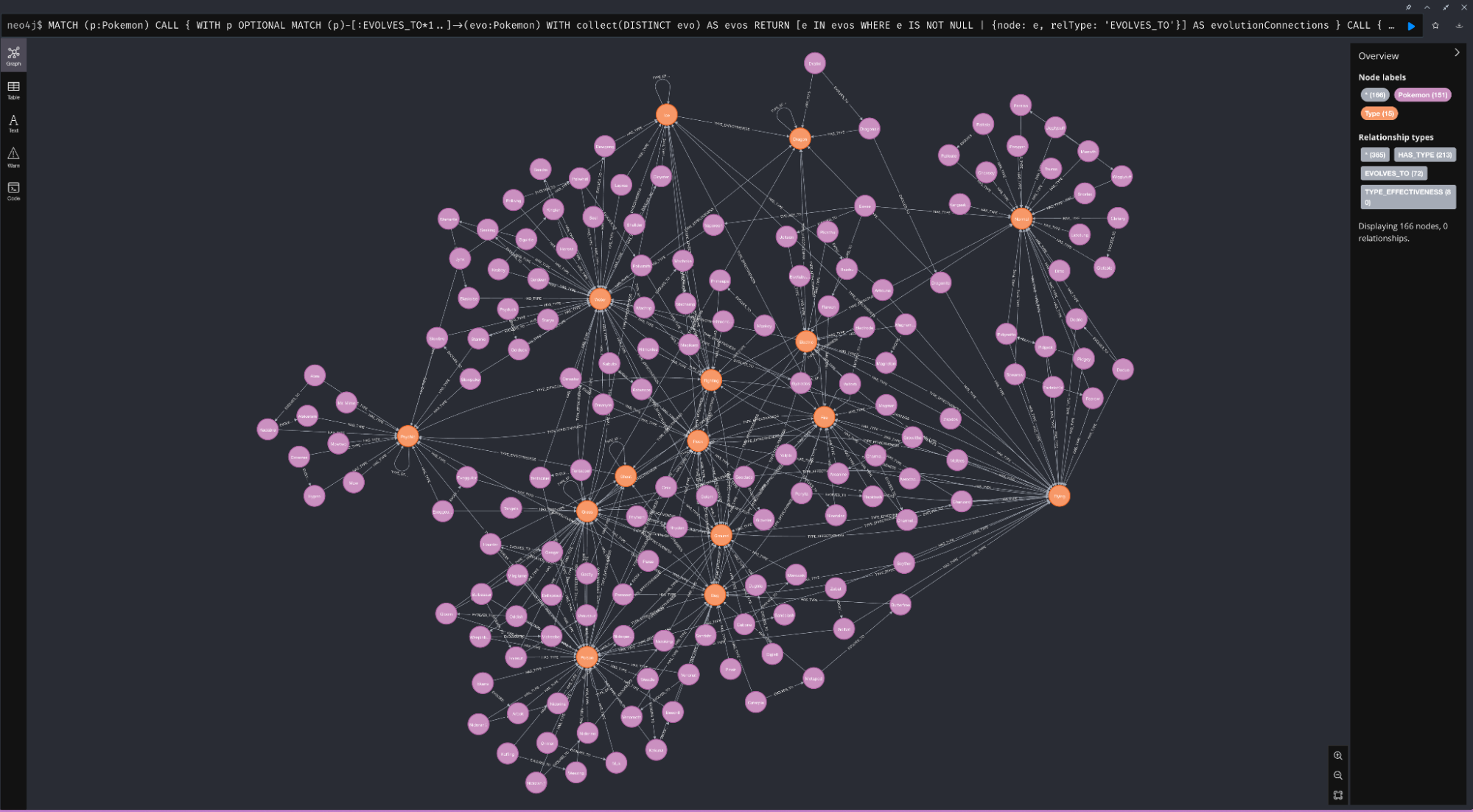

And if you know Cypher or have an AI assistant that can generate Cypher queries (and verify they work with an MCP tool call), you can generate more complex projections of your data, for example this (generated by Codex) shows all Pokémon, their evolution relationships and primary/secondary types.

    MATCH (p:Pokemon)
    CALL {
      WITH p
      OPTIONAL MATCH (p)-[:EVOLVES_TO*1..]->(evo:Pokemon)
      WITH collect(DISTINCT evo) AS evos
      RETURN [e IN evos WHERE e IS NOT NULL | {node: e, relType: 'EVOLVES_TO'}] AS evolutionConnections
    }
    CALL {
      WITH p
      OPTIONAL MATCH (p)-[:HAS_TYPE]->(type:Type)
      WITH type
      ORDER BY type.name // ensures a stable primary/secondary ordering
      RETURN collect(type) AS orderedTypes
    }
    WITH p, evolutionConnections, orderedTypes,
         CASE WHEN size(orderedTypes) >= 1 THEN orderedTypes[0] END AS primaryType,
         CASE WHEN size(orderedTypes) >= 2 THEN orderedTypes[1] END AS secondaryType
    WITH p,
         evolutionConnections +
         CASE WHEN primaryType IS NULL THEN [] ELSE [{node: primaryType, relType: 'HAS_PRIMARY_TYPE'}] END +
         CASE WHEN secondaryType IS NULL THEN [] ELSE [{node: secondaryType, relType: 'HAS_SECONDARY_TYPE'}] END AS connections
    UNWIND connections AS connection
    RETURN p AS pokemon,
           connection.node AS related,
           connection.relType AS relationship
    ORDER BY pokemon.name, relationship, related.name;

Turn Your AI Coding Assistant into a Data Engineer, Architect, Analyst and More

While this Pokémon demo is a fun example, it’s also a practical blueprint for working with real-world, semi-structured data. Graph databases like Neo4j are especially well-suited for this kind of work. Their relationship-first model makes it easier to represent the complexity of real-world systems.

In this walkthrough, we showed how to connect OpenAI’s Codex to the Neo4j MCP Servers via Docker MCP Toolkit, enabling it to take on multiple specialized roles:

- Data Engineer: Writing Python to scrape and transform web data

- Data Architect: Designing and validating graph models using domain-specific tools

- DevOps Engineer: Starting services and configuring tools based on its environment

- Data Analyst: Running complex Cypher and Python queries to extract insights

In your own projects, you might ask your AI assistant to “Analyze production logs and identify the cause of performance spikes,” “Migrate the user database schema to a new microservice,” or “Model our product catalog from a set of messy CSVs.”

Summary

The Docker MCP Toolkit bridges the gap between powerful AI coding agents and the specialized tools they need to be truly useful. By providing secure, one-click access to a curated catalog of over 200 MCP servers, it enables AI agents to interact with real infrastructure, including databases, APIs, command-line tools, and more. Whether you’re automating data workflows, querying complex systems, or orchestrating services, the MCP Toolkit equips your assistant to work like a real developer. If you’re building with AI coding assistants and want them to go beyond code generation, it’s time to start integrating with the tools your stack already relies on!

Learn more

- Explore the MCP Catalog: Discover containerized, security-hardened MCP servers

- Open Docker Desktop and get started with the MCP Toolkit (Requires version 4.48 or newer to launch the MCP Toolkit automatically)

- Read our tutorial on How to Add MCP Servers to Claude Code with Docker MCP Toolkit

- Read our tutorial on How to Add MCP Servers to Gemini CLI with Docker MCP Toolkit

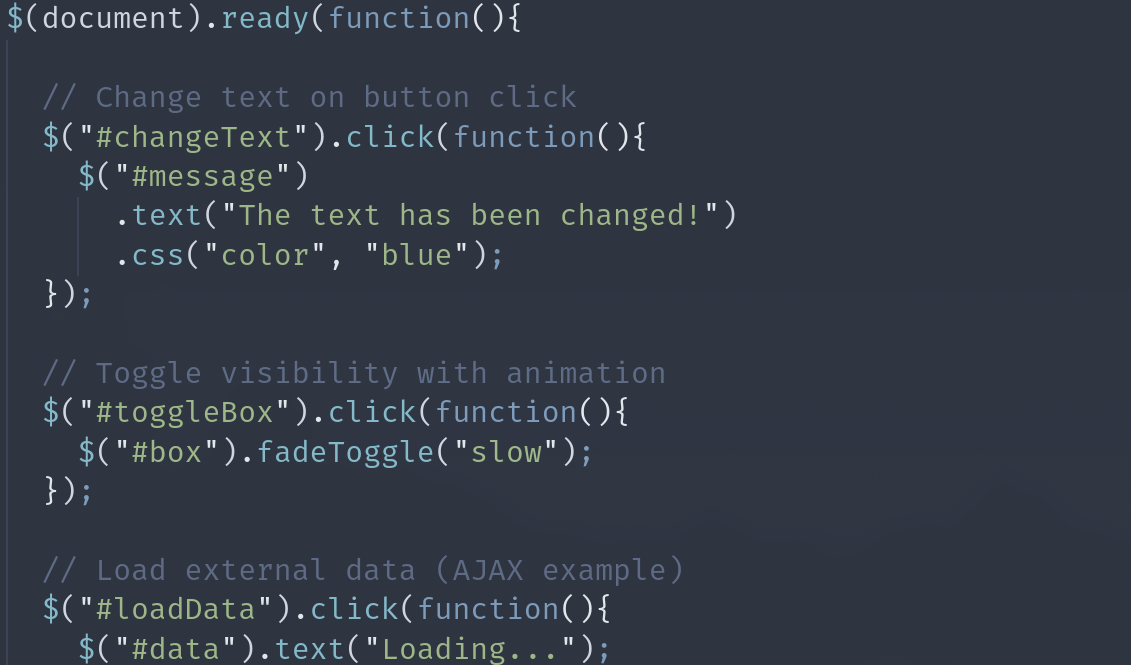

jQuery is a household name among web developers who have been around the block. Initially released in 2006, it took the web development world by storm with its easy and intuitive syntax for navigating a document, selecting DOM elements, handling events, and making AJAX requests. At its peak in 2015, jQuery featured on 62.7 percent of the top one million websites and 17 percent of all Internet websites.

A decade later, jQuery is not the shiny new kid on the block anymore. Most of the original pain points jQuery solved, such as DOM manipulation and inconsistent browser behavior, are gone thanks to modern browser APIs.

But jQuery is still widely used. According to SimilarWeb, as of August 11, 2025, nearly 195 million websites use it. That means many developers, like me, still use it every day. And like me, you might prefer it in certain cases.

So, in this article, I’ll share when it still makes sense to use jQuery and when not. Don’t worry: I’m not arguing we should replace React with jQuery. And I’m not here to romanticize 2008. In 2025, I simply still find myself reaching for jQuery because it’s the right tool for the job.

A Brief History of jQuery

To determine when it makes sense to use jQuery and when not, it helps to know why it was created in the first place and what problems it aimed to solve.

When John Resig launched jQuery at BarCamp NYC in January 2006, the web was a different place. Features we take for granted today were absent from most browsers:

- No querySelectorAll: Selecting DOM elements across browsers was messy. In the mid-2000s, none of the available element selectors, like getElementById or getElementsByClassName, could select elements using complex CSS queries.

- Inconsistent event handling: addEventListener wasn’t universal. While browsers like Firefox, Safari, and Chrome supported the W3C event model with addEventListener, Internet Explorer (before IE9) used Microsoft’s proprietary model with attachEvent. These two models differed from each other in almost all functional aspects.

- Different browsers had different APIs for XMLHttpRequest. While browsers like Firefox and Safari offered the familiar XMLHttpRequest, Internet Explorer (before IE7) used ActiveX objects to give JavaScript network capabilities. This meant you had to use a bunch of if-else blocks to make an AJAX request.

- CSS manipulation quirks: In the 2000s and early 2010s, many CSS features were implemented inconsistently across browsers, which made it difficult to manipulate CSS with JS.

jQuery solved all of this with a simple, chainable API for DOM traversal, event handling, and AJAX that behaved consistently across browsers and was far simpler than cross-browser native JavaScript at the time. These features made jQuery the go-to JavaScript library in the 2010s, powering everything from personal blogs to Fortune 500 sites. In 2012, a W3Techs survey found that jQuery was running on 50 percent of all websites, and by 2015, 62.7 percent of the top 1M websites used it.

Where jQuery Still Makes Sense

Although jQuery’s glory days are clearly behind us, it still works well in some situations. Here are the scenarios where I still choose jQuery:

Legacy Projects

Even now, a W3Techs survey shows that jQuery is used in 77.8 percent of the top 10M websites in 2025. This is mostly legacy usage: old apps that still use jQuery because switching to a more modern framework is a costly endeavour. The version statistics make this clear. In a 2023 survey across 500 organizations, only 44 percent used maintained versions (3.6.0 or newer), while 59 percent ran older versions (1.x to 3.5.1).

I maintain a few legacy projects like these that were written with jQuery, and I can tell you why they’re still around: they just work. So as the adage goes, “If it ain’t broke, don’t fix it.”

Many large enterprises, government sites, corporate intranets, and many WordPress plugins and themes still rely on jQuery. Rewriting these sites to pure JavaScript or a modern framework is a time-consuming, expensive endeavour that can also introduce new challenges and bugs. Most of the time, all that effort and risk aren’t worth the relatively small benefits in the short term.

The truth is this: the codebase I inherited, built in the jQuery era, works. The business logic is robust, the profit margins are healthy, and—most surprisingly—shipping new features feels like slipping into a worn leather jacket: unfashionable, but comfortable. - Marc Boisvert-Duprs

Yes, most jQuery plugins are no longer actively maintained or have been deprecated, so depending on them is a security risk. Abandoned plugins may become incompatible or insecure as browsers continue to evolve. So, legacy projects that use jQuery and jQuery plugins should eventually migrate away from jQuery.

Quick Prototyping without Build Tools

Developers often need to prototype very simple frontend apps, be it for throwaway demos, internal tools, or proof-of-concept pages. Sometimes the spec may even require a very basic frontend with minimal interactivity (for example, a static page with a simple form and a button).

jQuery is a perfect choice for these situations. Simply drop in a <script> tag from a CDN and get animations, DOM manipulation, and AJAX in minutes—no need for npm, bundlers, transpilers, or complicated frameworks with hundreds of dependencies. It’s also great for running quick commands from the DevTools console, especially if you want to experiment with an app.

But why not use a more modern but lightweight framework like Alpine.js? Personally, I’m intimately familiar with jQuery: I’ve used it since the beginning of my web development journey. I love its simplicity and ease of use. The minor improvements a new framework can make in this scenario don’t offset the time spent learning a new tool.

Complex DOM Manipulation in Different Browser Contexts

Hopefully, you don’t have to support older browsers that lack the standard querySelector, or browsers like Internet Explorer, notorious for their non-standard behavior. Unfortunately, some of us still need to maintain apps that run on these browsers.

While native JS is perfectly fine for modern browsers, if you’re building something that has to run on older embedded browsers (think: kiosk software, older enterprise or university intranets, or web apps inside legacy desktop apps), jQuery’s normalization saves you from manual polyfilling, and its CSS selector engine lets you perform complex DOM manipulations easily.

Simple Animations without CSS Keyframes

As someone who primarily works with backend apps, I don’t often need to code animations for the frontend. But when I do need to create basic chained animations (fading, sliding, sequencing multiple elements, etc.), jQuery’s .animate() is simpler (and more lightweight) to write than juggling CSS animations and JS event callbacks.

Simple AJAX with HTML Server Responses

I was recently tasked with making some upgrades to an ancient app with a PHP backend. Imagine my surprise when I discovered that the server returns HTML fragments rather than JSON. In this case, jQuery’s .load() and .html() methods can be simpler and more efficient than writing fetch() boilerplate with DOM parsing.

For example, I can extract a DOM element from the results of an AJAX request, and load it into an element like so:

    // Replace #comments with just the #comments-list from the server response
    $('#comments').load('/article/1 #comments-list');

Whereas the same thing in native JS would be:

    fetch('/article/1')
      .then(res => res.text())
      .then(html => {
        const doc = new DOMParser().parseFromString(html, 'text/html');
        const comments = doc.querySelector('#comments-list');
        document.querySelector('#comments').innerHTML = comments.outerHTML;
      });

Yes, while the jQuery syntax is more straightforward, both approaches are doing the same thing under the hood, so there’s not a huge performance gain. In the jQuery version, you also have the added overhead of bundling the jQuery library. So, it’s a tradeoff between simplicity and bundle size.

When You Should Not Use jQuery

While jQuery still makes sense in some situations, there are some cases where I would never use jQuery.

Building a Modern, Component-Driven Frontend

If I’m building a modern frontend app with lots of reactivity and reusable components, I’d use a modern framework like React or Vue with native features for DOM manipulation.

Frameworks like React, Vue, Svelte, or Angular take ownership of DOM rendering themselves (React and Vue, for example, render through a virtual DOM). Direct DOM manipulation with jQuery conflicts with their data-binding approach, causing state mismatches and bugs.

For example, in React, calling $('#el').html('...') bypasses React’s virtual DOM and React won’t know about the change. This will inevitably lead to bugs that are difficult to diagnose.

When Simple Vanilla JS Is Enough

Most of jQuery’s once-killer features, such as selectors, AJAX, events, and animations, are now native in JavaScript:

- document.querySelectorAll() replaces $().

- fetch() replaces $.ajax().

- element.classList replaces .addClass()/.removeClass().

- element.animate() handles animations.

If I’m just toggling classes or making a fetch call, adding jQuery is wasteful.

Targeting Modern Browsers Only

jQuery’s major draw between 2008 and 2015 was its cross-browser compatibility, which was necessary due to quirks in IE6–IE9. It simply wasn’t practical to write browser-specific JS for all the different versions of IE. With jQuery, the quirks were abstracted away.

Now that IE has been discontinued, this benefit is no longer relevant.

So if the app I’m working on needs to support only modern browsers, I don’t need most of jQuery’s compatibility layer.

Projects Already Using Modern Tooling

Mixing jQuery and framework code leads to a “hybrid monster” that’s difficult to maintain.

jQuery can conflict with existing frameworks, which can cause hard-to-fix bugs. If my project is already written in another framework, I avoid including jQuery.

Alternatives to jQuery

Sometimes, I need to use some features of jQuery, but I can’t justify including it in its entirety. Here are some libraries I use in cases like these.

DOM Selection and Traversal

- Native DOM API (most common replacement) using document.querySelector() and document.querySelectorAll()

- Cash: jQuery-like API, tiny (~10KB), works with modern browsers

- Zepto.js: lightweight jQuery-compatible library for mobile-first projects

AJAX/HTTP Requests

- Native fetch() API

- Axios: promise-based HTTP client with interceptors and JSON handling.

Event Handling

- Native events using element.addEventListener()

- delegate-it: small utility for jQuery-style event delegation

Animations

- CSS transitions and animations (native, GPU-accelerated)

- Web Animations API

- GSAP: Powerful animation library, much more capable than .animate() in jQuery.

Utilities

- Lodash: collection iteration, object/array utilities, throttling, debouncing

- Day.js: date manipulation in a tiny package (instead of jQuery’s date plugins)

All-in-One Mini jQuery Replacements

If you still like a single API but want it lighter than jQuery:

- Umbrella JS: ~3KB, jQuery-like API

- Bliss: focuses on modern features, syntactic sugar, and chaining

- Cash: as mentioned above, the closest modern equivalent

jQuery Still Has a Job

In 2025, jQuery isn’t the cutting-edge choice for building complex, highly interactive single-page applications that it was in the 2010s, and that’s perfectly fine. While modern frameworks dominate the headlines, jQuery remains a reliable, well-understood tool that solves the problems it was designed for, simply and effectively.

In the end, the “right” tool is the one that meets your project’s needs, and for countless developers and businesses, jQuery continues to be that.

ARG? Which files were copied into the image?

Recently, we have been making updates to the Docker Build clients (Buildx) and our VS Code extension (Docker DX) to improve the developer experience when using Docker. Today, we are sharing the next stage of that process with the introduction of Build Debugging in VS Code and Docker Build.

With the new debugging feature in Buildx from Docker, you will be able to reduce the time you spend fixing your Docker builds. In this post, you’ll learn how to configure the Buildx debugger in Visual Studio Code, step through a build and inspect variables and the image’s file system, and open a shell inside the image being built. Finally, you will learn a little about the debugger’s implementation and how it can be integrated into other editors.

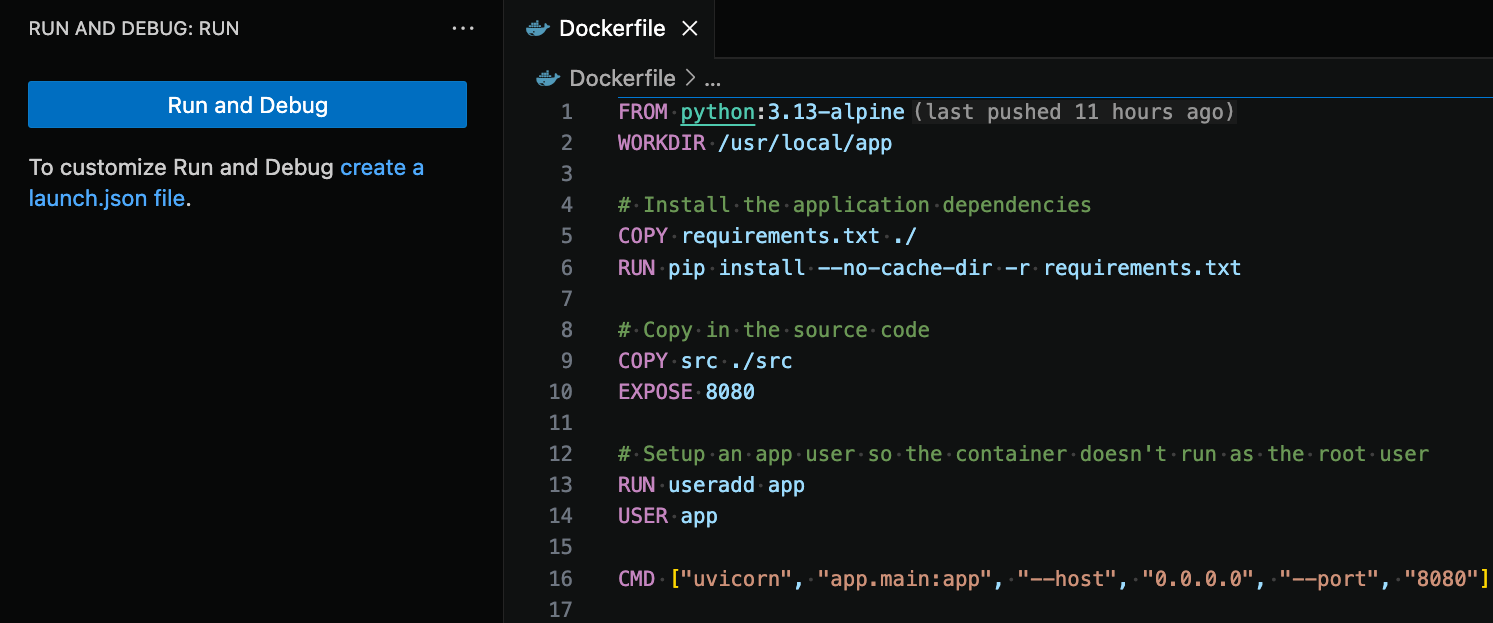

Configuring Visual Studio Code

To start debugging Dockerfiles in Visual Studio Code:

- Install the latest version of the Docker DX extension.

- Update to the latest version of Docker Desktop to ensure you have the latest Docker build tooling.

- Run docker buildx version and verify that your Buildx is at least version 0.29.x.
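The version requirement in the last step can also be checked from a script. The following is a minimal sketch, assuming that docker buildx version prints a line shaped like "github.com/docker/buildx v0.29.1 <commit>". The helper names extract_buildx_version and version_ge are hypothetical and are not part of Docker's tooling.

```shell
# Hypothetical helper: pull the "X.Y.Z" part out of a line such as
# "github.com/docker/buildx v0.29.1 <commit>" (assumed output format).
extract_buildx_version() {
  printf '%s\n' "$1" |
    sed -n 's/^[^ ]* v\([0-9][0-9]*\.[0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Hypothetical helper: succeed (exit 0) when version $1 >= version $2.
# Each of the three dotted fields is compared numerically, left to right.
version_ge() {
  awk -v have="$1" -v want="$2" 'BEGIN {
    split(have, h, "."); split(want, w, ".")
    for (i = 1; i <= 3; i++) {
      if (h[i] + 0 > w[i] + 0) exit 0
      if (h[i] + 0 < w[i] + 0) exit 1
    }
    exit 0
  }'
}

# Usage (requires Docker to be installed):
#   current=$(extract_buildx_version "$(docker buildx version)")
#   version_ge "$current" 0.29.0 || echo "Please update Buildx (found $current)"
```

The comparison is numeric per field, so 0.9.0 is correctly treated as older than 0.29.0, which a plain string comparison would get wrong.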

Creating a Launch Configuration

Open up your Dockerfile and open the Run and Debug view in Visual Studio Code. If you do not have any launch configurations, you will see something like the following.

Figure 1: Run and Debug view opened in Visual Studio Code with no launch configurations defined.

Click on the “create a launch.json file” hyperlink. If you already have launch configurations, open your launch.json file by clicking on the cog icon in the top right-hand corner of the Run and Debug view.

In your launch.json file, create a new launch configuration for debugging your Docker build. You can use the sample below to get started. For a full description of the various attributes in a launch configuration, see here.

{
  "name": "Docker: Build",
  "type": "dockerfile",
  "request": "launch",
  "dockerfile": "Dockerfile",
  "contextPath": "${workspaceFolder}"
}

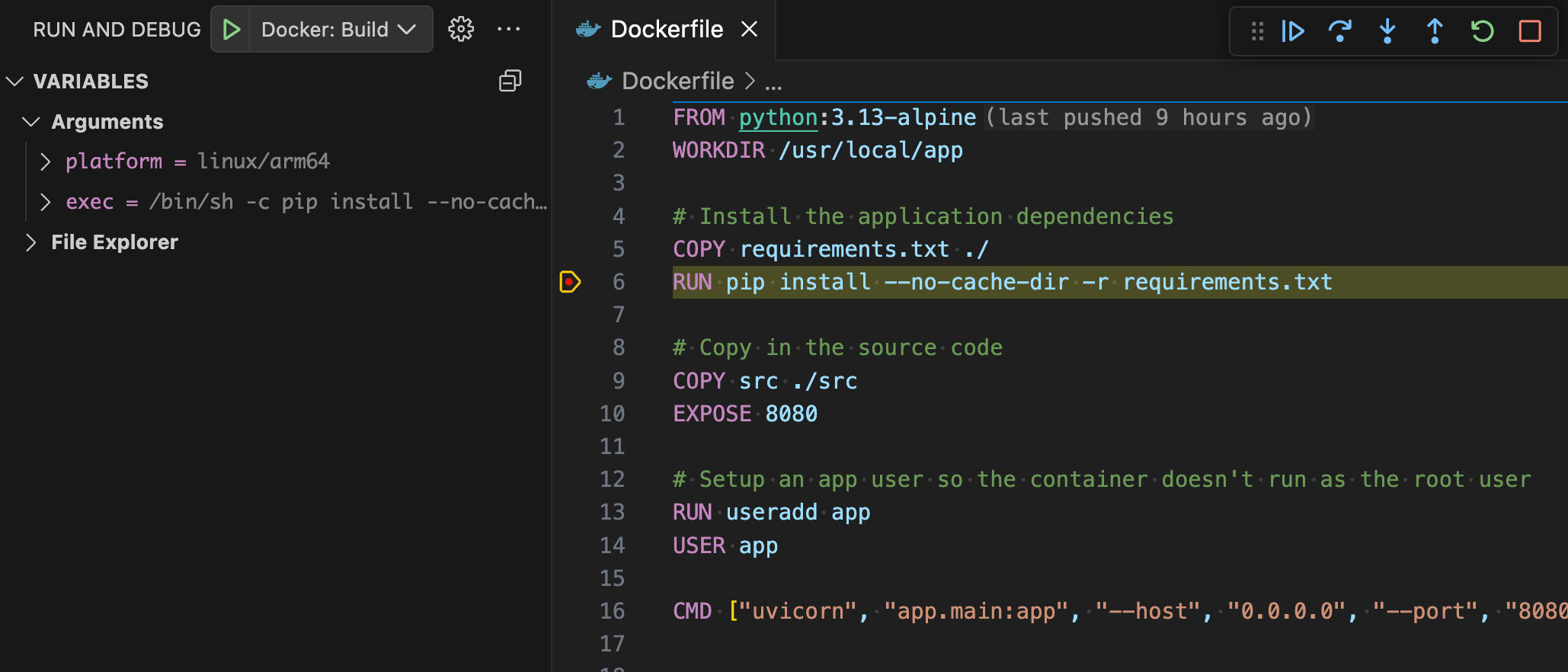

Adding a Breakpoint

Now that you have completed setting up your launch configuration, let’s add a breakpoint to our Dockerfile. Place a breakpoint next to one of your RUN instructions by clicking in the editor’s left margin or by pressing F9. A circle should appear to indicate that a breakpoint has been added.

Launching the Debugger

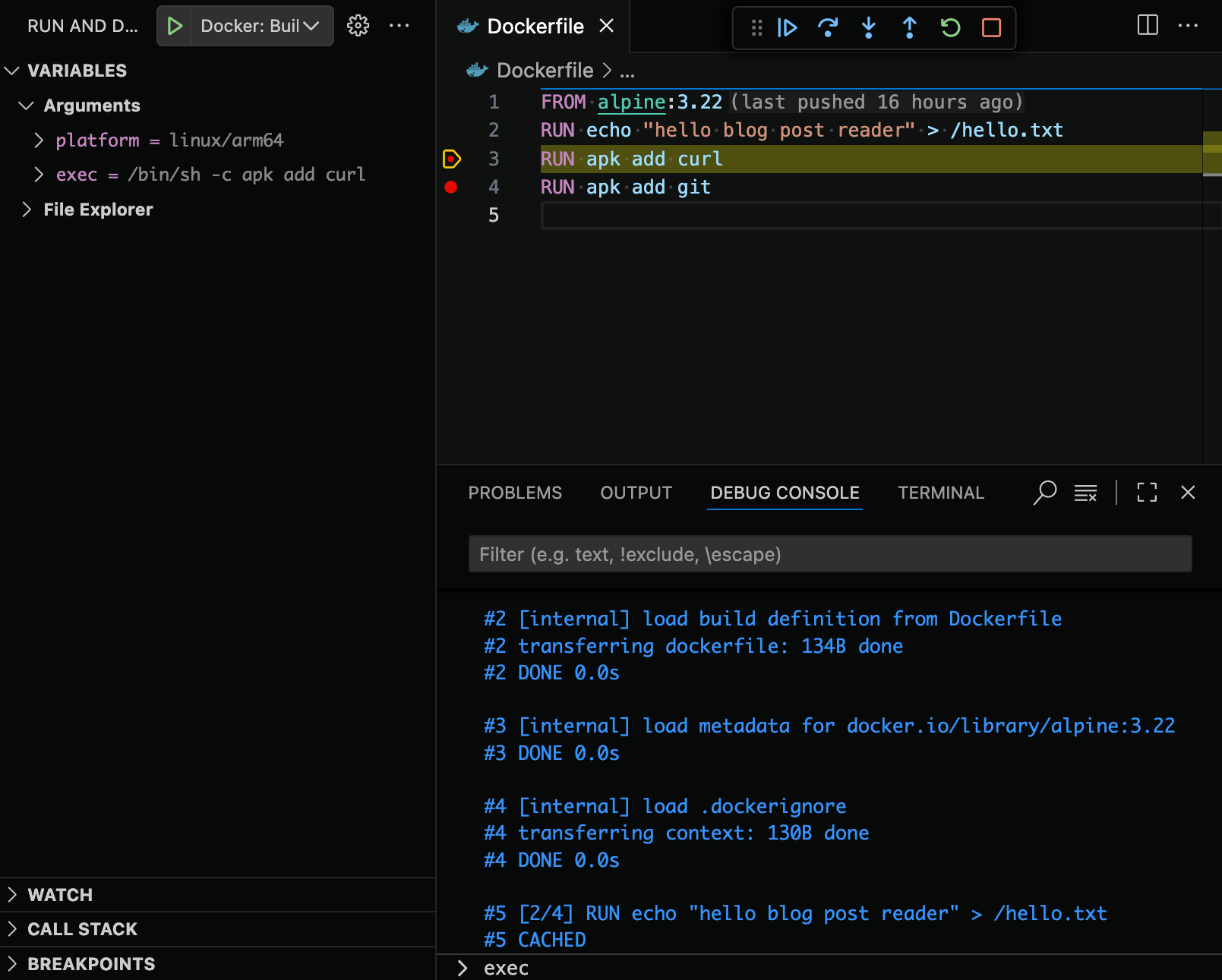

We are now ready to start the debugger. Select the launch configuration you created and then hit F5. The build should pause at the RUN line where you placed the breakpoint.

Figure 2: Docker build suspended by a breakpoint in Visual Studio Code.

Debugging Features

We will now walk you through the three different features that the Buildx Debugger provides.

Inspecting Variables

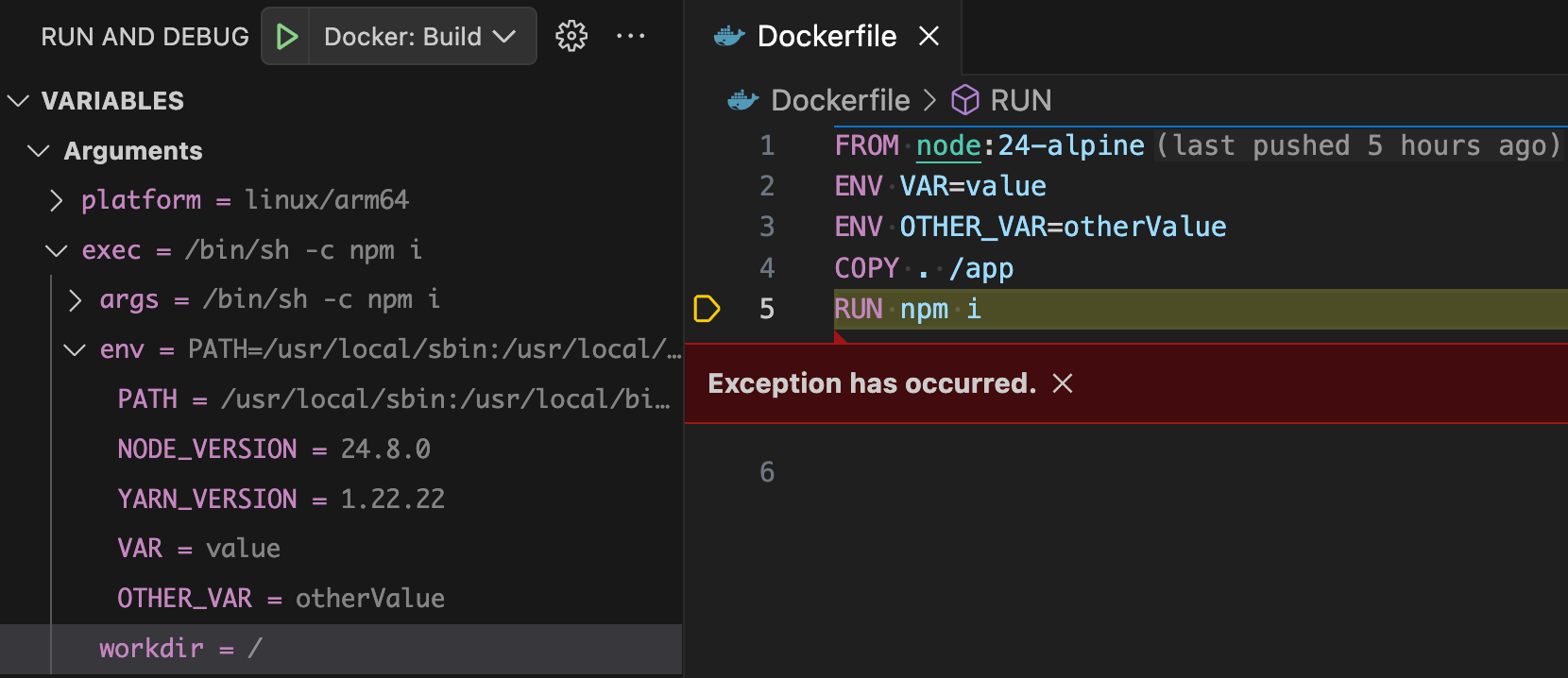

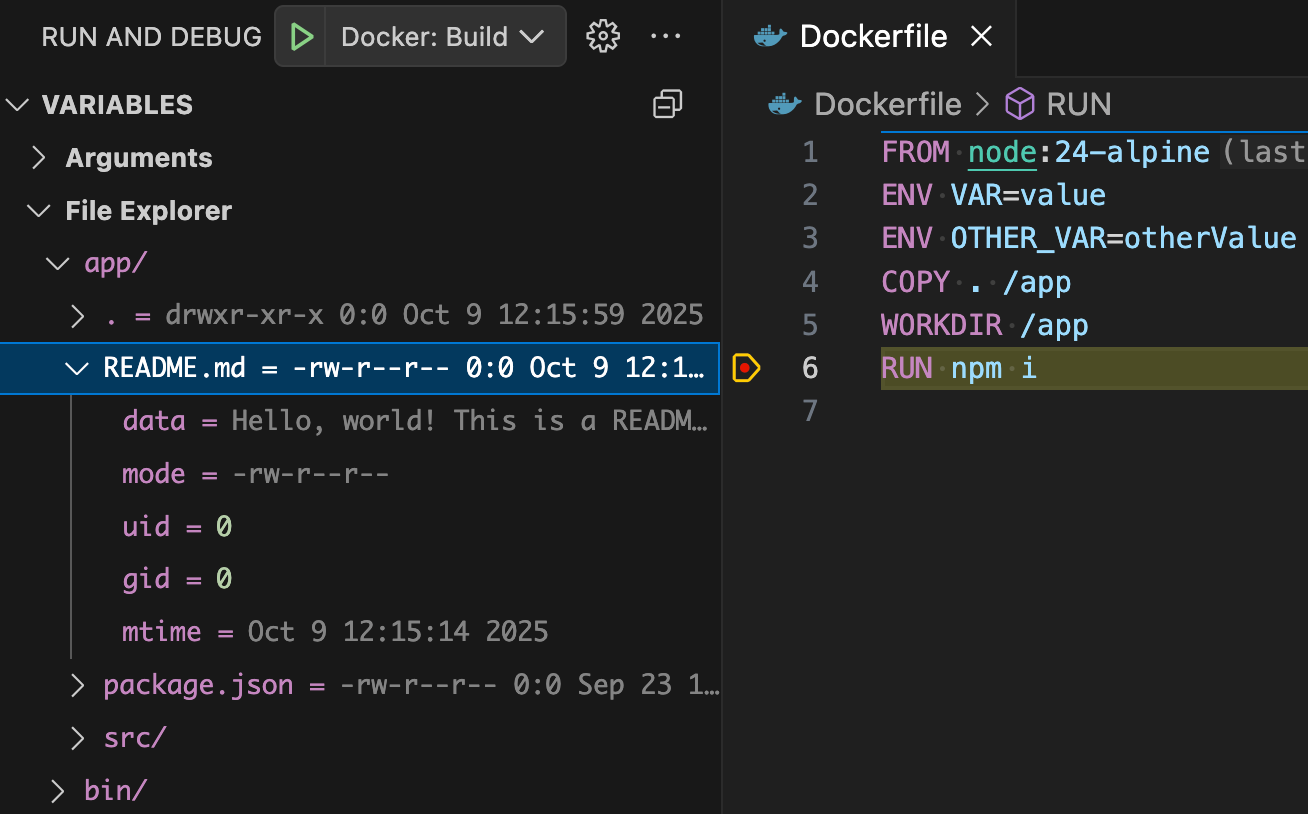

When a build is in a suspended state, you can look at any variables that may have been defined. In this example, by looking at the executed command’s workdir value on the left-hand side, we can now see that the command is not being run in the right folder as we had copied the contents into /app. We can fix this by adding WORKDIR /app before the RUN line. Also note that we can view variables that have been defined by our image and the base image as seen by VAR and NODE_VERSION.
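The WORKDIR fix described above might look like the following. This is only a sketch: the node:22-alpine base image, the npm install command, and the Dockerfile.example file name are assumptions for illustration, not the Dockerfile shown in the screenshots.

```shell
# Write a hypothetical Dockerfile that sets the working directory
# before the RUN instruction, so the command executes inside /app.
cat > Dockerfile.example << 'EOF'
FROM node:22-alpine
COPY . /app
WORKDIR /app
RUN npm install
EOF

# Print the line numbers of WORKDIR and RUN to confirm their order.
grep -n -E '^(WORKDIR|RUN)' Dockerfile.example
```

With WORKDIR placed ahead of RUN, the debugger's workdir value for the RUN step should read /app instead of the image's default directory.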

Figure 3: Docker build encounters an error and is suspended by the debugger instead of terminating.

File Explorer

In addition to inspecting variables, you can also look at the structure of the file system to see what is already there and what you have copied in. For text files, you can also see their content in the file’s data field.

Figure 4: View the file system of the Docker image being built.

Interactive Debugging

Creating the right Dockerfile is often an iterative process. Part of this is usually because the host system you are developing on shares few similarities with the image you are building. Consider the differences between running Ubuntu locally and trying to build an Alpine Linux image. The small differences in package names create a lot of back and forth between your editor and your browser as you search for the right name. You add a line here and then maybe comment out another line somewhere else before running docker build again and hoping for the best.

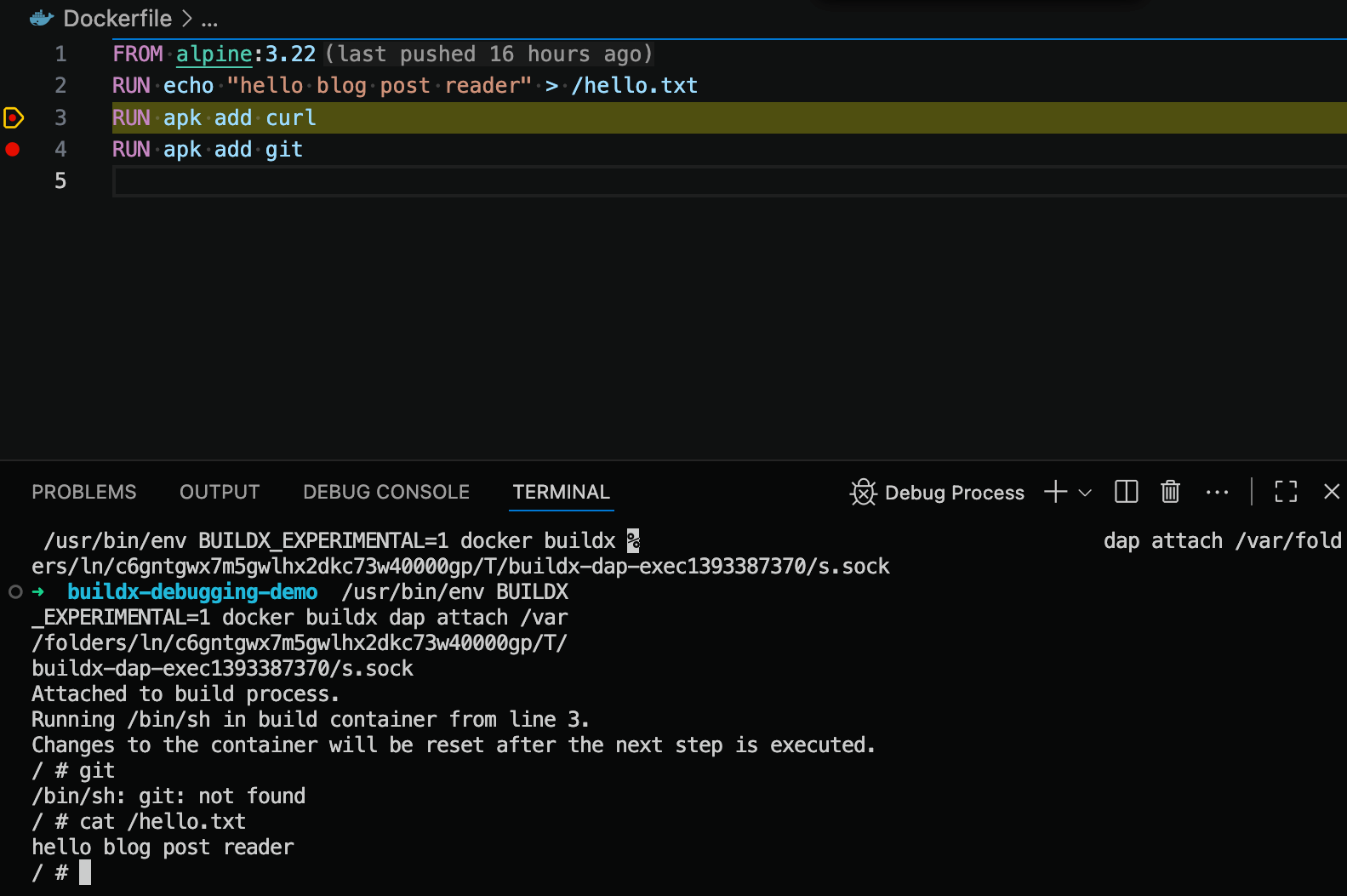

This iterative process can now be streamlined with the help of the debugger. When your build is in a suspended state, open the Debug Console view and then place your cursor in the input field at the bottom. Type in exec and then hit the enter key. The Terminal view should now open with a shell that is attached to the image that is being built.

Figure 5: Use the Debug Console to open a shell into the Docker image being built by running exec.

Figure 6: The Docker image that is being built can now be accessed and inspected with a terminal.

This feature is a game changer as you can now easily open the image of a Dockerfile at any given step and inspect its content and run commands for testing. Previously, we would have to comment everything after the buggy line, build the Docker image, and then manually run and open a shell into the image. All of that is now condensed into adding a breakpoint in your editor and starting a debug session!

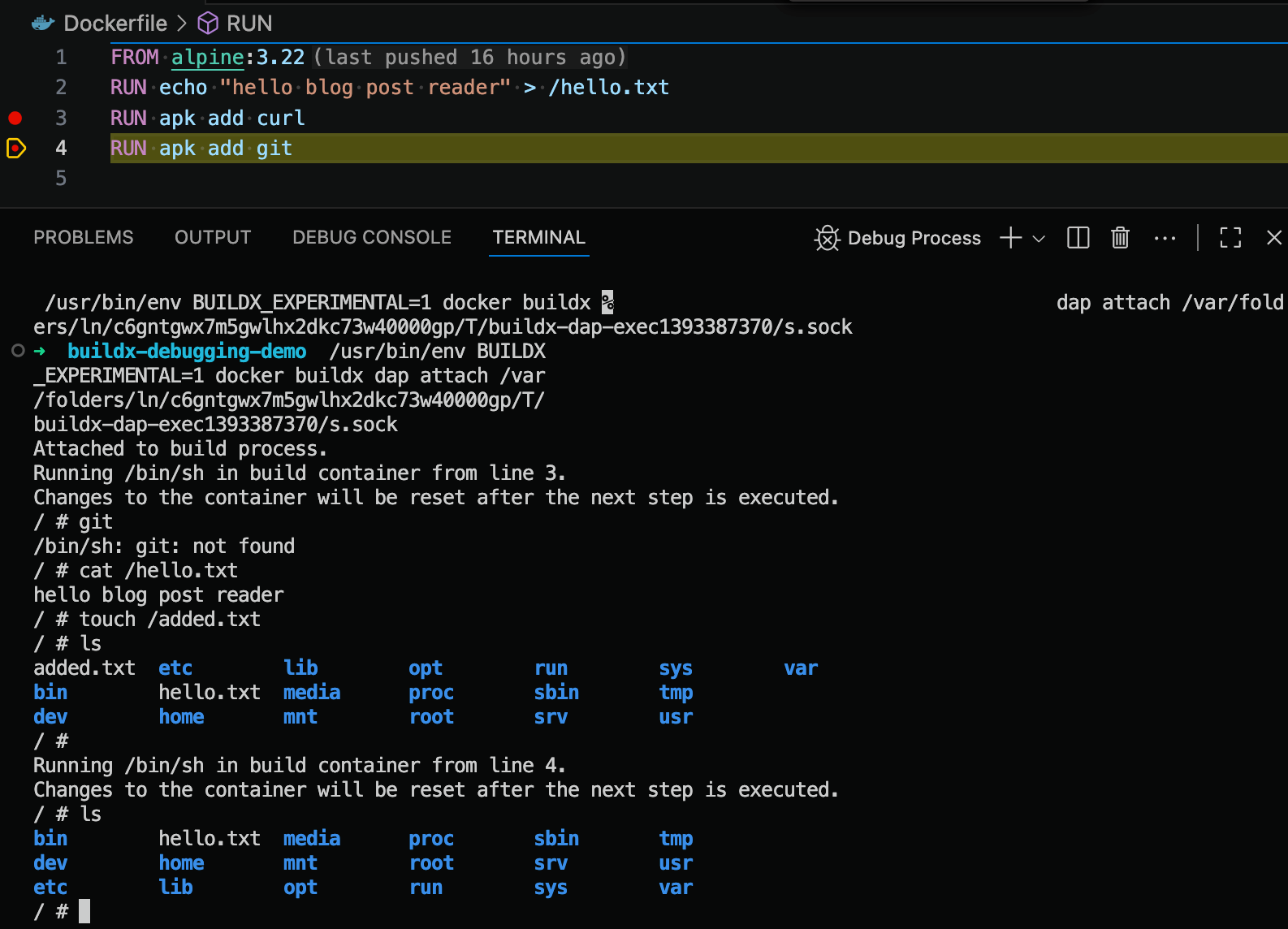

Keep in mind that none of the changes you make in the terminal are persisted so this is purely for experimentation. In the figure below, we can see that a file was created when the debugger was paused at line 3. When the debugger was advanced to line 4, the file disappeared.

Figure 7: Changes to the Docker image inside the exec terminal will be reset when the debugger steps to another line.

Integrations powered by an Open Specification

Like the Docker Language Server, which implements the Language Server Protocol, the Buildx debugger is built on open standards. It implements the Debug Adapter Protocol, which means that you can debug Dockerfile builds with any editor that supports the protocol. Besides Visual Studio Code, we also provide an official plugin for Neovim. For the JetBrains users out there, we have verified that it integrates well with the LSP4IJ plugin. If your favourite editor supports the Debug Adapter Protocol, there should be a way for the Buildx debugger to integrate with it.

Thank You

We want to take this opportunity to thank Kohei Tokunaga (ktock) for his ideas and initial work around this feature. The contributions he provided to Buildx gave us a great foundation to build out and complete this feature. This release would not have been possible without his help. Thank you, Kohei!

Next Steps

- Download the Docker DX extension and try out the new debugging feature.

- Share feedback and issues with us in our GitHub repositories for Docker DX and Buildx.

- You can also submit feedback through the Docker feedback page.

Learn More

- Set up the Buildx debugger in Neovim with nvim-dap-docker.

- Set up the Buildx debugger in a JetBrains editor with the LSP4IJ plugin.

- Read the Buildx documentation about our implementation of DAP and configuring launches.

At Docker, we are building our hardened images to exacting standards. That means carefully crafting by hand, because humans are still the best security architects. That said, we understand the value of AI and deploy it as an extra set of eyes at critical junctures in our Docker Hardened Image (DHI) build process. With this dual approach, our users get better coverage and more secure products. Humans aren’t perfect, but AI can help them become better. Here’s a recent example of how the AI tools we use for DHI caught a bug, made a product more secure, and contributed back to the community.

How the AI guardrail stepped in

Our upstream release automation opened a routine version bump for nginx-exporter. As part of our release process, the DHI AI guardrail fetched the upstream diffs that the bump would pull in and scanned them with language-aware checks. It spotted a classic logic inversion in the exporter’s new proxy-protocol path and immediately blocked our PR from auto-merging. I reproduced the issue, confirmed the diagnosis, and submitted a small fix upstream. Because the guardrail held the line, customers never saw the bug.

That sequence matters. A normal dependency update would have sailed through and shipped a regression. Instead, Docker’s AI guardrail acted like a release bouncer at the door of a bar. The AI guardrail looked at what was about to enter, recognized a risk pattern, and stopped it from coming in and causing trouble. A human Docker engineer then read the flagged issue, proved the failure and sent the fix. The fix was then accepted by the project, improving their code base. So, this fix not only improved DHI but also improved the project for everyone.

While the fix pull request has been reviewed and approved by the upstream maintainers, our DHI build pipeline applied the patch and shipped the new, patched version to our customers, making sure that they never saw the regression in their environments.

The AI-assisted DHI process

Ironically, standard AI coding assistants didn’t spot the flaw. This is why having our own internal AI guardrails is so critical. They provide the extra layer of support and the specialization that is hard to get from general-purpose coding assistants.

This is how we want AI to show up in our pipeline. It is not a replacement for engineering judgment, but a force multiplier for safety. The guardrail focuses on a narrow set of high-leverage issues that can cause big problems, such as inverted error checks, ignored failures, resource mishandling, and suspicious contributor activity.
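To make "inverted error check" concrete, here is a deliberately tiny illustration. It is not the upstream nginx-exporter code, and the function names are hypothetical. The only assumption borrowed from the real world is that a PROXY protocol v1 header starts with the word PROXY followed by a space.

```shell
# Hypothetical validator: succeeds (exit 0) only for input that
# starts with "PROXY ", the prefix of a PROXY protocol v1 header.
is_valid_header() {
  case $1 in
    "PROXY "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Buggy variant: the stray "!" inverts the check, so valid input is
# rejected and invalid input is accepted.
handle_inverted() {
  if ! is_valid_header "$1"; then echo "accepted"; else echo "rejected"; fi
}

# Fixed variant: accept only when validation succeeds.
handle_fixed() {
  if is_valid_header "$1"; then echo "accepted"; else echo "rejected"; fi
}

handle_inverted "PROXY TCP4 192.0.2.1 192.0.2.2 1234 80"   # prints "rejected"
handle_fixed "PROXY TCP4 192.0.2.1 192.0.2.2 1234 80"      # prints "accepted"
```

A one-character slip like this is easy to miss in review because the code still looks plausible, which is why it suits an automated, pattern-focused gate.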

The example also shows the value of layered safeguards. We don’t just accept an upstream version and hope for the best. The AI guardrail scrutinizes what changed. Our policy treats high-confidence findings as a hard stop. Humans then verify, reproduce the behavior, and apply the smallest correct patch. Only then does the release move forward. The best security is proactive, not reactive.

As I alluded to above, there’s an important broader open source benefit to our AI guardrails. DHI depends on hundreds of community projects, some of which are downloaded millions or even billions of times per month. When the DHI AI guardrail surfaces an issue, our default is to fix it upstream rather than carry a private patch. That keeps our images clean, reduces long-term maintenance, and gives every downstream user a better baseline. It also helps the upstream projects, which benefit from our AI anomaly detection, our human judgment, and our subsequent code fixes.

So back to the title. DHI is crafted by engineers, but it is protected by AI. Our guardrail is an active and continuously improving AI that reads upstream diffs with context, recognizes risky patterns, scores confidence, and blocks merges that don’t pass muster. Well-applied AI helps the human work better with faster reviews, tighter patches, and fewer regressions.

This is the partnership we’re optimizing for. Humans set intent, exercise design judgment, and ship features. AI enforces discipline at critical gates. Best of all, every catch feeds the model signals for future scans, so protections improve as our ecosystem evolves. Collectively, this adds to the security of the entire open source ecosystem.

It’s a win for Docker, for our customers, and for the community.

Enter the powerhouse duo: Gemini CLI and Docker MCP Toolkit. This isn’t just another tool combination; it’s a paradigm shift that delivers developer AI assistance with zero complexity overhead. A one-time setup of just 5 minutes can save you 20 minutes per test scenario, a 97% time reduction that compounds with every test you run.

Let’s look at a concrete example: the browser testing and performance analysis that developers do regularly can be simplified and completely automated. What used to require opening browsers, clicking through flows, analyzing DevTools, taking screenshots, and manually documenting bugs can now happen in a single 30-second conversation.

In this guide, you’ll learn how to:

- Set up Gemini CLI and connect it to Docker MCP Toolkit

- Configure the Playwright MCP server for browser automation

- Configure the GitHub MCP server for issue creation

- Configure the Filesystem MCP server for saving test artifacts

- Automate browser testing that discovers real bugs and creates documented GitHub issues

- See how Gemini CLI can analyze performance, capture screenshots, and report findings—all without leaving your terminal

With 220+ pre-built MCP servers, browser automation becomes as simple as having a conversation. No Selenium WebDriver configuration, no CI/CD pipeline complexity, no manual screenshot management — just natural language instructions that execute real browser tests.

Why Gemini CLI and Docker MCP Toolkit Work Better Together

Manual browser testing and performance analysis are broken. You open Chrome DevTools, click through pages, check network requests, analyze performance metrics, take screenshots, write bug reports, and then manually create GitHub issues. This context-switching nightmare wastes hours every sprint.

Traditional automation tools don’t solve the real problem. Selenium requires brittle selectors and a complex setup. Playwright needs JavaScript knowledge and test frameworks. Both require maintaining test scripts that break with every UI change. The “solution” often takes more time than manual testing.

While Gemini provides powerful AI capabilities and MCP provides the protocol, Docker MCP Toolkit makes browser automation practical. Without containerization, setting up browser testing means managing Chrome/Firefox installations, dealing with WebDriver versions, configuring Node.js dependencies, handling screenshot directories manually, and reconciling different configurations on every developer’s machine. The setup that should take 2 minutes takes 2-6 hours per developer.

Docker MCP Toolkit eliminates this friction:

- 220+ pre-built MCP servers in the catalog

- One-click deployment through Docker Desktop

- Playwright MCP with browsers pre-installed (Chrome, Firefox, WebKit)

- GitHub MCP for automated issue creation

- Filesystem MCP for artifact storage

- Secure credential management via OAuth or encrypted storage

- Consistent configuration across Mac, Windows, and Linux

- Automatic updates when new server versions are released

We built Docker MCP Toolkit to meet developers where they are. If you’re using Gemini CLI, you should be able to automate browser testing without wrestling with infrastructure.

Your tests run securely on your machine. Everything executes in isolated Docker containers on your local system. Test data, screenshots, and application access never leave your computer. No cloud uploads, no third-party services, no compliance concerns. You get enterprise-grade browser automation with complete privacy.

Setting up Gemini CLI in Docker MCP Toolkit

Prerequisites

- Install Docker Desktop 4.40 or later

- Enable MCP Toolkit

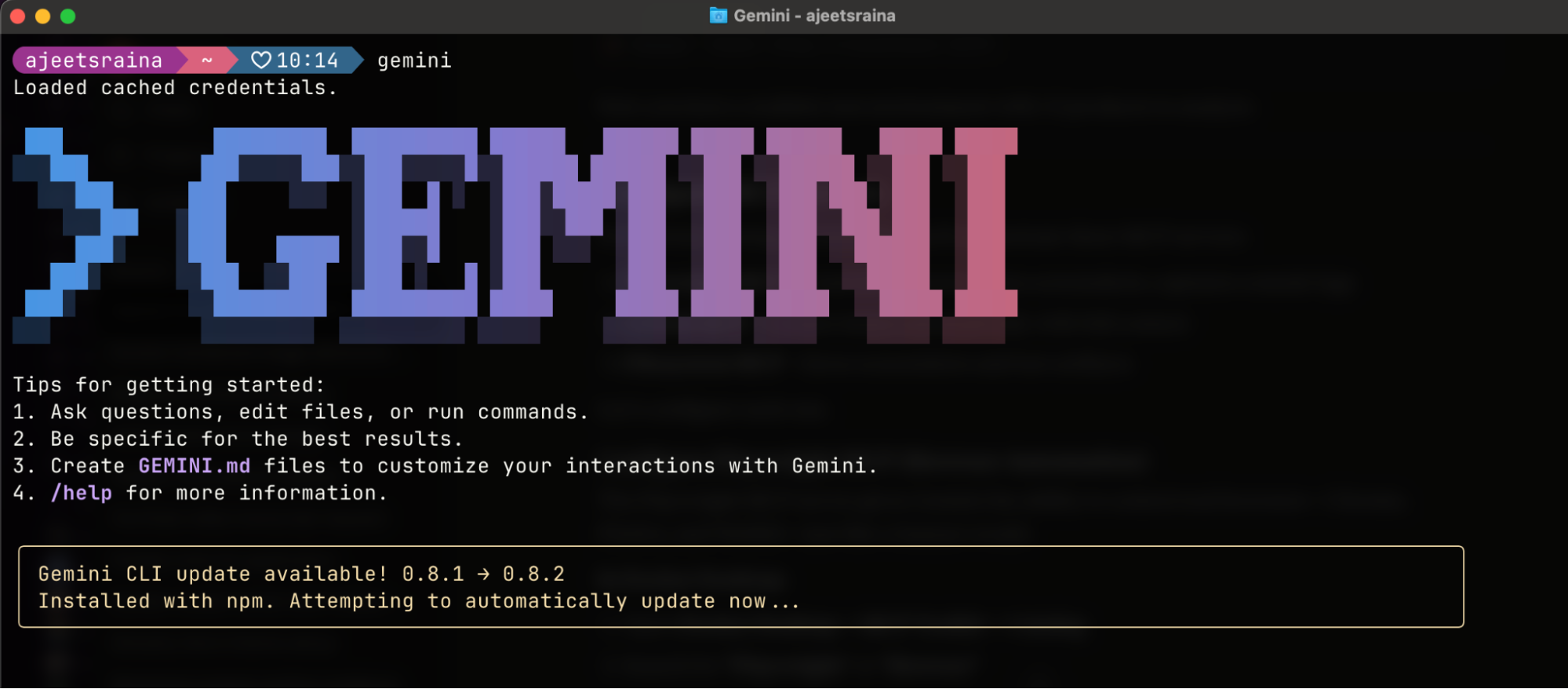

Step 1. Install Gemini CLI

Install via npm:

npm install -g @google/gemini-cli

Step 2. Launch and authenticate

Once installed, just type gemini in your terminal window.

gemini

Step 3. Log in via Google

Follow the setup wizard:

- Select a preferred theme style from the options.

- Choose a login method. I recommend “Login with Google”, which allows up to 60 requests/minute and 1,000 requests/day for free.

If you need higher rate limits or enterprise access, I suggest you use an API key from Google AI Studio. You can easily set it as an environment variable:

export GEMINI_API_KEY="YOUR_API_KEY"

After selecting your sign-in method, a browser window will open. Simply log in with your Google account.

Step 4. Start chatting with Gemini

Just type “gemini” in your terminal window to start chatting with Gemini and enter your prompt.

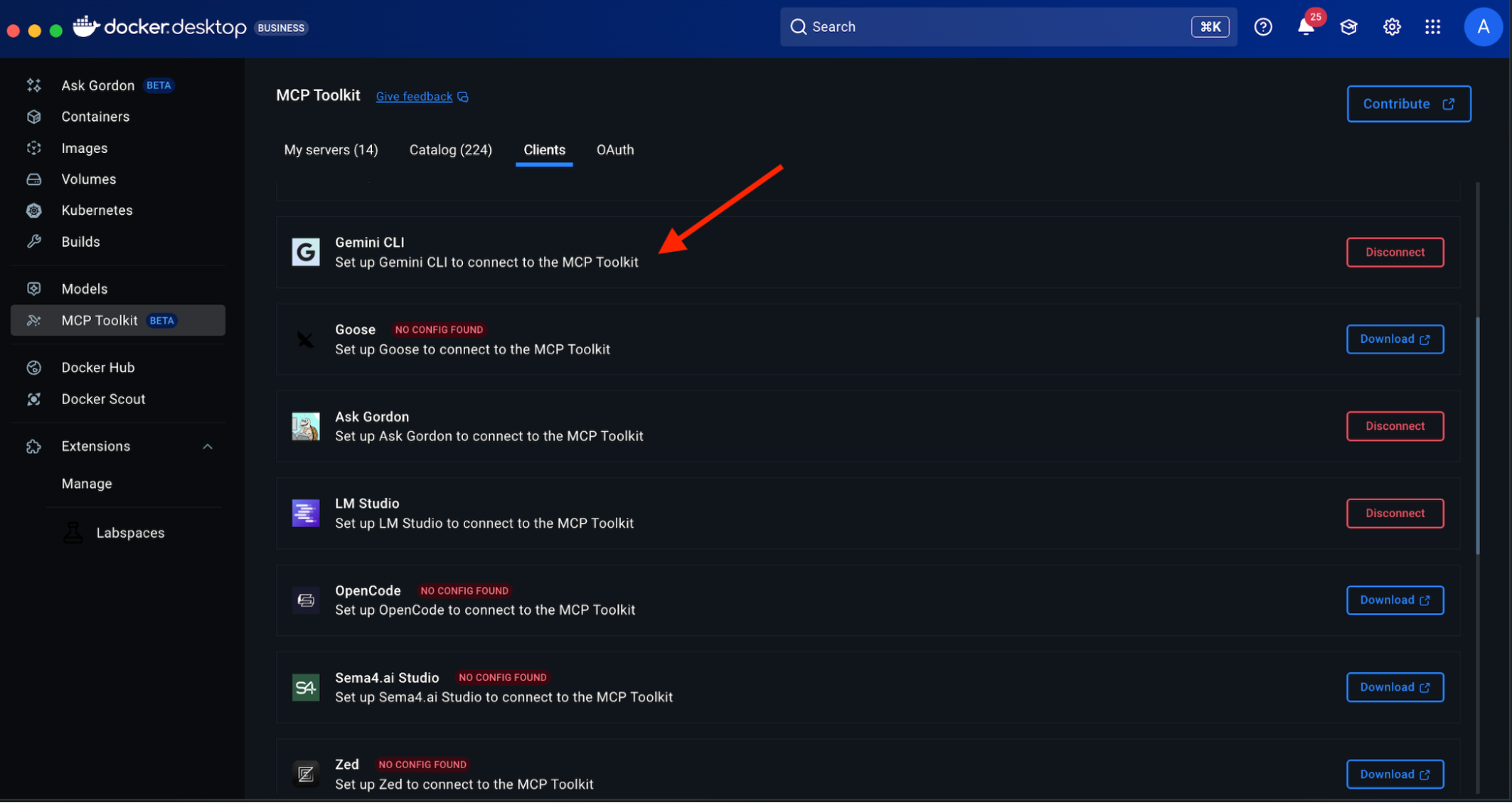

Connect Gemini CLI to Docker MCP Toolkit

Option 1: One-Click Connection (Recommended)

- Open Docker Desktop

- Navigate to MCP Toolkit in the sidebar

- Click the Clients tab

- Find “Gemini” in the list.

- Click Connect

Docker Desktop automatically configures the MCP Gateway connection – the underlying infrastructure that routes requests between Gemini CLI and your MCP servers, handling authentication, containerisation, and secure communication seamlessly.

Option 2: Manual Command Line Setup

If you prefer a command-line setup or need to configure a specific project:

- Navigate to your project folder in the terminal

- Run this command:

docker mcp client connect gemini --global

You’ll see output like this:

=== System-wide MCP Configurations ===

● gemini: connected

MCP_DOCKER: Docker MCP Catalog (gateway server) (stdio)

● gordon: connected

MCP_DOCKER: Docker MCP Catalog (gateway server) (stdio)

You might have to restart 'gemini'.

The connected status confirms Gemini CLI is linked to the Docker MCP Gateway.

What’s happening under the hood?

The Gemini CLI uses the mcpServers configuration in your settings.json file to locate and connect to MCP servers. This configuration supports multiple servers with different transport mechanisms. The mcpServers object is where you define each MCP server you want the CLI to connect to.

Whenever you hit the “Connect” button under the Docker MCP Client for Gemini CLI, it adds the following Docker MCP Gateway configuration to the ~/.gemini/settings.json file.

{
  "theme": "Default",
  "selectedAuthType": "oauth-personal",
  "mcpServers": {
    "MCP_DOCKER": {
      "command": "docker",
      "args": ["mcp", "gateway", "run"],
      "env": {}
    }
  }
}
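One quick way to confirm that the gateway entry shown above is present in the settings file is sketched below. The helper name has_docker_gateway is hypothetical. It uses jq when available and falls back to a plain text search otherwise, which is a looser check.

```shell
# Hypothetical helper: succeed (exit 0) when the given settings file
# contains an MCP_DOCKER server entry.
has_docker_gateway() {
  settings_file=$1
  [ -f "$settings_file" ] || return 1
  if command -v jq >/dev/null 2>&1; then
    # Strict check: the entry exists and launches the docker command.
    jq -e '.mcpServers.MCP_DOCKER.command == "docker"' "$settings_file" >/dev/null
  else
    # Loose fallback: the key appears somewhere in the file.
    grep -q '"MCP_DOCKER"' "$settings_file"
  fi
}

# Usage:
#   has_docker_gateway "$HOME/.gemini/settings.json" && echo "Gateway configured"
```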

Learn more about MCP and Gemini CLI interaction through this link.

Step 5. Restart Gemini CLI

# Exit Gemini CLI if running, then restart

gemini

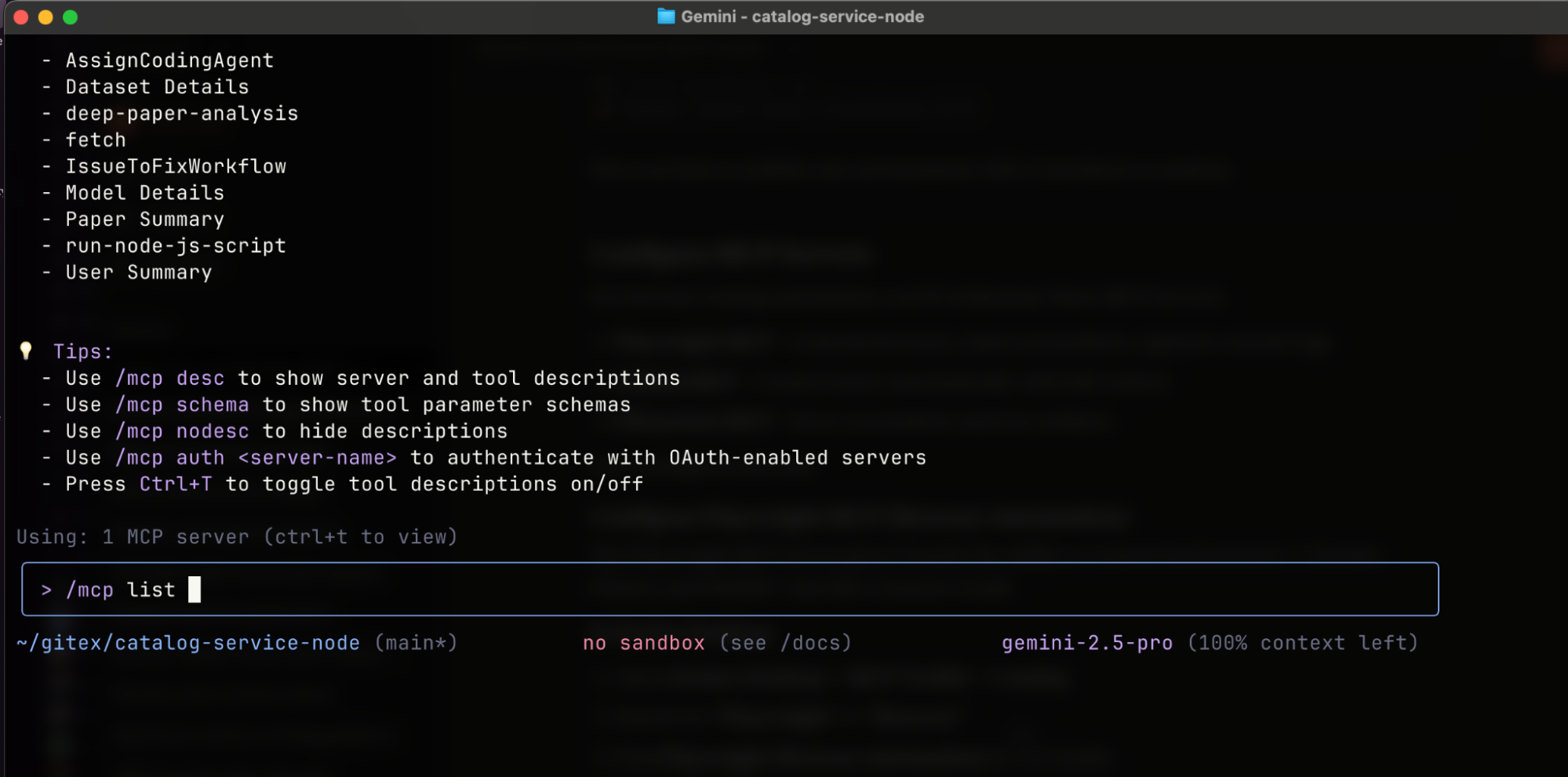

Step 6. Verify the Connection

Inside Gemini CLI, type /mcp to see available MCP servers.

You should see the Docker MCP Gateway listed, which provides access to all enabled MCP servers. The /MCP_DOCKER tools indicate a successful connection. As you enable more MCP servers in Docker Desktop, they’ll appear here automatically.

First Run: What to Expect

When you start Gemini CLI for the first time after connecting to Docker MCP Toolkit, you’ll see a prompt about the new MCP server:

Choose Option 1 (recommended). This configures your project to automatically use Docker MCP Toolkit and any MCP servers you enable in Docker Desktop. You won’t need to approve MCP servers individually each time.

You’re now ready to use Gemini with MCP servers from Docker Desktop.

Real-World Demo: Automated Browser Testing and Performance Analysis

Now that you’ve connected Gemini CLI to Docker MCP Toolkit, let’s see it in action with a practical example. We’ll automatically discover real bugs through browser testing and identify performance bottlenecks through detailed analysis — the kind that would take 20 minutes of manual testing, DevTools monitoring, and performance profiling.

What Makes This Realistic?

This isn’t a trivial “Hello World” demo. We’re performing comprehensive browser testing and performance analysis on a real e-commerce application with the kinds of issues you encounter in production:

- Uses an actual application running on localhost

- Executes functional browser tests (navigation, element inspection, console monitoring)

- Discovers genuine performance bottlenecks through browser DevTools analysis

- Identifies accessibility violations that affect real users

- Captures evidence with screenshots and console logs

- Measures real performance metrics: page load times, network requests, resource usage

- Creates properly formatted GitHub issues with actionable recommendations

Time investment:

- Manual process: ~20 minutes (opening browsers, clicking through flows, DevTools analysis, performance profiling, documentation, issue creation)

- Automated with Gemini CLI + MCP: ~30 seconds total

That’s a 97% time reduction, but more importantly, it’s consistent, thorough, and documented every time.

What We’re Testing

The catalog-service-node application is a realistic e-commerce catalog with intentional issues that mirror common production problems:

Performance Issues:

- No pagination – loads all 15 products at once (will degrade with scale)

- Duplicate API calls – requests /api/products twice unnecessarily

- Missing optimization – unoptimized loading patterns

Accessibility Issues:

- Missing product images – placeholder buttons instead of actual images

- Vague button labels – “Fetch” and “Upload” aren’t descriptive for screen readers

- Missing ARIA labels – table structure not properly announced

Browser Issues:

- Missing favicon – generates 404 errors in console

- Console warnings – duplicate request warnings

Let’s see if Gemini CLI can discover all of these automatically through intelligent browser testing and performance analysis, then create a comprehensive GitHub issue.

Step 1: Set Up a Real-World e-Commerce Catalog application

For this demo, we’ll use a real e-commerce catalog application. This gives us realistic performance and accessibility issues to discover.

Clone the repository:

git clone https://github.com/ajeetraina/catalog-service-node

cd catalog-service-node

Start all services:

# Start Docker services (database, S3, Kafka)

docker compose up -d

# Install dependencies

npm install --omit=optional

# Start the application

npm run dev

Verify it’s running:

- Frontend: http://localhost:5173

- API: http://localhost:3000
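If you would rather verify this from the terminal, a small polling helper can do it. This is a sketch under a few assumptions: the helper name wait_for_url is hypothetical, curl is installed, and the API is probed at the /api/products path that the seed script in the next step also uses.

```shell
# Hypothetical helper: poll a URL until it answers with a successful
# HTTP status, or give up.
#   $1 = URL
#   $2 = maximum attempts (default 10)
#   $3 = seconds to wait between attempts (default 1)
wait_for_url() {
  url=$1
  attempts=${2:-10}
  delay=${3:-1}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors as failures, -s: silent,
    # -o /dev/null: discard the body, --max-time: cap each try at 2 s
    if curl -fs -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage, once the services and `npm run dev` are running:
#   wait_for_url http://localhost:5173 && echo "Frontend is up"
#   wait_for_url http://localhost:3000/api/products && echo "API is up"
```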

Step 2: Seed Test Data

To make testing realistic, create sample products:

# Create seed script
cat > seed-data.sh << 'EOF'
#!/bin/bash
API_URL="http://localhost:3000/api"

echo "Seeding test products..."

curl -s -X POST "$API_URL/products" \
  -H "Content-Type: application/json" \
  -d '{"name":"Vintage Camera","description":"Classic 35mm film camera","price":299.99,"upc":"CAM001"}' \
  > /dev/null && echo "✅ Vintage Camera"

curl -s -X POST "$API_URL/products" \
  -H "Content-Type: application/json" \
  -d '{"name":"Rare Vinyl Record - LAST ONE!","description":"Limited edition. Only 1 left!","price":149.99,"upc":"VINYL001"}' \
  > /dev/null && echo "✅ Rare Vinyl Record"

curl -s -X POST "$API_URL/products" \
  -H "Content-Type: application/json" \
  -d '{"name":"Professional DSLR Camera","description":"50MP camera with 8K video","price":2499.99,"upc":"CAMPRO001"}' \
  > /dev/null && echo "✅ Professional DSLR"

# Add bulk test products
for i in {4..15}; do
  curl -s -X POST "$API_URL/products" \
    -H "Content-Type: application/json" \
    -d "{\"name\":\"Test Product $i\",\"description\":\"Bulk test product $i\",\"price\":$((50 + RANDOM % 450)).99,\"upc\":\"BULK$(printf '%03d' $i)\"}" \
    > /dev/null && echo "✅ Test Product $i"
done

echo ""
TOTAL=$(curl -s "$API_URL/products" | jq '. | length')
echo "Total products: $TOTAL"
echo "Ready! Visit http://localhost:5173"
EOF

chmod +x seed-data.sh
./seed-data.sh

Expected output:

Seeding test products...

✅ Vintage Camera

✅ Rare Vinyl Record

✅ Professional DSLR

✅ Test Product 4

✅ Test Product 5

...

✅ Test Product 15

Total products: 15

Ready! Visit http://localhost:5173

Now you have a realistic environment with 15 products to analyze.

Configure MCP Servers

For browser testing and performance analysis automation, you’ll orchestrate three MCP servers:

- Playwright MCP – Controls browsers, takes screenshots, captures console logs

- GitHub MCP – Creates issues automatically with full context

- Filesystem MCP – Saves screenshots and test artifacts

Let’s configure each one.

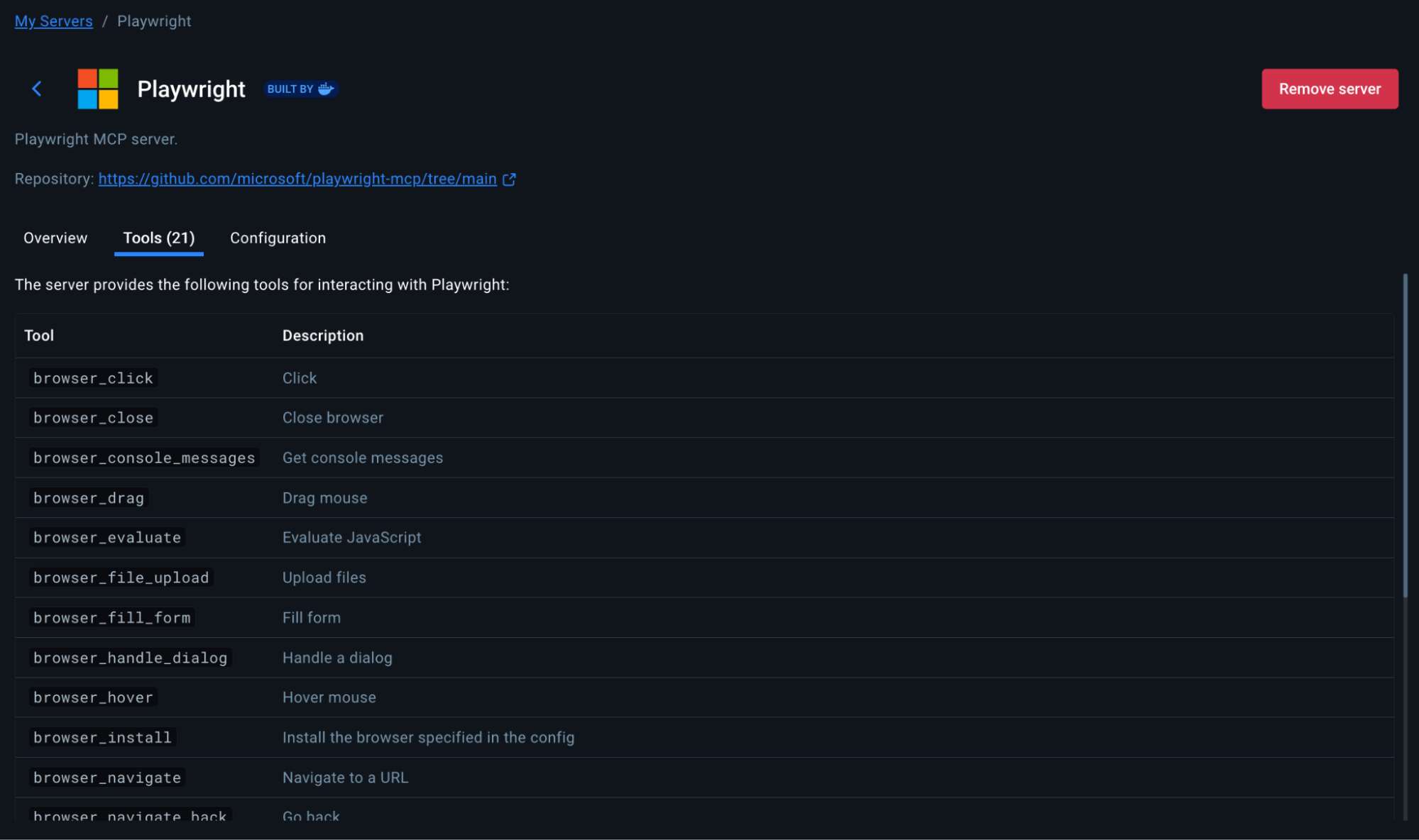

Configure Playwright MCP (Browser Automation)

The Playwright MCP server gives Gemini the ability to control real browsers, Chrome, Firefox, and WebKit, just like a human would.

In Docker Desktop:

- Open Docker Desktop → MCP Toolkit → Catalog

- Search for “Playwright” or “Browser”

- Find Playwright (Browser Automation) in the results

- Click + Add

- The server will be added with default configuration (no additional setup needed)

- Click Start Server

What you get:

- 21+ browser automation tools including:

  - browser_navigate – Navigate to URLs

  - browser_snapshot – Capture page state for analysis

  - browser_take_screenshot – Save visual evidence

  - browser_click, browser_type – Interact with elements

  - browser_console_messages – Get console errors

  - browser_network_requests – Analyze HTTP requests

The Playwright MCP runs in a secure Docker container with browsers pre-installed. No manual ChromeDriver setup, no WebDriver conflicts, no OS-specific browser installations.

Configure GitHub MCP (Issue Creation)

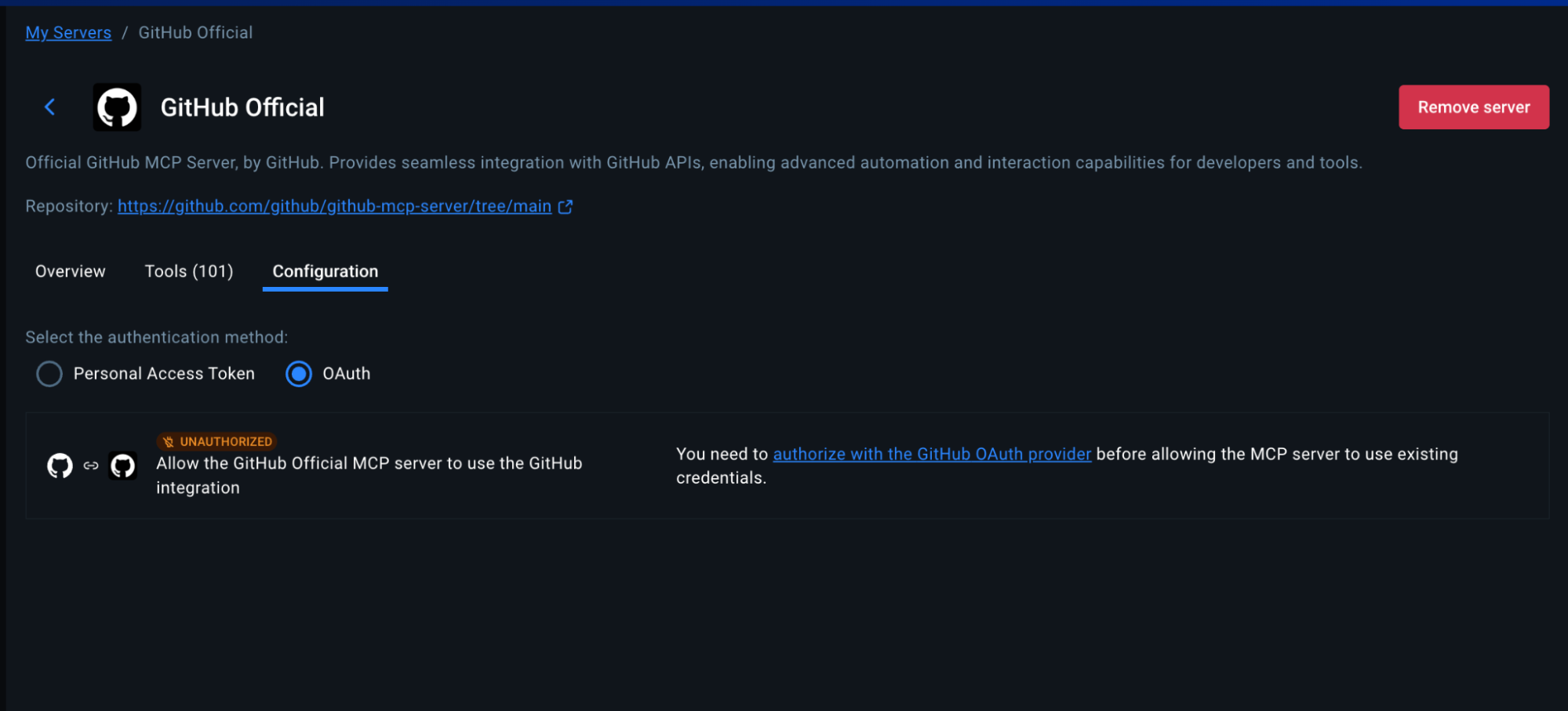

The GitHub MCP enables Gemini to create issues, PRs, and manage repositories on your behalf.

Option 1: OAuth Authentication (Recommended – Easiest)

- In MCP Toolkit → Catalog, search “GitHub Official”

- Click + Add

- Go to the OAuth tab in Docker Desktop

- Find the GitHub entry

- Click “Authorize”

- Your browser opens GitHub’s authorization page

- Click “Authorize Docker” on GitHub

- You’re redirected back to Docker Desktop

- Return to Catalog tab, find GitHub Official

- Click Start Server

Advantage: No manual token creation. Authorization happens through GitHub’s secure OAuth flow with automatic token refresh.

Option 2: Personal Access Token (For Granular Control)

If you prefer manual control or need specific scopes:

Step 1: Create GitHub Personal Access Token

- Go to https://github.com and sign in

- Click your profile picture → Settings

- Scroll to “Developer settings” in the left sidebar

- Click “Personal access tokens” → “Tokens (classic)”

- Click “Generate new token” → “Generate new token (classic)”

- Name it: “Docker MCP Browser Testing”

- Select scopes:

  - repo (Full control of repositories)

  - workflow (Update GitHub Actions workflows)

- Click “Generate token”

- Copy the token immediately (you won’t see it again!)

Step 2: Configure in Docker Desktop

- In MCP Toolkit → Catalog, find GitHub Official

- Click + Add (if not already added)

- Go to Configuration tab

- Select “Personal Access Token” as the authentication method

- Paste your token

- Click Start Server

Or via CLI:

docker mcp secret set GITHUB.PERSONAL_ACCESS_TOKEN=github_pat_YOUR_TOKEN_HERE

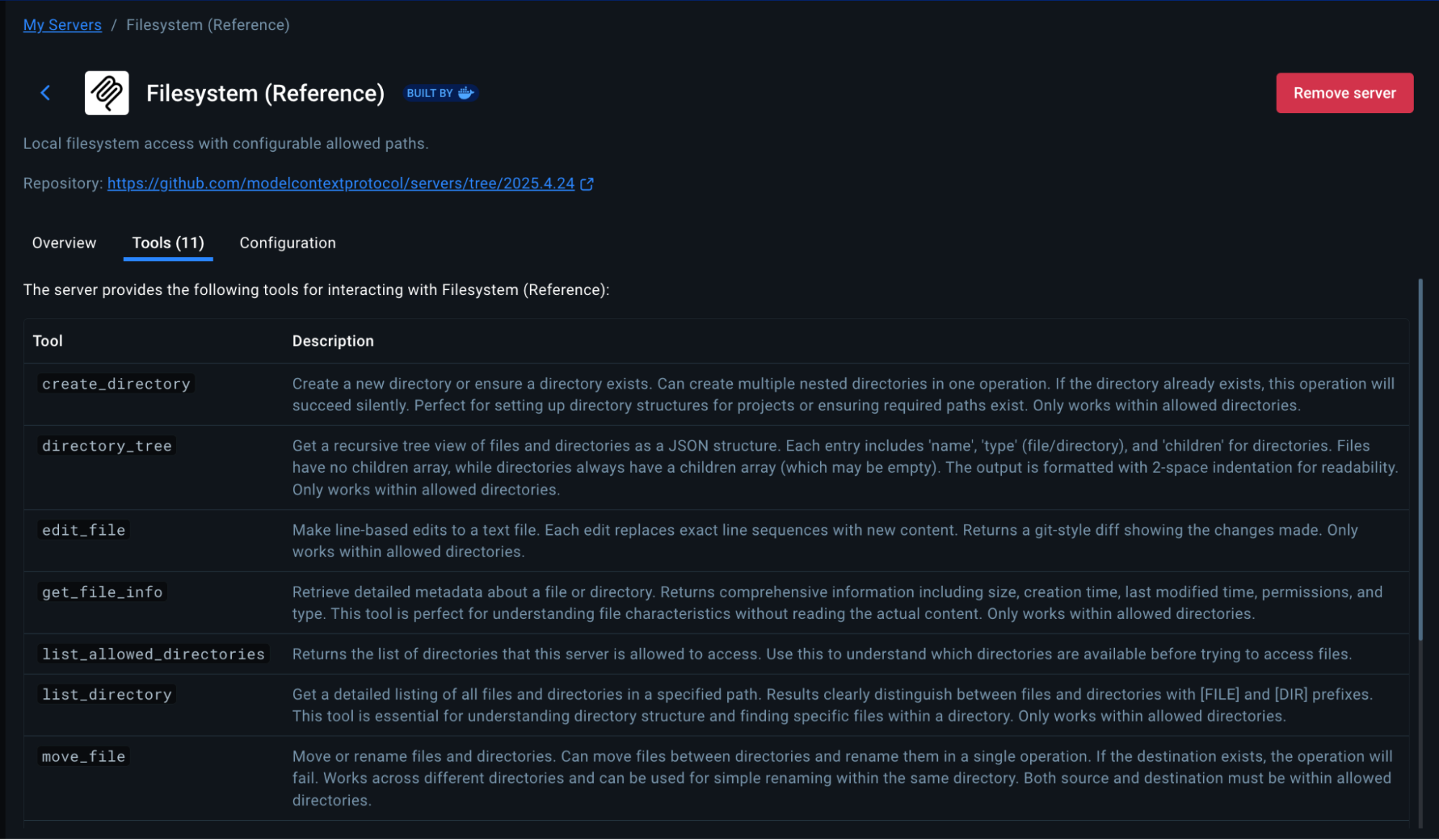

Configure Filesystem MCP (Screenshot Storage)

The Filesystem MCP allows Gemini to save screenshots and test artifacts to your local machine.

In Docker Desktop:

- Go to MCP Toolkit → Catalog

- Search for “Filesystem”

- Find Filesystem (Reference) and click + Add

- Go to the Configuration tab

- Under filesystem.paths, add your project directory:

- Example: /Users/yourname/catalog-service-node

- Or wherever you cloned the repository

- You can add multiple paths by clicking the + button

- Click Save

- Click Start Server

Important Security Note: Only grant access to directories you’re comfortable with Gemini reading and writing to. The Filesystem MCP is scoped to these specific paths for your protection.

Run the Automation

Change directory to the root of the catalog-service-node project repository and open Gemini CLI:

gemini

Paste this prompt:

Navigate to http://host.docker.internal:5173 and perform a detailed

performance and accessibility analysis:

1. Take a screenshot of the full page

2. Count how many products are displayed

3. Open browser DevTools and check:

- Console for any JavaScript errors

- Network tab: how many HTTP requests are made?

- Performance: how long does the page take to load?

4. Identify performance issues:

- Are all products loading at once with no pagination?

- Are images optimized?

- Any unnecessary network requests?

5. Check for accessibility issues:

- Missing alt text on images

- Color contrast problems

- Vague button labels

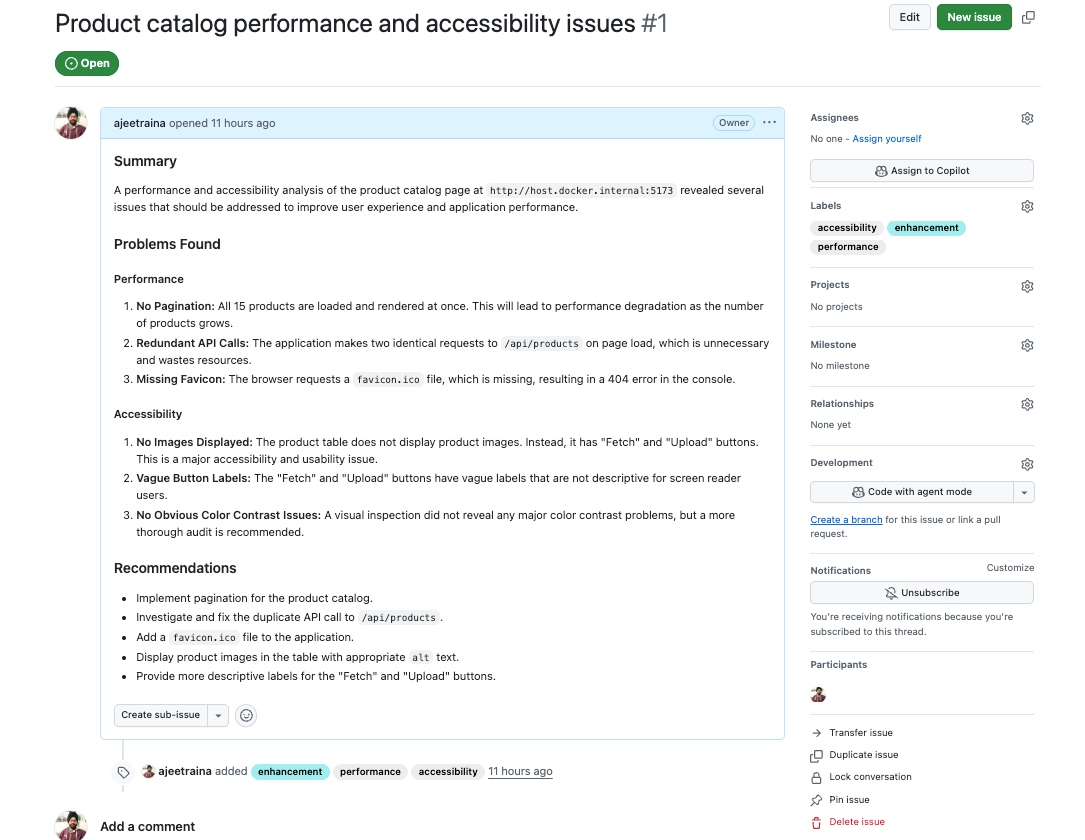

Create a GitHub issue titled "Product catalog performance and

accessibility issues" with:

- Screenshots attached

- List of specific problems found

- Severity: Medium

- Labels: performance, accessibility, enhancement

Watch Gemini Work

Here’s what happens behind the scenes as Gemini orchestrates multiple MCP servers:

Step 1: Browser Navigation

The web server is running. I'll navigate to http://host.docker.internal:5173, take a screenshot, and then proceed with the rest of the performance analysis.

Gemini launches a real Chromium browser in the Docker container and navigates to your application.

Step 2: Console Analysis

✦ I can see 15 products listed on the page: * Vintage Camera * Rare Vinyl Record - LAST ONE! * Professional DSLR Camera * Test Product 4-15

Step 3: Performance Check

✦ Checking browser console and network requests... Opening DevTools to analyze performance metrics...

Step 4: Issue Creation

✦ Creating GitHub issue with findings...

create_issue (MCP_DOCKER MCP Server) {"labels":["performance","accessibility","enhancement"],"body":"### Summary..."}

✦ I have successfully created the issue. You can find it at: https://github.com/ajeetraina/catalog-service-node/issues/1

The Complete GitHub Issue

Gemini automatically created this comprehensive issue with structured information:

Workflow Comparison: Before vs After MCP

| Step | Before MCP (Manual Testing) | Time | After MCP (Gemini CLI + MCP) | Time |
|---|---|---|---|---|
| 1 | Open browser manually | 1 min | Paste prompt in Gemini CLI | 5 sec |
| 2 | Navigate to application | 30 sec | Gemini analyzes automatically | 25 sec |
| 3 | Click through pages | 3 min | GitHub issue created | auto |
| 4 | Open DevTools manually | 30 sec | Screenshots attached | auto |
| 5 | Take screenshots | 2 min | ✓ Complete | – |
| 6 | Check browser console | 1 min | – | – |
| 7 | Analyze network requests | 2 min | – | – |
| 8 | Document findings | 3 min | – | – |
| 9 | Write detailed bug report | 5 min | – | – |
| 10 | Create GitHub issue | 2 min | – | – |
| Summary | Total | ~ 20 minutes per test | – | 30 sec per test |

Time saved per test: 19.5 minutes (97% faster!)

Impact over time:

- Per day (5 tests): 97 minutes saved → 1.6 hours

- Per week (25 tests): 8 hours saved → 1 full workday

- Per sprint (50 tests): 16 hours saved → 2 full workdays

- Per year (1,000 tests): 325 hours saved → 40 workdays
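The figures above follow from two inputs in the comparison: roughly 20 minutes per manual test and 30 seconds per automated one. The sketch below reproduces the arithmetic. The function names are hypothetical, and the workday conversion assumes an 8-hour day, so 325 hours comes to about 40.6 workdays, which the list rounds to 40.

```shell
# Inputs taken from the comparison above, in minutes.
MANUAL_MIN=20
AUTOMATED_MIN=0.5

# Minutes saved for a given number of test runs.
saved_minutes() {
  LC_ALL=C awk -v n="$1" -v m="$MANUAL_MIN" -v a="$AUTOMATED_MIN" \
    'BEGIN { printf "%.1f\n", n * (m - a) }'
}

# Hours saved for a given number of test runs.
saved_hours() {
  LC_ALL=C awk -v n="$1" -v m="$MANUAL_MIN" -v a="$AUTOMATED_MIN" \
    'BEGIN { printf "%.2f\n", n * (m - a) / 60 }'
}

# Percentage of time saved on a single test.
percent_saved() {
  LC_ALL=C awk -v m="$MANUAL_MIN" -v a="$AUTOMATED_MIN" \
    'BEGIN { printf "%.1f\n", (m - a) / m * 100 }'
}

saved_minutes 5     # per day: prints 97.5
saved_hours 50      # per sprint: prints 16.25
saved_hours 1000    # per year: prints 325.00
percent_saved       # per test: prints 97.5
```

Changing MANUAL_MIN or AUTOMATED_MIN lets you rerun the estimate with timings measured on your own project.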

Wrapping Up

You’ve just witnessed how Docker MCP Toolkit transforms Gemini CLI from a chat assistant into a complete browser testing and performance analysis platform. What used to require opening browsers, clicking through flows, analyzing DevTools, documenting bugs, and creating issues manually now happens in one 30-second conversation.

The combination of Gemini CLI and Docker MCP Toolkit represents a paradigm shift in AI-assisted development. By leveraging terminal-native tools and containerized services, you get:

- Unmatched flexibility in tool selection

- Superior performance with minimal overhead

- Future-proof architecture that scales with your needs

This setup isn’t just about convenience — it’s about building a development environment that adapts to your workflow rather than forcing you to adapt to it. The developer productivity revolution is here. The question isn’t whether you’ll adopt AI-assisted development — it’s whether you’ll lead with the best tools available or play catch-up later.

Ready to try it? Enable Docker MCP Toolkit in Docker Desktop and start building your own Gemini-powered development workflow today.

Learn more

- Explore the MCP Catalog: Discover containerized, security-hardened MCP servers

- Open Docker Desktop and get started with the MCP Toolkit (Requires version 4.48 or newer to launch the MCP Toolkit automatically)

- Explore our guide on adding MCP Servers to Claude Code with the Docker MCP Toolkit

- Check out our MCP Horror Stories series to see common MCP security pitfalls and how you can avoid them.

Models are advancing quickly. GPT-5, Claude Sonnet, Gemini. Each release gives us more capabilities. But most real work isn’t solved by a single model.

Developers are realizing they need a system of agents: different types of agents working together to accomplish more complex tasks. For example, a researcher to find information, a writer to summarize, a planner to coordinate, and a reviewer to check accuracy.

The challenge is that today, building a multi-agent system is harder than it should be. Context doesn’t flow cleanly between agents. Tools require custom integration. Sharing with a teammate means sending instructions and hoping they can re-create your setup.

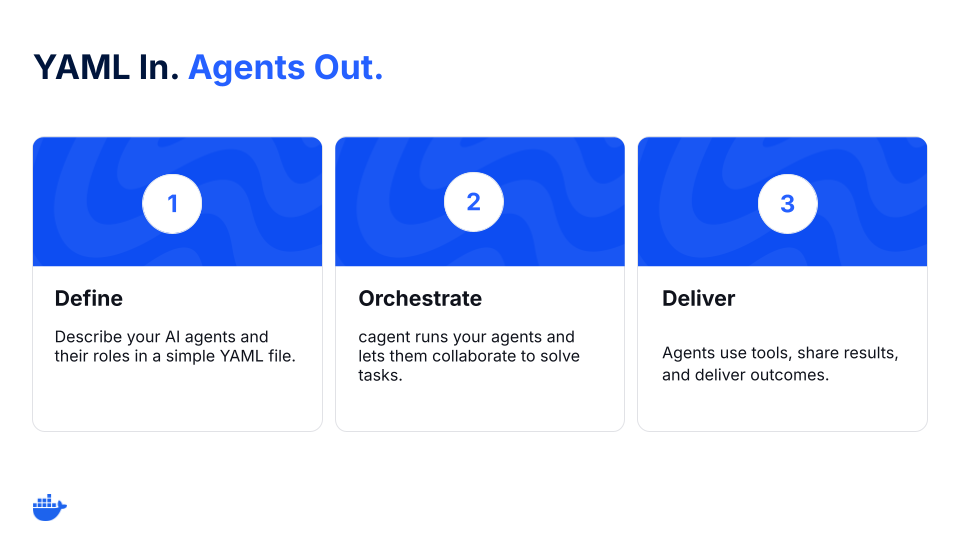

That’s the problem cagent solves.

In this blog, we’ll walk you through the basics, how to create a multi-agent AI system in minutes, and how cagent makes this possible.

What’s a multi-agent system?

A multi-agent system is a coordinated group of AI agents that collaborate to complete complex tasks. Using cagent, you can build and run these systems declaratively, no complex wiring or reconfiguration needed.

Meet cagent: The best (and open source) way to build multi-agent systems

Figure 1: cagent workflow for multi-agent orchestration.

cagent is an open-source tool for building agents and a part of Docker’s growing ecosystem of AI tools.

Instead of writing glue code to wire up models, tools, and workflows, describe an agent (or a team of agents) in a single YAML file:

- Which model the agent uses (OpenAI, Anthropic, Gemini, or a local one)

- What its role or instructions are

- Which tools it can use (like GitHub, search, or the filesystem)

- And, if needed, which sub-agents it delegates to

This turns agents into portable, reproducible artifacts you can run anywhere and share with anyone.

Multi-agent challenges that cagent is solving

Create, run, and share multi-agent AI systems more easily with cagent.

- Orchestrate agents (and sub-agents) more easily – Define roles and delegation (sub-agents). cagent manages calls and context.

- Let agents use tools with guardrails – Grant capabilities with MCP: search, GitHub, files, databases. Each agent gets only the tools you list and is auditable.

- Use (and swap) models – OpenAI, Anthropic, Gemini, or local models through Docker Model Runner. Swap providers without rewriting your system.

- Treat agents like artifacts – Package, version, and share agents like containers.

How to build a multi-agent system with Docker cagent

Here’s what that looks like in practice, using a definition saved as team.yaml.

Step 1: Define your multi-agent system

version: "2"

agents:
  root:
    model: anthropic/claude-sonnet-4-0
    instruction: |
      Break down a user request.
      Ask the researcher to gather facts, then pass them to the writer.
    sub_agents: ["researcher", "writer"]

  researcher:
    model: openai/gpt-5-mini
    description: Agent to research and gather information.
    instruction: Collect sources and return bullet points with links.
    toolsets:
      - type: mcp
        ref: docker:duckduckgo

  writer:
    model: dmr/ai/qwen3
    description: Agent to summarize notes.
    instruction: Write a concise, clear summary from the researcher’s notes.
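To make the structure of this definition easier to see, here is a small Python sketch that mirrors the same team as a plain dictionary and checks two things: that every name listed under sub_agents refers to an agent that is actually defined, and which provider prefix each model string carries. This is not part of cagent. The helper names are hypothetical, and reading the text before the first slash as the provider is an assumption based on the example above.

```python
# A plain-dict mirror of the team definition (instructions omitted for brevity).
TEAM = {
    "version": "2",
    "agents": {
        "root": {
            "model": "anthropic/claude-sonnet-4-0",
            "sub_agents": ["researcher", "writer"],
        },
        "researcher": {
            "model": "openai/gpt-5-mini",
            "toolsets": [{"type": "mcp", "ref": "docker:duckduckgo"}],
        },
        "writer": {"model": "dmr/ai/qwen3"},
    },
}


def undefined_sub_agents(config):
    """Return (agent, sub_agent) pairs where the sub-agent is not defined."""
    agents = config["agents"]
    missing = []
    for name, spec in agents.items():
        for sub in spec.get("sub_agents", []):
            if sub not in agents:
                missing.append((name, sub))
    return missing


def provider_of(model_ref):
    """Assumption: the text before the first '/' names the provider."""
    provider, _, _ = model_ref.partition("/")
    return provider


print(undefined_sub_agents(TEAM))
for name, spec in TEAM["agents"].items():
    print(name, "->", provider_of(spec["model"]))
```

A check like this is only a reading aid. The cagent runtime is what actually interprets the YAML file.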

Step 2: Run the YAML file

cagent run team.yaml

The coordinator delegates, the researcher gathers, and the writer drafts. You now have a functioning team of agents.
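The delegation order described here (root, then researcher, then writer) can be pictured with a toy Python sketch. It is a conceptual illustration only, not how cagent is implemented: the agents are stub functions standing in for model calls, and every name in it is hypothetical.

```python
from typing import Callable, Dict, List

# An "agent" here is just a function from text to text.
Agent = Callable[[str], str]


def make_team(trace: List[str]) -> Dict[str, Agent]:
    """Build stub sub-agents that record when they are called."""

    def researcher(request: str) -> str:
        trace.append("researcher")
        # A real researcher would call a search tool; this returns a placeholder.
        return f"- placeholder fact about {request}"

    def writer(notes: str) -> str:
        trace.append("writer")
        return f"Summary of {len(notes.splitlines())} note(s)."

    return {"researcher": researcher, "writer": writer}


def root(request: str, sub_agents: Dict[str, Agent], trace: List[str]) -> str:
    """Coordinator: gather notes first, then hand them to the writer."""
    trace.append("root")
    notes = sub_agents["researcher"](request)
    return sub_agents["writer"](notes)


trace: List[str] = []
answer = root("container security", make_team(trace), trace)
print(trace)
print(answer)
```

With cagent, this wiring comes from the sub_agents list in the YAML file rather than from hand-written code.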

Step 3: Share it on Docker Hub

cagent push ./team.yaml org/research-writer

Now, anyone on your team can run the exact same setup with:

cagent run docker.io/org/research-writer

That’s a full multi-agent workflow, built and shared in under 5 minutes.

First principles: Why cagent works

These principles keep cagent easy to use and customizable as a multi-agent runtime for orchestrating AI agents.

- Declarative > imperative. Multi-agent systems are mostly wiring: roles, tools, and topology. YAML keeps that wiring declarative, making it easy to define, read, and review.

- Agents as artifacts. Agents become portable artifacts you can pull, pin, and trust.

- Small surface area. A thin runtime that does one job well: coordinate agents.

What developers are building with cagent

Developers are already exploring different multi-agent use cases with cagent. Here are some examples:

1. PR and issue triaging

- Collector reads PRs/issues, labels, failing checks

- Writer drafts comments or changelogs

- Coordinator enforces rules, routes edge cases

2. Research summarizing

- Researcher finds and cites sources

- Writer produces a clean summary

- Reviewer checks for hallucinations and tone

3. Knowledge routing

- Router classifies requests

- KB agent queries internal docs

- Redactor strips PII before escalation

Each one starts the same way: a YAML file and an idea. And they can be pushed to a registry and run by anyone.

Get started

cagent gives you a fast path to building multi-agent systems. It’s open source, easy to use, and built for the way developers already work. Define your agents, run them locally, and share them, all in a few lines of YAML.

YAML in, agents out.

Run the following to get started:

brew install cagent

cagent new

cagent run agent.yaml

Learn more

- Get the technical details from our cagent documentation.

- We’d love to hear what you think. Join us in the Docker Community Slack.

- Dive into more topics about AI and Docker.

- Subscribe to the Docker Navigator Newsletter.

From a Limited Beta to a Universe of Possibilities

When we first announced Docker Model Runner, it was in its beta phase, exclusively available on Docker Desktop and limited to Apple and Nvidia hardware. We received a ton of valuable feedback, and we’ve been hard at work making it more accessible and powerful.

Today, we’re proud to say that Docker Model Runner is now Generally Available (GA) and can be used in all versions of Docker! But that’s not all. We’ve added Vulkan support, which means you can now run your models on virtually any GPU. This is a huge leap forward, and it’s all thanks to the incredible potential we see in this project and the community that surrounds it.

Making Contributions a Breeze

We’ve listened to your feedback about the contribution process, and we’ve made some significant changes to make it as smooth as possible.

To start, we’ve consolidated all the repositories into a single, unified home. This makes it much easier to find everything you need in one place.

We have also invested a lot of effort in updating our documentation for contributors. Whether you’re a seasoned open-source veteran or a first-time contributor, you’ll find the information you need to get started.

Your Mission, Should You Choose to Accept It

The success of Docker Model Runner depends on you, our amazing community. We’re calling on you to help us make this project the best it can be. Here’s how you can get involved:

- Star our repository: Show your support and help us gain visibility by starring the Docker Model Runner repo.

- Fork and Contribute: Have an idea for a new feature or a bug fix? Fork the repository, make your changes, and submit a pull request. We’re excited to see what you come up with!

- Spread the word: Tell your friends, colleagues, and anyone else who might be interested in running AI models with Docker.

We’re incredibly excited about this new chapter for Docker Model Runner, and we can’t wait to see what we can build together. Let’s get to work!

Learn more

- Check out the Docker Model Runner General Availability announcement

- Visit our Model Runner GitHub repo! Docker Model Runner is open-source, and we welcome collaboration and contributions from the community!

- Get started with Docker Model Runner with a simple hello GenAI application

In this post, we’ll show how DGX Spark and Docker Model Runner work together to make local model development faster and simpler, covering the unboxing experience, how to set up Model Runner, and how to use it in real-world developer workflows.

What is NVIDIA DGX Spark

NVIDIA DGX Spark is the newest member of the DGX family: a compact, workstation-class AI system powered by the Grace Blackwell GB10 Superchip, which delivers incredible performance for local model development. Designed for researchers and developers, it makes prototyping, fine-tuning, and serving large models fast and effortless, all without relying on the cloud.

Here at Docker, we were fortunate to get a preproduction version of DGX Spark. And yes, it’s every bit as impressive in person as it looks in NVIDIA’s launch materials.

Why Run Local AI Models and How Docker Model Runner and NVIDIA DGX Spark Make It Easy

Many of us at Docker and across the broader developer community are experimenting with local AI models. Running locally has clear advantages:

- Data privacy and control: no external API calls; everything stays on your machine

- Offline availability: work from anywhere, even when you’re disconnected

- Ease of customization: experiment with prompts, adapters, or fine-tuned variants without relying on remote infrastructure

But there are also familiar tradeoffs:

- Local GPUs and memory can be limiting for large models

- Setting up CUDA, runtimes, and dependencies often eats time

- Managing security and isolation for AI workloads can be complex

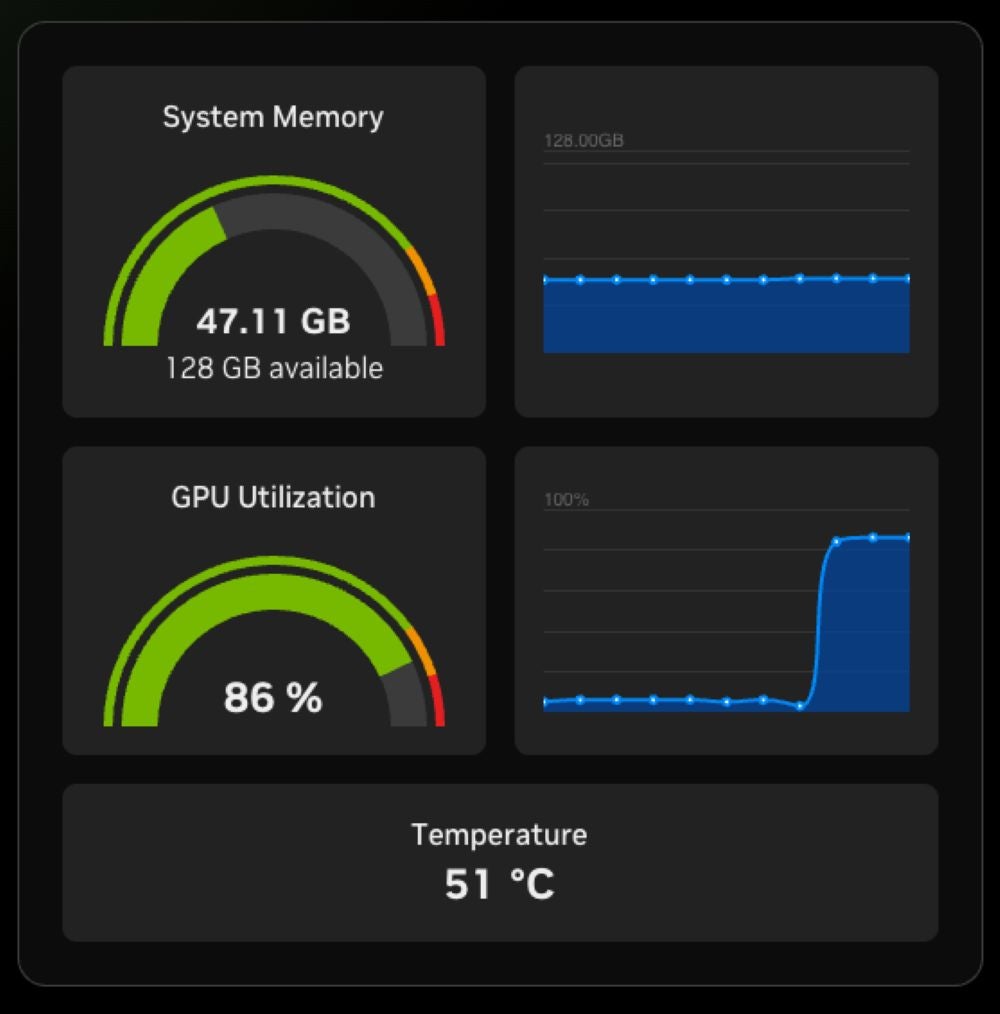

This is where DGX Spark and Docker Model Runner (DMR) shine. DMR provides an easy and secure way to run AI models in a sandboxed environment, fully integrated with Docker Desktop or Docker Engine. When combined with the DGX Spark’s NVIDIA AI software stack and large 128GB unified memory, you get the best of both worlds: plug-and-play GPU acceleration and Docker-level simplicity.

Unboxing NVIDIA DGX Spark

The device arrived well-packaged, sleek, and surprisingly small, resembling a mini-workstation more than a server.

Setup was refreshingly straightforward: plug in power, network, and peripherals, then boot into NVIDIA DGX OS, which comes with NVIDIA drivers, CUDA, and the AI software stack preinstalled.

Once on the network, enabling SSH access makes it easy to integrate the Spark into your existing workflow.

This way, the DGX Spark becomes an AI co-processor for your everyday development environment, augmenting, not replacing, your primary machine.

Getting Started with Docker Model Runner on NVIDIA DGX Spark

Installing Docker Model Runner on the DGX Spark is simple and can be done in a matter of minutes.

1. Verify Docker CE is Installed

DGX OS comes with Docker Engine (CE) preinstalled. Confirm you have it:

docker version

If it’s missing or outdated, install it by following the regular Ubuntu installation instructions.

2. Install the Docker Model CLI Plugin

The Model Runner CLI is distributed as a Debian package via Docker’s apt repository. Once the repository is configured (see the linked instructions above), install it with the following commands:

sudo apt-get update

sudo apt-get install docker-model-plugin

Or use Docker’s handy installation script:

curl -fsSL https://get.docker.com | sudo bash

You can confirm it’s installed with:

docker model version

3. Pull and Run a Model

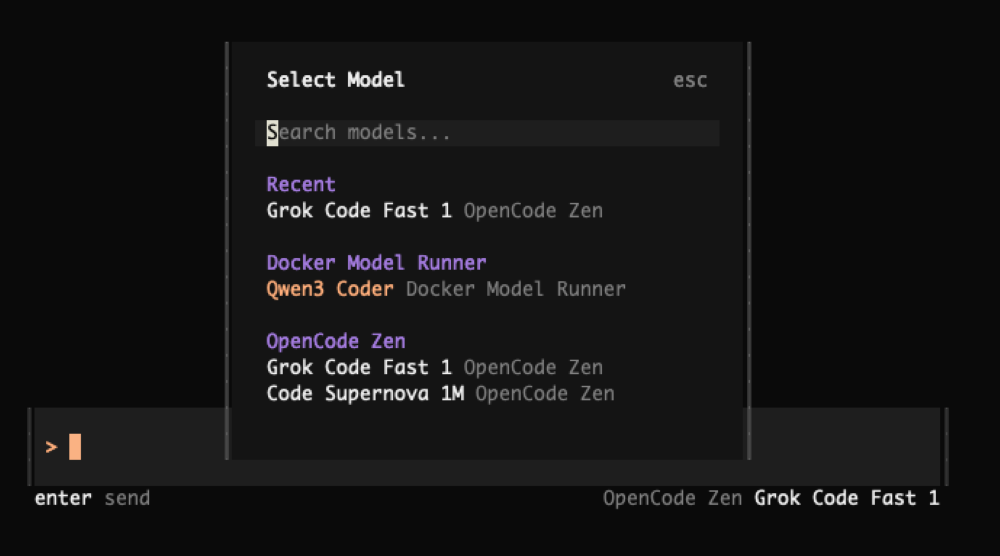

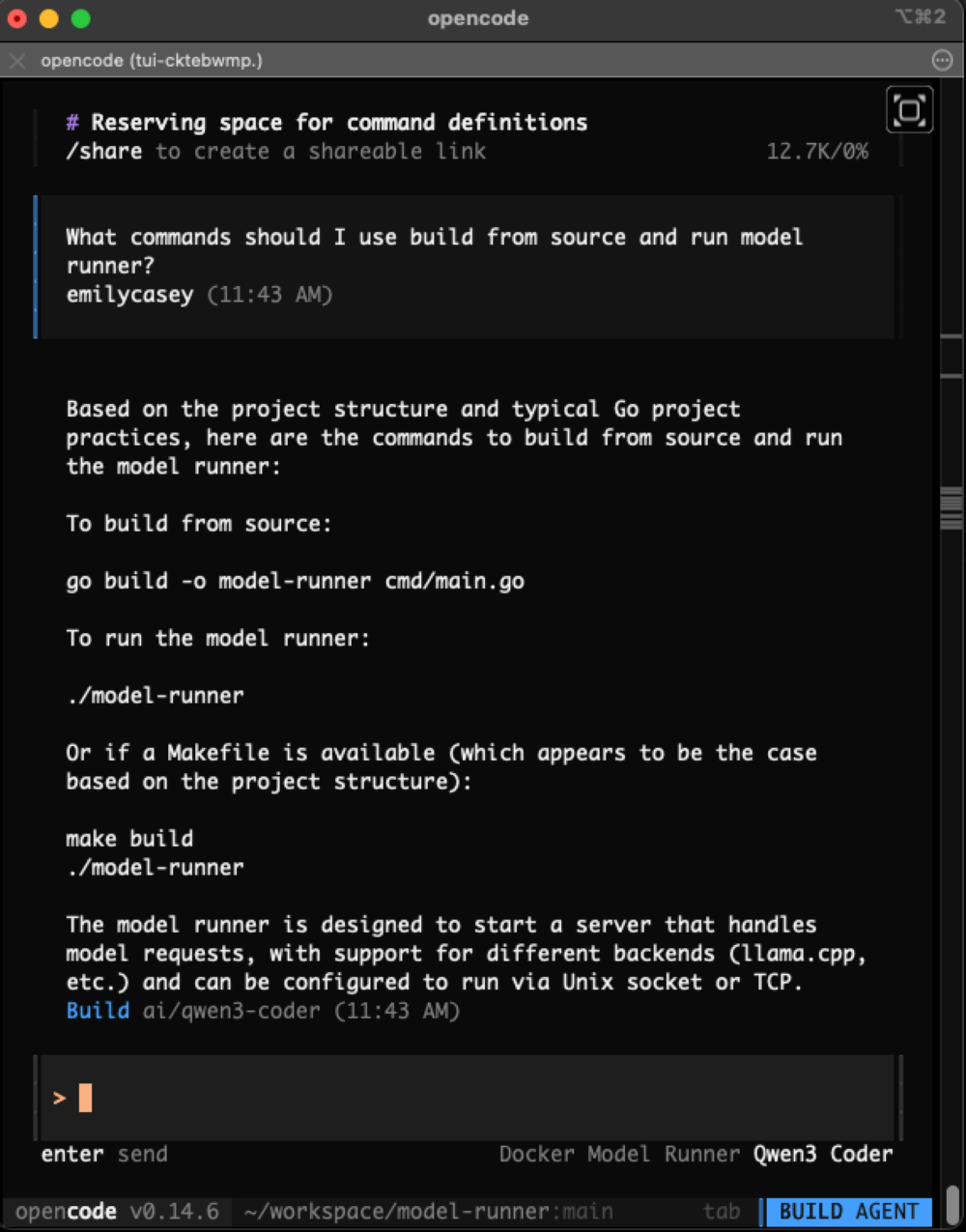

Now that the plugin is installed, let’s pull a model from the Docker Hub AI Catalog. For example, the Qwen 3 Coder model:

docker model pull ai/qwen3-coder

The Model Runner container will automatically expose an OpenAI-compatible endpoint at:

http://localhost:12434/engines/v1

You can verify it’s live with a quick test:

# Test via API

curl http://localhost:12434/engines/v1/chat/completions \
  -H 'Content-Type: application/json' \
  -d '{"model":"ai/qwen3-coder","messages":[{"role":"user","content":"Hello!"}]}'

# Or via CLI

docker model run ai/qwen3-coder
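The same request can also be sent from application code. Below is a minimal Python sketch using only the standard library. It builds the same JSON body as the curl example and posts it to the endpoint shown above. The function names are illustrative, and the response parsing assumes the usual OpenAI chat completions shape (choices, then message, then content), which is what an OpenAI-compatible endpoint is expected to return.

```python
import json
import urllib.request

# Endpoint from the text above; adjust if your Model Runner listens elsewhere.
BASE_URL = "http://localhost:12434/engines/v1"


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request matching the curl example."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text.

    Assumes an OpenAI-style response body: choices[0].message.content.
    """
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the model pulled and Model Runner running, calling chat("ai/qwen3-coder", "Hello!") should return the model's reply as a string.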