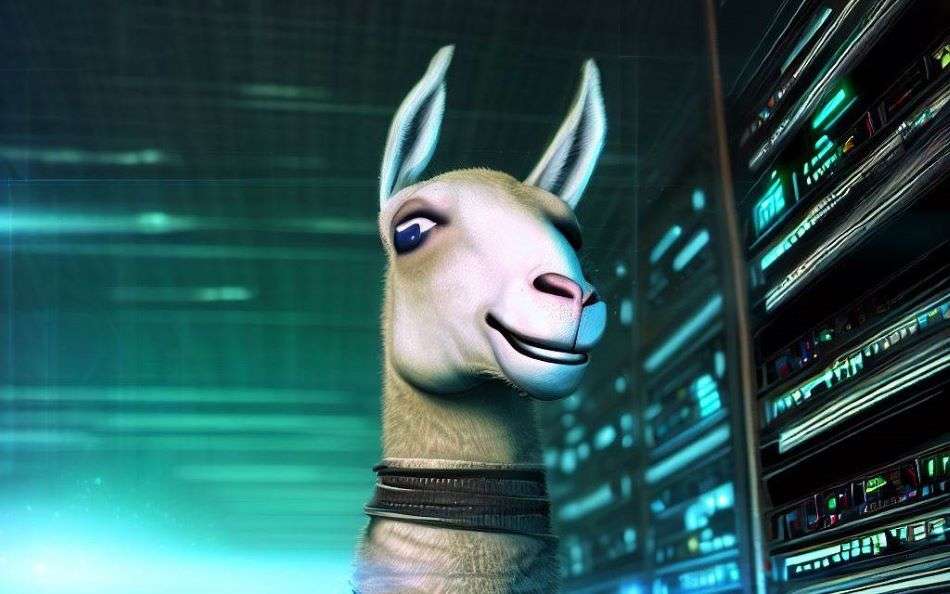

Open Source AI – Introduction to Meta AI Llama 2 and Its Unique Capabilities

The race between the tech giants is intensifying, and Meta has made a major move with Llama 2, a powerful, openly available AI model. This large language model aims to reshape the AI landscape by democratizing access to cutting-edge technology.

Meta AI has released Llama 2, a new openly available large language model (LLM) that substantially outperforms earlier models such as GPT-3. The pretrained and chat models, along with the accompanying research paper, highlight Meta’s commitment to sharing progress in artificial intelligence, even amid political pressure to restrict access.

Llama 2 builds directly on Meta’s previous LLaMA model with upgrades across the board: 40% more pretraining data, a context length doubled from 2,048 to 4,096 tokens, grouped-query attention in the larger models for more efficient inference, and extensive benchmarking. At a high level, this release solidifies Meta as a leader in open LLMs, advancing the entire field through its open research artifacts.
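Grouped-query attention lets several query heads share a single key/value head, which shrinks the key/value cache that must be kept in memory during generation. The toy sketch below, in pure Python, illustrates only the head-sharing idea; the function names and head counts are illustrative assumptions, not Llama 2’s actual implementation or configuration.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = sequence positions, columns = head dimension


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for one head; position i sees positions 0..i."""
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


def grouped_query_attention(qs: List[Matrix], ks: List[Matrix], vs: List[Matrix]) -> List[Matrix]:
    """qs holds n_q query heads; ks and vs hold n_kv shared key/value heads."""
    n_q, n_kv = len(qs), len(ks)
    if n_q % n_kv != 0:
        raise ValueError("number of query heads must be a multiple of key/value heads")
    group = n_q // n_kv
    # Query head h reuses key/value head h // group, so only n_kv heads need caching.
    return [causal_attention(qs[h], ks[h // group], vs[h // group]) for h in range(n_q)]
```

With as many key/value heads as query heads this reduces to standard multi-head attention, and with a single key/value head it becomes multi-query attention; grouped-query attention sits between the two.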

What Makes Meta AI Llama 2 Special

So what sets Llama 2 apart? Here are some of the key highlights:

- Strong base capabilities: At release, it outperformed other open models such as MPT and Falcon on benchmarks like MMLU, and the 70B model comes close to the closed GPT-3.5 on some of them. This provides a powerful starting point for downstream tasks.

- Multiple model sizes: Models are released at 7, 13, and 70 billion parameters, enabling cost-performance trade-offs.

- Specialized chat variants: Llama 2-Chat, fine-tuned from the base models for dialogue, performs competitively with closed models like ChatGPT in Meta’s human evaluations.

- Safety focus: Techniques such as safety-specific fine-tuning data, context distillation, and reinforcement learning from human feedback (RLHF) improve safety and mitigate potential harms.

- Reproducible methods: The detailed paper serves as a blueprint for large-scale LLM development, covering preference learning, control over the distribution of annotation data, and more.

- Democratized access: Releasing such a capable model openly makes AI more accessible to researchers worldwide and spares them the compute cost of pretraining a model from scratch.
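To make the cost-performance trade-off between model sizes concrete, a rough rule of thumb is that the weights alone need about (number of parameters) × (bytes per parameter) of memory. The sketch below assumes nominal parameter counts and ignores activations, the key/value cache, and framework overhead, so real requirements are higher.

```python
# Nominal parameter counts for the released Llama 2 sizes (rounded).
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in MODEL_SIZES.items():
        row = ", ".join(f"{p}: {weight_memory_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{name} -> {row}")
```

By this estimate the 70B model needs roughly 140 GB for its fp16 weights alone, usually spread across several GPUs, while the 7B model needs about 14 GB.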

Under the hood, Llama 2 reflects Meta’s expanded data, compute, and human resources dedicated to iterative training. But the paper emphasizes that sheer scale alone does not guarantee progress – continuous improvements in data quality, safety, and evaluation metrics were instrumental. Let’s explore some of these key factors.

High-Quality Data Collection and Curation

The Llama 2 training process shows that data quality and curation matter, not just quantity. Meta trained on 2 trillion tokens, about 40% more than the original LLaMA, and up-sampled the most factual sources. They also invested heavily in human preference annotations. Some data highlights:

- 40% more unlabeled pretraining data from publicly available sources, excluding sites known to contain large amounts of personal information

- Up-sampling of the most factual sources to increase knowledge and reduce hallucinations

- More than one million binary human preference comparisons between model outputs, focused on helpfulness and safety

- Preference data collected in weekly batches alongside model training, so the reward models stay matched to the latest model’s output distribution
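Meta has not published its exact data mixture, but up-sampling a source simply means drawing from it more often than its raw share of the corpus. A minimal sketch, with hypothetical source names, token counts, and up-sampling factors:

```python
import random
from collections import Counter
from typing import Dict, List


def sampling_weights(token_counts: Dict[str, int], upsample: Dict[str, float]) -> Dict[str, float]:
    """Turn raw token counts and per-source up-sampling factors into sampling probabilities."""
    raw = {src: n * upsample.get(src, 1.0) for src, n in token_counts.items()}
    total = sum(raw.values())
    return {src: w / total for src, w in raw.items()}


def sample_sources(weights: Dict[str, float], k: int, seed: int = 0) -> List[str]:
    """Draw k source names in proportion to their sampling probabilities."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=k)


if __name__ == "__main__":
    # Hypothetical corpus: 900 units of general web text, 100 units of reference text.
    counts = {"web": 900, "reference": 100}
    weights = sampling_weights(counts, upsample={"reference": 3.0})
    print(weights)  # reference share rises from 10% to 25%
    print(Counter(sample_sources(weights, k=10_000)))
```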

Having high-quality data proved critical. For supervised fine-tuning, Meta found that about 27,540 carefully collected annotations produced better results than millions of examples from lower-quality third-party datasets, and the same emphasis on tight distributional control and focused annotation goals guided the preference data used for reinforcement learning.

Separate Reward Modeling for Helpfulness and Safety

Llama 2 uses a two-pronged reward modeling approach, training separate reward models for helpfulness and safety. Each reward model is initialized from a pretrained Llama 2 chat checkpoint, with the next-token prediction head replaced by a regression head that outputs a single scalar score.
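The difference between a language-modeling head and a reward head is small in code: instead of projecting the final hidden state to one logit per vocabulary token, the reward head projects it to a single number. The sketch below uses plain Python lists in place of real tensors, and it assumes the score is read from the last token’s hidden state, a common convention that is an assumption here rather than a detail taken from the source.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


@dataclass
class LMHead:
    """Next-token head: one logit per vocabulary entry."""
    rows: List[Vector]  # shape [vocab_size][hidden_size]

    def __call__(self, hidden: Vector) -> Vector:
        return [dot(row, hidden) for row in self.rows]


@dataclass
class RewardHead:
    """Regression head: one scalar score for the whole (prompt, response) sequence."""
    weight: Vector  # shape [hidden_size]
    bias: float = 0.0

    def __call__(self, hidden_states: List[Vector]) -> float:
        # Assumption: score the sequence from the final token's hidden state.
        return dot(self.weight, hidden_states[-1]) + self.bias


if __name__ == "__main__":
    states = [[0.2, 0.4], [1.0, -0.5]]  # hypothetical hidden states for a 2-token sequence
    print(LMHead([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])(states[-1]))  # one logit per token
    print(RewardHead([2.0, 1.0], bias=0.1)(states))  # a single score
```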

Meta’s preference annotations, which rate helpfulness and safety separately, were invaluable for training these models. Keeping the two objectives in separate reward models eases the tension between them, so optimizing for one is less likely to sacrifice the other during reinforcement learning.
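During reinforcement learning, the paper describes choosing, for each sample, which of the two reward scores to use: the safety score when the prompt is tagged as safety-relevant or when the safety score falls below 0.15, and the helpfulness score otherwise. The chosen score is then passed through a logit and penalized by the KL divergence from the initial policy. The sketch below follows that description but omits the paper’s whitening step; the function names, the single-sample KL estimate, and the default `beta` are illustrative choices.

```python
import math
from typing import Sequence

SAFETY_THRESHOLD = 0.15  # threshold described in the Llama 2 paper


def select_reward(helpful: float, safe: float, is_safety_prompt: bool) -> float:
    """Use the safety score for safety-relevant prompts or clearly unsafe responses."""
    if is_safety_prompt or safe < SAFETY_THRESHOLD:
        return safe
    return helpful


def logit(p: float, eps: float = 1e-6) -> float:
    """Inverse of the sigmoid, clamped to avoid infinities at 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def ppo_reward(
    score: float,
    policy_logprobs: Sequence[float],
    ref_logprobs: Sequence[float],
    beta: float = 0.01,
) -> float:
    """logit(score) minus beta times a sampled estimate of KL(policy || reference).

    The KL estimate is the summed log-probability gap on the tokens actually generated.
    """
    kl_estimate = sum(lp - lr for lp, lr in zip(policy_logprobs, ref_logprobs))
    return logit(score) - beta * kl_estimate
```

The KL term keeps the policy from drifting too far from the starting model just to exploit weaknesses in the reward models.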

Adding a margin term to the loss, sized according to how strongly annotators preferred one response over the other, further improved reward model accuracy, which reached roughly 85 to 95% on pairs with a strong preference. These gains underscore the importance of reward modeling: the paper treats reward model accuracy as one of the most important proxies for the final chat model’s quality.
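The margin-augmented ranking loss has the form −log σ(r(x, y_c) − r(x, y_r) − m(r)), where y_c is the preferred response, y_r the rejected one, and m(r) a margin that grows with the annotator’s preference rating. Below is a minimal Python sketch; the margin values are illustrative, since the paper experiments with more than one margin scale.

```python
import math

# Illustrative margins per annotator rating (larger for clearer preferences).
MARGINS = {
    "significantly_better": 1.0,
    "better": 2 / 3,
    "slightly_better": 1 / 3,
    "negligibly_better": 0.0,
}


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def ranking_loss(r_chosen: float, r_rejected: float, rating: str) -> float:
    """Binary ranking loss with a rating-dependent margin."""
    return -log_sigmoid(r_chosen - r_rejected - MARGINS[rating])
```

Because the margin is subtracted, a pair rated "significantly better" keeps contributing loss until the chosen response outscores the rejected one by a clear gap, while near-ties are pushed apart less aggressively.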

Reinforcement Learning and Fine-Tuning

Llama 2-Chat combines two reinforcement learning techniques, proximal policy optimization (PPO) and rejection sampling fine-tuning, to steer the models toward responses that the reward models score highly.
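Rejection sampling fine-tuning can be summarized as best-of-K selection: sample several responses per prompt, score them with the reward model, and fine-tune on the highest-scoring one. The sketch below shows only the selection step; `generate` and `reward` are hypothetical stand-ins for a sampling call to the model and a reward model call.

```python
from typing import Callable, List, Tuple

Generate = Callable[[str], str]       # hypothetical: samples one response for a prompt
Reward = Callable[[str, str], float]  # hypothetical: scores a (prompt, response) pair


def best_of_k(prompt: str, generate: Generate, reward: Reward, k: int = 4) -> Tuple[str, float]:
    """Sample k candidates and return the one the reward model scores highest."""
    candidates = [generate(prompt) for _ in range(k)]
    scored = [(reward(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


def rejection_sampling_dataset(
    prompts: List[str], generate: Generate, reward: Reward, k: int = 4
) -> List[Tuple[str, str]]:
    """(prompt, best response) pairs to use as new supervised fine-tuning targets."""
    return [(p, best_of_k(p, generate, reward, k)[0]) for p in prompts]
```

Unlike PPO, which draws one sample per prompt from the continually updated policy, this approach draws K samples from a fixed model and then runs ordinary supervised fine-tuning on the winners.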

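Iterating RLHF over several rounds provides a major boost: by the later rounds, Llama 2-Chat outperforms ChatGPT on both helpfulness and harmlessness according to Meta’s own reward models, though the margin is smaller when GPT-4 judges the outputs. RLHF also goes beyond supervised fine-tuning alone, likely because sampling produces more diverse outputs and lets the model explore beyond what human demonstrators write.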

Together, these advances showcase the synergy of humans and models facilitated by RL. With the right techniques, modest amounts of human feedback can profoundly enhance large models.

Extensive Safety Precautions

Llama 2 places a premium on safety, devoting a large share of the paper to related methods and evaluation. This covers bias and stereotyping, truthfulness, toxicity and other potential harms, and adversarial attacks.

Steps like safety-focused fine-tuning data, context distillation, and safety-oriented RLHF provide tangible improvements. For example, after RLHF the models show lower violation rates on a set of roughly 2,000 adversarial prompts used in human safety evaluations, and red teaming by more than 350 people probed them for weaknesses.
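Context distillation, as the Llama 2 paper describes it, prepends a safety preprompt to an adversarial prompt, generates a response, and then fine-tunes on that response paired with the original prompt alone, so the model internalizes the safer behavior without needing the preprompt. Below is a minimal sketch of the data-construction step; the preprompt wording and the `generate` and `safety_score` callables are hypothetical. The optional filter mirrors the paper’s targeted variant, which keeps a distilled response only when a safety reward model scores it higher.

```python
from typing import Callable, Dict, List, Optional

# Illustrative safety preprompt (hypothetical wording).
SAFETY_PREPROMPT = "You are a safe and responsible assistant."


def context_distillation_data(
    prompts: List[str],
    generate: Callable[[str], str],
    safety_score: Optional[Callable[[str, str], float]] = None,
    preprompt: str = SAFETY_PREPROMPT,
) -> List[Dict[str, str]]:
    examples = []
    for prompt in prompts:
        # 1. Generate WITH the safety preprompt in context.
        distilled = generate(f"{preprompt}\n{prompt}")
        # 2. Optional targeted filter: keep only if it scores safer than a plain response.
        if safety_score is not None:
            plain = generate(prompt)
            if safety_score(prompt, distilled) <= safety_score(prompt, plain):
                continue  # this sketch simply drops prompts where distillation did not help
        # 3. Train WITHOUT the preprompt: the target is paired with the bare prompt.
        examples.append({"prompt": prompt, "response": distilled})
    return examples
```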

There are still gaps, and more work is needed as models grow more capable. But the rigorous safety methodology and transparency set a higher standard for responsible LLM releases.

Impressive Capabilities with Room to Grow

So where does Llama 2 stand in terms of raw capabilities? In a word: impressive, with plenty of headroom left.

It convincingly outperforms other open models such as MPT and Falcon on academic benchmarks like MMLU. The conversational models approach or match ChatGPT in human evaluations, especially after reinforcement learning.

But Llama 2 does not yet match leading closed models: the paper itself reports a large gap between Llama 2 70B and GPT-4 or PaLM-2-L. This release seems more focused on pushing open research forward than on pure benchmark dominance. The strong base foundation provides fertile ground for future progress.

With the model openly available, researchers worldwide can now build on Llama 2 for a fraction of the original costs. Combining the model with techniques like chain-of-thought prompting, fine-tuning, and reinforcement learning will unlock even more capabilities.
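When building on the chat models, prompts should follow the format Llama 2-Chat was fine-tuned on. Meta’s reference code places a system prompt between `<<SYS>>` and `<</SYS>>` markers inside the first `[INST] ... [/INST]` block. The helper below is a sketch of that single-turn layout, assuming the tokenizer adds the beginning-of-sequence token separately; a chain-of-thought style instruction can simply go into the user message.

```python
# Marker strings matching the Llama 2-Chat prompt layout.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def llama2_chat_prompt(system: str, user: str) -> str:
    """Single-turn Llama 2-Chat prompt; the BOS token is assumed to be added by the tokenizer."""
    content = f"{B_SYS}{system.strip()}{E_SYS}{user.strip()}"
    return f"{B_INST} {content} {E_INST}"


if __name__ == "__main__":
    print(llama2_chat_prompt("Be concise.", "What is 17 * 3? Think step by step."))
```

The model’s reply is generated after `[/INST]`; multi-turn conversations repeat the `[INST] ... [/INST]` pattern for each user turn, with the system block only in the first.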

Democratizing Access to Cutting-Edge Open Source AI

Stepping back, Llama 2 represents a bold commitment to democratize access to large language models amidst pressures against openness. Meta could have kept a model this strong closed for competitive advantage. Instead, they continue sharing progress to advance the entire field.

Releasing such a capable model openly lets researchers worldwide build on Llama 2 at a fraction of the original cost, enabling more voices to participate in and share the benefits of artificial intelligence.

Of course, advanced LLMs like Llama 2 carry risks if used carelessly. But broad access also allows more eyes to assess challenges and develop solutions collaboratively. Overall, Llama 2 strikes a reasonable balance – spurring innovation through openness while prioritizing safety.

As models grow more capable, maintaining this open spirit will be crucial. Llama 2 sets an example of transparency and sharing to elevate AI for the common good.

Key Takeaways

To recap, Llama 2’s key contributions include:

- Pushing open-source LLMs to new heights through model scale and improved training.

- Showcasing the importance of high-quality data curation, not just quantity.

- Separating helpfulness and safety goals during reward modeling and reinforcement learning.

- Providing extensive safety benchmarking and mitigation techniques for responsible LLM releases.

- Continuing open research sharing amidst pressures for secrecy and proprietary models.

- Enabling worldwide access to a state-of-the-art foundation for researchers everywhere to build on.

The AI field still has far to go, but thoughtful open contributions like Llama 2 help ensure progress benefits more than just a select few companies. As LLMs grow more central to products and services, maintaining openness will only become more important.

1: What exactly is Llama 2? Llama 2 is Meta AI’s latest open-source large language model (LLM). It builds on their previous LLaMA model but with upgrades like more data, longer context lengths, and improved training techniques.

2: How good is Llama 2 compared to other LLMs? Llama 2 sets new benchmarks for open-source LLMs. It exceeds models like GPT-3 across a range of tests and approaches parity with closed models like ChatGPT for conversational tasks after fine-tuning.

3: What makes Llama 2 special? Key innovations include separate reward modeling for helpfulness and safety, iterative reinforcement learning for boosting performance, extensive safety protections, and impressive capabilities accessible to all researchers.

4: Is Llama 2 better than commercial models like ChatGPT? Llama 2-Chat is competitive with ChatGPT in Meta’s human evaluations of conversational ability, but it does not yet match proprietary models like GPT-4. However, its open availability unlocks huge potential for future improvements.

5: Why is Meta releasing such a strong model openly? Sharing advances openly allows more researchers worldwide to build on Llama 2 and reduce compute costs. This democratizes access to AI instead of concentrating progress within a few companies.